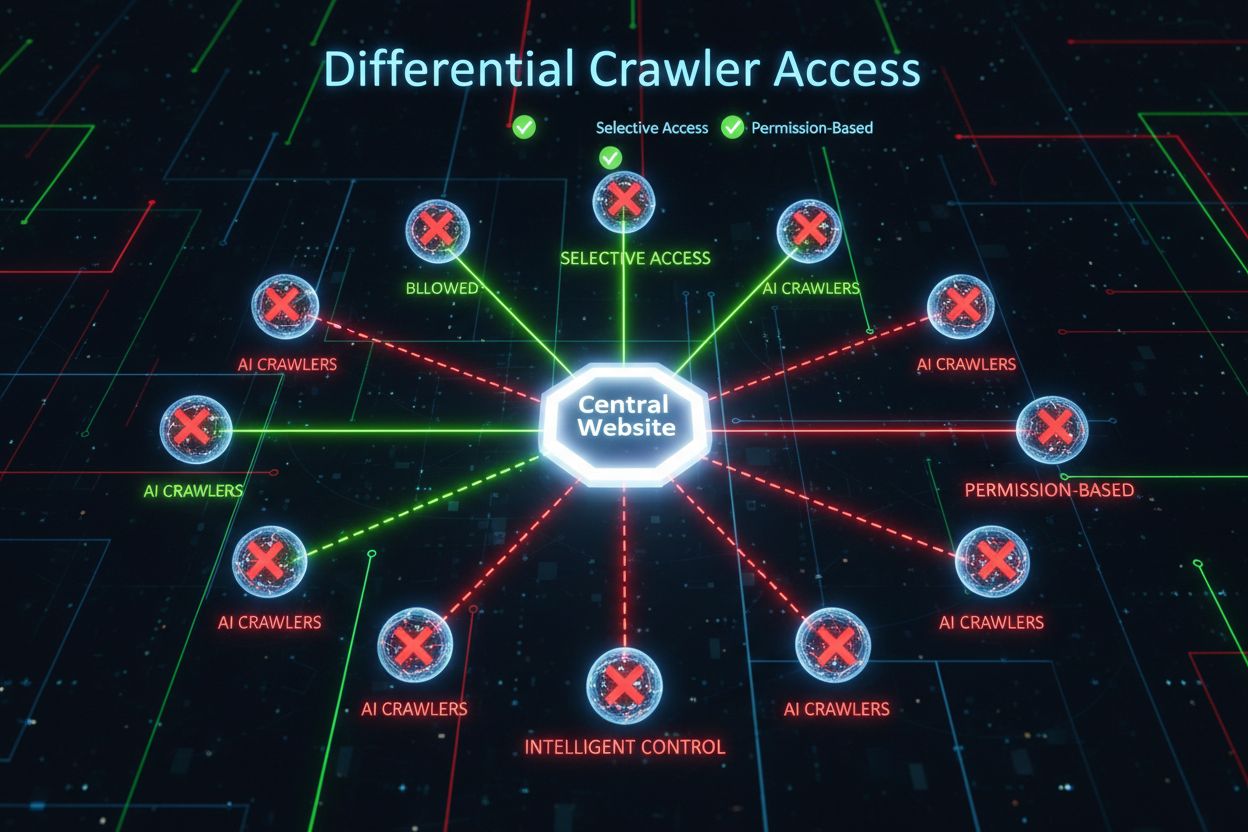

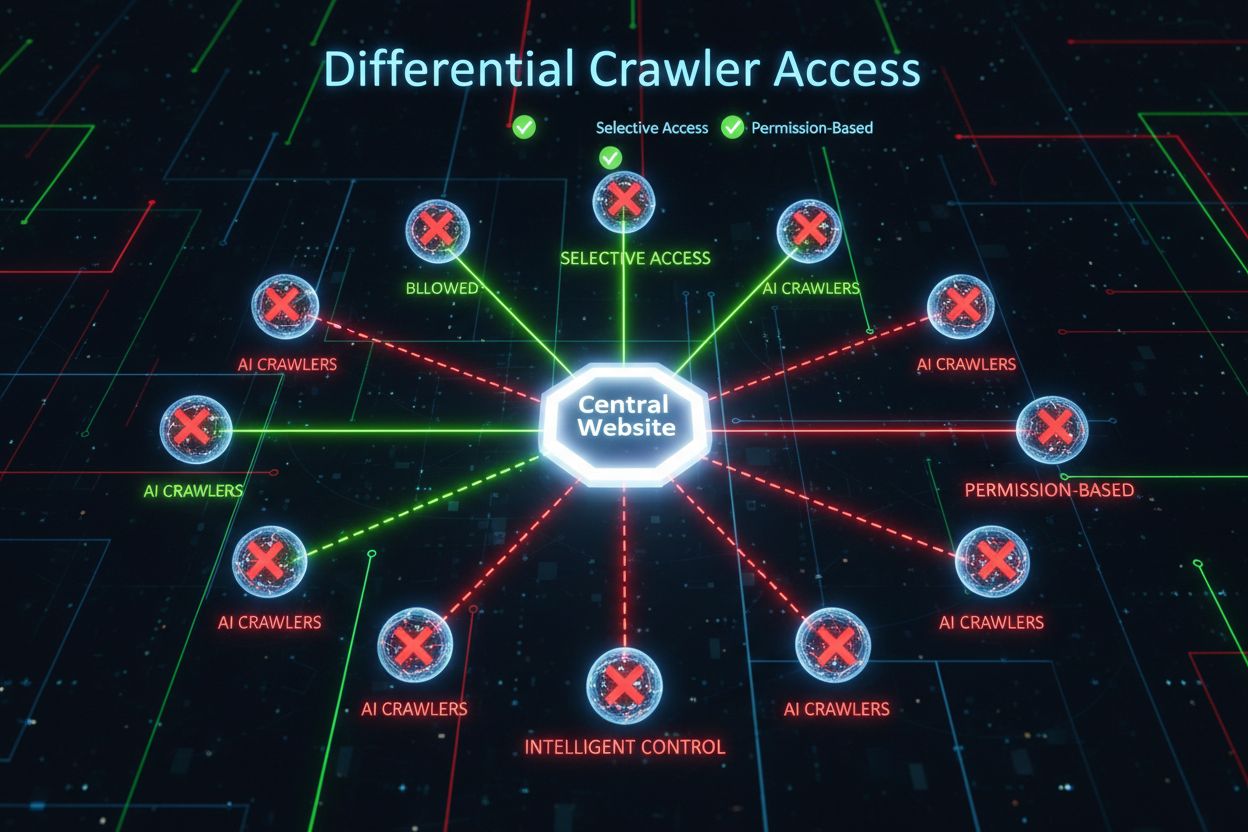


Learn how Web Application Firewalls provide advanced control over AI crawlers beyond robots.txt. Implement WAF rules to protect your content from unauthorized AI scraping and monitor AI citations with AmICited.

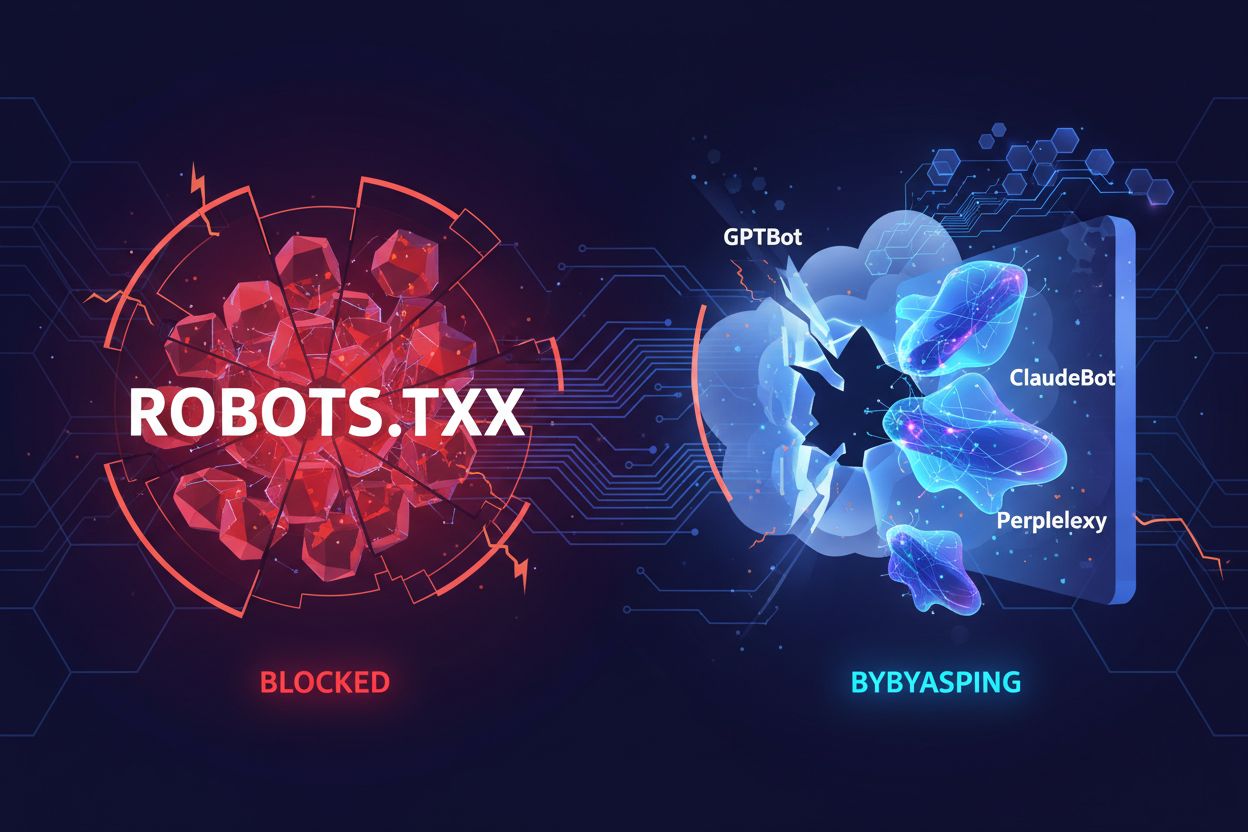

The inadequacy of robots.txt as a standalone defense mechanism has become increasingly apparent in the age of AI-driven content consumption. While traditional search engines generally respect robots.txt directives, modern AI crawlers operate with fundamentally different incentives and enforcement mechanisms, making simple text-based policies insufficient for content protection. According to Cloudflare’s analysis, training crawlers now account for nearly 80% of all AI bot traffic, consuming vast amounts of content while returning minimal referral traffic: OpenAI’s crawlers maintain a 400:1 crawl-to-referral ratio, while Anthropic’s reaches as high as 38,000:1. For publishers and content owners, this asymmetrical relationship represents a critical business threat, as AI models trained on their content can directly reduce organic traffic and diminish the value of their intellectual property.

A Web Application Firewall (WAF) operates as a reverse proxy positioned between users and web servers, inspecting every HTTP request in real time to filter undesired traffic based on configurable rules. Unlike robots.txt, which relies on voluntary crawler compliance, WAFs enforce protection at the infrastructure level, making them significantly more effective for controlling AI crawler access. The following comparison illustrates how WAFs differ from traditional security approaches:

| Feature | Robots.txt | Traditional Firewall | Modern WAF |
|---|---|---|---|
| Enforcement Level | Advisory/Voluntary | IP-based blocking | Application-aware inspection |
| AI Crawler Detection | User-agent matching only | Limited bot recognition | Behavioral analysis + fingerprinting |
| Real-time Adaptation | Static file | Manual updates required | Continuous threat intelligence |
| Granular Control | Path-level only | Broad IP ranges | Request-level policies |
| Machine Learning | None | None | Advanced bot classification |

WAFs provide granular bot classification using device fingerprinting, behavioral analysis, and machine learning to profile bots by intent and sophistication, enabling far more nuanced control than simple allow/deny rules.

AI crawlers fall into three distinct categories, each presenting different threats and requiring different mitigation strategies. Training crawlers like GPTBot, ClaudeBot, and Google-Extended systematically collect web content to build datasets for large language model development, accounting for approximately 80% of all AI crawler traffic and returning zero referral value to publishers. Search and citation crawlers such as OAI-SearchBot and PerplexityBot index content for AI-powered search experiences and may provide some referral traffic through citations, though at significantly lower volumes than traditional search engines. User-triggered fetchers activate only when users specifically request content through AI assistants, operating at minimal volume with one-off requests rather than systematic crawling patterns.
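The three categories can be expressed as a small lookup. The following is a minimal sketch, not a complete detection method: the token list contains only crawlers named in this article, the `CrawlerCategory` enum and `classify_user_agent` function are hypothetical names, and matching on the user-agent string alone can be defeated by spoofing. Google-Extended is left out because it is a robots.txt control token and does not appear in HTTP request headers.

```python
from enum import Enum


class CrawlerCategory(Enum):
    TRAINING = "training"
    SEARCH = "search"
    USER_TRIGGERED = "user-triggered"
    UNKNOWN = "unknown"


# Illustrative mapping built from the crawlers named in this article;
# it is not an exhaustive or authoritative list.
CRAWLER_TOKENS = {
    "GPTBot": CrawlerCategory.TRAINING,
    "ClaudeBot": CrawlerCategory.TRAINING,
    "CCBot": CrawlerCategory.TRAINING,
    "Bytespider": CrawlerCategory.TRAINING,
    "OAI-SearchBot": CrawlerCategory.SEARCH,
    "PerplexityBot": CrawlerCategory.SEARCH,
    "ChatGPT-User": CrawlerCategory.USER_TRIGGERED,
}


def classify_user_agent(user_agent: str) -> CrawlerCategory:
    """Return the category of the first known token found in the header."""
    ua = user_agent.lower()
    for token, category in CRAWLER_TOKENS.items():
        if token.lower() in ua:
            return category
    return CrawlerCategory.UNKNOWN
```

A caller might use the result to pick a policy per category, for example blocking `TRAINING` while allowing `SEARCH`.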

Modern WAFs employ sophisticated technical detection methods that go far beyond simple user-agent string matching to identify and classify AI crawlers with high accuracy. These systems utilize behavioral analysis to examine request patterns, including crawl velocity, request sequencing, and response handling characteristics that distinguish bots from human users. Device fingerprinting techniques analyze HTTP headers, TLS signatures, and browser characteristics to identify spoofed user agents attempting to bypass traditional defenses. Machine learning models trained on millions of requests can detect emerging crawler signatures and novel bot tactics in real time, adapting to new threats without requiring manual rule updates. Additionally, WAFs can verify crawler legitimacy by cross-referencing request IP addresses against published IP ranges maintained by major AI companies—OpenAI publishes verified IPs at https://openai.com/gptbot.json, while Amazon provides theirs at https://developer.amazon.com/amazonbot/ip-addresses/—ensuring that only authenticated crawlers from legitimate sources are allowed through.
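The IP cross-referencing step can be sketched with Python's standard `ipaddress` module. This sketch assumes the published JSON has already been downloaded and parsed into a dictionary, and that it contains a `prefixes` list whose entries carry an `ipv4Prefix` or `ipv6Prefix` key; that schema is an assumption and should be checked against the actual file. The sample ranges are reserved documentation addresses, not real crawler ranges, and `load_prefixes` and `is_verified_ip` are hypothetical names.

```python
import ipaddress


def load_prefixes(published: dict) -> list:
    """Convert a parsed IP-range document into network objects.

    Assumes a {"prefixes": [{"ipv4Prefix": "..."}, ...]} layout.
    """
    networks = []
    for entry in published.get("prefixes", []):
        cidr = entry.get("ipv4Prefix") or entry.get("ipv6Prefix")
        if cidr:
            networks.append(ipaddress.ip_network(cidr))
    return networks


def is_verified_ip(client_ip: str, networks: list) -> bool:
    """True if the client address falls inside any published range."""
    addr = ipaddress.ip_address(client_ip)
    return any(addr in net for net in networks)


# Placeholder data using documentation-only ranges (RFC 5737 / RFC 3849).
SAMPLE_PUBLISHED = {
    "prefixes": [
        {"ipv4Prefix": "192.0.2.0/24"},
        {"ipv6Prefix": "2001:db8::/32"},
    ]
}
SAMPLE_NETWORKS = load_prefixes(SAMPLE_PUBLISHED)
```

With the sample data, `is_verified_ip("192.0.2.10", SAMPLE_NETWORKS)` is true, while an address outside the listed ranges is rejected even if its user-agent claims to be a known crawler.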

Implementing effective WAF rules for AI crawlers requires a multi-layered approach combining user-agent blocking, IP verification, and behavioral policies. The following code example demonstrates a basic WAF rule configuration that blocks known training crawlers while allowing legitimate search functionality:

```
# Vendor-neutral pseudocode; adapt the syntax to your WAF's rule language.

# WAF Rule: Block AI Training Crawlers
# Note: Google-Extended is a robots.txt control token and is not sent as an
# HTTP User-Agent, so it only takes effect in robots.txt.
Rule Name: Block-AI-Training-Crawlers
Condition 1: HTTP User-Agent matches (GPTBot|ClaudeBot|anthropic-ai|Google-Extended|Meta-ExternalAgent|Amazonbot|CCBot|Bytespider)
Action: Block (return 403 Forbidden)

# WAF Rule: Allow Verified Search Crawlers
Rule Name: Allow-Verified-Search-Crawlers
Condition 1: HTTP User-Agent matches (OAI-SearchBot|PerplexityBot)
Condition 2: Source IP in verified IP range
Action: Allow

# WAF Rule: Rate Limit Suspicious Bot Traffic
Rule Name: Rate-Limit-Suspicious-Bots
Condition 1: Request rate exceeds 100 requests/minute
Condition 2: User-Agent contains bot indicators
Condition 3: No verified IP match
Action: Challenge (CAPTCHA) or Block
```

Organizations should implement rule precedence carefully, ensuring that more specific rules (such as IP verification for legitimate crawlers) execute before broader blocking rules. Regular testing and monitoring of rule effectiveness is essential, as crawler user-agent strings and IP ranges evolve frequently. Many WAF providers offer pre-built rule sets specifically designed for AI crawler management, reducing implementation complexity while maintaining comprehensive protection.
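Rule precedence can be illustrated with a short first-match evaluator. This is a sketch of the ordering idea only, not any vendor's rule engine: the `Request` fields, the `decide` function, and the `bot|crawler|spider` indicator pattern are assumptions made for illustration, while the user-agent patterns and the 100 requests/minute threshold are taken from the rules in this article.

```python
import re
from dataclasses import dataclass

TRAINING_UA = re.compile(
    r"GPTBot|ClaudeBot|anthropic-ai|Google-Extended|Meta-ExternalAgent"
    r"|Amazonbot|CCBot|Bytespider",
    re.IGNORECASE,
)
SEARCH_UA = re.compile(r"OAI-SearchBot|PerplexityBot", re.IGNORECASE)
BOT_HINT = re.compile(r"bot|crawler|spider", re.IGNORECASE)  # assumed indicators
RATE_LIMIT_PER_MINUTE = 100


@dataclass
class Request:
    user_agent: str
    ip_verified: bool          # result of an IP-range check done elsewhere
    requests_last_minute: int  # supplied by a rate counter, not computed here


def decide(req: Request) -> str:
    """Evaluate rules in order; the first matching rule wins."""
    # 1. Most specific: search crawlers whose source IP is verified.
    if SEARCH_UA.search(req.user_agent) and req.ip_verified:
        return "allow"
    # 2. Broader: anything identifying as a training crawler.
    if TRAINING_UA.search(req.user_agent):
        return "block"
    # 3. Behavioral fallback: fast, bot-like, and unverified.
    if (
        req.requests_last_minute > RATE_LIMIT_PER_MINUTE
        and BOT_HINT.search(req.user_agent)
        and not req.ip_verified
    ):
        return "challenge"
    return "allow"
```

Placing the verified-search rule first means a legitimate search crawler is never caught by the rate limit, while a request that merely claims to be OAI-SearchBot from an unverified address still falls through to the behavioral check.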

IP verification and allowlisting represent the most reliable method for distinguishing legitimate AI crawlers from spoofed requests, as user-agent strings can be easily forged while IP addresses are significantly harder to spoof at scale. Major AI companies publish official IP ranges in JSON format, enabling automated verification without manual maintenance: OpenAI provides separate IP lists for GPTBot, OAI-SearchBot, and ChatGPT-User, while Amazon maintains a comprehensive list for Amazonbot. WAF rules can be configured to allowlist only requests originating from these verified IP ranges, effectively preventing bad actors from bypassing restrictions by simply changing their user-agent header. For organizations using server-level blocking via `.htaccess` or firewall rules, combining IP verification with user-agent matching provides defense-in-depth protection that operates independently of WAF configuration. Additionally, some crawlers respect HTML meta tags like `<meta name="robots" content="noarchive">`, which signals to compliant crawlers that content should not be used for model training, offering a supplementary control mechanism for publishers who want granular, page-level protection.

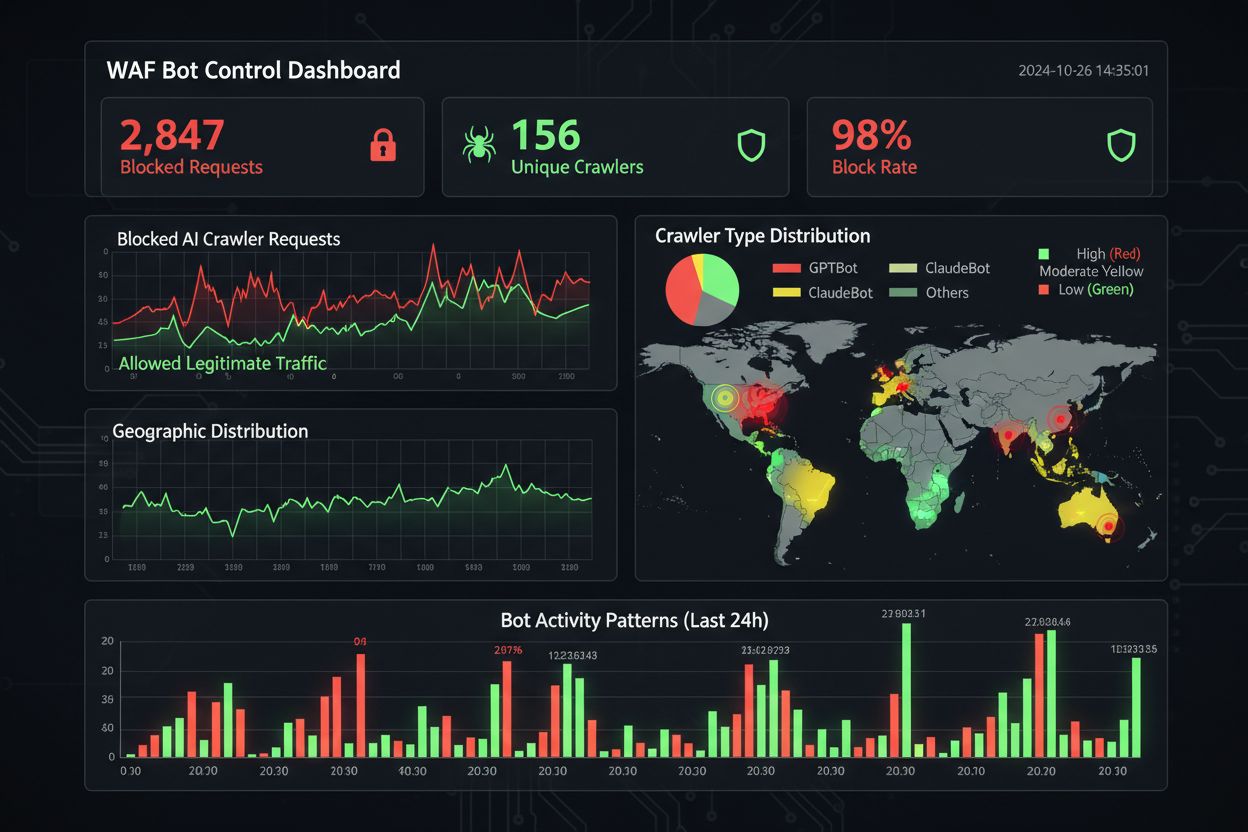

Effective monitoring and compliance require continuous visibility into crawler activity and verification that blocking rules are functioning as intended. Organizations should regularly analyze server access logs to identify which crawlers are accessing their sites and whether blocked crawlers are still making requests. Apache logs typically reside in `/var/log/apache2/access.log` while Nginx logs are at `/var/log/nginx/access.log`, and grep-based filtering can quickly identify suspicious patterns. Analytics platforms increasingly differentiate bot traffic from human visitors, allowing teams to measure the impact of crawler blocking on legitimate metrics like bounce rate, conversion tracking, and SEO performance. Tools like Cloudflare Radar provide global visibility into AI bot traffic patterns and can identify emerging crawlers not yet in your blocklist. From a compliance perspective, WAF logs generate audit trails demonstrating that organizations have implemented reasonable security measures to protect customer data and intellectual property, which is increasingly important for GDPR, CCPA, and other data protection regulations. Quarterly reviews of your crawler blocklist are essential, as new AI crawlers emerge regularly and existing crawlers update their user-agent strings; the community-maintained ai.robots.txt project on GitHub provides a valuable resource for tracking emerging threats.
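As an alternative to grep, the log review can be scripted. The sketch below assumes the common "combined" access log format (request line, status, size, referrer, user-agent, each quoted as usual); the regular expression, the token list, and the `summarize` function are illustrative assumptions and may need adjusting for a custom log format.

```python
import re
from collections import Counter, defaultdict

# Matches: "METHOD path protocol" status bytes "referrer" "user-agent"
LOG_LINE = re.compile(
    r'"[A-Z]+ \S+ [^"]*" (?P<status>\d{3}) \S+ "[^"]*" "(?P<ua>[^"]*)"'
)

# Crawler tokens named in this article; extend as your blocklist grows.
AI_TOKENS = (
    "GPTBot", "ClaudeBot", "CCBot", "Bytespider",
    "OAI-SearchBot", "PerplexityBot",
)


def summarize(lines):
    """Count responses per AI crawler token, grouped by HTTP status code."""
    hits = defaultdict(Counter)
    for line in lines:
        match = LOG_LINE.search(line)
        if not match:
            continue  # skip lines that are not in the expected format
        ua = match.group("ua").lower()
        for token in AI_TOKENS:
            if token.lower() in ua:
                hits[token][match.group("status")] += 1
                break
    return hits
```

Passing an open file handle for the access log to `summarize` yields per-crawler status counts. A blocked crawler that still shows `200` responses suggests a rule is not matching or is being evaluated in the wrong order.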

Balancing content protection with business objectives requires careful analysis of which crawlers to block versus allow, as overly aggressive blocking can reduce visibility in emerging AI-powered discovery channels. Blocking training crawlers like GPTBot and ClaudeBot protects intellectual property but has no direct traffic impact, since these crawlers never send referral traffic. However, blocking search crawlers like OAI-SearchBot and PerplexityBot may reduce visibility in AI-powered search results where users actively seek citations and sources—a trade-off that depends on your content strategy and audience. Some publishers are exploring alternative approaches, such as allowing search crawlers while blocking training crawlers, or implementing pay-per-crawl models where AI companies compensate publishers for content access. Tools like AmICited.com help publishers track whether their content is being cited in AI-generated responses, providing data to inform blocking decisions. The optimal WAF configuration depends on your business model: news publishers may prioritize blocking training crawlers to protect content while allowing search crawlers for visibility, while SaaS companies might block all AI crawlers to prevent competitors from analyzing pricing and features. Regular monitoring of traffic patterns and revenue metrics after implementing WAF rules ensures that your protection strategy aligns with actual business outcomes.

When comparing WAF solutions for AI crawler management, organizations should evaluate several key capabilities that distinguish enterprise-grade platforms from basic offerings. Cloudflare’s AI Crawl Control integrates with its WAF to provide pre-built rules for known AI crawlers, with the ability to block, allow, or implement pay-per-crawl monetization for specific crawlers—the platform’s order of precedence ensures that WAF rules execute before other security layers. AWS WAF Bot Control offers both basic and targeted protection levels, with the targeted level using browser interrogation, fingerprinting, and behavior heuristics to detect sophisticated bots that don’t self-identify, plus optional machine learning analysis of traffic statistics. Azure WAF provides similar capabilities through its managed rule sets, though with less AI-specific specialization than Cloudflare or AWS. Beyond these major platforms, specialized bot management solutions from vendors like DataDome offer advanced machine learning models trained specifically on AI crawler behavior, though at higher cost. The choice between solutions depends on your existing infrastructure, budget, and required level of sophistication—organizations already using Cloudflare benefit from seamless integration, while AWS customers can leverage Bot Control within their existing WAF infrastructure.

Best practices for AI crawler management emphasize a defense-in-depth approach combining multiple control mechanisms rather than relying on any single solution. Organizations should implement quarterly blocklist reviews to catch new crawlers and updated user-agent strings, maintain server log analysis to verify that blocked crawlers are not bypassing rules, and regularly test WAF configurations to ensure rules are executing in the correct order. The future of WAF technology will increasingly incorporate AI-powered threat detection that adapts in real time to emerging crawler tactics, with integration into broader security ecosystems providing context-aware protection. As regulations tighten around data scraping and AI training data sourcing, WAFs will become essential compliance tools rather than optional security features. Organizations should begin implementing comprehensive WAF rules for AI crawlers now, before emerging threats like browser-based AI agents and headless browser crawlers become widespread—the cost of inaction, measured in lost traffic, compromised analytics, and potential legal exposure, far exceeds the investment required for robust protection infrastructure.

What is the difference between robots.txt and WAF rules?

Robots.txt is an advisory file that relies on crawlers voluntarily respecting your directives, while WAF rules are enforced at the infrastructure level and apply to all requests regardless of crawler compliance. WAFs provide real-time detection and blocking, whereas robots.txt is static and easily bypassed by non-compliant crawlers.

Can AI crawlers ignore robots.txt?

Yes, many AI crawlers ignore robots.txt directives because they're designed to maximize training data collection. While well-behaved crawlers from major companies generally respect robots.txt, bad actors and some emerging crawlers don't. This is why WAF rules provide more reliable protection.

How can I tell which AI crawlers are accessing my site?

Check your server access logs (typically in `/var/log/apache2/access.log` or `/var/log/nginx/access.log`) for user-agent strings containing bot identifiers. Tools like Cloudflare Radar provide global visibility into AI crawler traffic patterns, and analytics platforms increasingly differentiate bot traffic from human visitors.

Will blocking AI crawlers affect my SEO?

Blocking training crawlers like GPTBot has no direct SEO impact since they don't send referral traffic. However, blocking search crawlers like OAI-SearchBot may reduce visibility in AI-powered search results. Google's AI Overviews follow standard Googlebot rules, so blocking Google-Extended doesn't affect regular search indexing.

Which WAF solution is best for managing AI crawlers?

Cloudflare's AI Crawl Control, AWS WAF Bot Control, and Azure WAF all offer effective solutions. Cloudflare provides the most AI-specific features with pre-built rules and pay-per-crawl options. AWS offers advanced machine learning detection, while Azure provides solid managed rule sets. Choose based on your existing infrastructure and budget.

How often should I update my WAF rules?

Review and update your WAF rules quarterly at minimum, as new AI crawlers emerge regularly and existing crawlers update their user-agent strings. Monitor the community-maintained ai.robots.txt project on GitHub for emerging threats, and check server logs monthly to identify new crawlers hitting your site.

Can I block training crawlers while allowing search crawlers?

Yes, this is a common strategy. You can configure WAF rules to block training crawlers like GPTBot and ClaudeBot while allowing search crawlers like OAI-SearchBot and PerplexityBot. This protects your content from being used in model training while maintaining visibility in AI-powered search results.

How much does a WAF cost?

WAF pricing varies by provider. Cloudflare offers WAF starting at $20/month with AI Crawl Control features. AWS WAF charges per web ACL and rule, typically $5-10/month for basic protection. Azure WAF is included with Application Gateway. Implementation costs are minimal compared to the value of protecting your content and maintaining accurate analytics.

AmICited tracks AI crawler activity and monitors how your content is cited across ChatGPT, Perplexity, Google AI Overviews, and other AI platforms. Get visibility into your AI presence and understand which crawlers are accessing your content.
