How to Dispute and Correct Inaccurate Information in AI Responses

Learn how to dispute inaccurate AI information, report errors to ChatGPT and Perplexity, and implement strategies to ensure your brand is accurately represented...

Learn how to identify, prevent, and correct AI misinformation about your brand. Discover 7 proven strategies and tools to protect your reputation in AI search results.

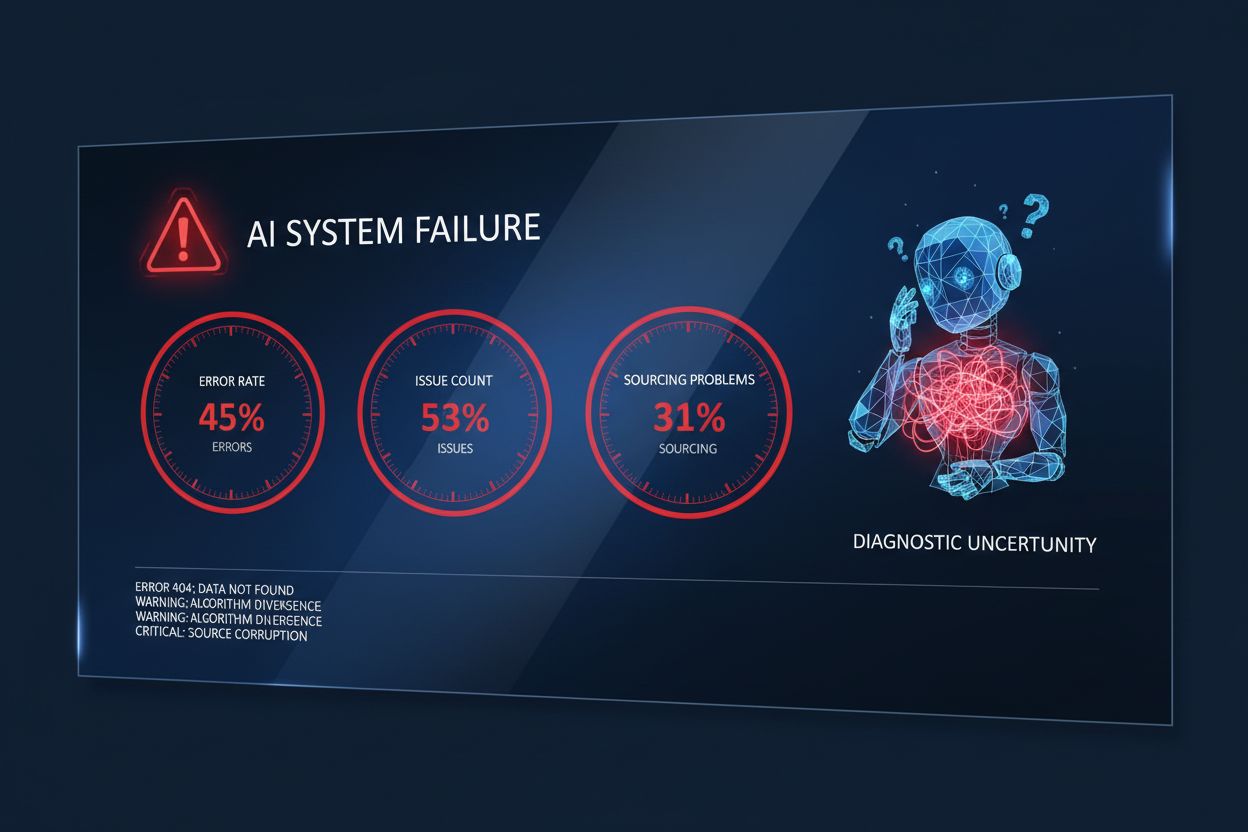

According to a study by the BBC and the European Broadcasting Union (EBU) involving 22 international public broadcasters, approximately 45% of AI queries produce erroneous answers. This isn’t a minor glitch; it’s a systemic problem affecting how people discover information about brands. When a potential customer asks ChatGPT, Perplexity, or Google Gemini about your company, there is, by that figure, nearly a one-in-two chance the answer contains an error. The study’s examples include AI incorrectly identifying the Pope, naming the wrong German Chancellor (Scholz instead of Merz), and citing an outdated NATO Secretary General. For brands, this means your reputation is being shaped by information you didn’t create and can’t fully control.

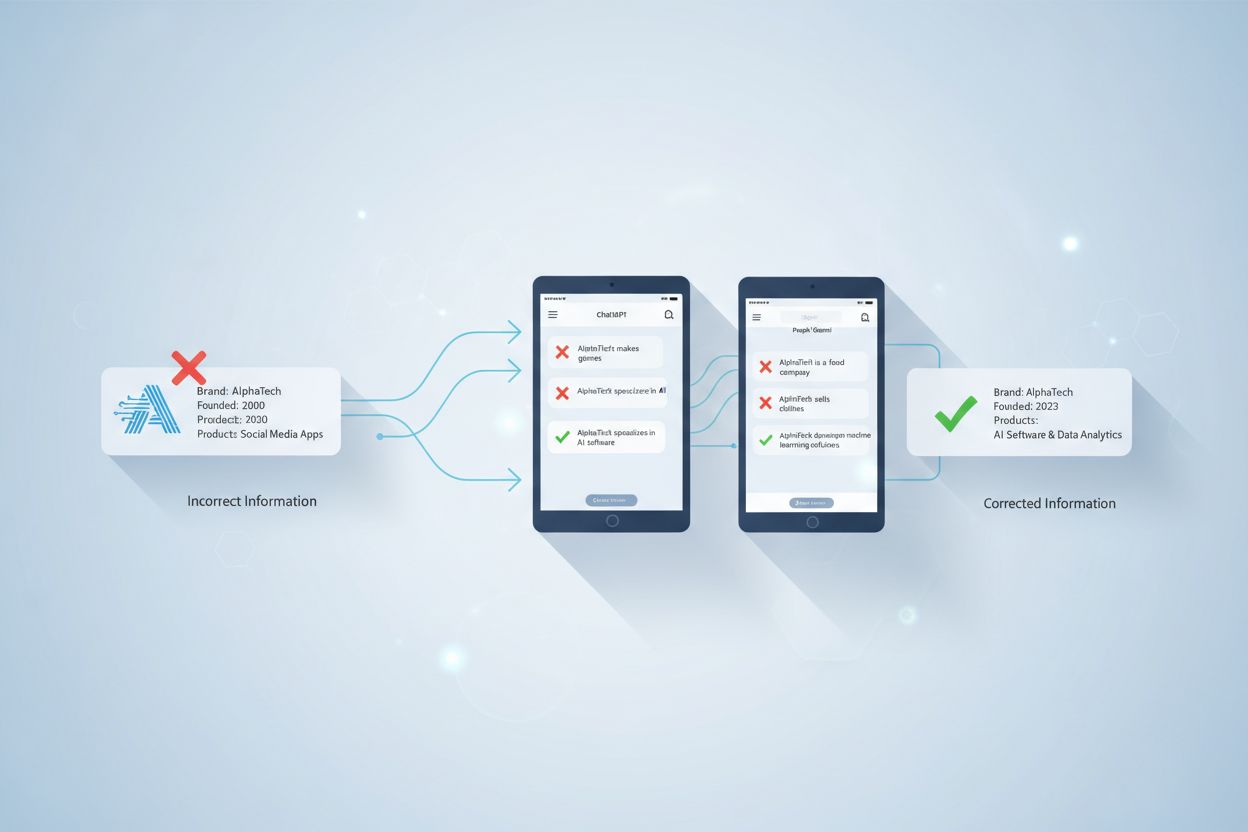

Before you can protect your brand, you need to understand what you’re fighting against. AI misinformation and AI hallucinations are two distinct problems that require different solutions. AI misinformation occurs when language models cite biased, outdated, or erroneous information from their training data, essentially amplifying existing errors from the internet. AI hallucinations, by contrast, happen when an AI invents facts entirely, creating nonexistent studies, URLs, or expert quotes to fill knowledge gaps. The distinction matters because misinformation can be corrected by updating the source material, while hallucinations call for publishing more comprehensive, authoritative information about your brand so the model has fewer gaps to fill.

| Characteristic | AI Misinformation | AI Hallucinations |
|---|---|---|
| Source | Biased or outdated training data | Invented content to fill gaps |
| Confidence Level | Sounds credible and authoritative | Often oddly specific but unverifiable |
| Identifiability | Repeats common myths and narratives | Creates fake URLs, studies, or experts |
| Solution | Update source material and content | Build comprehensive brand information |
| Example | Citing a 2006 BBC article about bird flu vaccines | Claiming a product feature that doesn’t exist |

DW’s independent study found that 53% of AI responses had significant issues, with 31% experiencing serious sourcing problems and 20% containing major factual errors. Gemini performed particularly poorly, with 72% of its responses having sourcing issues. Understanding which type of error your brand faces is crucial for developing an effective response strategy.

The problem starts with how large language models actually work. LLMs don’t “understand” information the way humans do. Instead, they rely on mathematical representations called embeddings that capture statistical relationships between words and concepts. When trained on large portions of the internet, these models absorb not just accurate information but also biases, outdated facts, and false narratives. This creates what experts call the “poisoned corpus” problem: if flawed information exists in the training data, the AI will confidently reproduce it.

One major culprit is Reddit’s outsized influence on AI training. Research shows that Reddit outranks corporate websites across all industries in AI search results. Google even signed a reported $60 million deal to train its AI models on Reddit posts. This means casual forum discussions, unverified claims, and outdated complaints about your brand can carry more weight than your official website. Additionally, AI systems have knowledge cutoff dates. The cutoff varies by model version (April 2024 is the date cited here for ChatGPT), so recent changes to your products, leadership, or services may not be reflected unless the platform also retrieves live web results. When multiple sources contradict each other, the AI tends to favor the statistically most common answer, which isn’t always the most accurate.

The consequences of AI misinformation extend far beyond reputation damage. When potential customers receive incorrect information about your brand from AI platforms, they make purchasing decisions based on false premises. A customer might avoid your product because an AI cited an outdated review, or choose a competitor based on fabricated features. This directly impacts your bottom line—lost leads, reduced conversions, and damaged customer lifetime value. The crisis management implications are severe: unlike traditional PR crises that unfold over days or weeks, AI misinformation spreads instantly to millions of users across multiple platforms simultaneously. Your competitors gain an unfair advantage if their brands are accurately represented while yours isn’t. Perhaps most troubling, customers increasingly trust AI answers more than they trust traditional search results, making this problem exponentially more damaging to brand perception.

You can’t fix what you don’t know about. The first step in protecting your brand is conducting a comprehensive audit of how AI platforms perceive and represent you. Start by creating a systematic review process—either manually or using automated tools like OmniSEO that track AI visibility across multiple platforms. Prompt ChatGPT, Perplexity, Google Gemini, and Microsoft Copilot with questions your customers would ask: “What is [your company]?”, “What products does [company] offer?”, “What do people say about [company]?”, and “Is [company] trustworthy?”

Analyze the responses for biased opinions, inaccuracies, unverified claims, and harmful content. Pay special attention to which sources the AI cites most frequently—if it’s relying on Reddit threads and old reviews instead of your official website, you’ve identified a critical problem. Look for patterns: Are certain topics consistently triggering negative responses? Does the AI show bias when discussing specific aspects of your business? Are there noticeable knowledge gaps where the AI lacks information entirely? Document everything, noting which AI platforms have the worst information about your brand and what sources they’re citing. This audit should be conducted quarterly, as AI models are continuously updated and new misinformation can emerge.
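The audit described above can be kept organized with a small script. The sketch below is illustrative only: it fills the four question templates with a company name and tallies which domains appear in the sources you recorded by hand from each AI response. The company name `ExampleCo`, the record fields (`platform`, `prompt`, `cited_urls`), and the sample URLs are all hypothetical, and the script does not call any AI platform.

```python
from collections import Counter
from urllib.parse import urlparse

# Question templates taken from the audit step described above.
QUESTION_TEMPLATES = [
    "What is {company}?",
    "What products does {company} offer?",
    "What do people say about {company}?",
    "Is {company} trustworthy?",
]


def build_prompts(company):
    """Fill each audit question template with the company name."""
    return [template.format(company=company) for template in QUESTION_TEMPLATES]


def cited_domains(records):
    """Count how often each domain is cited across recorded AI responses."""
    counts = Counter()
    for record in records:
        for url in record["cited_urls"]:
            host = urlparse(url).netloc.lower()
            if host.startswith("www."):
                host = host[4:]  # treat www.reddit.com and reddit.com as one source
            if host:
                counts[host] += 1
    return counts


# Hypothetical audit log, filled in manually after prompting each platform.
audit_log = [
    {
        "platform": "ChatGPT",
        "prompt": "What is ExampleCo?",
        "cited_urls": [
            "https://www.reddit.com/r/example/comments/1",
            "https://example.com/about",
        ],
    },
    {
        "platform": "Perplexity",
        "prompt": "Is ExampleCo trustworthy?",
        "cited_urls": ["https://reddit.com/r/example/comments/2"],
    },
]

prompts = build_prompts("ExampleCo")
domain_counts = cited_domains(audit_log)
for domain, count in domain_counts.most_common():
    print(domain, count)
```

If forum domains dominate the tally while your own site barely appears, that is the sourcing pattern the audit is meant to surface.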

Once you’ve identified how AI platforms misrepresent your brand, implement these seven proven strategies:

1. Flag inaccurate content directly: Most AI platforms allow users to report incorrect responses. When you find misinformation, flag it immediately and provide accurate information as a correction. This feedback does not guarantee an immediate fix, but it gives the platform a signal it can use to reduce similar errors in future updates.

2. Update high-impact website content: If AI is citing outdated or contradictory information from your website, refresh that content immediately. Ensure your about page, service descriptions, and key landing pages are current, clear, and structured for AI readability using headers, bullet points, and FAQ sections.

3. Create “best of” positioning content: AI frequently cites “best of” articles when recommending products. Write content like “The 5 Best Solutions for [Problem]” and position your product as the top choice. This can influence the recommendations AI gives to your target audience.

4. Engage authentically in cited communities: If AI is citing Reddit, Quora, or industry forums, build a presence there. Answer questions about your industry, respond to mentions of your brand, and share valuable insights. Avoid overt self-promotion; focus on genuine helpfulness.

5. Create original forum posts with case studies: Beyond responding to existing discussions, post your own content on commonly cited platforms. Share case studies showing real customer results, outline problems you’ve solved, and demonstrate your expertise. These posts become sources AI can cite.

6. Build relationships with influencers and publishers: Identify which industry voices and publications AI cites most. If they’ve posted inaccurate information about your brand, reach out to clarify. Partner with them to create accurate, positive content that AI can cite in future responses.

7. Encourage detailed customer reviews: Happy customers are your best defense against misinformation. Actively encourage satisfied clients to leave detailed reviews on review sites, industry directories, and platforms AI cites. Detailed reviews that address specific benefits are more likely to be cited by AI than generic praise.

While these strategies are essential, they’re only effective if you know what’s happening in real-time. This is where AmICited becomes indispensable. AmICited is specifically designed to monitor how AI platforms—ChatGPT, Perplexity, Google AI Overviews, and others—reference your brand. Rather than manually checking each AI platform weekly, AmICited automatically tracks mentions, identifies misinformation patterns, and alerts you to emerging issues before they damage your reputation.

The platform provides detailed insights into which sources AI is citing about your brand, how frequently misinformation appears, and which AI platforms are most problematic. You get real-time alerts when new inaccuracies emerge, allowing you to respond quickly with corrections and updated content. AmICited’s competitive analysis feature shows how your brand’s AI representation compares to competitors, revealing opportunities to gain market advantage through better AI visibility. By using AmICited alongside the seven strategies above, you transform brand protection from a reactive crisis management exercise into a proactive, data-driven process. The competitive advantage is clear: brands that monitor and manage their AI presence will outpace those that ignore it.

Long-term brand protection requires building an ecosystem where AI platforms naturally cite accurate information about you. This starts with creating authoritative, well-structured content that AI systems prefer. Use clear hierarchies with H1, H2, and H3 headings; organize information with bullet points and numbered lists; include FAQ sections that answer common questions; and create comparison tables that help AI understand your positioning. Implement structured data markup (schema.org) on your website so search engines and AI systems can easily extract accurate information about your company, products, and services.
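As a rough illustration of the structured data step, the sketch below builds a schema.org `Organization` object as JSON-LD and wraps it in the script tag that would go into a page's HTML. The helper name `organization_jsonld` and every value passed to it (company name, URLs, description) are placeholders, not real data; only the property names (`name`, `url`, `description`, `sameAs`) come from the schema.org vocabulary.

```python
import json


def organization_jsonld(name, url, description, same_as):
    """Return a JSON-LD string describing a schema.org Organization."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "description": description,
        # sameAs lists official profiles that refer to the same organization.
        "sameAs": list(same_as),
    }
    return json.dumps(data, indent=2)


# Placeholder values for illustration only.
markup = organization_jsonld(
    name="ExampleCo",
    url="https://example.com",
    description="ExampleCo makes scheduling software for small clinics.",
    same_as=["https://www.linkedin.com/company/exampleco"],
)

# JSON-LD is embedded in HTML inside a script tag with this MIME type.
script_tag = '<script type="application/ld+json">\n' + markup + "\n</script>"
print(script_tag)
```

Generating the markup from one source of company facts, rather than hand-editing it per page, makes it easier to keep the values identical everywhere they appear.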

Become the go-to source for information in your industry by publishing thought leadership content that addresses gaps competitors are missing. When you’re consistently cited as an authoritative source, AI systems learn to trust your information over less reliable sources. Maintain a “brand hub” on your website—a centralized location with accurate company information, leadership bios, product specifications, and customer success stories. Update this regularly and ensure consistency across all your digital properties. The goal is to make your official information so comprehensive, authoritative, and well-structured that AI systems naturally prefer it over Reddit threads and outdated reviews. This isn’t a quick fix; it’s a long-term investment in your brand’s digital foundation that pays dividends as AI becomes increasingly central to how customers discover and evaluate companies.
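One way to check consistency across digital properties is to treat the brand hub as the reference and compare each property against it. The sketch below is a minimal example under that assumption; the function name `find_inconsistencies`, the property names, and the sample facts are all hypothetical, and the values would in practice be collected by hand or by your own tooling.

```python
def find_inconsistencies(hub_facts, properties):
    """Compare each property's facts with the brand hub.

    Returns a list of (property, field, expected, found) tuples for every
    field a property states differently from the hub. Fields a property
    does not mention are skipped. Comparison ignores case and outer spaces.
    """
    mismatches = []
    for property_name, facts in properties.items():
        for field, expected in hub_facts.items():
            found = facts.get(field)
            if found is None:
                continue
            if found.strip().lower() != expected.strip().lower():
                mismatches.append((property_name, field, expected, found))
    return mismatches


# Hypothetical reference facts from the brand hub.
hub = {"ceo": "Jane Doe", "headquarters": "Berlin"}

# Hypothetical facts as currently published on other properties.
published = {
    "linkedin": {"ceo": "Jane Doe", "headquarters": "Munich"},
    "press_kit": {"ceo": "jane doe"},
}

issues = find_inconsistencies(hub, published)
for property_name, field, expected, found in issues:
    print(f"{property_name}: {field} is '{found}', hub says '{expected}'")
```

Each mismatch is a candidate source of contradictory information for an AI system to pick up, so it is worth correcting at the source.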

According to a BBC and European Broadcasting Union study involving 22 international public broadcasters, approximately 45% of AI queries produce erroneous answers. DW's independent study found that 53% of AI responses had significant issues, with 31% experiencing serious sourcing problems and 20% containing major factual errors.

AI misinformation occurs when language models cite biased, outdated, or erroneous information from their training data. AI hallucinations happen when an AI invents facts entirely, creating nonexistent studies or expert quotes. Misinformation can be corrected by updating the source material, while hallucinations call for publishing more comprehensive, authoritative information about your brand so the model has fewer gaps to fill.

Research shows that Reddit outranks corporate websites across all industries in AI search results. One contributing factor is that Google signed a reported $60 million deal to train its AI models on Reddit posts. More broadly, AI systems are trained on large portions of the internet, where casual forum discussions often carry more statistical weight than official company information.

You can manually prompt ChatGPT, Perplexity, Google Gemini, and Microsoft Copilot with questions your customers would ask, then analyze the responses for inaccuracies. For continuous monitoring, use tools like AmICited that automatically track how AI platforms reference your brand and alert you to emerging misinformation.

The fastest approach combines three tactics: (1) Flag inaccurate responses directly in AI platforms with corrections, (2) Update outdated or contradictory information on your website, and (3) Create authoritative content that AI systems prefer to cite. Most corrections take effect within weeks as AI models are updated.

You can't completely prevent it, but you can significantly reduce it by keeping your website content current, implementing structured data markup, and creating comprehensive brand information that AI systems prefer. The more authoritative and well-structured your official information is, the more likely AI will cite it instead of outdated sources.

Quick fixes (flagging errors, updating website content) show results within weeks. However, building long-term trust with AI systems takes 2-3 months of consistent effort. Creating a comprehensive brand information ecosystem and becoming an authoritative source in your industry is a long-term investment that pays dividends over 6-12 months.

AmICited monitors how ChatGPT, Perplexity, Google AI Overviews, and other platforms reference your brand in real-time. It identifies misinformation patterns, alerts you to emerging issues before they spread, shows which sources AI is citing, and provides competitive analysis to help you gain market advantage through better AI visibility.

