Sunset Platforms and AI Visibility: Managing Transitions

Learn how to manage AI platform transitions and maintain citation visibility when platforms sunset. Strategic guide for handling deprecated AI platforms and tra...

Learn how to adapt your AI strategy when platforms change. Discover migration strategies, monitoring tools, and best practices for handling AI platform deprecation and updates.

The retirement of OpenAI’s GPT-4o API in 2026 represents a watershed moment for businesses built on AI platforms—it’s no longer a theoretical concern but an immediate reality that demands strategic attention. Unlike traditional software deprecations that often provide extended support windows, AI platform changes can happen with relatively short notice, forcing organizations to make rapid decisions about their technology stack. Platforms retire models for multiple compelling reasons: safety concerns about older systems that may not meet current standards, liability protection against potential misuse or harmful outputs, evolving business models that prioritize newer offerings, and the need to consolidate resources around cutting-edge research. When a business has integrated a specific model deeply into its operations—whether for customer-facing applications, internal analytics, or critical decision-making systems—the announcement of API retirement creates immediate pressure to migrate, test, and validate alternatives. The financial impact extends beyond simple engineering costs; there’s lost productivity during migration, potential service disruptions, and the risk of performance degradation if alternative models don’t match the original’s capabilities. Organizations that haven’t prepared for this scenario often find themselves in a reactive scramble, negotiating extended support timelines or accepting suboptimal alternatives simply because they lack a coherent migration strategy. The key insight is that platform deprecation is no longer an edge case—it’s a predictable feature of the AI landscape that requires proactive planning.

Traditional business continuity frameworks, such as ISO 22301, were designed around infrastructure failures—systems go down, and you restore them from backups or failover systems. These frameworks rely on metrics like Recovery Time Objective (RTO) and Recovery Point Objective (RPO) to measure how quickly you can restore service and how much data loss is acceptable. However, AI failures operate fundamentally differently, and this distinction is critical: the system continues running, producing outputs, and serving users while silently making wrong decisions. A fraud detection model might approve fraudulent transactions at an increasing rate; a pricing engine might systematically underprice products; a loan approval system might develop hidden biases that discriminate against protected groups—all while appearing to function normally. Traditional continuity plans have no mechanism to detect these failures because they’re not looking for degraded accuracy or emerging bias; they’re looking for system crashes and data loss. The new reality demands additional metrics: Recovery Accuracy Objective (RAO), which defines acceptable performance thresholds, and Recovery Fairness Objective (RFO), which ensures that model changes don’t introduce or amplify discriminatory outcomes. Consider a financial services company using an AI model for credit decisions; if that model drifts and begins systematically denying credit to certain demographics, the traditional continuity plan sees no problem—the system is up and running. Yet the business faces regulatory violations, reputational damage, and potential legal liability.

| Aspect | Traditional Infrastructure Failures | AI Model Failures |
|---|---|---|
| Detection | Immediate (system down) | Delayed (outputs appear normal) |
| Impact Visibility | Clear and measurable | Hidden in accuracy metrics |
| Recovery Metric | RTO/RPO | RAO/RFO needed |
| Root Cause | Hardware/network issues | Drift, bias, data changes |
| User Experience | Service unavailable | Service available but wrong |
| Compliance Risk | Data loss, downtime | Discrimination, liability |
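The RAO and RFO metrics described above have no single standard formula, so the sketch below makes two loudly stated assumptions: RAO is treated as a minimum accuracy on a labeled holdout sample, and RFO as a maximum gap in approval (positive-outcome) rates between groups, a demographic-parity-style measure. The names `Thresholds` and `check_objectives` are hypothetical. Running a check like this on a schedule is one way to catch the "running but wrong" failures a traditional continuity plan misses.

```python
from dataclasses import dataclass


@dataclass
class Thresholds:
    # Assumed RAO: lowest acceptable accuracy on a labeled holdout sample.
    min_accuracy: float
    # Assumed RFO: largest acceptable gap in approval rates between groups.
    max_parity_gap: float


def accuracy(preds, labels):
    if not labels:
        raise ValueError("need at least one labeled example")
    correct = sum(p == y for p, y in zip(preds, labels))
    return correct / len(labels)


def approval_rates(preds, groups):
    """Share of positive (1) predictions within each group."""
    totals, approvals = {}, {}
    for p, g in zip(preds, groups):
        totals[g] = totals.get(g, 0) + 1
        approvals[g] = approvals.get(g, 0) + (1 if p == 1 else 0)
    return {g: approvals[g] / totals[g] for g in totals}


def check_objectives(preds, labels, groups, t: Thresholds):
    acc = accuracy(preds, labels)
    rates = approval_rates(preds, groups)
    gap = max(rates.values()) - min(rates.values())
    return {
        "accuracy": acc,
        "parity_gap": gap,
        "meets_rao": acc >= t.min_accuracy,
        "meets_rfo": gap <= t.max_parity_gap,
    }
```

In this sketch a model can pass the accuracy check while failing the fairness check, which mirrors the credit-decision example: the system looks healthy on one axis and is quietly non-compliant on another.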

Platform deprecation cycles typically follow a predictable pattern, though the timeline can vary significantly depending on the platform's maturity and user base. Most platforms announce deprecation with a 12-24 month notice period to give developers time to migrate, though this window is often shorter for rapidly evolving AI platforms where newer models represent significant improvements. The announcement itself creates immediate pressure: development teams must assess impact, evaluate alternatives, plan migrations, and secure budget and resources, all while maintaining current operations. Version management becomes substantially more complex when organizations run multiple models simultaneously during transition periods; you're essentially maintaining two parallel systems, which doubles testing and monitoring overhead. The migration involves more than switching API calls: it includes retraining or re-validating downstream components that consume the model's outputs, confirming that the new model performs acceptably on your specific use cases, and potentially retuning parameters that were optimized for the deprecated model's behavior. Some organizations face additional constraints: regulatory approval processes that require validation of new models, contractual obligations that specify particular model versions, or legacy systems so deeply integrated with a specific API that refactoring requires substantial engineering effort. Understanding these cycles lets you move from reactive scrambling to proactive planning, building migration timelines into your product roadmaps rather than treating them as emergencies.
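One way to build a deprecation window into a roadmap is to split the time between the announcement date and the retirement date into phases. The sketch below assumes hypothetical phase shares (assessment, planning, testing, rollout, plus a buffer before shutdown); `migration_milestones` and `PHASE_SHARES` are illustrative names, and the percentages should be tuned to your own systems.

```python
from datetime import date, timedelta

# Hypothetical share of the notice window given to each phase.
PHASE_SHARES = [
    ("assessment", 0.10),
    ("planning", 0.15),
    ("testing", 0.40),
    ("rollout", 0.25),
    ("buffer", 0.10),  # slack before the retirement date
]


def migration_milestones(announced: date, retires: date, shares=PHASE_SHARES):
    """Return (phase, start, end) tuples covering announced..retires."""
    window_days = (retires - announced).days
    if window_days <= 0:
        raise ValueError("retirement date must be after announcement date")
    milestones = []
    start = announced
    elapsed = 0.0
    for i, (name, share) in enumerate(shares):
        elapsed += share
        is_last = i == len(shares) - 1
        # Pin the final phase to the retirement date so rounding never overshoots it.
        end = retires if is_last else announced + timedelta(days=round(window_days * elapsed))
        milestones.append((name, start, end))
        start = end
    return milestones


if __name__ == "__main__":
    for phase, start, end in migration_milestones(date(2025, 1, 1), date(2026, 1, 1)):
        print(f"{phase:<11} {start} -> {end}")
```

A shorter notice window compresses every phase proportionally, which makes the cost of a late start visible early.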

The direct costs of platform migration are often underestimated, extending far beyond the obvious engineering hours required to update API calls and integrate new models. Development effort includes not just code changes but architectural modifications—if your system was optimized around specific latency characteristics, throughput limits, or output formats of the deprecated model, the new platform may require significant refactoring. Testing and validation represents a substantial hidden cost; you can’t simply swap models and hope for the best, especially in high-stakes applications. Every use case, edge case, and integration point must be tested against the new model to ensure it produces acceptable results. Performance differences between models can be dramatic—the new model might be faster but less accurate, cheaper but with different output characteristics, or more capable but requiring different input formatting. Compliance and audit implications add another layer: if your organization operates in regulated industries (finance, healthcare, insurance), you may need to document the migration, validate that the new model meets regulatory requirements, and potentially obtain approval before switching. The opportunity cost of engineering resources diverted to migration work is substantial—those developers could be building new features, improving existing systems, or addressing technical debt. Organizations often discover that the “new” model requires different hyperparameter tuning, different data preprocessing, or different monitoring approaches, extending the migration timeline and costs.
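A back-of-envelope estimate helps make these costs explicit before budget conversations. The sketch below is a simple model under stated assumptions: labor is engineering, testing, and compliance hours times a fully loaded hourly rate, plus the extra cost of running both models in parallel. `MigrationEstimate` is a hypothetical name, all inputs are placeholders you would replace with your own figures, and opportunity cost is deliberately not modeled.

```python
from dataclasses import dataclass


@dataclass
class MigrationEstimate:
    engineering_hours: float    # code changes and architectural refactoring
    testing_hours: float        # validation across use cases and edge cases
    compliance_hours: float     # documentation, audit, regulatory approval
    hourly_rate: float          # fully loaded cost per engineer-hour
    parallel_run_months: float  # months both models run side by side
    monthly_run_cost: float     # extra inference and monitoring cost per overlap month

    def direct_labor(self) -> float:
        hours = self.engineering_hours + self.testing_hours + self.compliance_hours
        return hours * self.hourly_rate

    def parallel_run(self) -> float:
        return self.parallel_run_months * self.monthly_run_cost

    def total(self) -> float:
        # Opportunity cost of diverted engineers is not included here.
        return self.direct_labor() + self.parallel_run()


if __name__ == "__main__":
    est = MigrationEstimate(200, 300, 50, 100.0, 2, 1500.0)
    print(est.direct_labor(), est.parallel_run(), est.total())
```

With these illustrative inputs, testing hours outweigh engineering hours, which reflects the point above that validation is often the larger hidden cost.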

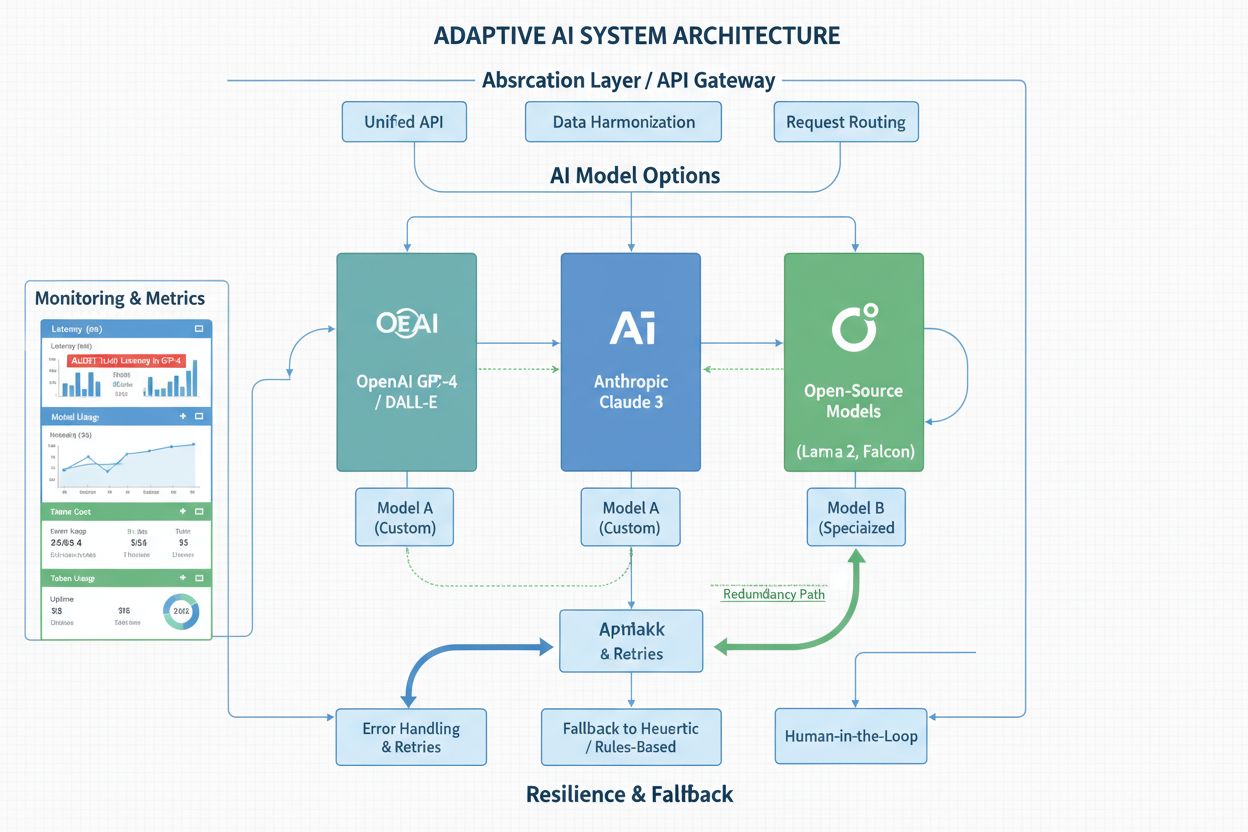

The most resilient organizations design their AI systems with platform independence as a core architectural principle, recognizing that today’s cutting-edge model will eventually be deprecated. Abstraction layers and API wrappers are essential tools for this approach—rather than embedding API calls directly throughout your codebase, you create a unified interface that abstracts away the specific model provider. This means when you need to migrate from one platform to another, you only need to update the wrapper, not dozens of integration points across your system. Multi-model strategies provide additional resilience; some organizations run multiple models in parallel for critical decisions, using ensemble methods to combine predictions or maintaining a secondary model as a fallback. This approach adds complexity and cost but provides insurance against platform changes—if one model is deprecated, you already have another in production. Fallback mechanisms are equally important: if your primary model becomes unavailable or produces suspicious outputs, your system should gracefully degrade to a secondary option rather than failing completely. Robust monitoring and alerting systems allow you to detect performance degradation, accuracy drift, or unexpected behavior changes before they impact users. Documentation and version control practices should explicitly track which models are in use, when they were deployed, and what their performance characteristics are—this institutional knowledge is invaluable when migration decisions must be made quickly. Organizations that invest in these architectural patterns find that platform changes become manageable events rather than crises.
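The abstraction-layer and fallback ideas can be combined in a single client that the rest of the codebase calls instead of any vendor SDK. This is a minimal sketch assuming each provider is wrapped in an adapter exposing a `complete(prompt)` method; `ModelProvider`, `FallbackClient`, `ProviderError`, and the two example adapters are hypothetical and do not correspond to any real vendor API.

```python
from typing import Callable, Protocol, Sequence


class ProviderError(Exception):
    """Raised by an adapter when its backing model is unavailable or retired."""


class ModelProvider(Protocol):
    name: str

    def complete(self, prompt: str) -> str: ...


class FallbackClient:
    """Single entry point: tries providers in order and degrades gracefully."""

    def __init__(self, providers: Sequence[ModelProvider],
                 is_acceptable: Callable[[str], bool] = lambda out: bool(out.strip())):
        if not providers:
            raise ValueError("need at least one provider")
        self.providers = list(providers)
        self.is_acceptable = is_acceptable  # hook for rejecting suspicious outputs

    def complete(self, prompt: str) -> tuple[str, str]:
        errors = []
        for provider in self.providers:
            try:
                output = provider.complete(prompt)
            except ProviderError as exc:
                errors.append(f"{provider.name}: {exc}")
                continue
            if self.is_acceptable(output):
                return provider.name, output
            errors.append(f"{provider.name}: rejected output")
        raise ProviderError("all providers failed: " + "; ".join(errors))


# Hypothetical adapters standing in for real vendor integrations.
class RetiredModel:
    name = "legacy-model"

    def complete(self, prompt: str) -> str:
        raise ProviderError("model retired")


class ReplacementModel:
    name = "replacement-model"

    def complete(self, prompt: str) -> str:
        return f"answer to: {prompt}"
```

When a model is retired, only the adapter list passed to `FallbackClient` changes; call sites that use `client.complete(...)` stay the same.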

Staying informed about platform announcements and deprecation notices requires systematic monitoring rather than hoping you’ll catch important news in your email inbox. Most major AI platforms publish deprecation timelines on their official blogs, documentation sites, and developer portals, but these announcements can be easy to miss amid the constant stream of product updates and feature releases. Setting up automated alerts for specific platforms—using RSS feeds, email subscriptions, or dedicated monitoring services—ensures you’re notified immediately when changes are announced rather than discovering them months later. Beyond official announcements, tracking AI model performance changes in production is critical; platforms sometimes modify models subtly, and you may notice accuracy degradation or behavioral changes before any official announcement. Tools like AmICited provide valuable monitoring capabilities for tracking how AI platforms reference your brand and content, offering insights into platform changes and updates that might affect your business. Competitive intelligence on platform updates helps you understand industry trends and anticipate which models might be deprecated next—if competitors are already migrating away from a particular model, that’s a signal that change is coming. Some organizations subscribe to platform-specific newsletters, participate in developer communities, or maintain relationships with platform account managers who can provide early warning of upcoming changes. The investment in monitoring infrastructure pays dividends when you receive advance notice of deprecation, giving you months of additional planning time rather than scrambling to migrate on a compressed timeline.
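Automated alerting on announcement feeds can start as a small keyword filter. The sketch below assumes an RSS 2.0 feed whose `<item>` elements carry `title`, `link`, and `description` (Atom feeds use different element names); the feed URL is whatever you supply, and `fetch_feed`, `deprecation_items`, and the keyword list are hypothetical. It flags only items that mention both a lifecycle keyword and one of the models you actually use.

```python
import xml.etree.ElementTree as ET
from urllib.request import urlopen

# Hypothetical lifecycle keywords; "deprecat" matches deprecate/deprecated/deprecating.
KEYWORDS = ("deprecat", "retire", "sunset", "end of life", "shutdown")


def fetch_feed(url: str, timeout: float = 10.0) -> str:
    """Download a feed; the URL is supplied by you, not assumed here."""
    with urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8")


def deprecation_items(rss_xml: str, watched_models: list[str]) -> list[dict]:
    """Return feed items that mention a lifecycle keyword and a watched model."""
    root = ET.fromstring(rss_xml)
    hits = []
    for item in root.iter("item"):
        title = item.findtext("title", default="")
        desc = item.findtext("description", default="")
        text = f"{title} {desc}".lower()
        mentions_change = any(k in text for k in KEYWORDS)
        mentions_model = any(m.lower() in text for m in watched_models)
        if mentions_change and mentions_model:
            hits.append({"title": title, "link": item.findtext("link", default="")})
    return hits
```

Run on a schedule, the result can feed an email or chat alert; requiring a watched model name keeps routine product news from drowning out the notices that matter.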

A well-structured platform change response plan transforms what could be a chaotic emergency into a managed process with clear phases and decision points. The assessment phase begins immediately upon learning of a deprecation announcement; your team evaluates the impact on all systems using the deprecated model, estimates the effort required for migration, and identifies any regulatory or contractual constraints that might affect your timeline. This phase produces a detailed inventory of affected systems, their criticality, and their dependencies—information that drives all subsequent decisions. The planning phase develops a detailed migration roadmap, allocating resources, establishing timelines, and identifying which systems will migrate first (typically starting with non-critical systems to build experience before tackling mission-critical applications). The testing phase is where most of the actual work happens; teams validate that alternative models perform acceptably on your specific use cases, identify any performance gaps or behavioral differences, and develop workarounds or optimizations as needed. The rollout phase executes the migration in stages, starting with canary deployments to a small percentage of traffic, monitoring for issues, and gradually increasing the percentage of traffic routed to the new model. Post-migration monitoring continues for weeks or months, tracking performance metrics, user feedback, and system behavior to ensure the migration was successful and the new model is performing as expected. Organizations that follow this structured approach consistently report smoother migrations with fewer surprises and less disruption to users.
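The staged rollout can be sketched with deterministic hash bucketing, so each user consistently sees the same model while the canary percentage grows. The stage percentages, the error-rate gate, and the names `route` and `next_stage` are hypothetical choices for illustration; a real rollout would gate on whatever accuracy, fairness, and user-feedback metrics you monitor.

```python
import hashlib

ROLLOUT_STAGES = [1, 5, 25, 50, 100]  # hypothetical percentages of traffic


def bucket(request_key: str) -> int:
    """Map a stable key (e.g. a user ID) to a bucket in [0, 100)."""
    digest = hashlib.sha256(request_key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def route(request_key: str, canary_percent: int) -> str:
    return "new-model" if bucket(request_key) < canary_percent else "current-model"


def next_stage(current_percent: int, error_rate: float, max_error_rate: float) -> int:
    """Advance to the next stage if healthy; roll back to 0% otherwise."""
    if error_rate > max_error_rate:
        return 0
    for stage in ROLLOUT_STAGES:
        if stage > current_percent:
            return stage
    return current_percent  # already at full rollout
```

Hashing rather than random sampling keeps the experience stable per user and makes issues reproducible, since the same key always lands in the same bucket.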

Selecting a replacement platform or model requires systematic evaluation against clear selection criteria that reflect your organization's specific needs and constraints. Performance characteristics are obvious (accuracy, latency, throughput, and cost), but equally important are less obvious factors like vendor stability (will this platform still exist in five years?), support quality, documentation, and community size. The open-source vs. proprietary trade-off deserves careful consideration; open-source models offer independence from vendor decisions and the ability to run models on your own infrastructure, but they may require more engineering effort to deploy and maintain. Proprietary platforms offer convenience, regular updates, and vendor support, but they introduce vendor lock-in risks: your business becomes dependent on the platform's continued existence and pricing decisions. Cost-benefit analysis should account for total cost of ownership, not just per-API-call pricing; a cheaper model that requires more engineering effort to integrate or produces lower-quality results may be more expensive overall. Long-term sustainability is a critical but often overlooked factor; choosing a model from a well-funded, stable platform reduces the risk of future deprecations, while choosing a model from a startup or research project introduces higher risk of platform changes. Some organizations deliberately choose multiple platforms to reduce dependency on any single vendor, accepting higher complexity in exchange for reduced risk of future disruptions. The evaluation process should be documented and revisited periodically, as the landscape of available models and platforms changes constantly.
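A weighted scoring matrix is one common way to make these criteria comparable across candidates. The criteria below follow the factors listed above, but the weights, the 1-5 scoring scale, and the candidate names are hypothetical; the value of the exercise is that the weighting is written down and can be revisited.

```python
# Hypothetical weights (sum to 1.0); "portability" stands in for lock-in risk.
CRITERIA_WEIGHTS = {
    "accuracy": 0.30,
    "latency": 0.15,
    "cost": 0.15,
    "vendor_stability": 0.20,
    "support_and_docs": 0.10,
    "portability": 0.10,
}


def weighted_score(scores: dict[str, float], weights=CRITERIA_WEIGHTS) -> float:
    """Combine per-criterion scores (e.g. on a 1-5 scale) into one number."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weights[c] * scores[c] for c in weights)


def rank(candidates: dict[str, dict[str, float]], weights=CRITERIA_WEIGHTS):
    """Return (name, score) pairs, best first."""
    scored = ((name, weighted_score(s, weights)) for name, s in candidates.items())
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    candidates = {
        "proprietary-api": {"accuracy": 5, "latency": 4, "cost": 3,
                            "vendor_stability": 4, "support_and_docs": 5, "portability": 2},
        "self-hosted-open-model": {"accuracy": 4, "latency": 3, "cost": 4,
                                   "vendor_stability": 4, "support_and_docs": 3, "portability": 5},
    }
    for name, score in rank(candidates):
        print(f"{name}: {score:.2f}")
```

Raising an error on missing scores prevents a candidate from looking better simply because a hard-to-assess criterion was skipped.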

Organizations that thrive in the rapidly evolving AI landscape embrace continuous learning and adaptation as core operational principles rather than treating platform changes as occasional disruptions. Building and maintaining relationships with platform providers—through account management, participation in user advisory boards, or regular communication with product teams—provides early visibility into upcoming changes and sometimes opportunities to influence deprecation timelines. Participating in beta programs for new models and platforms allows your organization to evaluate alternatives before they’re widely available, giving you a head start on migration planning if your current platform is eventually deprecated. Staying informed about industry trends and forecasting helps you anticipate which models and platforms are likely to become dominant and which might fade away; this forward-looking perspective allows you to make strategic choices about which platforms to invest in. Building internal expertise in AI model evaluation, deployment, and monitoring ensures your organization isn’t dependent on external consultants or vendors for critical decisions about platform changes. This expertise includes understanding how to evaluate model performance, how to detect drift and bias, how to design systems that can adapt to model changes, and how to make sound technical decisions under uncertainty. Organizations that invest in these capabilities find that platform changes become manageable challenges rather than existential threats, and they’re better positioned to capitalize on improvements in AI technology as new models and platforms emerge.

Most AI platforms provide 12 to 24 months of notice before deprecating a model, though this timeline can vary. The key is to start planning immediately upon announcement rather than waiting until the deadline approaches. Early planning gives you time to thoroughly test alternatives and avoid rushed migrations that introduce bugs or performance issues.

Platform deprecation typically means a model or API version is no longer receiving updates and will eventually be removed. API retirement is the final step where access is completely shut down. Understanding this distinction helps you plan your migration timeline—you may have months of deprecation notice before actual retirement occurs.

Yes, and many organizations do for critical applications. Running multiple models in parallel or maintaining a secondary model as a fallback provides insurance against platform changes. However, this approach adds complexity and cost, so it's typically reserved for mission-critical systems where reliability is paramount.

Start by documenting all AI models and platforms your organization uses, including which systems depend on each one. Monitor official platform announcements, subscribe to deprecation notices, and use monitoring tools to track platform changes. Regular audits of your AI infrastructure help you stay aware of potential impacts.
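The inventory can start as a small structured record per model, which makes the "which systems are affected?" question answerable the moment a notice arrives. `ModelRecord`, `affected_systems`, the criticality scale, and the example provider and model names are all hypothetical; in practice this data might live in a spreadsheet, config repository, or service catalog.

```python
from dataclasses import dataclass, field
from datetime import date

CRITICALITY_ORDER = {"high": 0, "medium": 1, "low": 2}  # hypothetical scale


@dataclass
class ModelRecord:
    provider: str
    model_id: str
    deployed: date
    dependent_systems: list[str] = field(default_factory=list)
    criticality: str = "low"


def affected_systems(inventory, provider, model_id):
    """Return (system, criticality) pairs that use the given model, most critical first."""
    hits = [
        (system, rec.criticality)
        for rec in inventory
        if rec.provider == provider and rec.model_id == model_id
        for system in rec.dependent_systems
    ]
    return sorted(hits, key=lambda pair: (CRITICALITY_ORDER[pair[1]], pair[0]))
```

Sorting by criticality gives the assessment phase a ready-made starting list, and the `deployed` date records when each dependency was introduced.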

Failing to adapt to platform changes can result in service disruptions when platforms shut down access, performance degradation if you're forced to use suboptimal alternatives, regulatory violations if your system becomes non-compliant, and reputational damage from service outages. Proactive adaptation prevents these costly scenarios.

Design your systems with abstraction layers that isolate platform-specific code, maintain relationships with multiple platform providers, evaluate open-source alternatives, and document your architecture to enable easier migrations. These practices reduce your dependency on any single vendor and provide flexibility when platforms change.

Tools like AmICited monitor how AI platforms reference your brand and track platform updates. Additionally, subscribe to official platform newsletters, set up RSS feeds for deprecation announcements, participate in developer communities, and maintain relationships with platform account managers for early warning of changes.

Review your AI platform strategy at least quarterly, or whenever you learn of significant platform changes. More frequent reviews (monthly) are appropriate if you're in a rapidly evolving industry or depend on multiple platforms. Regular reviews ensure you're aware of emerging risks and can plan migrations proactively.

