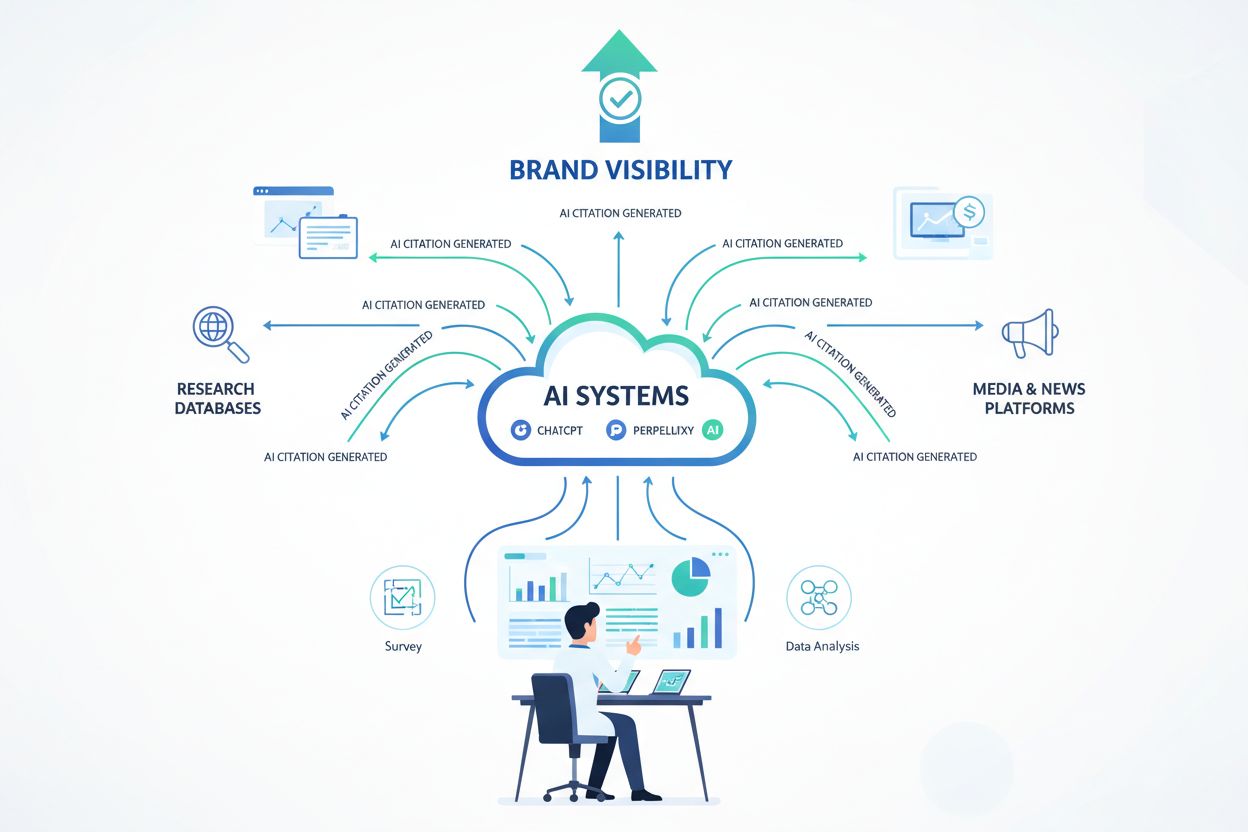

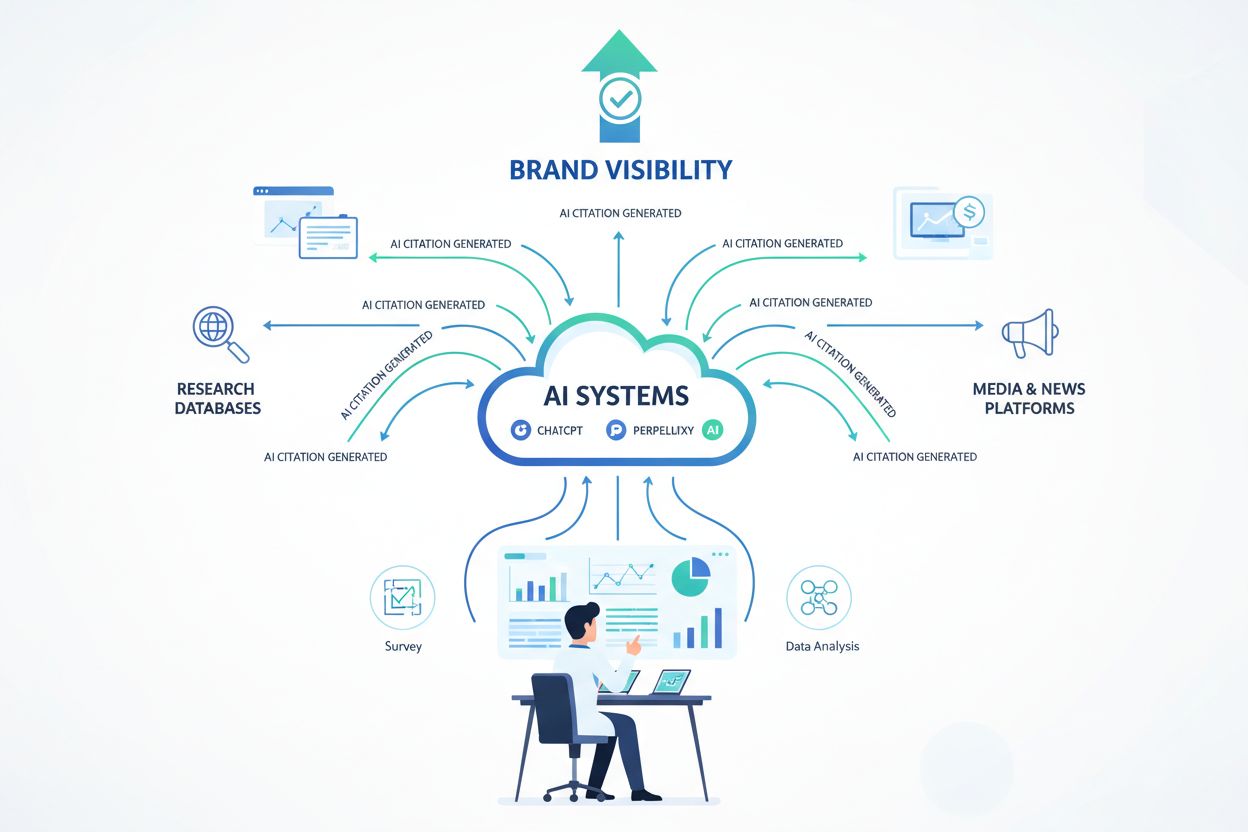

Original Research: The 30–40% Visibility Boost for AI Citations

Discover how original research and first-party data deliver a visibility boost of 30–40% for AI citations in ChatGPT, Perplexity, and Google AI Overview...

Every AI visibility guide says: “Create original research.”

Sounds great in theory. In practice, it’s a MASSIVE investment:

My concerns:

Can we actually compete with HubSpot, McKinsey, and Gartner, who already dominate research citations?

Is the AI visibility payoff real, or are we just creating expensive content that gets buried?

How do we even know if our research is getting cited by AI?

Our situation:

The pitch from our agency: “Original research gets 10x the AI citations of regular content.”

My skepticism: That’s probably true for THEIR clients (Fortune 500). Is it true for mid-market companies like us?

Anyone here actually done original research specifically for AI visibility? What were the results? Was the ROI real?

I’ve managed original research programs for both enterprise ($1B+) and mid-market ($30-100M) companies. Here’s the real picture:

The “10x citations” claim is accurate but misleading:

What actually determines research citation:

| Factor | Impact | Mid-market Reality |
|---|---|---|
| Data quality | High | Achievable if focused |
| Brand authority | Very high | Harder to overcome |
| Sample size | Medium | Can be sufficient |
| Uniqueness of angle | Critical | THIS is your advantage |
| Promotion & distribution | High | Resource-constrained |

Where mid-market can win:

Niche expertise - Don’t research “marketing trends.” Research “marketing trends for manufacturing companies under 500 employees.”

Proprietary data - You have data competitors don’t: customer behavior, usage patterns, support tickets.

Speed - You can publish research on emerging topics while enterprises are still working through their slow internal processes.

The honest ROI for mid-market:

It works. But it’s a 3-year bet, not a campaign.

We’re exactly your size. Started original research 2 years ago. Here’s our journey:

Year 1:

Year 2:

Now (Year 3):

The key insight: We didn’t compete with McKinsey. We competed in our specific niche where McKinsey doesn’t care to go. We became the authority for mid-market companies in our space.

Investment vs. return:

It took patience. But the compounding is real now.

Don’t have $50K? Here’s how we do research on a shoestring:

Low-cost research methods:

Customer survey research

Proprietary data analysis

Expert interview compilations

Trend analysis

What we’ve learned:

| Method | AI Citation Rate | Cost |
|---|---|---|
| Big survey report | High | $$$$ |
| Customer-based research | Medium-High | $$ |
| Proprietary data analysis | Medium-High | $ |
| Expert interviews | Medium | $ |
| Public data analysis | Low-Medium | $ |

The key: Make it genuinely useful and unique. A well-done $5K study can outperform a lazy $50K study.

Let me share what research actually gets cited by AI:

High citation content patterns:

What we’ve measured using Am I Cited:

Content with original research statistics: 4.3x citation rate

Content with third-party statistics: 1.8x citation rate

Content with no statistics: 1x baseline
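For anyone who wants to reproduce this kind of comparison on their own content, here is a minimal sketch of the arithmetic behind "Nx the baseline" figures. It assumes you can count, per content group, how many pages were cited by AI at least once and how many pages exist in total. The group names, the counts, and the function name `citation_multiples` are all made up for illustration; they are not the data behind the figures quoted above, and this is not necessarily how any particular tracking tool calculates its numbers.

```python
def citation_multiples(
    counts: dict[str, tuple[int, int]], baseline_key: str
) -> dict[str, float]:
    """Express each group's citation rate as a multiple of the baseline group.

    counts maps a group name to (cited_pages, total_pages).
    """
    base_cited, base_total = counts[baseline_key]
    if base_cited <= 0 or base_total <= 0:
        raise ValueError("baseline group needs at least one cited page")
    baseline_rate = base_cited / base_total

    multiples = {}
    for group, (cited, total) in counts.items():
        if total <= 0:
            raise ValueError(f"group {group!r} has no pages")
        # Rate for this group divided by the baseline rate, rounded to one decimal.
        multiples[group] = round((cited / total) / baseline_rate, 1)
    return multiples


# Hypothetical counts, for illustration only.
example_counts = {
    "no_statistics": (20, 400),
    "third_party_statistics": (30, 300),
    "original_research": (45, 150),
}

if __name__ == "__main__":
    print(citation_multiples(example_counts, baseline_key="no_statistics"))
```

Comparing rates rather than raw citation counts matters here: a group with few pages can still have the highest multiple.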

BUT here’s what matters more than quantity:

Extractability - Can AI easily pull your statistic? Format matters:

Verification - Can AI cross-reference your claim?

Uniqueness - Is this data available elsewhere?

My advice: Before investing in research, audit what unique data you ALREADY have. Most companies sit on goldmines they don’t realize.

I worked at one of the big research firms. Let me demystify how we operated:

The enterprise research machine:

What mid-market can learn:

They’re not as smart as you think - A lot of enterprise research is recycled surveys with big sample sizes. Insights are often shallow.

They can’t go niche - Gartner won’t write about “marketing automation for pet supply e-commerce.” You can.

They’re slow - Enterprise research takes 6-18 months. You can ship in 6-8 weeks.

They’re expensive - Their research requires massive investment to be profitable. Yours just needs to be useful.

The real competition: You’re not competing with McKinsey for “marketing trends.” You’re competing with other mid-market companies for your specific niche queries.

Most of your actual competitors probably aren’t doing original research at all. That’s your opportunity.

Strategic targeting: Find 5-10 specific questions AI gets asked about your space. Create research that answers those exact questions. You don’t need to boil the ocean.

Let me share a cautionary tale about research done wrong.

Our mistake:

Spent $80K on a “State of the Industry” report.

Result:

What went wrong:

What we learned:

The research itself was fine. The strategy was wrong.

If we did it again:

It’s not just about doing research. It’s about doing research AI can find, parse, and cite.

Great failure analysis. Here’s a framework to avoid those mistakes:

The AI-Optimized Research Framework:

Step 1: Niche selection

Step 2: Format optimization

Step 3: Distribution strategy

Step 4: Measurement

Step 5: Update cycle

The 80/20: 80% of AI citations come from 20% of your research. Find what’s working and double down.
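One way to find "what's working" is a simple Pareto pass over your citation counts. The sketch below assumes you already have a citation count per research piece from whatever tracking you use; the piece names, the counts, and the function name `top_contributors` are hypothetical.

```python
def top_contributors(citations: dict[str, int], share: float = 0.8) -> list[str]:
    """Return the most-cited pieces that together cover `share` of all citations."""
    total = sum(citations.values())
    if total == 0:
        return []

    selected: list[str] = []
    running = 0
    # Walk pieces from most-cited to least-cited until the share is reached.
    for title, count in sorted(citations.items(), key=lambda kv: kv[1], reverse=True):
        selected.append(title)
        running += count
        if running >= share * total:
            break
    return selected


# Hypothetical citation counts per research piece, for illustration only.
example_citations = {
    "niche benchmark report": 60,
    "customer survey": 25,
    "expert roundup": 10,
    "trend analysis": 5,
}

if __name__ == "__main__":
    print(top_contributors(example_citations))
```

If the returned list is short relative to everything you have published, those are the pieces to update and extend first.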

You don’t have to go big immediately. Here’s an incremental approach:

Quarter 1: Micro-research

Quarter 2: Expand if it works

Quarter 3: Full research if validated

This approach:

Our results with this approach:

Each phase funded the next. Much easier to get buy-in than asking for $50K upfront.

This thread changed my thinking. Here’s my new plan:

What I was wrong about:

Competing with giants - We don’t have to. We can own our niche.

Needing massive budget - Start small, validate, then invest.

Research = PDFs - Web-first, HTML content, extractable statistics.

One-and-done - It’s a multi-year program, not a campaign.

Our new approach:

Phase 1 (Q1): Validate the concept

Phase 2 (Q2): Expand if it works

Phase 3 (Q3-Q4): Full program if validated

The mental shift: We’re not creating “content.” We’re building a citation asset that compounds over time. The ROI calculation isn’t first-year. It’s years 2 and 3.
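To make the "it's years 2 and 3" point concrete for budget conversations, here is a rough sketch of cumulative ROI by year. The formula is the usual (total return minus total cost) divided by total cost. The yearly cost and return figures are invented for illustration and do not come from anyone's results in this thread; `cumulative_roi` is a hypothetical helper name.

```python
def cumulative_roi(costs: list[float], returns: list[float]) -> list[float]:
    """Cumulative ROI at the end of each year: (total return - total cost) / total cost."""
    if len(costs) != len(returns):
        raise ValueError("costs and returns must cover the same number of years")

    roi_by_year: list[float] = []
    total_cost = 0.0
    total_return = 0.0
    for cost, ret in zip(costs, returns):
        total_cost += cost
        total_return += ret
        if total_cost == 0:
            raise ValueError("cumulative cost must be positive to compute ROI")
        roi_by_year.append(round((total_return - total_cost) / total_cost, 2))
    return roi_by_year


# Hypothetical program: heavier spend in year 1, returns arriving later.
example_costs = [50_000, 30_000, 30_000]
example_returns = [10_000, 50_000, 120_000]

if __name__ == "__main__":
    print(cumulative_roi(example_costs, example_returns))
```

With these made-up inputs the program is underwater through year 2 and only turns positive in year 3, which is the shape of the bet described above.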

Specific niche we’re targeting: [Our specific industry segment] - a space where big players don’t focus but where our customers desperately want data.

Thanks everyone. This is actually executable now.
