How do you track when competitors are mentioned in AI chatbots? Need competitive intelligence for AI

Community discussion on tracking competitor mentions in AI chatbots. Strategies for competitive intelligence in the AI search era.

I’ve been doing competitive intelligence for 10 years. I know how to benchmark against competitors in traditional search, paid media, social - you name it.

But AI visibility benchmarking? I feel like I’m making it up as I go.

What we’re currently doing (and it feels inadequate):

What I want to know:

I know I’m not alone in figuring this out. What’s working for everyone else?

Let me share the framework I use with clients:

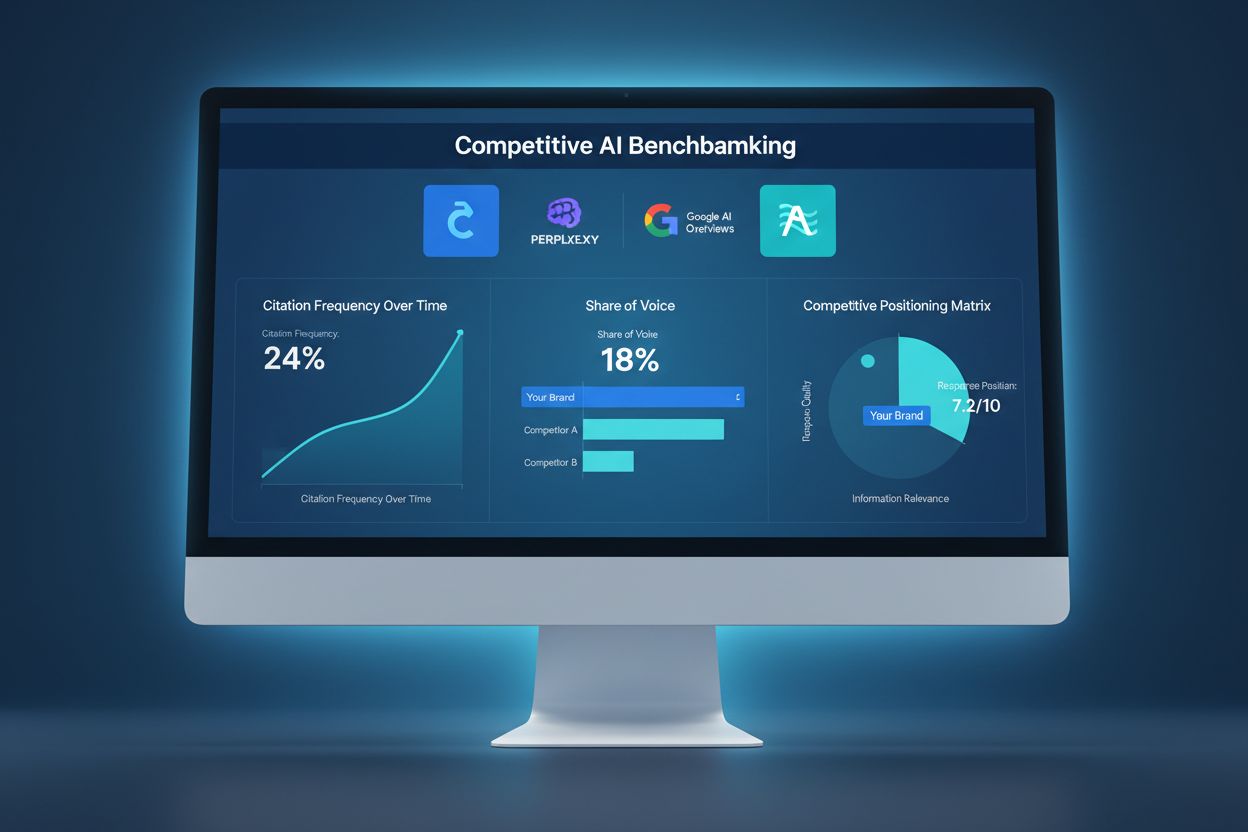

The 5 Core Metrics for AI Competitive Benchmarking:

| Metric | What It Measures | Target Benchmark |
|---|---|---|
| Citation Frequency Rate (CFR) | % of relevant queries where you appear | 15-30% for established brands |
| Response Position Index (RPI) | Where you appear in response (1st, 2nd, etc.) | 7.0+ on 10-point scale |
| Competitive Share of Voice (CSOV) | Your mentions vs total competitor mentions | 25%+ in your category |
| Sentiment Score | How AI describes you (positive/neutral/negative) | 80%+ positive |
| Source Diversity Index | How many AI platforms cite you | 4+ platforms |

How to calculate these:
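A minimal sketch of how CFR and CSOV could be computed from the definitions in the table, assuming you already have one saved response text per relevant query. The function names, the sample brands, and the choice to count your own mentions in the CSOV denominator are illustrative assumptions, not a prescribed method. Matching is a plain case-insensitive substring check, which will miscount brands whose names appear inside other words.

```python
from collections import Counter


def citation_frequency_rate(responses, brand):
    """Share of responses (one per relevant query) that mention the brand."""
    if not responses:
        return 0.0
    hits = sum(1 for text in responses if brand.lower() in text.lower())
    return hits / len(responses)


def competitive_share_of_voice(responses, brands):
    """Each brand's mentions divided by total mentions of all tracked brands.

    Assumption: the denominator includes your own brand as well as competitors.
    """
    counts = Counter()
    for text in responses:
        lowered = text.lower()
        for name in brands:
            counts[name] += lowered.count(name.lower())
    total = sum(counts.values())
    return {name: (counts[name] / total if total else 0.0) for name in brands}


# Hypothetical responses and brand names, for illustration only.
responses = [
    "For small teams, Acme and Globex are both solid choices.",
    "Globex is the most common recommendation.",
    "Initech is popular, and Acme is a good budget option.",
    "Most reviewers suggest Globex.",
]
cfr = citation_frequency_rate(responses, "Acme")
csov = competitive_share_of_voice(responses, ["Acme", "Globex", "Initech"])
```

With this sample data, "Acme" appears in two of four responses, so its CFR is 50%, and it accounts for two of six total brand mentions.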

What “winning” looks like:

Market leaders: 35-45% CSOV; strong competitors: 20-30% CSOV; emerging brands: 5-15% CSOV.

Manual testing won’t give you statistical significance. You need automated monitoring across hundreds of queries.
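To make the sample-size point concrete, here is a rough sketch that puts a confidence interval around an observed CFR. It uses the Wilson score interval, which is one common choice for proportions and is an assumption here rather than something the framework specifies. The function name and the query counts are hypothetical.

```python
import math


def wilson_interval(hits, n, z=1.96):
    """Wilson score interval for a proportion; z=1.96 is roughly 95% confidence."""
    if n == 0:
        return (0.0, 1.0)
    p = hits / n
    denom = 1 + z * z / n
    center = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return (max(0.0, center - half), min(1.0, center + half))


small = wilson_interval(4, 20)     # 20% CFR observed over 20 queries
large = wilson_interval(100, 500)  # 20% CFR observed over 500 queries
```

The same observed 20% gives an interval of roughly 8% to 42% over 20 queries, but roughly 17% to 24% over 500, so a small manual sample cannot distinguish an emerging brand from a strong competitor.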

This framework is exactly what I needed.

Question: How do you define the “relevant queries” you test against? Do you work from a fixed query set or expand it over time?

Both. Here’s my approach:

Core query set (fixed, for trend tracking):

Expansion set (dynamic, for discovery):

Query categorization:

Am I Cited lets you set up both fixed and dynamic query tracking. I usually do 60% fixed core + 40% dynamic expansion.
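If you are assembling query runs yourself, the 60/40 split could be sketched like this. This is a hypothetical helper and not how any particular tool does it: the core portion is taken in a fixed order so trends stay comparable between runs, and the expansion portion is sampled fresh each time.

```python
import random


def build_query_run(core, expansion, total, core_share=0.6, seed=None):
    """Return (core_part, expansion_part) for one benchmarking run.

    core_share=0.6 mirrors the 60% fixed / 40% dynamic split described above.
    """
    rng = random.Random(seed)
    n_core = min(len(core), round(total * core_share))
    n_exp = min(len(expansion), total - n_core)
    # Core queries are always the same ones, so results stay comparable.
    core_part = core[:n_core]
    # Expansion queries rotate between runs to surface new topics.
    expansion_part = rng.sample(expansion, n_exp)
    return core_part, expansion_part


# Hypothetical query lists, for illustration only.
core = [f"core query {i}" for i in range(6)]
expansion = [f"expansion query {i}" for i in range(10)]
core_part, expansion_part = build_query_run(core, expansion, total=10, seed=1)
```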

Adding the data science perspective:

Your AI competitors may not be who you think.

We assumed our AI competitors were the same as our traditional competitors. We were wrong.

How we identified actual AI competitors:

What we found:

The lesson:

AI competitors are whoever AI thinks is relevant to the queries your customers ask. That may not match your traditional competitive set.

Run the analysis. Let data define your AI competitive landscape.
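One simple way to run that analysis, sketched under the assumption that you have saved response texts and a broad candidate list of brand names to look for (extracting unknown brand names from free text is a harder problem and is not attempted here). All names and data below are made up for illustration.

```python
from collections import Counter


def rank_mentioned_brands(responses, candidates):
    """Count how many responses mention each candidate, most frequent first."""
    counts = Counter()
    for text in responses:
        lowered = text.lower()
        for name in candidates:
            # Case-insensitive substring match; brands never mentioned are omitted.
            if name.lower() in lowered:
                counts[name] += 1
    return counts.most_common()


assumed = {"Globex", "Initech"}  # the traditional competitive set
candidates = ["Globex", "Initech", "Umbrella", "Hooli"]
responses = [
    "Globex and Hooli lead this category.",
    "Hooli is the usual pick; Umbrella is a newer option.",
    "Consider Hooli or Globex.",
]
ranking = rank_mentioned_brands(responses, candidates)
unexpected = [name for name, _ in ranking if name not in assumed]
```

In this toy data, "Hooli" is mentioned most often despite not being in the assumed set, while "Initech" never appears at all.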

Frequency matters for benchmarking.

What we learned the hard way:

We did monthly benchmarks. Thought we were doing fine. Then a competitor published a major content series, and by the time we noticed in our next monthly check, they’d pulled ahead significantly.

Current approach:

What triggers an immediate deep dive:

AI visibility changes faster than traditional SEO rankings. Monthly isn’t frequent enough for meaningful competitive intelligence.

Enterprise perspective on scaling this:

The challenge: We track 50+ competitors across 8 product lines. Manual benchmarking is impossible.

Our stack:

What we report to leadership:

The key insight:

AI visibility is now part of competitive intelligence, not a separate discipline. It goes in the same reports as market share, win/loss analysis, and brand perception data.

Startup take:

We can’t afford comprehensive competitive monitoring tools yet. Here’s our scrappy approach:

Weekly manual process (2 hours):

Monthly analysis (2 hours):

What we track:

It’s not fancy, but it’s better than nothing. As we grow, we’ll invest in proper tooling.

For agency folks serving multiple clients:

Benchmark framework we use:

Industry-specific observations:

What clients actually want to know:

Frame benchmarks around these questions, not vanity metrics.

On visualization:

What works for communicating AI competitive benchmarks:

What doesn’t work:

Dashboard design tip:

Start with the answer to “Are we winning or losing in AI?” Everything else supports that top-line answer.

This thread has been incredibly helpful. Here’s my synthesis:

Framework I’m implementing:

Immediate next steps:

Key mindset shift:

AI visibility is competitive intelligence, not a separate discipline. It belongs in the same conversations as market share and brand perception.

Thanks everyone for contributing. This community is incredible.
