Keyword Density

Keyword density measures how often a keyword appears in content relative to total word count. Learn optimal percentages, best practices, and how it impacts AI s...

Discover why keyword density no longer matters for AI search. Learn what ChatGPT, Perplexity, and Google AI Overviews actually prioritize in content ranking and citation.

Keyword density has minimal impact on AI systems and modern search engines. One 2025 study found that top-ranking pages average just 0.04% keyword density, while AI models prioritize semantic meaning, topical authority, and content depth over keyword frequency. Focus on natural language and comprehensive topic coverage instead.

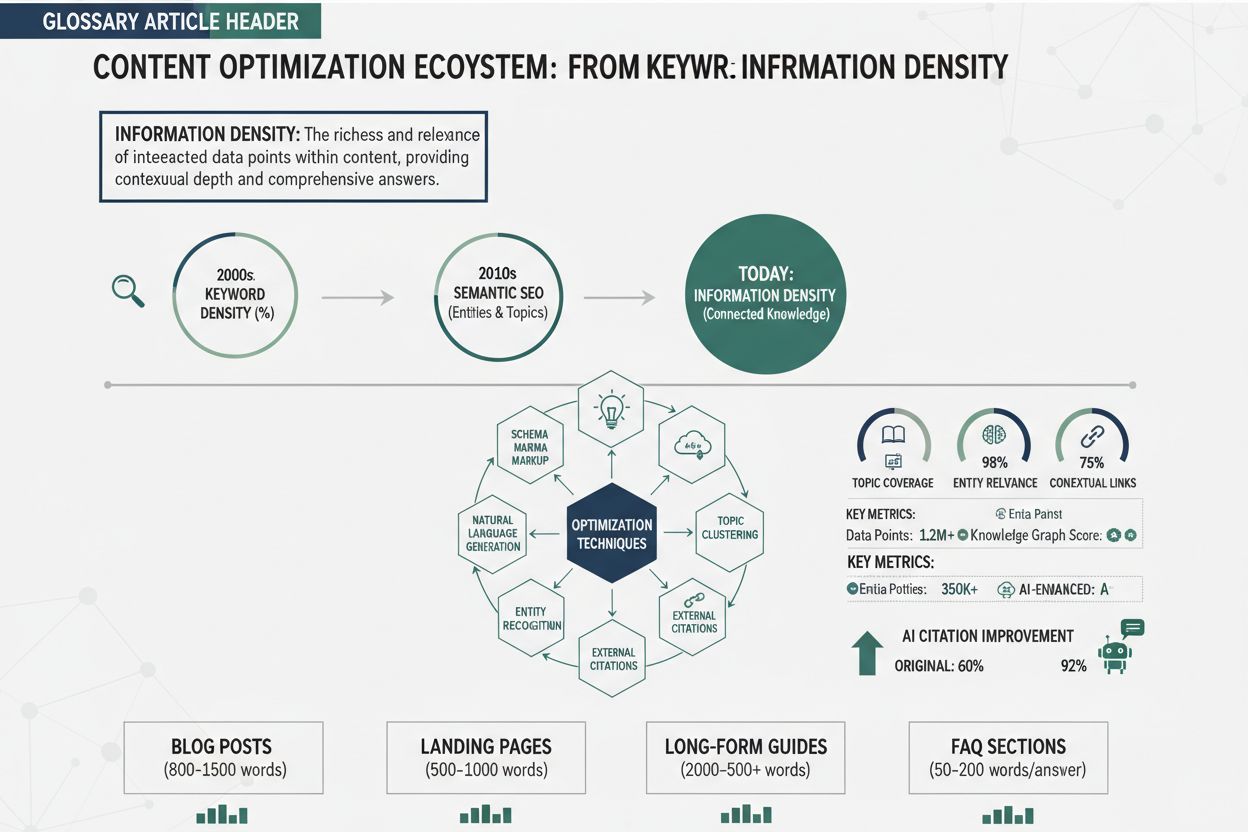

Keyword density refers to the percentage of times a particular keyword appears within a web page compared to the total word count. Historically, this metric was central to SEO strategy—the more frequently a keyword appeared, the more relevant a page seemed for that term. However, the landscape has fundamentally shifted with the rise of artificial intelligence, large language models (LLMs), and semantic search. Today, keyword density is no longer a primary ranking factor for Google, ChatGPT, Perplexity, Google AI Overviews, or Claude. Instead, these systems evaluate content based on semantic meaning, topical authority, entity relationships, and user intent. Understanding this shift is critical for anyone creating content that needs to rank in traditional search results and be cited by AI systems. The evolution from keyword-focused optimization to meaning-focused optimization represents one of the most significant changes in digital content strategy over the past decade.
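The definition above reduces to (keyword occurrences / total words) × 100. Below is a minimal Python sketch of that calculation. The tokenizer (lowercase ASCII letters, digits, and straight apostrophes) and the choice to count each multi-word phrase match once are assumptions, since SEO tools differ on both.

```python
import re


def tokenize(text: str) -> list[str]:
    # Lowercase, then keep runs of ASCII letters, digits, and straight apostrophes as words.
    return re.findall(r"[a-z0-9']+", text.lower())


def keyword_density(text: str, keyword: str) -> float:
    """Keyword density as a percentage: (occurrences / total words) * 100.

    A multi-word keyword is matched as a consecutive run of tokens and each
    match counts once. Some tools instead multiply matches by the phrase
    length; this sketch does not.
    """
    words = tokenize(text)
    phrase = tokenize(keyword)
    if not words or not phrase:
        return 0.0
    n = len(phrase)
    occurrences = sum(
        1 for i in range(len(words) - n + 1) if words[i:i + n] == phrase
    )
    return occurrences / len(words) * 100
```

For example, the text "Retirement planning starts early. Good retirement planning balances savings and income." contains 2 matches of "retirement planning" in 11 words, about 18.2%. Short, repetitive passages inflate the figure quickly, which is one reason a single percentage says little about quality.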

The history of search ranking explains why keyword density has become obsolete. In the early 2000s, search engines like Google relied heavily on keyword frequency and backlinks as primary ranking signals. This led to widespread keyword stuffing: the practice of overloading content with keywords in unnatural ways to manipulate rankings. Pages repeated phrases awkwardly, compromising readability and user experience. Google’s former Head of Webspam, Matt Cutts, famously said that there are “diminishing returns” to keyword repetition, signaling the company’s shift away from this metric. In 2013, Google introduced Hummingbird, an algorithm update that prioritized search intent over exact keyword matching. It was followed by RankBrain (2015), BERT (rolled out in Google Search in 2019), and MUM (2021), each of which improved Google’s ability to understand context, semantics, and the relationships between concepts. Modern AI systems analyze the meaning behind words rather than counting their frequency. A 2025 study of 1,536 Google search results found no consistent correlation between keyword density and ranking position: top-10 results averaged just 0.04% keyword density, compared with 0.07-0.08% in lower-ranking positions. Correlational data like this cannot prove that lowering density improves rankings, but it does indicate that higher keyword density confers no ranking advantage.
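Claims about density and ranking are correlational. To check them against your own sample of search results, Spearman’s rank correlation is a common choice because it does not assume a linear relationship. The sketch below is a plain-Python illustration with hypothetical function names; it is not the method used by the study cited above.

```python
import math


def average_ranks(values: list[float]) -> list[float]:
    """Rank values 1..n in ascending order; tied values share their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Advance j to the end of a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # i and j are 0-based positions
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman(x: list[float], y: list[float]) -> float:
    """Spearman's rho, computed as the Pearson correlation of average ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two samples of equal length, at least 2 items each")
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    if sx == 0 or sy == 0:
        return math.nan  # undefined when one sample is constant
    return cov / (sx * sy)
```

Pass keyword densities as `x` and ranking positions as `y`. Because position 1 is the top result, a positive rho would mean higher density tends to go with worse positions, while a value near zero would match a “no consistent correlation” finding.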

| Aspect | Keyword Density | Semantic Depth | Topical Authority |
|---|---|---|---|
| Definition | Percentage of times a keyword appears in content | Degree to which content comprehensively covers a topic and its subtopics | Breadth of expertise demonstrated across a subject area |
| How It’s Measured | (Keyword frequency / Total words) × 100 | Entity coverage, relationship mapping, content clustering | Volume and quality of related content across the domain |
| Relevance to AI | Minimal to none | Critical for AI citation and ranking | Essential for AI system trust |
| Recommended Range | 0.5-2% (though no strict rule) | Deep, interconnected content clusters | Multiple comprehensive articles per topic |
| Impact on Rankings | Negligible; can harm if excessive | Direct positive impact on visibility | Strong positive impact on AI citations |
| User Experience | Can reduce readability if forced | Improves user satisfaction and engagement | Builds long-term authority and trust |
| AI System Preference | Ignored or penalized | Highly valued for citations | Prioritized for source selection |

This comparison reveals why content creators must fundamentally rethink their optimization strategy. Keyword density is a mechanical metric that doesn’t reflect how modern AI systems evaluate content. Semantic depth and topical authority, by contrast, directly influence whether AI systems like ChatGPT, Perplexity, and Google AI Overviews will cite your content as a trusted source.

Large language models and AI search engines use neural networks to understand what content means rather than counting keywords. Google’s BERT (Bidirectional Encoder Representations from Transformers) interprets each word in the context of all the other words in a sentence, not just its position or frequency. MUM (Multitask Unified Model) goes further, understanding information across multiple languages and formats simultaneously. Google also connects the entities it recognizes to its Knowledge Graph, a structured representation of entities, their attributes, and the relationships between them. When you search for “retirement planning,” AI systems don’t look for the pages with the highest keyword density for that phrase. Instead, they identify pages that comprehensively cover related entities like “401(k),” “Roth IRA,” and “pension plans,” and that explain attributes like “contribution limits,” “tax treatment,” and “employer matching.” Research from BrightEdge found that 82.5% of AI Overview citations point to “deep pages” located two or more clicks from the homepage: pages with substantial, interconnected content rather than surface-level summaries. This suggests that AI systems favor depth and comprehensiveness over keyword frequency. When selecting sources for an answer, Google’s AI search features use what Google calls a “query fan-out” technique, splitting a complex query into related subtopics, searching for each, and combining the supporting pages into a coherent response. Pages with strong entity coverage and subtopic depth are therefore more likely to be selected as sources.
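To make the difference between frequency and coverage concrete, the sketch below checks which of a topic’s related entities a page mentions at all, ignoring how often each appears. The entity list comes from the retirement-planning example above; the function names and the naive plural handling are hypothetical, and systems such as BERT or MUM work on learned representations, not string matching.

```python
import re

# Hypothetical related entities for "retirement planning", taken from the
# example in the text. A real system would derive these from a knowledge graph.
RETIREMENT_ENTITIES = {
    "401(k)", "Roth IRA", "pension plan",
    "contribution limit", "tax treatment", "employer matching",
}


def mentions(text: str, term: str) -> bool:
    # Case-insensitive whole-phrase match; re.escape handles "(" and ")".
    # The optional trailing "s" is a naive plural, e.g. "pension plans".
    pattern = r"(?<!\w)" + re.escape(term.lower()) + r"s?(?!\w)"
    return re.search(pattern, text.lower()) is not None


def entity_coverage(text: str, entities: set[str]) -> tuple[float, set[str]]:
    """Return (share of entities mentioned at least once, entities not mentioned)."""
    if not entities:
        raise ValueError("entities must not be empty")
    missing = {e for e in entities if not mentions(text, e)}
    return (len(entities) - len(missing)) / len(entities), missing
```

A page reading “Compare a 401(k) with a Roth IRA, including contribution limits and tax treatment.” covers four of the six entities and is missing “pension plan” and “employer matching,” regardless of how many times any single term repeats.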

Keyword stuffing is explicitly penalized by Google and other search systems. Google’s spam policies describe keyword stuffing as “filling a web page with keywords or numbers in an attempt to manipulate rankings,” and violations can lead to manual actions or algorithmic demotion. Content that artificially repeats keywords reads unnaturally to both humans and AI systems, and modern language models, trained on large amounts of natural text, can recognize forced repetition that prioritizes search engine manipulation over user value. When AI systems encounter keyword-stuffed content, they tend to deprioritize it because it signals low-quality, user-hostile content. Keyword stuffing also tends to hurt engagement: users bounce quickly from pages that don’t read naturally. Metrics such as time on page, scroll depth, and click-through rate are widely used as proxies for content quality, although Google has not published exactly how each one feeds into ranking. The risk-reward calculation is clear: trying to game rankings through keyword density offers minimal benefit and a substantial risk of penalties.
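A rough way to audit your own drafts for forced repetition is to count repeated word sequences. The sketch below flags two-word phrases that occur at least three times and exceed 3% of all words. Both thresholds are arbitrary assumptions chosen for illustration, since Google does not publish a density cutoff, and this is not how any search engine actually detects spam.

```python
import re
from collections import Counter


def repeated_phrases(text: str, n: int = 2, min_count: int = 3,
                     max_share: float = 0.03) -> dict[str, float]:
    """Flag n-word phrases that occur at least min_count times AND whose
    count exceeds max_share of all words in the text.

    Both thresholds are arbitrary, illustrative assumptions; search engines
    do not publish a keyword-density cutoff.
    """
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < n:
        return {}
    grams = Counter(" ".join(words[i:i + n]) for i in range(len(words) - n + 1))
    total = len(words)
    return {
        gram: count / total
        for gram, count in grams.items()
        if count >= min_count and count / total > max_share
    }
```

The `min_count` floor matters: on a short passage every phrase is a large share of the words, so a share threshold alone would flag perfectly natural sentences.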

Each major AI system has distinct characteristics, but they share common evaluation criteria that have nothing to do with keyword density. ChatGPT (with search enabled) appears to prioritize authoritative sources, comprehensive coverage, and recency; the pages it cites tend to answer the query thoroughly with clear, well-organized information. Perplexity similarly seems to value topical depth, expert credentials, and original research, favoring pages that demonstrate genuine expertise and provide unique insights rather than generic summaries. Google AI Overviews (formerly SGE) builds on Google’s existing ranking systems but adds further evaluation of comprehensiveness and trustworthiness. Pages that appear in AI Overviews typically have strong E-E-A-T signals (Experience, Expertise, Authoritativeness, Trustworthiness), clear author credentials, and interconnected content clusters. Claude (Anthropic’s AI) emphasizes accuracy, nuance, and original analysis; when it references sources, it tends to favor pages that provide balanced perspectives and acknowledge complexity over oversimplified answers. Across all these systems, the common thread is clear: semantic meaning, topical authority, and content depth matter far more than keyword frequency. If you want your content cited by AI systems, focus on becoming a recognized authority on your topic through comprehensive, interconnected content rather than optimizing for keyword density.

Topical authority has replaced keyword density as the key measure of relevance for both traditional search and AI systems. Topical authority refers to the level of expertise, credibility, and trustworthiness a website demonstrates on a specific subject. Rather than repeating keywords, you build topical authority by creating extensive, well-structured content that thoroughly addresses a topic and its related subtopics. This approach involves creating content clusters: interconnected networks of pages organized around a central pillar topic. For example, a financial services site might create a pillar page on “retirement planning” supported by sub-pillars on “401(k) plans,” “Roth IRAs,” and “pension plans,” with cluster pages addressing specific questions like “401(k) contribution limits,” “Roth IRA tax benefits,” and “401(k) vs. Roth IRA comparison.” This structure signals to both search engines and AI systems that your site has deep expertise in retirement planning. Internal links within these clusters reinforce entity relationships and help AI systems understand how concepts connect. When you build topical authority, you naturally incorporate relevant keywords in context, not through forced repetition but through comprehensive coverage of related topics. A guide of 3,000 or more words on retirement planning will naturally use terms like “401(k),” “contribution,” “tax,” and “employer match” many times, yet the density of any one term stays low (typically 0.5-1.5%, or roughly 15 to 45 mentions in 3,000 words) because the content is focused on providing value rather than on keyword optimization.
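The pillar-and-cluster structure above can be modeled as a tree, with internal links running from each parent to its children and back. The sketch below assumes that simple two-way linking rule; the `Page` class and `internal_links` function are hypothetical, and real sites often add sibling links (for instance, from the 401(k) vs. Roth IRA comparison to the Roth IRA sub-pillar) on top of it.

```python
from dataclasses import dataclass, field


@dataclass
class Page:
    title: str
    children: list["Page"] = field(default_factory=list)


def internal_links(page: Page) -> list[tuple[str, str]]:
    """Return (source, target) pairs: each parent links to each child, and
    each child links back to its parent, recursively through the tree."""
    links: list[tuple[str, str]] = []
    for child in page.children:
        links.append((page.title, child.title))
        links.append((child.title, page.title))
        links.extend(internal_links(child))
    return links


# The retirement-planning cluster described in the text.
cluster = Page("Retirement planning", [
    Page("401(k) plans", [
        Page("401(k) contribution limits"),
        Page("401(k) vs. Roth IRA comparison"),
    ]),
    Page("Roth IRAs", [Page("Roth IRA tax benefits")]),
    Page("Pension plans"),
])
```

The example cluster produces 12 links: six between the pillar and its three sub-pillars, and six between the sub-pillars and their cluster pages.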

Different AI platforms have slightly different citation patterns, but none prioritize keyword density. Google AI Overviews tend to cite pages that rank well in traditional Google search, which means they favor pages with strong topical authority, E-E-A-T signals, and comprehensive coverage. Pages with clear structure (proper heading hierarchy, schema markup, and organized information) are more likely to be selected. Perplexity appears to value original research, expert credentials, and unique perspectives. Pages that cite studies, include expert quotes, or present original data are frequently cited. ChatGPT (with search) prioritizes recency for time-sensitive topics and authority for evergreen topics. Pages from established, trusted domains are more likely to be cited. Claude emphasizes accuracy and nuance, often citing pages that acknowledge complexity and present balanced viewpoints. To optimize for citation across these platforms, focus on: creating original, research-backed content; establishing clear author credentials; building interconnected content clusters that demonstrate topical depth; using structured data (schema markup) to clarify content meaning; and maintaining high accuracy standards with proper citations and fact-checking. None of these strategies involve optimizing keyword density.
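Author credentials and citations can be stated explicitly with schema.org markup. Below is a minimal sketch that emits an `Article` object as JSON-LD using the schema.org properties `headline`, `datePublished`, `author` (a `Person` with `name` and `jobTitle`), and `citation`. The helper names and sample values are placeholders, and Google’s structured-data documentation lists which properties it actually uses.

```python
import json


def article_jsonld(headline: str, author_name: str, author_title: str,
                   date_published: str, citations: list[str]) -> str:
    """Build a schema.org Article object serialized as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "datePublished": date_published,  # ISO 8601, e.g. "2025-01-15"
        "author": {
            "@type": "Person",
            "name": author_name,
            "jobTitle": author_title,  # states the author's credentials
        },
        # schema.org CreativeWork property for works this article cites.
        "citation": citations,
    }
    return json.dumps(data, indent=2, ensure_ascii=False)


def script_tag(jsonld: str) -> str:
    # Escape "</" so a value containing "</script>" cannot close the tag early;
    # "\/" is a valid JSON escape for "/", so the JSON still parses the same.
    safe = jsonld.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{safe}\n</script>'
```

The resulting `<script>` element is typically placed in the page’s `<head>`; values such as the author name and cited URLs would come from your CMS rather than being hard-coded.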

Transitioning from keyword-density thinking to semantic optimization requires a fundamental shift in content strategy. Start by identifying your core topics and mapping them to entities that Google recognizes in its Knowledge Graph. For each core topic, create a pillar page that provides comprehensive overview coverage, then develop sub-pillars and cluster pages that address specific aspects, comparisons, and user questions. Use natural language throughout—write for humans first, search engines second. Include your target keywords naturally within the context of helpful, comprehensive content, but don’t force them. Incorporate semantic variations and related terms to help AI systems understand the full scope of your topic. For example, instead of repeating “retirement planning” dozens of times, use variations like “retirement strategy,” “retirement savings,” “retirement accounts,” and “retirement income planning.” Structure your content with clear heading hierarchies (H1, H2, H3), bulleted lists, comparison tables, and relevant visuals. This formatting helps both users and AI systems parse your content more effectively. Implement schema markup (Article, FAQ, HowTo, Product, etc.) to explicitly tell search engines what your content is about. Use internal linking strategically to connect related pages within your content clusters, using descriptive anchor text that clarifies the relationship between pages. Monitor your performance using Google Search Console to track which queries drive impressions and clicks, and use analytics to measure engagement metrics like time on page and scroll depth. Tools like AmICited can help you track where your content appears across AI platforms, giving you visibility into which pages are being cited and which topics need more depth.
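Descriptive anchor text is easy to audit mechanically. The sketch below uses Python’s standard-library `html.parser` to collect every link on a page and flag anchors whose visible text is generic. The `GENERIC_ANCHORS` list is a hypothetical starting point, not an official list from any search engine.

```python
from html.parser import HTMLParser
from typing import Optional

# Hypothetical starting list of generic anchor phrases; extend it for your site.
GENERIC_ANCHORS = {"click here", "here", "read more", "learn more", "more", "this page"}


class AnchorCollector(HTMLParser):
    """Collect (href, visible text) for every <a> element that has an href."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[tuple[str, str]] = []
        self._href: Optional[str] = None
        self._text: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:  # only collect text inside a link
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            text = " ".join("".join(self._text).split())  # collapse whitespace
            self.links.append((self._href, text))
            self._href = None


def generic_anchors(html: str) -> list[tuple[str, str]]:
    """Return links whose anchor text is in GENERIC_ANCHORS (case-insensitive)."""
    parser = AnchorCollector()
    parser.feed(html)
    parser.close()
    return [(href, text) for href, text in parser.links
            if text.lower() in GENERIC_ANCHORS]
```

Text inside nested tags such as `<b>` is still collected, so `<a href="/pensions">Read <b>More</b></a>` is reported as “Read More.” Each flagged link is a candidate for anchor text that names the target page’s topic, such as “Roth IRA tax benefits.”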

The evolution from SEO to AI-driven search is leading to a new discipline called Generative Engine Optimization (GEO). While traditional SEO focused on ranking for keywords, and AI Overviews Optimization (AIO) focuses on appearing in AI-generated answers, GEO takes a broader view of how content is discovered, retrieved, and synthesized by AI systems. In the GEO era, content strategy must account for how large language models retrieve and combine information from multiple sources. This means building semantically rich content ecosystems where pages are interconnected through clear entity relationships and topical depth. The pages most likely to be cited in AI-generated answers are those that demonstrate comprehensive coverage of a topic, clear expertise, and trustworthy information. Keyword density is irrelevant to this future. What matters is whether your content can be easily retrieved, understood, and cited by AI systems as an authoritative source. As AI systems become more sophisticated, they will increasingly prioritize content that demonstrates genuine expertise and provides unique value. Sites that invest in building topical authority and semantic depth now will have a durable competitive advantage as search continues to evolve. The shift away from keyword density represents a maturation of search technology—from mechanical pattern-matching to genuine understanding of meaning and expertise.
