How to Increase AI Crawl Frequency for Better Visibility

Learn proven strategies to increase how often AI crawlers visit your website, improve content discoverability in ChatGPT, Perplexity, and other AI search engine...

Learn how AI crawlers prioritize pages using crawl capacity and demand. Understand crawl budget optimization for ChatGPT, Perplexity, Google AI, and Claude.

AI crawlers prioritize pages based on crawl capacity limits (server resources and site health) and crawl demand (page popularity, freshness, and update frequency). They use algorithmic processes to determine which sites to crawl, how often, and how many pages to fetch from each site, balancing the need to discover new content with avoiding server overload.

AI crawlers are automated programs that systematically discover, access, and analyze web pages to build the knowledge bases that power generative AI platforms like ChatGPT, Perplexity, Google AI Overviews, and Claude. Unlike traditional search engine crawlers that focus on ranking pages for keyword queries, AI crawlers prioritize pages based on a sophisticated two-factor system: crawl capacity limits and crawl demand. Understanding this prioritization mechanism is essential for ensuring your content gets discovered, indexed, and cited by AI systems. As AI search becomes increasingly important for brand visibility—with over 400 million weekly ChatGPT users and Perplexity processing billions of queries monthly—optimizing for crawler prioritization directly impacts whether your content appears in AI-generated answers or remains invisible to these powerful discovery systems.

Crawl capacity limit and crawl demand together determine a site’s overall crawl budget: the total number of pages a crawler will visit within a specific timeframe. This system exists because crawl operators have finite computational resources to spread across millions of websites. Googlebot and similar crawlers cannot visit every page on every website continuously, so they must decide where to spend those resources. The crawl capacity limit is the maximum load a crawler will place on your server (how many simultaneous connections it opens and how long it waits between requests), while crawl demand reflects how urgently the crawler wants to revisit specific pages based on their value and change frequency.

Think of crawl budget like a daily allowance: if your site receives a budget of 100 pages per day, the crawler must decide which 100 pages matter most. A site with poor server performance might receive only 50 pages per day because the crawler throttles back to avoid overloading your infrastructure. Conversely, a site with exceptional performance and high-value content might receive 500+ pages per day. The crawler continuously adjusts these limits based on real-time signals from your server, creating a dynamic system that rewards technical excellence and content quality while penalizing poor performance.

Crawl capacity limit is determined by how much crawling your server can handle without degrading performance or becoming unresponsive. AI crawlers are programmed to be respectful of server resources—they deliberately avoid overwhelming websites with excessive requests. This self-regulation mechanism protects websites from being hammered by crawler traffic while ensuring crawlers can access content efficiently.

Several factors influence your crawl capacity limit. Server response time is critical: if your pages load quickly (under 2.5 seconds), crawlers infer your server has capacity for more requests and increase crawling frequency. Conversely, slow response times signal server strain, causing crawlers to reduce request rates. HTTP status codes provide explicit signals about server health. When crawlers encounter 5xx server errors (indicating server problems), they interpret this as a signal to slow down and reduce crawling. Connection timeouts and DNS failures similarly trigger capacity reductions. The crawler essentially asks: “Is this server healthy enough to handle more requests?” and adjusts accordingly.
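The feedback loop described above can be sketched as a small rate controller. This is a toy model and not how any particular crawler is implemented: the class name, the starting rate, and the halve-on-trouble rule are all assumptions made for illustration. Only the 2.5 second threshold and the 5xx signal come from the text.

```python
from dataclasses import dataclass


@dataclass
class CrawlRateController:
    """Toy model of a crawler adapting its request rate to server health."""

    rate: float = 1.0              # requests per second (hypothetical starting point)
    min_rate: float = 0.1          # never stop crawling entirely (assumption)
    max_rate: float = 10.0         # upper bound on politeness (assumption)
    slow_threshold_s: float = 2.5  # "slow" threshold quoted in the text

    def record(self, status: int, response_time_s: float) -> float:
        """Update the rate after one fetch and return the new rate."""
        if status >= 500 or response_time_s > self.slow_threshold_s:
            # Server errors or slow responses: back off sharply.
            self.rate = max(self.min_rate, self.rate / 2)
        else:
            # Healthy response: probe upward gently.
            self.rate = min(self.max_rate, self.rate + 0.5)
        return self.rate


controller = CrawlRateController()
# (status, response time in seconds) for five consecutive fetches
observations = [(200, 0.3), (200, 0.4), (503, 0.2), (200, 3.1), (200, 0.5)]
history = [controller.record(status, seconds) for status, seconds in observations]
print(history)
```

Two healthy responses raise the rate, the 503 and the slow response each halve it, and the final healthy response starts the recovery. Timeouts and DNS failures are not modeled here; they could be treated the same way as a 5xx response.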

Hosting infrastructure significantly impacts capacity limits. Websites on shared hosting with hundreds of other sites share a collective crawl budget—if other sites on the same server consume resources, your crawl capacity decreases. Dedicated servers provide isolated resources, allowing higher crawl capacity. Content delivery networks (CDNs) that distribute content across geographically dispersed servers can handle higher crawler traffic more efficiently. Large enterprises often see dramatic crawl budget increases after migrating from shared hosting to dedicated infrastructure or implementing CDN solutions.

Rendering requirements also affect capacity. Pages requiring extensive JavaScript rendering consume more crawler resources than static HTML pages. If your site relies heavily on client-side rendering, crawlers must spend more time and computational power processing each page, reducing the total pages they can crawl within their resource budget. Server-side rendering (SSR) or static site generation (SSG) dramatically improves crawler efficiency by delivering fully-formed HTML that requires minimal processing.

Crawl demand reflects how much crawlers want to revisit specific pages based on their perceived value and change patterns. This factor is more strategic than capacity—it’s about prioritization rather than technical limitations. Even if your server could handle 1,000 crawler requests daily, crawlers might only send 100 requests if they determine most pages aren’t worth revisiting frequently.

Popularity is the primary driver of crawl demand. Pages receiving many internal links from other pages on your site signal importance to crawlers. Pages with high external backlinks from other websites indicate broader recognition and authority. Pages generating significant user engagement (measured through click-through rates, time on page, and return visits) demonstrate value to end users, which crawlers interpret as worth revisiting. Query volume—how many searches target a particular page—influences demand. Pages ranking for high-volume keywords receive more crawler attention because they drive significant traffic.

Freshness and update frequency dramatically impact crawl demand, particularly for AI platforms. Research on Perplexity optimization reveals that content visibility begins declining just 2-3 days after publication without strategic updates. This creates a recency bias where recently updated content receives higher crawl priority. Crawlers monitor publication dates, last modified timestamps, and content change patterns to determine update frequency. Pages that change daily receive more frequent crawls than pages that haven’t changed in years. This makes sense: if a page hasn’t changed in 12 months, crawling it weekly wastes resources. Conversely, if a page updates daily, crawling it weekly misses important changes.
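One way to picture the "crawl as often as the page changes" reasoning is to derive a revisit interval from a page's observed change history. The sketch below is an assumption-laden illustration: the function name, the mean-gap heuristic, and the clamping bounds are hypothetical and not taken from any crawler's documentation.

```python
from datetime import datetime, timedelta


def recrawl_interval(
    change_times: list[datetime],
    min_interval: timedelta = timedelta(hours=6),   # assumed lower bound
    max_interval: timedelta = timedelta(days=30),   # assumed upper bound
) -> timedelta:
    """Return a revisit interval close to the mean gap between observed changes."""
    if len(change_times) < 2:
        # No usable change history: revisit rarely.
        return max_interval
    ordered = sorted(change_times)
    gaps = [later - earlier for earlier, later in zip(ordered, ordered[1:])]
    mean_gap = sum(gaps, timedelta()) / len(gaps)
    # Clamp so the crawler neither hammers the page nor forgets it.
    return max(min_interval, min(max_interval, mean_gap))


daily_page = [datetime(2025, 1, day) for day in (1, 2, 3, 4)]
stale_page = [datetime(2023, 1, 1), datetime(2024, 1, 1)]
print(recrawl_interval(daily_page))   # interval for a page that changes every day
print(recrawl_interval(stale_page))   # interval for a page that changes once a year
```

Under these assumptions a page that changes daily is revisited about daily, while a page that changes once a year is pushed out to the maximum interval.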

Content type influences crawl demand. News and breaking news content receives extremely high crawl priority because timeliness matters enormously. Product pages on e-commerce sites receive frequent crawls because prices, inventory, and availability change constantly. Blog posts receive moderate crawl frequency based on publication recency. Evergreen foundational content receives lower crawl frequency unless it’s being actively updated. Crawlers essentially ask: “How likely is this page to have changed since I last visited?” and adjust crawl frequency accordingly.

| Factor | Google AI Overviews | ChatGPT Search | Perplexity AI | Claude |
|---|---|---|---|---|
| Primary Crawl Signal | Traditional SEO signals + E-E-A-T | Domain authority + content depth | Recency + update frequency | Academic authority + factual accuracy |
| Crawl Frequency | 3-7 days for established content | 1-3 days for priority content | 2-3 days (aggressive) | 5-10 days |
| Content Decay Rate | Moderate (weeks) | Moderate (weeks) | Rapid (2-3 days) | Slow (months) |
| Capacity Limit Impact | High (traditional SEO factors) | Moderate (less strict) | High (very responsive) | Low (less aggressive) |
| Demand Priority | Popularity + freshness | Depth + authority | Freshness + updates | Accuracy + citations |
| Schema Markup Weight | 5-10% of ranking | 3-5% of ranking | 10% of ranking | 2-3% of ranking |
| Update Frequency Reward | Weekly updates beneficial | 2-3 day updates beneficial | Daily updates optimal | Monthly updates sufficient |

Before crawlers can prioritize pages, they must first discover them. URL discovery happens through several mechanisms, each affecting how quickly new content enters the crawler’s queue. Sitemaps provide explicit lists of URLs you want crawled, allowing crawlers to discover pages without following links. Internal linking from existing pages to new pages helps crawlers find content through natural navigation. External backlinks from other websites signal new content worth discovering. Direct submissions through tools like Google Search Console explicitly notify crawlers about new URLs.

The discovery method influences prioritization. Pages discovered through sitemaps with `<lastmod>` tags indicating recent updates receive higher initial priority. Pages discovered through high-authority backlinks jump the queue ahead of pages discovered through low-authority sources. Pages discovered through internal links from popular pages receive higher priority than pages linked only from obscure internal pages. This creates a cascading effect: popular pages that link to new content help those new pages get crawled faster.
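As an illustration of the sitemap mechanism, the following sketch builds a minimal XML sitemap with a `lastmod` entry per URL using Python's standard library. The `build_sitemap` helper and the example.com URLs are hypothetical; the namespace and element names follow the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, date]]) -> str:
    """Serialize (url, last-modified date) pairs as a sitemap XML string."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, last_modified in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        # lastmod should reflect a real content change, in W3C date format.
        ET.SubElement(url, "lastmod").text = last_modified.isoformat()
    return ET.tostring(urlset, encoding="unicode")


# Placeholder URLs and dates for illustration only.
sitemap_xml = build_sitemap([
    ("https://example.com/guide", date(2025, 1, 15)),
    ("https://example.com/pricing", date(2024, 11, 2)),
])
print(sitemap_xml)
```

The value of `lastmod` depends on its accuracy: the dates should come from actual content changes (for example, a CMS modification timestamp) rather than the time the sitemap was generated.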

Crawl queue management determines the order in which discovered pages get visited. Crawlers maintain multiple queues: a high-priority queue for important pages needing frequent updates, a medium-priority queue for standard content, and a low-priority queue for less important pages. Pages move between queues based on signals. A page that hasn’t been updated in 6 months might move from high-priority to low-priority, freeing up crawl budget for more important content. A page that just received a major update moves to high-priority, ensuring the crawler discovers changes quickly.

Page speed directly impacts prioritization decisions. Crawlers measure how quickly pages load and render. Pages loading in under 2.5 seconds receive higher crawl priority than slower pages. This creates a virtuous cycle: faster pages get crawled more frequently, allowing crawlers to discover updates sooner, which improves freshness signals, which increases crawl priority further. Conversely, slow pages create a vicious cycle: reduced crawl frequency means updates get discovered slowly, content becomes stale, crawl priority decreases further.

Mobile optimization influences prioritization, particularly for AI platforms that rely on mobile-first indexing. Pages with responsive design, readable fonts, and mobile-friendly navigation receive higher priority than pages that only work well on desktop. Core Web Vitals (Google’s performance metrics for loading speed, interactivity, and visual stability) correlate strongly with crawl priority: pages with poor Core Web Vitals get crawled less frequently.

JavaScript rendering requirements affect prioritization. Pages that deliver content through client-side JavaScript require more crawler resources than pages serving static HTML. Crawlers must execute JavaScript, wait for rendering, and then parse the resulting DOM. This extra processing means fewer pages can be crawled within the same resource budget. Pages using server-side rendering (SSR) or static site generation (SSG) get crawled more efficiently and receive higher priority.

Robots.txt and meta robots directives explicitly control crawler access. Pages blocked in robots.txt won’t be crawled at all, regardless of priority. Pages marked with noindex meta tags will be crawled (crawlers must read the page to find the directive) but won’t be indexed. This wastes crawl budget—crawlers spend resources on pages they won’t index. Canonical tags help crawlers understand which version of duplicate content to prioritize, preventing wasted crawl budget on multiple versions of the same content.
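To check how a given robots.txt treats different crawlers, Python's standard `urllib.robotparser` module can evaluate rules locally. The robots.txt content and paths below are made up for illustration, and the user-agent tokens (`GPTBot`, `PerplexityBot`) are assumptions that should be confirmed against each vendor's published crawler documentation.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt used only for this example.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /drafts/

User-agent: *
Disallow: /admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

checks = {
    ("GPTBot", "/drafts/post"): parser.can_fetch("GPTBot", "/drafts/post"),
    ("GPTBot", "/blog/post"): parser.can_fetch("GPTBot", "/blog/post"),
    ("PerplexityBot", "/drafts/post"): parser.can_fetch("PerplexityBot", "/drafts/post"),
    ("PerplexityBot", "/admin/"): parser.can_fetch("PerplexityBot", "/admin/"),
}
for (agent, path), allowed in checks.items():
    print(f"{agent:15} {path:15} {'allowed' if allowed else 'blocked'}")
```

Note that a crawler with its own `User-agent` group follows only that group, so in this sample the `/admin/` rule under `*` does not apply to GPTBot unless it is repeated in the GPTBot group.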

Experience, Expertise, Authoritativeness, and Trustworthiness (E-E-A-T) signals influence how crawlers prioritize pages, particularly for AI platforms. Crawlers assess E-E-A-T through multiple indicators. Author credentials and author bios demonstrating expertise signal that content deserves higher priority. Publication dates and author history help crawlers assess whether authors have consistent expertise or are one-time contributors. Backlink profiles from authoritative sources indicate trustworthiness. Social signals and brand mentions across the web suggest recognition and authority.

Pages from established domains with long histories and strong backlink profiles receive higher crawl priority than pages from new domains. This isn’t necessarily fair to new websites, but it reflects crawler logic: established sites have proven track records, so their content is more likely to be valuable. New sites must earn crawl priority through exceptional content quality and rapid growth in authority signals.

Topical authority influences prioritization. If your site has published 50 high-quality articles about email marketing, crawlers recognize you as an authority on that topic and prioritize new email marketing content from your site. Conversely, if your site publishes random content across unrelated topics, crawlers don’t recognize topical expertise and prioritize less aggressively. This rewards content clustering and topical focus strategies.

Understanding crawler prioritization enables strategic optimization. Content refresh schedules that update important pages every 2-3 days signal freshness and maintain high crawl priority. This doesn’t require complete rewrites: adding new sections, updating statistics, or incorporating recent examples suffices. Internal linking optimization ensures important pages receive many internal links, signaling priority to crawlers. Sitemap optimization with accurate `<lastmod>` tags helps crawlers identify recently updated content.

Server performance optimization directly increases crawl capacity. Implementing caching strategies, image optimization, code minification, and CDN distribution all reduce page load times and increase crawler efficiency. Removing low-value pages from your site reduces crawl waste. Pages that don’t serve users (duplicate content, thin pages, outdated information) consume crawl budget without providing value. Consolidating duplicate content, removing outdated pages, and blocking low-value pages with robots.txt frees up budget for important content.

Structured data implementation helps crawlers understand content more efficiently. Schema markup in JSON-LD format provides explicit information about page content, reducing the processing required for crawlers to understand what a page is about. This efficiency improvement allows crawlers to process more pages within the same resource budget.
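A minimal sketch of generating JSON-LD for an article page follows. The `article_jsonld` helper and the sample values are hypothetical; the property names (`headline`, `author`, `datePublished`, `dateModified`) are standard schema.org `Article` properties.

```python
import json


def article_jsonld(headline: str, author_name: str,
                   date_published: str, date_modified: str) -> str:
    """Return a schema.org Article description as a JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,   # ISO 8601 date
        "dateModified": date_modified,     # keep in sync with real edits
    }
    return json.dumps(data, indent=2)


# Placeholder values for illustration only.
markup = article_jsonld(
    headline="How AI Crawlers Prioritize Pages",
    author_name="Jane Doe",
    date_published="2025-01-10",
    date_modified="2025-01-15",
)
print(markup)
```

The resulting string is typically embedded in the page inside a `<script type="application/ld+json">` element.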

Monitoring crawl patterns through server logs and Google Search Console reveals how crawlers prioritize your site. Analyzing which pages get crawled most frequently, which pages rarely get crawled, and how crawl frequency changes over time provides insights into crawler behavior. If important pages aren’t being crawled frequently enough, investigate why: are they buried deep in site architecture? Do they lack internal links? Are they slow to load? Addressing these issues improves prioritization.
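A first pass at log analysis can be as simple as counting hits per crawler and path. The sketch below assumes the common "combined" access log format and uses made-up log lines; the bot tokens in `AI_BOTS` are assumptions to adjust for the crawlers you actually see, and the function name is hypothetical.

```python
import re
from collections import Counter

# User-agent substrings to look for (assumed tokens; adjust as needed).
AI_BOTS = ("GPTBot", "PerplexityBot", "ClaudeBot")

# Matches the request, status, size, referrer, and user-agent fields
# of a combined-format access log line.
LOG_LINE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def ai_crawl_counts(lines: list[str]) -> Counter:
    """Count requests per (bot, path) for known AI crawler user agents."""
    counts: Counter = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if not match:
            continue  # skip lines in an unexpected format
        for bot in AI_BOTS:
            if bot in match.group("agent"):
                counts[(bot, match.group("path"))] += 1
                break
    return counts


# Fabricated sample lines, for illustration only.
sample_log = [
    '203.0.113.5 - - [10/Jan/2025:10:00:00 +0000] "GET /pricing HTTP/1.1" 200 512 "-" "ExampleAgent (compatible; GPTBot/1.0)"',
    '203.0.113.5 - - [10/Jan/2025:11:00:00 +0000] "GET /pricing HTTP/1.1" 200 512 "-" "ExampleAgent (compatible; GPTBot/1.0)"',
    '203.0.113.9 - - [10/Jan/2025:12:00:00 +0000] "GET /blog/post HTTP/1.1" 200 900 "-" "ExampleAgent (compatible; PerplexityBot/1.0)"',
    '198.51.100.7 - - [10/Jan/2025:12:30:00 +0000] "GET /pricing HTTP/1.1" 200 512 "-" "Mozilla/5.0 (ordinary browser)"',
]
counts = ai_crawl_counts(sample_log)
for (bot, path), hits in counts.most_common():
    print(f"{bot:15} {path:15} {hits}")
```

Important pages that are missing from this output, or that appear far less often than expected, are the ones to check for weak internal linking, deep site architecture, or slow responses. User-agent strings can be spoofed, so counts based on them alone are an approximation.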

Crawler prioritization continues evolving as AI platforms mature. Real-time indexing is becoming more common, with some platforms crawling pages within hours of publication rather than days. Multimodal crawling that processes images, videos, and audio alongside text will influence prioritization—pages with rich media may receive different priority than text-only pages. Personalized crawling based on user interests may emerge, with crawlers prioritizing content relevant to specific user segments.

Entity recognition will increasingly influence prioritization. Crawlers will recognize when pages discuss recognized entities (people, companies, products, concepts) and adjust priority based on entity importance. Pages discussing trending entities may receive higher priority than pages about obscure topics. Semantic understanding will improve, allowing crawlers to recognize content quality and relevance more accurately, potentially reducing the importance of traditional signals like backlinks.

Understanding how AI crawlers prioritize pages transforms your optimization strategy from guesswork to data-driven decision-making. By optimizing for both crawl capacity and crawl demand, you ensure your most important content gets discovered, crawled frequently, and cited by AI systems. The brands that master crawler prioritization will dominate AI search visibility, while those ignoring these principles risk invisibility in the AI-powered future of search.


Learn proven strategies to increase how often AI crawlers visit your website, improve content discoverability in ChatGPT, Perplexity, and other AI search engine...

Understand AI crawler visit frequency, crawl patterns for ChatGPT, Perplexity, and other AI systems. Learn what factors influence how often AI bots crawl your s...

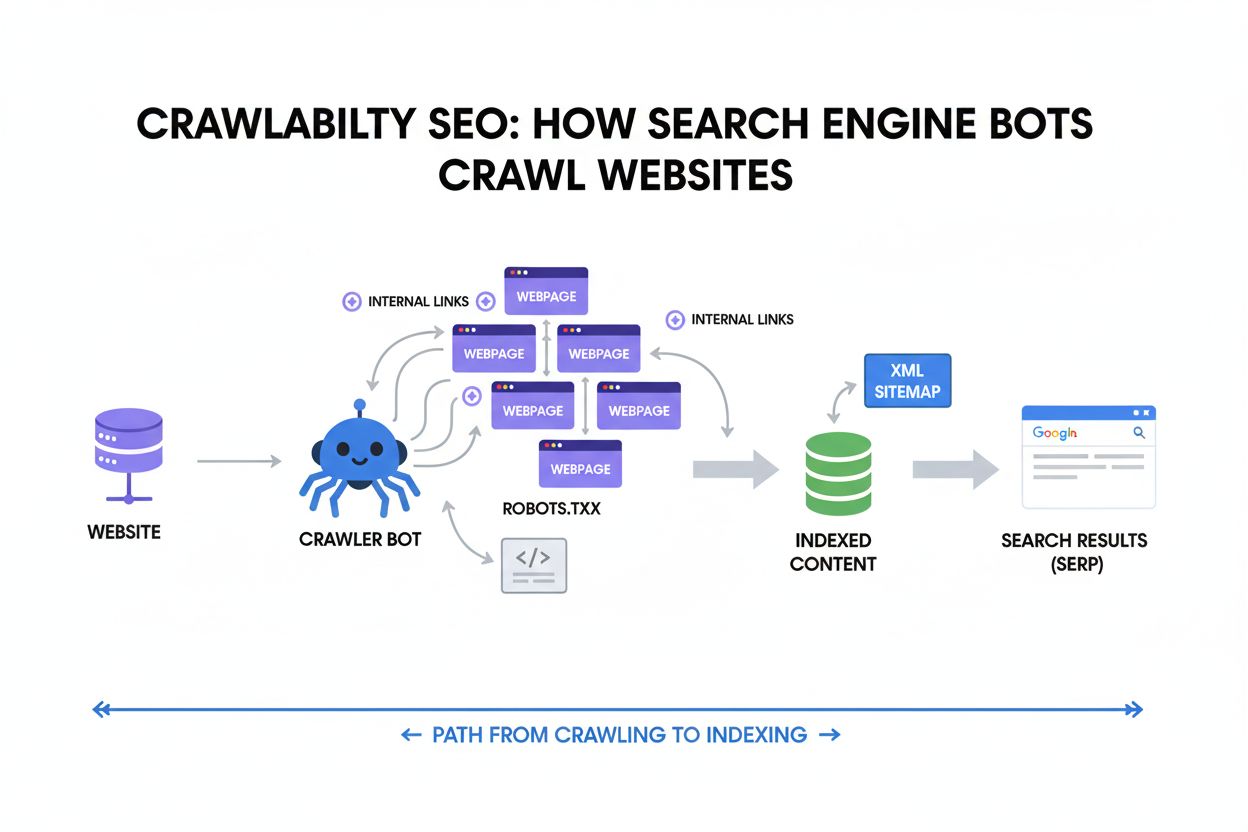

Crawlability is the ability of search engines to access and navigate website pages. Learn how crawlers work, what blocks them, and how to optimize your site for...