Certifications and AI Visibility: Do Trust Badges Matter?

Learn how certifications and trust badges impact your visibility in AI-generated answers. Discover why trust signals matter for ChatGPT, Perplexity, and Google ...

Discover how AI certifications establish trust through standardized frameworks, transparency requirements, and third-party validation. Learn about CSA STAR, ISO 42001, and compliance standards.

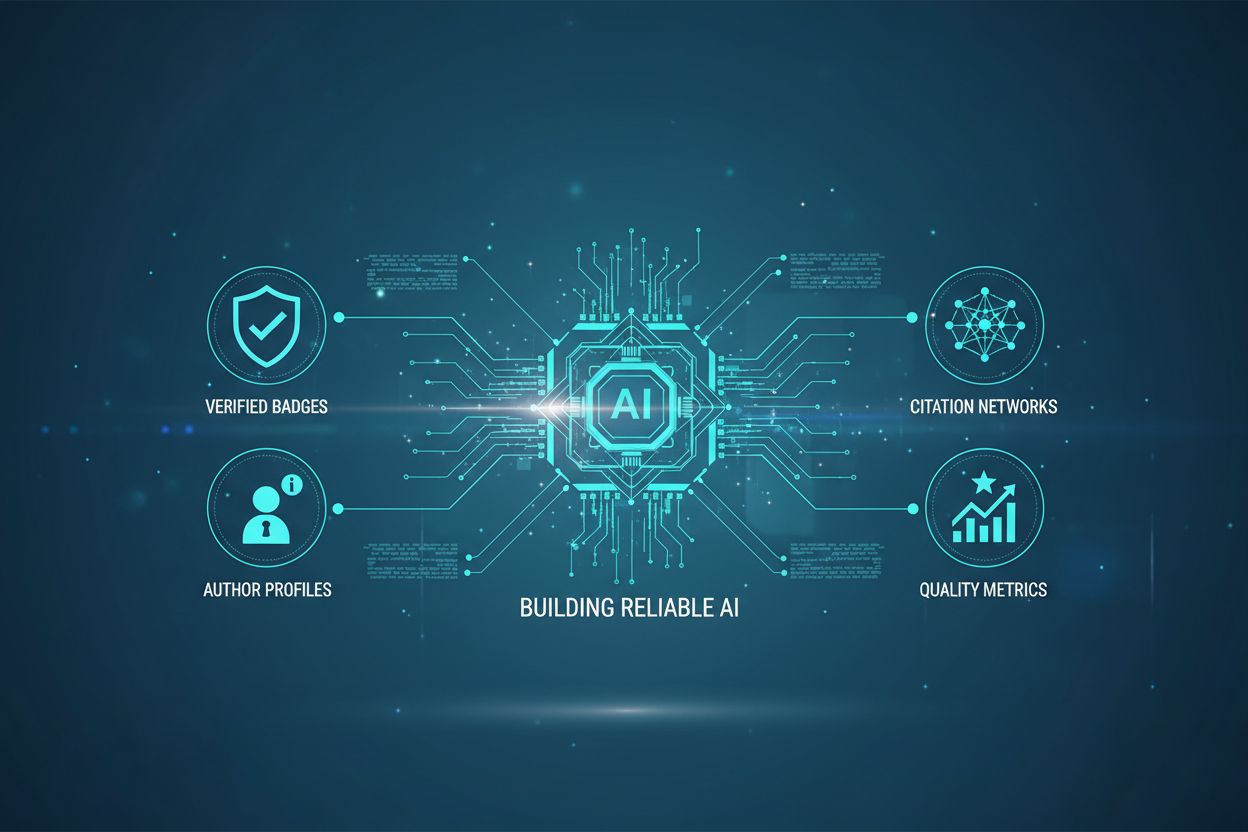

AI certifications build trust by establishing standardized frameworks for safety, transparency, and accountability. They provide third-party validation of AI systems' compliance with ethical standards, security controls, and regulatory requirements, giving stakeholders confidence in responsible AI deployment.

AI certifications serve as critical mechanisms for establishing confidence in artificial intelligence systems by providing independent verification that these systems meet established standards for safety, security, and ethical operation. In an era where AI systems influence critical decisions across healthcare, finance, and public services, certifications function as a bridge between technical complexity and stakeholder confidence. They represent a formal commitment to responsible AI practices and provide measurable evidence that organizations have implemented appropriate controls and governance structures. The certification process itself demonstrates organizational maturity in managing AI risks, from data handling to bias mitigation and transparency requirements.

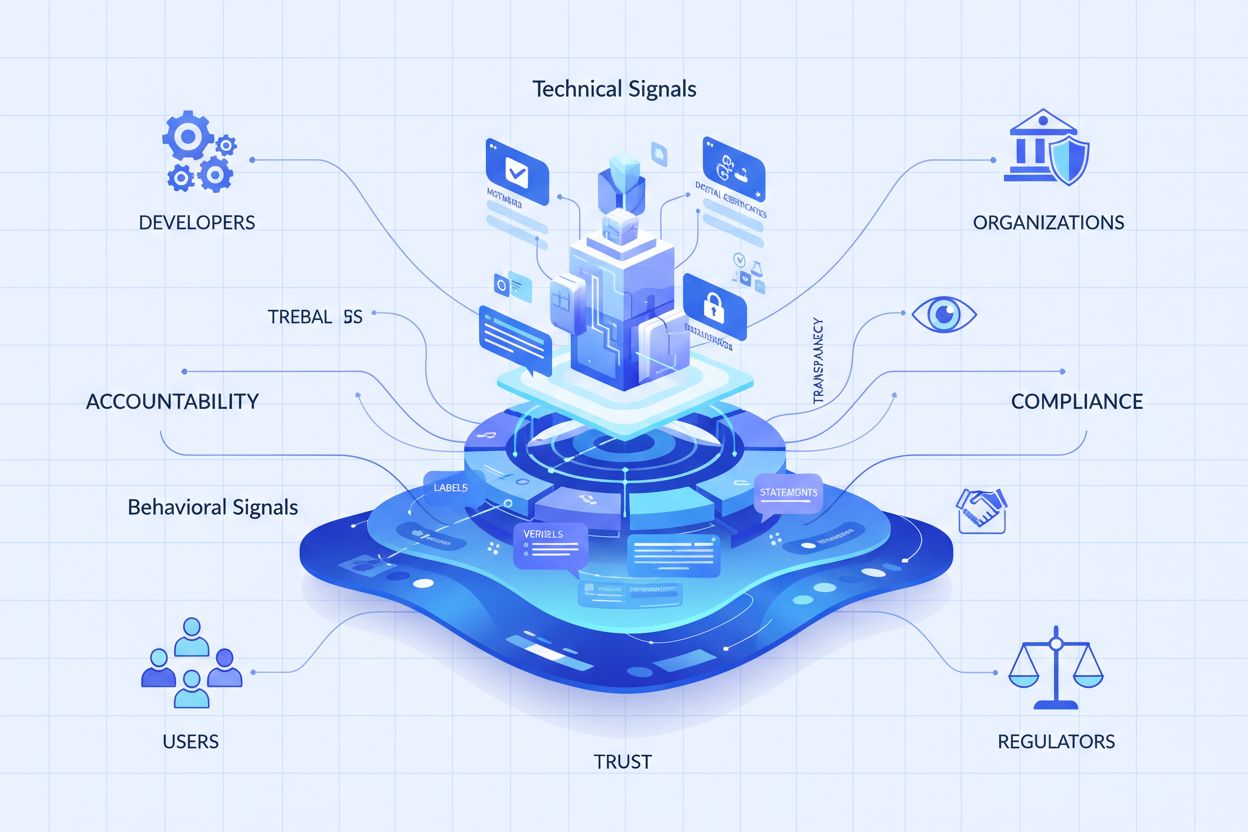

The relationship between certifications and trust operates on multiple levels. At the organizational level, pursuing certification signals a commitment to responsible AI development and deployment. At the stakeholder level, certifications provide assurance that independent auditors have verified compliance with established standards. For regulators and the public, certifications create accountability mechanisms that ensure AI systems operate within defined parameters and meet societal expectations for safety and fairness. This multi-layered approach to trust-building has become increasingly important as AI systems become more prevalent in decision-making processes that affect individuals and organizations.

| Certification Framework | Focus Area | Key Components | Scope |
|---|---|---|---|
| CSA STAR for AI | AI safety and trustworthiness | AI Trustworthy Pledge, AI Controls Matrix (243 controls), Risk-based audits | AI developers, cloud providers, enterprises |
| ISO/IEC 42001:2023 | AI management systems | Governance, transparency, accountability, risk management | Organizations implementing AI systems |
| EU AI Act Compliance | Regulatory transparency | Risk classification, disclosure requirements, content labeling | All AI systems operating in EU |
| TRUSTe Responsible AI | Data practices and governance | AI governance frameworks, responsible data handling | Organizations with 10,000+ certifications |

The CSA STAR for AI framework represents one of the most comprehensive approaches to AI certification. It builds on the Cloud Security Alliance’s established STAR program, which has already assessed over 3,400 organizations globally, and specifically addresses AI-related risks including data leakage, ethical considerations, and system reliability. CSA STAR for AI comprises three main components: the AI Trustworthy Pledge, which commits organizations to high-level principles on AI safety and responsibility; the AI Controls Matrix (AICM), with 243 control objectives across 18 domains; and the forthcoming Trusted AI Safety Knowledge Certification Program launching in 2025. The framework’s strength lies in its vendor-neutral approach, which aligns with international standards including ISO 42001 and the NIST AI Risk Management Framework.

ISO/IEC 42001:2023 stands as the world’s first international standard specifically designed for AI management systems. This certification framework establishes comprehensive requirements for organizations implementing AI systems, focusing on governance structures, transparency mechanisms, accountability frameworks, and systematic risk management. Organizations pursuing ISO 42001 certification must demonstrate that they have established dedicated AI ethics governance teams bringing together expertise from AI development, legal affairs, compliance, risk management, and ethics. The standard requires organizations to document their entire AI development pipeline, from data sourcing and labeling through model architecture decisions and deployment procedures. This documentation requirement ensures traceability and enables auditors to verify that ethical considerations have been integrated throughout the AI lifecycle.

Transparency forms the cornerstone of trust in AI systems, and certifications mandate specific transparency requirements that organizations must meet to achieve and maintain certification status. The EU AI Act, which entered into force in August 2024 with most obligations applying from August 2026, establishes the world’s first comprehensive legal framework for AI transparency, requiring organizations to disclose their involvement with AI systems and provide clear explanations of AI decision-making processes. This regulatory framework classifies AI systems into risk categories, with high-risk systems facing the most stringent transparency requirements. Organizations must inform users no later than their first interaction with an AI system, provide clear labeling of AI-generated content in machine-readable formats, and maintain comprehensive technical documentation explaining system capabilities and limitations.
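
To make "machine-readable labeling" concrete, here is a minimal Python sketch of a label that could travel with a piece of AI-generated content. The function name `build_ai_content_label` and every field name are hypothetical and chosen only for illustration; neither the EU AI Act nor any certification framework discussed here prescribes this schema.

```python
import json
from datetime import datetime, timezone


def build_ai_content_label(generator: str, content_id: str, created_at: datetime) -> str:
    """Return a JSON string flagging a piece of content as AI-generated.

    All field names are hypothetical; no regulation or standard prescribes them.
    """
    label = {
        "ai_generated": True,
        "generator": generator,
        "content_id": content_id,
        # Normalize to UTC so labels from different systems are comparable.
        "created_at": created_at.astimezone(timezone.utc).isoformat(),
    }
    return json.dumps(label, sort_keys=True)


label_json = build_ai_content_label(
    generator="example-model-v1",  # hypothetical model name
    content_id="article-123",      # hypothetical identifier
    created_at=datetime(2026, 8, 2, 12, 0, tzinfo=timezone.utc),
)
print(label_json)
```

A structured label like this can be parsed by downstream tools, which is the practical difference between machine-readable labeling and a visible notice alone.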

Certification frameworks require organizations to implement explainability mechanisms that make AI decision-making understandable to stakeholders. This extends beyond simple notifications to include comprehensive disclosure about system capabilities, limitations, and potential risks. For high-risk applications such as emotion recognition systems or biometric identification, explainability must address how the system arrived at specific conclusions and what factors influenced the decision-making process. Organizations must also provide interpretability documentation that enables technical teams to analyze and understand how input data, parameters, and processes within AI systems produce specific outputs. This may require specialized tools for model inspection or visualization that support internal audits and regulatory review. The combination of explainability for end-users and interpretability for technical teams ensures that transparency operates at multiple levels, serving different stakeholder needs.
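
As a rough illustration of what "which factors influenced the decision" can look like, the Python sketch below breaks a simple linear score into per-feature contributions. The function `explain_linear_score`, the feature names, and the weights are all invented for this example. Real AI systems are usually non-linear and need dedicated attribution tooling, so treat this as the simplest possible case and not as a method any framework mandates.

```python
def explain_linear_score(weights, features, bias=0.0):
    """Return the score of a linear model and each feature's contribution.

    contribution = weight * feature value; the score is their sum plus the bias.
    Contributions are ranked by absolute size, largest influence first.
    """
    contributions = {name: weights[name] * features[name] for name in weights}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked


# Hypothetical weights and inputs, chosen only to illustrate the breakdown.
score, ranked = explain_linear_score(
    weights={"income": 0.5, "debt": -0.25},
    features={"income": 4.0, "debt": 2.0},
)
for name, contribution in ranked:
    print(f"{name}: {contribution:+.2f}")
print(f"score: {score:.2f}")
```

The ranked list serves the end-user explanation ("income raised the score most"), while the raw contribution values are the kind of detail a technical reviewer would inspect.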

Accountability mechanisms embedded in certification frameworks establish clear responsibility chains for AI system decisions, errors, and downstream consequences. Certifications require organizations to maintain comprehensive audit trails documenting the development, training, input data, and operating contexts of AI systems. This traceability enables reconstruction of decisions and supports both internal governance and regulatory review. The CSA STAR for AI framework introduces risk-based audits and continuous monitoring approaches that differ from traditional point-in-time assessments, recognizing that AI systems evolve and require ongoing oversight. Organizations must establish incident reporting systems that track adverse outcomes and enable rapid response to identified problems.
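
One common way to make an audit trail tamper-evident is to chain entries together with hashes, so that altering an earlier record invalidates everything after it. The Python sketch below illustrates that idea under stated assumptions: the entry layout and the helper names `append_entry` and `verify_chain` are hypothetical, and no certification framework named here requires this particular design.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(event: dict, prev_hash: str) -> str:
    """Hash the event together with the previous entry's hash."""
    payload = json.dumps({"event": event, "prev_hash": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log: list, event: dict) -> dict:
    """Append an event to the log, linking it to the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    entry = {"event": event, "prev_hash": prev_hash, "hash": _digest(event, prev_hash)}
    log.append(entry)
    return entry


def verify_chain(log: list) -> bool:
    """Recompute every hash; return False if any entry was altered or reordered."""
    prev_hash = GENESIS_HASH
    for entry in log:
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(entry["event"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical events covering training and an incident report.
audit_log = []
append_entry(audit_log, {"type": "training_run", "model_version": "1.0", "dataset": "loans-2024"})
append_entry(audit_log, {"type": "incident", "summary": "unexpected rejection spike"})
print(verify_chain(audit_log))
```

Because each hash depends on the one before it, an auditor can re-run `verify_chain` to confirm the recorded history has not been edited after the fact.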

Bias auditing and mitigation represent a critical component of certification requirements, addressing one of the most significant risks in AI systems. Certification frameworks require organizations to conduct thorough bias audits examining potential discrimination impacts across protected characteristics including gender, race, age, disability status, and socioeconomic status. These audits must examine the entire AI development pipeline, from data sourcing decisions through model architecture choices, recognizing that seemingly neutral technical decisions carry ethical implications. Organizations pursuing certification must implement ongoing monitoring procedures that reassess bias as AI systems mature and encounter new data through repeated interaction. This systematic approach to bias management demonstrates organizational commitment to fairness and helps prevent costly discrimination incidents that could damage brand reputation and trigger regulatory action.
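
A bias audit usually starts with simple group-level statistics. The Python sketch below computes the share of favorable outcomes per group and the ratio between the lowest and highest rates, often called a disparate impact ratio. The function names and sample data are hypothetical, and the metric is one common choice among many, not one that the frameworks above are known to mandate.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favorable outcomes per group.

    `records` is an iterable of (group, outcome) pairs, where outcome is
    True for a favorable decision and False otherwise.
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        favorable[group] += int(outcome)
    return {group: favorable[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest to the highest selection rate (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable outcome, so the rates are equal
    return min(rates.values()) / highest


# Hypothetical decisions: group A is approved 4 times out of 5, group B 2 out of 5.
decisions = [("A", True)] * 4 + [("A", False)] + [("B", True)] * 2 + [("B", False)] * 3
rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates, ratio)
```

Re-running a check like this on fresh production data at regular intervals is one way to implement the ongoing monitoring described above. Any pass/fail threshold would be a policy decision for the organization and its auditors.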

Certification requirements establish governance structures that formalize AI ethics and risk management within organizations. The ISO/IEC 42001 standard requires organizations to establish dedicated AI ethics governance teams with cross-functional expertise spanning technical, legal, compliance, and ethics domains. These governance teams serve as ethical compasses for organizations, interpreting broad ideals into operational policies and closing the gap between optimization-oriented technical groups and business-oriented executives focused on regulatory compliance and risk management. Certification frameworks require governance teams to oversee day-to-day AI operations, serve as focal points for external auditors and certifying agencies, and identify emerging ethical problems before they become costly issues.

Working through certification also builds organizational maturity in managing AI risks. Organizations pursuing certification must document their AI governance policies, decision-making processes, and corrective action workflows, creating auditable trails that demonstrate organizational learning and continuous improvement capacity. This documentation requirement transforms AI governance from an administrative checkbox into an integral part of development workflows. Digital tools can reduce the burden by automating record logging, version tracking, and centralized management of user access. Organizations that successfully achieve certification position themselves as leaders in responsible AI practices, gaining competitive advantages in markets increasingly focused on ethical technology deployment.

Regulatory frameworks increasingly mandate AI certifications or certification-equivalent compliance measures, making certification pursuit a strategic business necessity rather than optional best practice. The EU AI Act establishes some of the world’s harshest penalties for AI regulatory violations: the most serious violations, involving prohibited AI practices, carry fines of up to €35 million or 7% of global annual turnover, whichever is higher. Violations of transparency obligations carry fines of up to €15 million or 3% of global turnover, and supplying incorrect or misleading information to authorities carries fines of up to €7.5 million or 1%. These penalties apply extraterritorially, meaning organizations worldwide face enforcement action if their AI systems impact EU users, regardless of company location or headquarters. Certification frameworks help organizations navigate these complex regulatory requirements by providing structured pathways to compliance.
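
The "fixed amount or percentage of turnover" structure is easier to see with numbers. The Python sketch below assumes the reading under which the higher of the two values sets the ceiling, which is how the Act treats larger undertakings; smaller companies may be treated differently. The function name `fine_ceiling` and the turnover figures are hypothetical.

```python
from decimal import Decimal


def fine_ceiling(fixed_cap_eur: Decimal, turnover_share: Decimal, global_turnover_eur: Decimal) -> Decimal:
    """Maximum possible fine: the greater of a fixed cap and a share of turnover.

    Assumes the "whichever is higher" rule; this is a ceiling, not a predicted fine.
    """
    return max(fixed_cap_eur, turnover_share * global_turnover_eur)


# Tiers quoted in the text above (fixed cap in EUR, share of global annual turnover).
TOP_TIER = (Decimal("35000000"), Decimal("0.07"))
LOWER_TIER = (Decimal("7500000"), Decimal("0.01"))

# Hypothetical companies with EUR 100 million and EUR 2 billion in turnover.
mid_size = fine_ceiling(*TOP_TIER, Decimal("100000000"))   # 7% is EUR 7M, so the EUR 35M cap applies
large = fine_ceiling(*TOP_TIER, Decimal("2000000000"))     # 7% is EUR 140M, which exceeds the cap
lower = fine_ceiling(*LOWER_TIER, Decimal("2000000000"))   # 1% is EUR 20M, which exceeds EUR 7.5M
print(mid_size, large, lower)
```

For large organizations the percentage term dominates, which is why exposure scales with company size instead of stopping at the headline euro figure.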

Organizations pursuing certification gain several risk mitigation benefits beyond regulatory compliance. Certifications provide documented evidence of due diligence in AI system development and deployment, which can prove valuable in litigation or regulatory investigations. The comprehensive documentation requirements ensure that organizations can reconstruct decision-making processes and demonstrate that appropriate safeguards were implemented. Certification also enables organizations to identify and address risks proactively before they manifest as costly incidents. By implementing the governance structures, bias auditing procedures, and transparency mechanisms required for certification, organizations reduce the likelihood of discrimination claims, data breaches, or regulatory enforcement actions that could damage brand reputation and financial performance.

Third-party audits form a critical component of certification frameworks, providing independent verification that organizations have implemented appropriate controls and governance structures. The CSA STAR for AI framework includes Level 1 self-assessments and Level 2 third-party certifications. At Level 2, independent auditors verify compliance with the 243 controls of the AI Controls Matrix (AICM) while integrating the ISO 27001 and ISO 42001 standards. This independent verification process provides stakeholders with confidence that certification claims have been validated by qualified auditors rather than relying solely on organizational self-assessment. Third-party auditors bring external expertise and objectivity to the assessment process, identifying gaps and risks that internal teams might overlook.

The certification process creates public recognition of organizational commitment to responsible AI practices. Organizations achieving certification receive digital badges and public recognition through certification registries, signaling to customers, partners, and regulators that they have met established standards. This public visibility creates reputational incentives for maintaining certification status and continuing to improve AI governance practices. Customers increasingly prefer to work with certified organizations, viewing certification as evidence of responsible AI deployment. Partners and investors view certification as a risk mitigation factor, reducing concerns about regulatory exposure or reputational damage from AI-related incidents. This market-driven demand for certification creates positive feedback loops where organizations pursuing certification gain competitive advantages, encouraging broader adoption of certification frameworks across industries.

Certification frameworks increasingly integrate with existing compliance requirements including data protection laws, consumer protection regulations, and industry-specific standards. The ISO/IEC 42001 standard aligns with GDPR requirements for transparency in automated decision-making, creating synergies between AI governance and data protection compliance. Organizations implementing ISO 42001 certification simultaneously advance their GDPR compliance posture by establishing governance structures and documentation practices that satisfy both frameworks. This integration reduces compliance burden by enabling organizations to implement unified governance approaches that satisfy multiple regulatory requirements simultaneously.

The EU AI Act’s transparency requirements align with GDPR’s explainability requirements for automated decision-making, creating a comprehensive regulatory framework for responsible AI deployment. Organizations pursuing certification under these frameworks must implement transparency mechanisms that satisfy both AI-specific and data protection requirements. This integrated approach ensures that organizations address the full spectrum of AI-related risks, from data protection concerns through ethical considerations and system reliability. As regulatory frameworks continue evolving, certifications provide organizations with structured pathways to compliance that anticipate future requirements and position organizations as leaders in responsible technology deployment.

