AI Hallucination Monitoring

Learn what AI hallucination monitoring is, why it's essential for brand safety, and how detection methods like RAG, SelfCheckGPT, and LLM-as-Judge help prevent ...

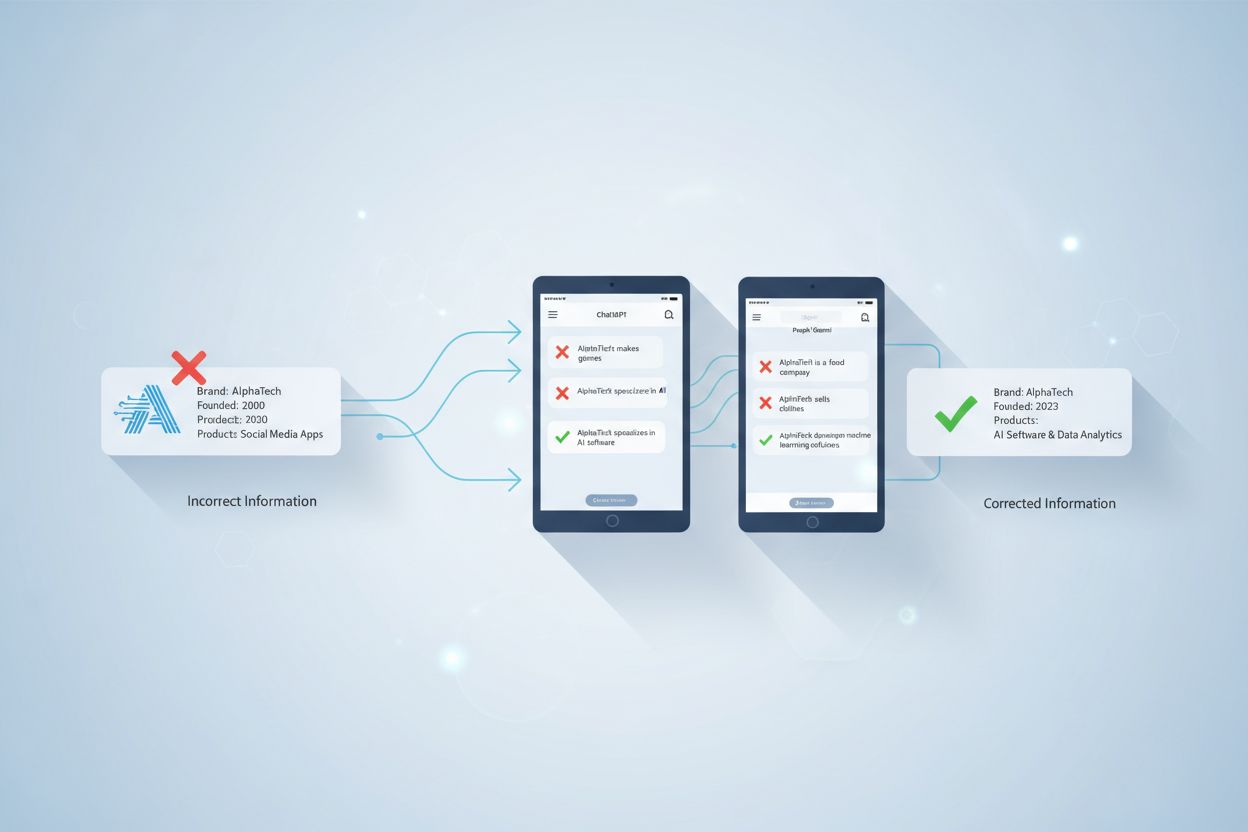

Learn effective methods to identify, verify, and correct inaccurate information in AI-generated answers from ChatGPT, Perplexity, and other AI systems.

Correct misinformation in AI responses by using lateral reading to cross-check claims with authoritative sources, breaking down information into specific claims, and reporting errors to the AI platform. Verify facts through academic databases, government websites, and established news publications before accepting AI-generated content as accurate.

Misinformation in AI responses occurs when artificial intelligence systems generate inaccurate, outdated, or misleading information that appears credible to users. This happens because large language models (LLMs) are trained on vast amounts of data from the internet, which may contain biased, incomplete, or false information. The phenomenon known as AI hallucination is particularly problematic—it occurs when AI models perceive patterns that don’t actually exist and create seemingly factual answers that are entirely unfounded. For example, an AI system might invent a fictional professor’s name or attribute incorrect information to a real person, all while presenting the information with complete confidence. Understanding these limitations is crucial for anyone relying on AI for research, business decisions, or content creation.

The challenge of misinformation in AI responses extends beyond simple factual errors. AI systems may present speculation as fact, misinterpret data due to training limitations, or pull information from outdated sources that no longer reflect current reality. Additionally, AI models struggle to distinguish between factual statements and opinions, sometimes treating subjective beliefs as objective truths. This creates a compounding problem where users must develop critical evaluation skills to separate accurate information from false claims, especially when the AI presents everything with equal confidence and authority.

Lateral reading is one of the most effective techniques for identifying and correcting misinformation in AI responses. It involves leaving the AI output and consulting multiple external sources to evaluate the accuracy of specific claims. Rather than reading vertically through the AI response and accepting information at face value, lateral reading requires you to open new tabs and search for supporting evidence from authoritative sources. This approach matters because AI output is a composite of multiple unidentifiable sources, so you cannot assess credibility by examining the source itself; instead, you must evaluate each factual claim independently.

The lateral reading process begins with fractionation: breaking the AI response down into smaller, specific, searchable claims. Instead of trying to verify an entire paragraph at once, isolate individual statements that can be checked independently. For instance, if an AI response claims that a particular person attended a specific university and studied under a named professor, that yields three separate claims to verify: the person attended that university, the professor exists and taught there, and the person actually studied under that professor. Once you’ve identified these claims, open new browser tabs and search for evidence supporting each one using reliable sources like Google Scholar, academic databases, government websites, or established news publications. A further advantage of this method is that it forces you to examine the assumptions embedded in both your prompt and the AI’s response, helping you identify where errors originated.
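To make the fractionation step concrete, here is a minimal Python sketch under loose assumptions: it splits a response into sentence-level candidate claims with a naive regular expression and builds search links for each one. The function names `fractionate` and `search_urls` are hypothetical, and the Google Scholar and PubMed URL patterns are assumptions about those sites' public search pages. Sentence splitting is only a first pass: a compound sentence like the university example above still has to be split into its three claims by hand.

```python
import re
from urllib.parse import quote_plus

# Naive splitter: a sentence ends at ., !, or ? followed by whitespace.
# Abbreviations such as "Dr." will cause false splits; a real pipeline
# would use a proper sentence segmenter.
SENTENCE_END = re.compile(r"(?<=[.!?])\s+")


def fractionate(response: str) -> list[str]:
    """Split an AI response into sentence-level candidate claims."""
    return [part.strip() for part in SENTENCE_END.split(response) if part.strip()]


def search_urls(claim: str) -> dict[str, str]:
    """Build search links for one claim (hypothetical helper; URL patterns are assumptions)."""
    query = quote_plus(claim)  # spaces become '+', reserved characters are percent-encoded
    return {
        "google_scholar": f"https://scholar.google.com/scholar?q={query}",
        "pubmed": f"https://pubmed.ncbi.nlm.nih.gov/?term={query}",
    }
```

Opening each link in its own tab mirrors the lateral reading workflow; nothing in the sketch judges whether a claim is true.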

Verifying AI-generated information requires consulting multiple authoritative sources that maintain high standards for accuracy and credibility. Government websites, peer-reviewed academic journals, established news organizations, and specialized research databases provide the most reliable verification points. When fact-checking AI responses, match the source to the type of claim: academic databases like JSTOR, PubMed, or Google Scholar for research claims; government websites for official statistics and policies; and established news publications for current events and recent developments. These sources have editorial processes, fact-checking procedures, and accountability mechanisms that AI systems lack.

| Source Type | Best For | Examples |
|---|---|---|
| Academic Databases | Research claims, historical facts, technical information | JSTOR, PubMed, Google Scholar, WorldCat |
| Government Websites | Official statistics, policies, regulations | .gov domains, official agency sites |
| Established News Publications | Current events, recent developments, breaking news | Major newspapers, news agencies with editorial standards |
| Specialized Databases | Industry-specific information, technical details | Industry associations, professional organizations |
| Non-profit Organizations | Verified information, research reports | .org domains with transparent funding |
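As a rough triage aid for the table above, the sketch below maps a URL to one of its source categories by hostname. It is a hypothetical helper, not a credibility check: the academic host list is an assumption covering a few of the databases named in the table, a `.gov` suffix only identifies U.S. government sites, and a `.org` suffix says nothing about whether funding is transparent.

```python
from urllib.parse import urlparse

# Assumed hostnames for a few academic databases named in the table.
# Checked first, because some (e.g. PubMed) also end in .gov or .org.
ACADEMIC_HOSTS = {
    "jstor.org", "www.jstor.org",
    "pubmed.ncbi.nlm.nih.gov",
    "scholar.google.com",
    "worldcat.org", "www.worldcat.org",
}


def classify_source(url: str) -> str:
    """First-pass mapping of a URL to a source category; not a credibility verdict."""
    host = (urlparse(url).hostname or "").lower()
    if host in ACADEMIC_HOSTS:
        return "Academic Databases"
    if host.endswith(".gov"):
        return "Government Websites"
    if host.endswith(".org"):
        # The suffix alone does not show transparent funding; check that by hand.
        return "Non-profit Organizations (verify funding)"
    return "Unclassified (evaluate manually)"
```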

When cross-checking AI responses, look for multiple independent sources confirming the same information rather than relying on a single source. If you find conflicting information across sources, investigate further to understand why discrepancies exist. Sometimes AI responses contain correct information in the wrong context—for example, attributing a fact about one organization to another, or placing accurate information in an incorrect time period. This type of error is particularly insidious because the individual facts may be verifiable, but their combination creates misinformation.
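The rule of looking for multiple independent sources can be sketched as a small tally. In this hypothetical Python helper, each piece of evidence is a `(url, supports_claim)` pair you record while reading laterally. Sources on the same hostname count once, which is only a crude proxy for independence (two outlets reprinting the same wire story are not independent either), and the threshold of two independent sources is an assumption, not a standard.

```python
from urllib.parse import urlparse


def _host(url: str) -> str:
    """Normalize a URL to its hostname without a leading 'www.'."""
    host = (urlparse(url).hostname or url).lower()
    return host.removeprefix("www.")


def assess_claim(evidence: list[tuple[str, bool]], min_independent: int = 2) -> str:
    """Classify one claim from (source_url, supports_claim) pairs.

    Sources sharing a hostname are counted once, a crude independence proxy.
    """
    supporting = {_host(url) for url, supports in evidence if supports}
    contradicting = {_host(url) for url, supports in evidence if not supports}
    if supporting and contradicting:
        return "conflicting: investigate further"
    if len(supporting) >= min_independent:
        return "corroborated"
    if contradicting:
        return "contradicted"
    return "insufficient evidence"
```

Treating any disagreement as "conflicting" rather than taking a majority vote matches the advice above: a discrepancy is a reason to dig further, not something to average away.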

Effective correction of misinformation requires a systematic approach to analyzing AI responses. Start by identifying the specific factual claims within the response, then evaluate each claim independently. This process involves asking critical questions about what assumptions the AI made based on your prompt, what perspective or agenda might influence the information, and whether the claims align with what you discover through independent research. For each claim, document whether it is completely accurate, partially misleading, or factually incorrect.
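One way to keep the per-claim documentation consistent is a small record type. This is a minimal Python sketch; `Verdict`, `ClaimRecord`, `summarize`, and `needs_follow_up` are hypothetical names. The three verdict labels come from the text, and the extra "not yet verified" state is an assumption added for claims you have not checked yet.

```python
from dataclasses import dataclass, field
from enum import Enum


class Verdict(Enum):
    ACCURATE = "completely accurate"
    MISLEADING = "partially misleading"
    INCORRECT = "factually incorrect"
    UNVERIFIED = "not yet verified"  # assumed extra state for unchecked claims


@dataclass
class ClaimRecord:
    text: str
    verdict: Verdict = Verdict.UNVERIFIED
    sources: list[str] = field(default_factory=list)  # URLs consulted for this claim
    notes: str = ""  # e.g. which assumption in the prompt led to the error


def summarize(records: list[ClaimRecord]) -> dict[str, int]:
    """Count claims per verdict, including verdicts with zero claims."""
    counts = {v.value: 0 for v in Verdict}
    for record in records:
        counts[record.verdict.value] += 1
    return counts


def needs_follow_up(records: list[ClaimRecord]) -> list[ClaimRecord]:
    """Return every claim not yet confirmed as completely accurate."""
    return [r for r in records if r.verdict is not Verdict.ACCURATE]
```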

When analyzing AI responses, pay special attention to confidence indicators and how the AI presents information. AI systems often present uncertain or speculative information with the same confidence as well-established facts, making it difficult for users to distinguish between verified information and educated guesses. Additionally, examine whether the AI response includes citations or source references—while some AI systems attempt to cite sources, these citations may be inaccurate, incomplete, or point to sources that don’t actually contain the claimed information. If an AI system cites a source, verify that the source actually exists and that the quoted information appears in that source exactly as presented.
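Checking that a cited source really contains a quoted passage can be partly automated once you have the source's text. The sketch below assumes you have already obtained that text yourself (it does no fetching) and treats differences in whitespace and in curly versus straight quotation marks as cosmetic, which is a judgment call; everything else, including letter case, must match exactly. `quote_appears` is a hypothetical helper name.

```python
import re
import unicodedata

# Typographic characters folded to ASCII before comparing (an assumption about
# which differences are cosmetic).
_FOLD = str.maketrans({
    "\u2018": "'", "\u2019": "'",  # curly single quotes
    "\u201c": '"', "\u201d": '"',  # curly double quotes
})


def _normalize(text: str) -> str:
    """Apply NFKC normalization, fold curly quotes, and collapse whitespace runs."""
    text = unicodedata.normalize("NFKC", text).translate(_FOLD)
    return re.sub(r"\s+", " ", text).strip()


def quote_appears(quote: str, source_text: str) -> bool:
    """True if the quote occurs in the source, ignoring only cosmetic differences."""
    return _normalize(quote) in _normalize(source_text)
```

A `True` result only shows that the words are present; you still need to read the surrounding passage to confirm the context. A `False` result can also mean the AI paraphrased, so check manually before concluding the citation is fabricated.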

Most major AI platforms provide mechanisms for users to report inaccurate or misleading responses. Perplexity, for example, allows users to report incorrect answers through a dedicated feedback system or by creating a support ticket. ChatGPT and other AI systems offer similar feedback options that help developers identify and correct problematic responses. When reporting misinformation, state specifically what information was inaccurate, what the correct information is, and, ideally, include links to authoritative sources that support the correction. This feedback can contribute to improving the AI system and helps reduce the chance that the same error is repeated to other users.
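To keep reports specific, it can help to collect the details listed above in a fixed structure before pasting them into a platform's feedback form. This is a hypothetical Python sketch: `ErrorReport` and its fields are assumptions, not any platform's actual reporting format, and the code submits nothing.

```python
from dataclasses import dataclass, field


@dataclass
class ErrorReport:
    """Hypothetical structure for a misinformation report (not any platform's real format)."""
    platform: str
    prompt: str
    incorrect_text: str
    correction: str
    sources: list[str] = field(default_factory=list)

    def to_text(self) -> str:
        """Render the report as plain text to paste into a feedback form."""
        lines = [
            f"Platform: {self.platform}",
            f"Prompt: {self.prompt}",
            f"Inaccurate statement: {self.incorrect_text}",
            f"Correct information: {self.correction}",
            "Supporting sources:",
        ]
        if self.sources:
            lines.extend(f"- {url}" for url in self.sources)
        else:
            lines.append("- (none yet; add authoritative links before submitting)")
        return "\n".join(lines)
```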

Reporting errors serves multiple purposes beyond just correcting individual responses. It creates a feedback loop that helps AI developers understand common failure modes and areas where their systems struggle. Over time, this collective feedback from users helps improve the accuracy and reliability of AI systems. However, it’s important to recognize that reporting errors to the platform is not a substitute for your own fact-checking—you cannot rely on the platform to correct misinformation before you encounter it, so personal verification remains essential.

AI hallucinations are among the most challenging types of misinformation because they are generated with complete confidence and often sound plausible. They occur when AI models create information that sounds reasonable but has no basis in reality. Common examples include inventing fictional people, creating fake citations, or attributing false accomplishments to real individuals. Research has shown that some AI models correctly identify true statements nearly 90 percent of the time but identify false statements less than 50 percent of the time. On false statements specifically, that is worse than random chance: a coin flip would label a falsehood correctly half the time.
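Reading these figures as per-class accuracy makes the comparison with random chance explicit. Assuming that "identifying truths" means the share of true statements labeled true, that "identifying falsehoods" means the share of false statements labeled false, and that a random guesser labels each statement true or false with equal probability:

```latex
\begin{aligned}
\mathrm{recall}_{\mathrm{true}}  &= \frac{\text{true statements labeled true}}{\text{all true statements}} \approx 0.9 \\
\mathrm{recall}_{\mathrm{false}} &= \frac{\text{false statements labeled false}}{\text{all false statements}} < 0.5 = \mathrm{recall}_{\mathrm{false}}^{\text{coin flip}} \\
\text{balanced accuracy} &= \tfrac{1}{2}\left(\mathrm{recall}_{\mathrm{true}} + \mathrm{recall}_{\mathrm{false}}\right) < \tfrac{1}{2}\,(0.9 + 0.5) = 0.7
\end{aligned}
```

A model like this can still look accurate on average because it does well on true statements; its weakness is concentrated on the false ones, which are exactly the statements a fact-checker needs caught.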

To identify potential hallucinations, look for red flags in AI responses: claims about obscure people or events that you cannot verify through any source, citations to articles or books that don’t exist, or information that seems too convenient or perfectly aligned with your prompt. When an AI response includes specific names, dates, or citations, these become prime candidates for verification. If you cannot find any independent confirmation of a specific claim after searching multiple sources, it’s likely a hallucination. Additionally, be skeptical of AI responses that provide extremely detailed information about niche topics without citing sources—this level of specificity combined with lack of verifiable sources is often a sign of fabricated information.
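Because specific dates and citations are prime verification targets, a simple pattern scan can list them for you. The sketch below is a hypothetical Python helper with illustrative regular expressions for years, DOIs, "Surname et al." citations, and quoted titles. It only surfaces candidates to check and cannot tell whether any of them is real; it will also miss personal names, which still need a human eye.

```python
import re

# Illustrative patterns for details that are cheap to check and often fabricated.
# They flag candidates only; a match says nothing about whether the detail is real.
RED_FLAG_PATTERNS = {
    "year": re.compile(r"\b(?:1[5-9]|20)\d{2}\b"),
    # DOI prefix "10.<registrant>/" plus a suffix, excluding trailing punctuation.
    "doi": re.compile(r"\b10\.\d{4,9}/\S*[^\s.,;)]"),
    "author_citation": re.compile(r"\b[A-Z][a-z]+ et al\.?(?: \(\d{4}\))?"),
    "quoted_title": re.compile(r'["\u201c][^"\u201d]{5,}["\u201d]'),
}


def verification_targets(response: str) -> list[tuple[str, str]]:
    """Return (kind, matched_text) pairs to verify, grouped by pattern."""
    targets = []
    for kind, pattern in RED_FLAG_PATTERNS.items():
        targets.extend((kind, m.group(0)) for m in pattern.finditer(response))
    return targets
```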

AI systems have knowledge cutoff dates: unless they are connected to live search or other retrieval tools, they cannot access information published after their training data ends. This is a significant source of misinformation when users ask about recent events, current statistics, or newly published research. An AI response about current market conditions, recent policy changes, or breaking news may be completely inaccurate simply because the AI’s training data predates these developments. When seeking information about recent events or current data, always verify that the AI’s response reflects the most up-to-date information available.

To address outdated information, check the publication dates of the sources you find during fact-checking and compare them with the dates of the figures the AI presents. If the AI response cites statistics from several years ago but presents them as current, that is a clear sign of outdated information. For topics where information changes frequently, such as technology, medicine, law, or economics, always supplement AI responses with the most recent sources available. Consider using AI systems that have access to real-time information or that explicitly state their knowledge cutoff date, so you understand the limits of their responses.
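The date comparison can be sketched in Python. Assuming you have noted the as-of date of the figure the AI quotes and the date of the newest relevant source you found, `freshness_issues` (a hypothetical helper) flags both a newer source and data older than a per-topic limit. The day limits are illustrative assumptions, not recommendations.

```python
from datetime import date

# Illustrative freshness limits in days for fast-changing topics named in the text.
# These numbers are assumptions for the sketch, not recommendations.
MAX_AGE_DAYS = {"technology": 180, "medicine": 365, "law": 365, "economics": 90}
DEFAULT_MAX_AGE_DAYS = 3 * 365


def freshness_issues(topic: str, ai_data_as_of: date,
                     newest_source_date: date, today: date) -> list[str]:
    """List reasons the AI's figure may be outdated; an empty list means no flag."""
    issues = []
    if newest_source_date > ai_data_as_of:
        issues.append(
            f"newer source found ({newest_source_date.isoformat()}) "
            f"than the AI's data ({ai_data_as_of.isoformat()})"
        )
    limit = MAX_AGE_DAYS.get(topic, DEFAULT_MAX_AGE_DAYS)
    age = (today - ai_data_as_of).days
    if age > limit:
        issues.append(f"AI's data is {age} days old; limit for {topic!r} is {limit}")
    return issues
```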

AI systems trained on internet data inherit the biases present in that data, which can manifest as misinformation that favors certain perspectives while excluding others. When evaluating AI responses, assess whether the information presents multiple perspectives on controversial or complex topics, or whether it presents one viewpoint as objective fact. Misinformation often appears when AI systems present subjective opinions or culturally specific viewpoints as universal truths. Additionally, examine whether the AI response acknowledges uncertainty or disagreement among experts on the topic—if experts genuinely disagree, a responsible AI response should acknowledge this rather than presenting one perspective as definitive.

To identify bias-related misinformation, research how different authoritative sources address the same topic. If you find significant disagreement among reputable sources, the AI response may be presenting an incomplete or biased version of the information. Look for whether the AI acknowledges limitations, counterarguments, or alternative interpretations of the information it provides. A response that presents information as more certain than it actually is, or that omits important context or alternative viewpoints, may be misleading even if individual facts are technically accurate.

While human fact-checking remains essential, specialized fact-checking tools and resources can assist in verifying AI-generated information. Websites dedicated to fact-checking, such as Snopes, FactCheck.org, and PolitiFact, maintain databases of verified and debunked claims that can help you quickly identify false statements. Additionally, some AI systems are being developed specifically to help identify when other AI systems are being overconfident about false predictions. These emerging tools use techniques like confidence calibration to help users understand when an AI system is likely to be wrong, even when it expresses high confidence.
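Confidence calibration is usually measured by comparing how confident a system says it is with how often it is actually right. A common metric is expected calibration error (ECE): group predictions into confidence bins, then take the size-weighted average gap between mean confidence and accuracy in each bin. The Python sketch below uses equal-width bins, one common choice; it illustrates the metric only and is not a description of how any particular fact-checking tool works.

```python
def expected_calibration_error(confidences: list[float], correct: list[bool],
                               n_bins: int = 10) -> float:
    """Weighted average gap between stated confidence and observed accuracy per bin."""
    if not confidences or len(confidences) != len(correct):
        raise ValueError("need equal-length, non-empty inputs")
    bins: list[list[tuple[float, bool]]] = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        # Equal-width bins over [0, 1]; a confidence of exactly 1.0 goes in the last bin.
        idx = min(int(conf * n_bins), n_bins - 1)
        bins[idx].append((conf, ok))
    total = len(confidences)
    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(1 for _, ok in members if ok) / len(members)
        ece += (len(members) / total) * abs(avg_conf - accuracy)
    return ece
```

For example, a system that answers four questions with 95 percent confidence but gets only one right has an ECE of 0.7; a well-calibrated system scores close to 0.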

Academic and research institutions increasingly provide resources for evaluating AI-generated content. University libraries, research centers, and educational institutions offer guides on lateral reading, critical evaluation of AI content, and fact-checking techniques. These resources often include step-by-step processes for breaking down AI responses, identifying claims, and verifying information systematically. Taking advantage of these educational resources can significantly improve your ability to identify and correct misinformation in AI responses.

