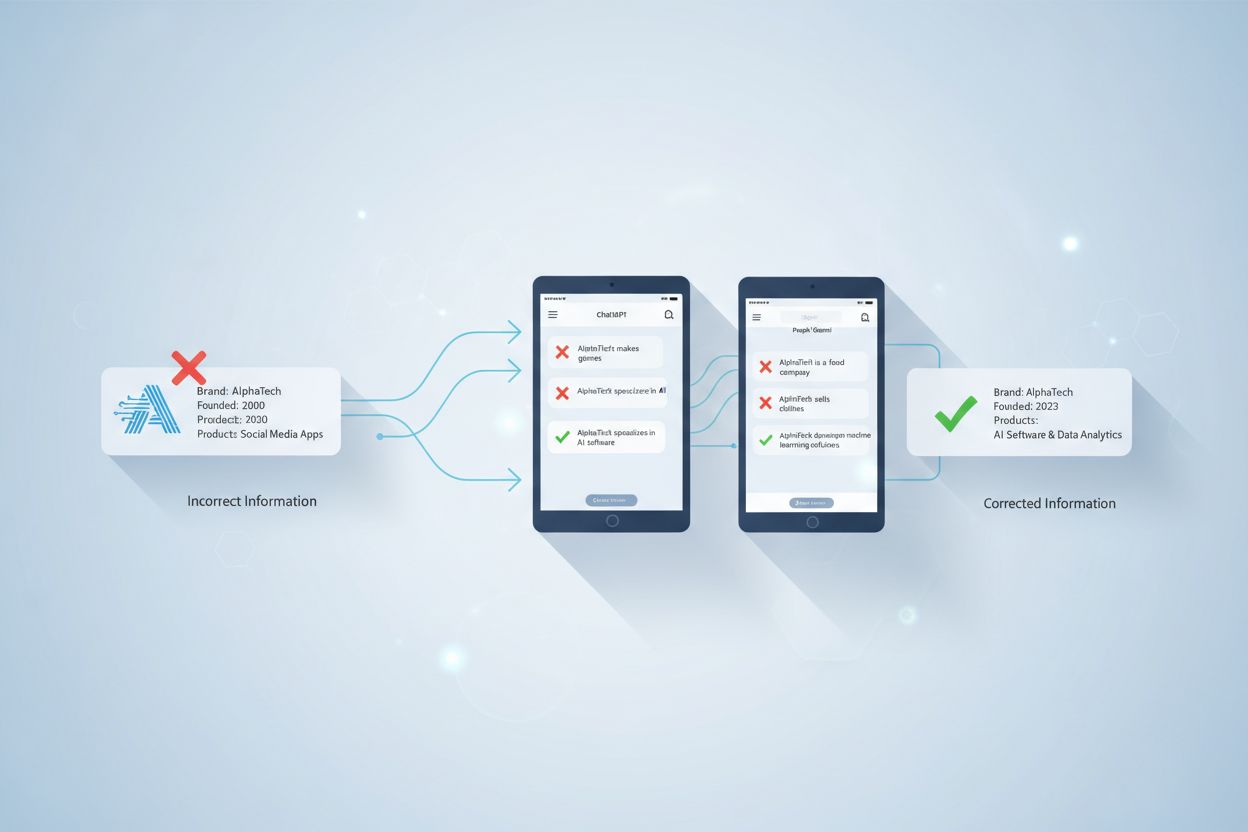

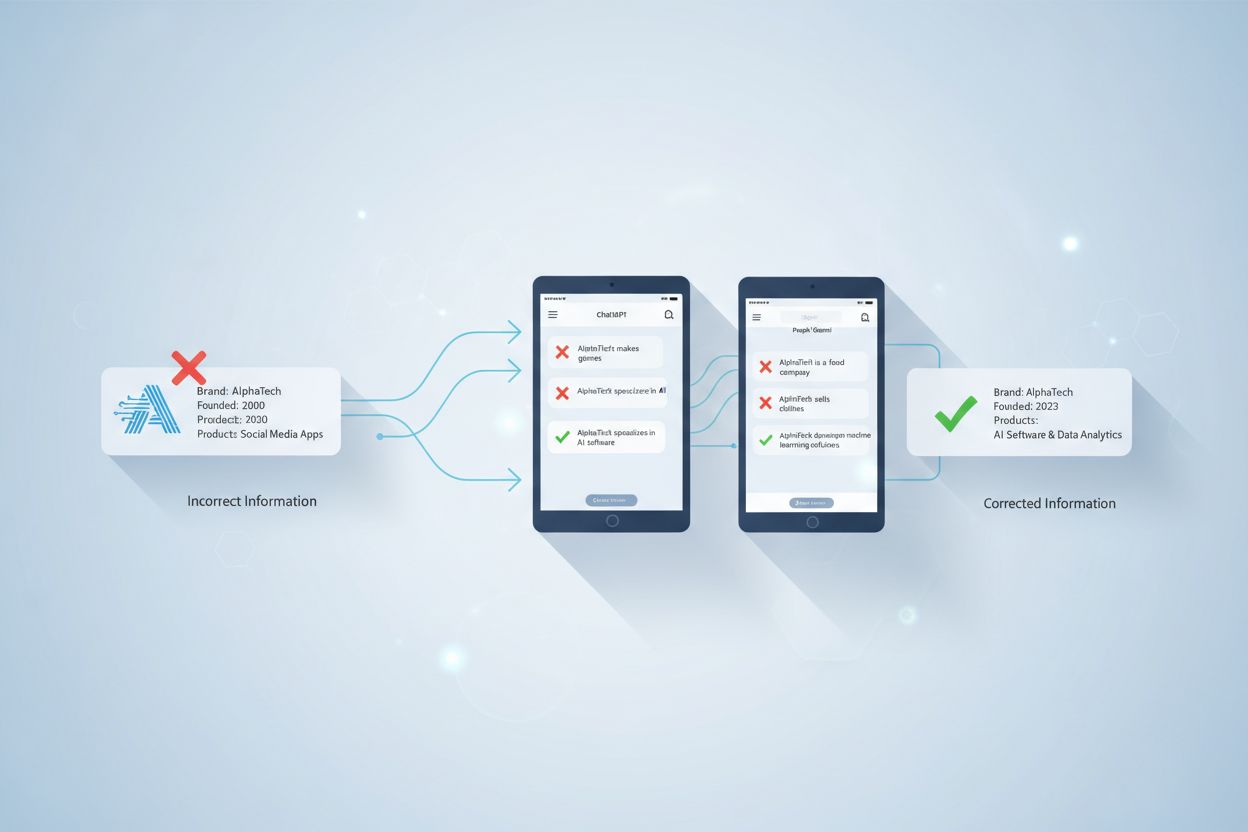

AI Misinformation Correction

Learn how to identify and correct incorrect brand information in AI systems like ChatGPT, Gemini, and Perplexity. Discover monitoring tools, source-level correc...

Learn how to dispute inaccurate AI information, report errors to ChatGPT and Perplexity, and implement strategies to ensure your brand is accurately represented in AI-generated answers.

You can dispute inaccurate AI information by reporting it directly to the AI platform, optimizing your content for AI systems, using brand monitoring tools, and implementing structured data to ensure accurate representation across ChatGPT, Perplexity, and other AI search engines.

Inaccurate information in AI responses is a significant problem for businesses and individuals. When AI systems like ChatGPT, Perplexity, and Gemini generate incorrect information about your brand, products, or services, it can damage your reputation, mislead potential customers, and cost you business. The problem is particularly acute because AI-powered search engines are reshaping how users discover and evaluate information online. With 60% of Google searches ending without a click in 2024, and organic click-through rates dropping by more than half when AI answers appear, accurate brand representation in AI responses has become critical to staying competitive.

AI systems generate inaccurate information through two main mechanisms. These systems combine pre-trained knowledge with real-time search augmentation, so incorrect information can enter a response in two distinct ways. First, outdated or incorrect information from training data can persist in the model’s responses. Second, inaccurate content from current web sources can be retrieved and cited by the AI system. The fundamental issue is that AI models are designed to generate the most likely sequence of words to answer a prompt, not to verify whether the information is correct. The model does not check its output against a source of truth; it produces the response that is most probable given its training data and any retrieved sources.

Most major AI platforms provide mechanisms for users to report inaccurate or problematic responses. Perplexity, for example, allows users to report incorrect or inaccurate answers by using the flag icon located below the answer. Users can also create a support ticket through Perplexity’s support window or email support@perplexity.ai directly with details about the inaccuracy. This reporting mechanism helps the platform identify patterns of misinformation and improve its response quality over time. When reporting inaccurate information, it’s important to be specific about what information is incorrect, provide evidence of the correct information, and explain how the inaccuracy could impact users or your business.
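The three elements of a good report (what is wrong, evidence of the correct information, and the impact) can be captured in a small template. The sketch below is only illustrative: the function name, the field labels, and the Example Co details are assumptions, and no platform requires this particular layout.

```python
def format_inaccuracy_report(platform, prompt, incorrect_claim,
                             correct_claim, evidence_url, impact):
    """Render a plain-text report for a feedback form or support email.

    All field names are hypothetical; no platform requires this layout.
    """
    fields = [
        ("Platform", platform),
        ("Prompt used", prompt),
        ("Incorrect claim", incorrect_claim),
        ("Correct information", correct_claim),
        ("Evidence", evidence_url),
        ("Impact", impact),
    ]
    # Refuse to build a vague report: every field must be filled in.
    for label, value in fields:
        if not value or not value.strip():
            raise ValueError(f"Missing detail: {label}")
    return "\n".join(f"{label}: {value.strip()}" for label, value in fields)


# Hypothetical example values for a fictional company.
report = format_inaccuracy_report(
    platform="Perplexity",
    prompt="When was Example Co founded?",
    incorrect_claim="Example Co was founded in 2015.",
    correct_claim="Example Co was founded in 2009.",
    evidence_url="https://www.example.com/about",
    impact="Customers may doubt our track record.",
)
print(report)
```

Keeping the original prompt in the report makes it easier for a support team to reproduce the answer you are disputing.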

ChatGPT and other AI platforms similarly offer feedback mechanisms, though the specific process varies by platform. Users can typically flag responses as problematic or inaccurate through built-in feedback buttons. However, it’s important to understand that reporting inaccurate information to individual AI platforms is a reactive approach—it addresses specific instances but doesn’t prevent future occurrences. The real challenge is that AI systems continuously generate new responses based on their training data and real-time search results, so a single report may not prevent the same inaccuracy from appearing in future responses to similar queries.

The most effective long-term strategy for disputing inaccurate AI information is to optimize your content specifically for AI system consumption. This approach, known as Generative Engine Optimization (GEO), differs fundamentally from traditional SEO. While traditional SEO focuses on ranking in search results pages, GEO ensures your brand information is accurately cited and embedded within AI-generated responses. This involves structuring and formatting your content to be easily understood, extracted, and cited by AI platforms.

Structured data markup plays a crucial role in this process. By implementing proper schema markup on your website, you provide AI systems with clear, machine-readable information about your business, products, and services. This makes it significantly easier for AI systems to access accurate information about your brand rather than relying on outdated or incorrect data from other sources. Additionally, clear and consistent brand messaging across all digital touchpoints helps AI systems understand and accurately represent your brand. When your website, social media profiles, and other online properties present consistent information, AI systems are more likely to cite this accurate information in their responses.
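As a minimal sketch of what such markup can look like, the snippet below builds a schema.org `Organization` description as JSON-LD and wraps it in the script tag used to embed JSON-LD in a page. The helper names and the Example Co values are assumptions for illustration; the properties you actually need depend on your business.

```python
import json


def build_organization_jsonld(name, url, description, same_as):
    """Return a schema.org Organization description as a JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "description": description,
        # sameAs links the official site to the brand's other profiles.
        "sameAs": list(same_as),
    }
    return json.dumps(data, indent=2)


def wrap_in_script_tag(jsonld):
    """Wrap a JSON-LD string in the script tag used to embed it in HTML."""
    return f'<script type="application/ld+json">\n{jsonld}\n</script>'


# Hypothetical values for a fictional company.
jsonld = build_organization_jsonld(
    name="Example Co",
    url="https://www.example.com",
    description="Example Co makes accounting software for small businesses.",
    same_as=["https://www.linkedin.com/company/example-co"],
)
print(wrap_in_script_tag(jsonld))
```

Generating the markup from one source of facts, rather than hand-editing it per page, also supports the consistency goal described above.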

| Strategy | Implementation | Expected Outcome |
|---|---|---|
| Structured Data Markup | Implement schema.org markup on website | AI systems access accurate, machine-readable data |
| Content Optimization | Create clear, factual, well-sourced content | Improved citation accuracy in AI responses |
| Brand Consistency | Maintain consistent messaging across platforms | Reduced confusion and misrepresentation |
| Regular Updates | Keep content fresh and current | AI systems prioritize recent, authoritative information |
| Authority Building | Develop relationships with industry publications | Enhanced credibility signals for AI systems |

AI brand monitoring tools provide essential visibility into how your brand is being represented across different AI platforms. These specialized tools continuously monitor how AI systems describe your brand, identify inconsistencies, and track changes in brand representation over time. By using these tools, you can detect inaccuracies quickly and implement corrective measures before they cause significant damage to your reputation.

Comprehensive AI brand monitoring platforms offer several key capabilities. They can audit how your brand appears across ChatGPT, Perplexity, and other AI search engines, providing detailed information on brand sentiment, keyword usage, and competitive positioning. These tools often include AI Visibility Scores that combine mentions, citations, sentiment, and rankings to form a reliable overview of your brand’s visibility in AI systems. By regularly monitoring your brand’s AI presence, you can identify patterns of misinformation, track the effectiveness of your corrective efforts, and ensure that accurate information is being cited in AI responses.
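Vendors do not publish a common formula for these scores, so the following is only a sketch of how the four signals could be combined. It assumes each component has already been normalized to the range 0 to 1, and the weights are arbitrary placeholders rather than values used by any real tool.

```python
# Hypothetical weights; real tools do not disclose a shared formula.
DEFAULT_WEIGHTS = {"mentions": 3, "citations": 3, "sentiment": 2, "rankings": 2}


def visibility_score(components, weights=DEFAULT_WEIGHTS):
    """Combine normalized signals (each 0..1) into a 0..100 weighted score."""
    for name in weights:
        if name not in components:
            raise ValueError(f"Missing component: {name}")
        if not 0.0 <= components[name] <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1")
    total_weight = sum(weights.values())
    weighted_sum = sum(weights[name] * components[name] for name in weights)
    return round(100 * weighted_sum / total_weight, 1)


# Hypothetical measurements for one brand.
score = visibility_score(
    {"mentions": 0.5, "citations": 0.5, "sentiment": 1.0, "rankings": 0.0}
)
print(score)
```

A single number is convenient for trend lines, but the individual components are usually more useful when deciding what to fix.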

The advantage of using dedicated monitoring tools is that they provide continuous, systematic tracking rather than relying on occasional manual checks. This allows you to catch inaccuracies quickly and respond with corrective content before the misinformation becomes widespread. Additionally, these tools often provide competitive intelligence, showing you how your brand’s AI representation compares to competitors and identifying opportunities to improve your positioning.
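One simple way to picture systematic tracking is to store the description an AI system gives of your brand and flag when a later description differs noticeably. The sketch below uses Python's standard `difflib` for the comparison; the function name and the 0.8 threshold are assumptions, and dedicated tools likely use more sophisticated comparisons.

```python
from difflib import SequenceMatcher


def description_changed(previous, current, threshold=0.8):
    """Return True when two brand descriptions differ more than the threshold allows.

    The similarity ratio runs from 0.0 (nothing in common) to 1.0 (identical).
    The 0.8 default is an arbitrary starting point, not a recommended value.
    """
    similarity = SequenceMatcher(None, previous.lower(), current.lower()).ratio()
    return similarity < threshold


# Hypothetical snapshots of an AI answer taken at two points in time.
last_week = "Example Co makes accounting software for small businesses."
today = "Example Co is a defunct hardware retailer."
print(description_changed(last_week, today))
```

A flagged change is only a prompt for a human to review the new answer; character-level similarity cannot tell whether the new description is more or less accurate.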

Once you’ve identified inaccurate information about your brand in AI responses, the most effective remedy is to create and distribute authoritative corrective content. This content should directly address the inaccuracies and provide clear, factual information that AI systems can easily extract and cite. The key to success is ensuring that this corrective content is highly authoritative, well-sourced, and optimized for AI understanding.

Effective corrective content follows several best practices. First, it should contain clear, factual statements that directly contradict the inaccurate information. Rather than simply stating what is correct, the content should explain why the previous information was incorrect and provide evidence supporting the accurate information. Second, the content should use structured formatting with clear headings, bullet points, and organized sections that make it easy for AI systems to parse and understand. Third, the content should be regularly updated to reflect the most current information about your brand, signaling to AI systems that this is authoritative, up-to-date information worthy of citation.

Distribution strategy is as important as content creation. Your corrective content should be published on high-authority platforms where AI systems are likely to discover and cite it. This includes your official website, industry publications, and relevant directories. By distributing corrective content across multiple channels, you increase the likelihood that AI systems will encounter and cite this accurate information rather than relying on outdated or incorrect sources. Additionally, amplifying this content through social media and industry influencers can increase its visibility and authority signals, making it more likely to be cited by AI systems.

AI hallucinations represent a particularly challenging form of inaccurate information where AI systems generate completely fabricated facts, citations, or sources. These aren’t simple errors or misinterpretations—they’re instances where the AI confidently presents false information as if it were factual. For example, an AI might cite non-existent research papers, invent fake statistics, or create false quotes attributed to real people. These hallucinations are particularly problematic because they’re often presented with such confidence that users may not question their accuracy.

The root cause of AI hallucinations lies in how these systems are trained and operate. AI models are optimized to generate plausible-sounding text rather than to verify factual accuracy. When an AI system encounters a prompt it doesn’t have clear training data for, it may generate what seems like a reasonable answer based on patterns in its training data, even if that answer is completely fabricated. This is why AI systems often generate fake citations and sources—they’re creating what they believe are plausible references rather than actually retrieving real sources.

To combat AI hallucinations about your brand, you need to ensure that accurate, authoritative information about your brand is widely available and easily discoverable by AI systems. When AI systems have access to clear, factual information from authoritative sources, they’re less likely to hallucinate alternative information. Additionally, by monitoring AI responses regularly, you can catch hallucinations quickly and implement corrective measures before they spread.

In cases where inaccurate AI information causes significant harm to your business, legal action may be necessary. Courts and tribunals have begun holding operators accountable for inaccurate information produced by their AI systems. For example, in 2024 British Columbia’s Civil Resolution Tribunal found Air Canada liable for negligent misrepresentation after the airline’s website chatbot gave a customer incorrect information about bereavement fares. That case concerned a company’s own chatbot rather than a third-party AI platform, but it demonstrates that companies can be held responsible for inaccurate information generated by their AI systems.

Beyond legal action, consulting with brand consultants and SEO experts can help you develop comprehensive strategies for addressing widespread misrepresentation. These professionals can help you identify the root causes of inaccuracies, develop corrective content strategies, and implement systematic approaches to improving your brand’s representation in AI systems. Additionally, working with your legal team to understand your rights and potential remedies is important, particularly if the inaccurate information is causing measurable business harm.

For complex brand issues or widespread misrepresentation, professional help is often necessary. Specialists in Generative Engine Optimization and AI brand management can help you navigate the complexities of correcting inaccurate information across multiple AI platforms. They can also help you implement systematic monitoring and correction processes to prevent future inaccuracies.

Effectively disputing inaccurate AI information requires systematic measurement and tracking of your efforts. By establishing clear metrics and monitoring progress over time, you can determine whether your corrective strategies are working and adjust your approach as needed. Key performance indicators should include accuracy improvements, visibility gains, and competitive positioning changes.

Businesses implementing systematic correction strategies typically see an 80-95% reduction in factual errors within 30 days, along with significant improvements in brand visibility and competitive positioning. These improvements are measurable through regular testing of AI responses to brand-related queries, tracking changes in how AI systems describe your brand, and monitoring sentiment and citation patterns. By establishing baseline measurements before implementing corrective strategies, you can clearly demonstrate the impact of your efforts.
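The baseline comparison can be made concrete with a small calculation. The sketch below assumes you have collected AI responses to the same brand-related queries before and after your corrections, and it treats a response as wrong if it omits any required fact. The function names, the sample responses, and the substring check are all simplifying assumptions; real evaluation would need human review or a more careful matcher.

```python
def error_rate(responses, required_facts):
    """Fraction of responses missing at least one required fact.

    Uses a case-insensitive substring check, which is a crude stand-in
    for real fact verification.
    """
    if not responses:
        raise ValueError("At least one response is required")
    wrong = sum(
        1
        for text in responses
        if not all(fact.lower() in text.lower() for fact in required_facts)
    )
    return wrong / len(responses)


def relative_reduction(baseline_rate, current_rate):
    """Relative drop in error rate compared with the baseline (0.0 if no baseline errors)."""
    if baseline_rate == 0:
        return 0.0
    return (baseline_rate - current_rate) / baseline_rate


# Hypothetical responses to the query "When was Example Co founded?"
facts = ["founded in 2009"]
before = [
    "Example Co was founded in 2015.",
    "Example Co was founded in 2015 in Austin.",
    "Example Co was founded in 2009.",
    "The company started around 2012.",
]
after = [
    "Example Co was founded in 2009.",
    "Example Co was founded in 2009 in Austin.",
    "Founded in 2009, Example Co makes accounting software.",
    "Example Co was founded in 2015.",
]

baseline = error_rate(before, facts)
current = error_rate(after, facts)
print(f"baseline={baseline:.2f} current={current:.2f} "
      f"reduction={relative_reduction(baseline, current):.0%}")
```

Running the same fixed set of queries each time keeps the before and after figures comparable.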

Additionally, tracking business impact metrics such as lead quality, customer inquiries, and conversion rates can help you understand the real-world consequences of inaccurate AI information and the value of your correction efforts. When you can demonstrate that correcting inaccurate AI information leads to improved business outcomes, it becomes easier to justify investment in ongoing monitoring and optimization efforts.

