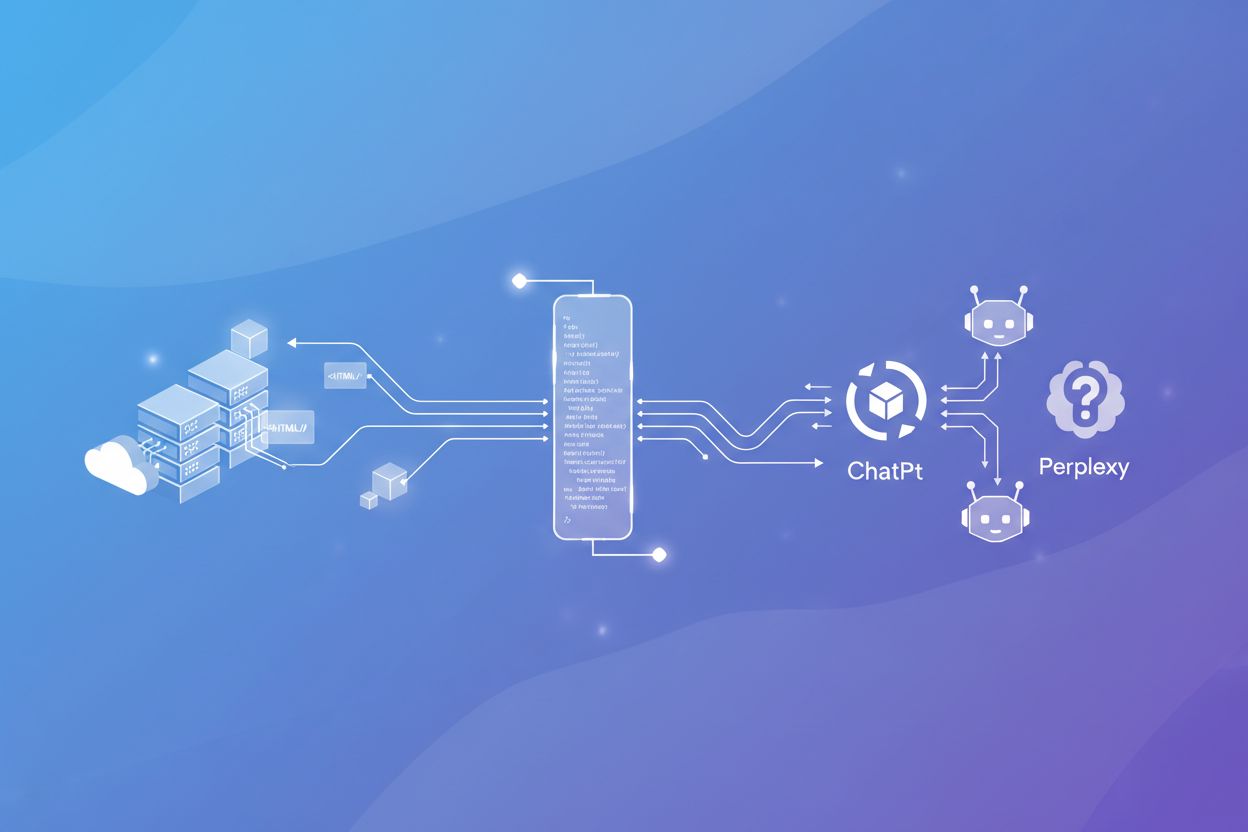

Prerendering for AI Crawlers: Making JavaScript Content Accessible

Learn how prerendering makes JavaScript content visible to AI crawlers like ChatGPT, Claude, and Perplexity. Discover the best technical solutions for AI search...

Learn how to implement infinite scroll while maintaining crawlability for AI crawlers, ChatGPT, Perplexity, and traditional search engines. Discover pagination strategies, URL structure best practices, and technical implementation methods.

Implement a hybrid approach combining infinite scroll with traditional pagination URLs. Create distinct, crawlable component pages with unique URLs that AI crawlers can access without JavaScript execution. Use pushState/replaceState to update URLs as users scroll, and ensure all content is accessible through static HTML fallbacks.

Infinite scroll creates a seamless user experience where content loads automatically as visitors scroll down the page. However, this approach presents a critical problem for AI crawlers such as OpenAI’s GPTBot, Anthropic’s ClaudeBot, and Perplexity’s PerplexityBot. These crawlers don’t scroll through pages or simulate human interaction; they load a page once in a fixed state and extract whatever content is immediately available. When your content loads only through JavaScript triggered by scroll events, AI crawlers miss everything beyond the initial batch, making that content invisible to AI-powered search engines and answer generators.

The fundamental issue stems from how AI crawlers operate differently from traditional search bots. While Google’s Googlebot can render JavaScript to some extent, most AI crawlers lack a full browser environment with a JavaScript engine. They parse HTML and metadata to understand content quickly, prioritizing structured, easily retrievable data. If your content exists only in the DOM after JavaScript execution, these crawlers cannot access it. This means a website with hundreds of products, articles, or listings might appear to have only a dozen items to AI systems.

AI crawlers operate under two critical constraints that make infinite scroll problematic. First, they load pages at a fixed size—typically viewing only what appears in the initial viewport without scrolling. Second, they operate in a fixed state, meaning they don’t interact with the page after the initial load. They won’t click buttons, scroll down, or trigger any JavaScript events. This is fundamentally different from how human users experience your site.

When infinite scroll relies entirely on JavaScript to load additional content, AI crawlers see only the first batch of items. Everything loaded after the initial page render remains hidden. For e-commerce sites, this means product listings beyond the first screen are invisible. For blogs and news sites, only the first few articles appear in AI search results. For directories and galleries, the majority of your content never gets indexed by AI systems.

| Aspect | AI Crawlers | Human Users |
|---|---|---|
| Scrolling behavior | No scrolling; fixed viewport | Scroll to load more content |
| JavaScript execution | Limited or no execution | Full JavaScript support |
| Page interaction | No clicks, no form submission | Full interaction capability |
| Content visibility | Only initial HTML + metadata | All dynamically loaded content |
| Time per page | Seconds (fixed timeout) | Unlimited |

The most effective approach is not to abandon infinite scroll, but to implement it as an enhancement on top of a traditional paginated series. This hybrid model serves both human users and AI crawlers. Users enjoy the seamless infinite scroll experience, while AI crawlers can access all content through distinct, crawlable URLs.

Google’s official recommendations for infinite scroll emphasize creating component pages—separate URLs that represent each page of your paginated series. Each component page should be independently accessible, contain unique content, and have a distinct URL that doesn’t rely on JavaScript to function. For example, instead of loading all products on a single page via infinite scroll, create URLs like /products?page=1, /products?page=2, /products?page=3, and so on.

Each page in your paginated series must have its own full URL that directly accesses the content without requiring user history, cookies, or JavaScript execution. This is essential for AI crawlers to discover and index your content. The URL structure should be clean and semantic, clearly indicating the page number or content range.

Good URL structures:

- example.com/products?page=2
- example.com/blog/page/3
- example.com/items?lastid=567

Avoid these URL structures:

- example.com/products#page=2 (URL fragments don’t work for crawlers)
- example.com/products?days-ago=3 (relative time parameters become stale)
- example.com/products?radius=5&lat=40.71&long=-73.40 (non-semantic parameters)

Each component page should be directly accessible in a browser without any special setup. If you visit /products?page=2, the page should load immediately with the correct content, not require scrolling from page 1 to reach it. This ensures AI crawlers can jump directly to any page in your series.
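To make the "directly accessible" requirement concrete, here is a minimal sketch of how a server-side handler might resolve the requested page from the URL alone. The function name `pageFromUrl` and the `page` query parameter are assumptions for illustration, not part of any particular framework.

```javascript
// Hypothetical helper: resolve the requested page from the URL alone,
// with no cookies, session state, or client-side script involved.
function pageFromUrl(urlString) {
  const url = new URL(urlString);
  const raw = url.searchParams.get("page");
  if (raw === null) return 1; // no parameter means the first page
  // Accept only plain positive integers such as "2" or "17".
  if (!/^[1-9]\d*$/.test(raw)) return null;
  return Number(raw);
}

console.log(pageFromUrl("https://example.com/products?page=2")); // 2
console.log(pageFromUrl("https://example.com/products#page=2")); // 1
```

The second call shows why fragments fail as pagination: the `#page=2` part is not in the query string (and browsers do not send it to the server), so the request is indistinguishable from one for the first page.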

Duplicate content across pages confuses AI crawlers and wastes crawl budget. Each item should appear on exactly one page in your paginated series. If a product appears on both page 1 and page 2, AI systems may struggle to understand which version is canonical, potentially diluting your visibility.

To prevent overlap, establish clear boundaries for each page. If you display 25 items per page, page 1 contains items 1-25, page 2 contains items 26-50, and so on. Avoid buffering or showing the last item from the previous page at the top of the next page, as this creates duplication that AI crawlers will detect.
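The boundary arithmetic above can be sketched in a few lines. The helper names `pageBounds` and `itemsForPage` are hypothetical, and the sketch assumes the full item list is already in a stable sort order.

```javascript
// Hypothetical helper: 1-based, inclusive item range for a page.
function pageBounds(page, perPage) {
  const first = (page - 1) * perPage + 1;
  const last = page * perPage;
  return { first, last };
}

// Slice an already sorted array so that no item lands on two pages.
function itemsForPage(items, page, perPage) {
  const { first, last } = pageBounds(page, perPage);
  return items.slice(first - 1, last);
}

console.log(pageBounds(2, 25)); // { first: 26, last: 50 }
```

Because every page is derived from the same formula, consecutive pages cannot share an item as long as the underlying order does not change between requests.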

Help AI crawlers understand that each page is distinct by creating unique title tags and H1 headers for every component page. Instead of generic titles like “Products,” use descriptive titles that indicate the page number and content focus.

Example title tags:

<title>Premium Coffee Beans | Shop Our Selection</title>

<title>Premium Coffee Beans | Page 2 | More Varieties</title>

<title>Premium Coffee Beans | Page 3 | Specialty Blends</title>

Example H1 headers:

<h1>Premium Coffee Beans - Our Complete Selection</h1>

<h1>Premium Coffee Beans - Page 2: More Varieties</h1>

<h1>Premium Coffee Beans - Page 3: Specialty Blends</h1>

These unique titles and headers signal to AI crawlers that each page contains distinct content worth indexing separately. This increases the likelihood that your deeper pages appear in AI-generated answers and summaries.
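If titles are generated from a template, a small function is one way to keep the pattern consistent. This is only a sketch: `componentTitle` is a hypothetical name, and the `focus` text for each page is assumed to come from your own content.

```javascript
// Hypothetical helper: page 1 keeps the plain title, later pages add
// an explicit page marker so every title in the series is unique.
function componentTitle(baseTitle, page, focus) {
  if (page === 1) return `${baseTitle} | ${focus}`;
  return `${baseTitle} | Page ${page} | ${focus}`;
}

console.log(componentTitle("Premium Coffee Beans", 2, "More Varieties"));
// Premium Coffee Beans | Page 2 | More Varieties
```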

AI crawlers discover content by following links. If your pagination links are hidden or only appear through JavaScript, crawlers won’t find your component pages. You must explicitly expose navigation links in a way that crawlers can detect and follow.

On your main listing page (page 1), include a visible or hidden link to page 2. This can be implemented in several ways:

Option 1: Visible “Next” Link

<a href="/products?page=2">Next</a>

Place this link at the end of your product list. When users scroll down and trigger infinite scroll, you can hide this link via CSS or JavaScript, but crawlers will still see it in the HTML.

Option 2: Hidden Link in Noscript Tag

<noscript>

<a href="/products?page=2">Next Page</a>

</noscript>

The <noscript> tag displays content only when JavaScript is disabled. Crawlers treat this as regular HTML and follow the link, even though human users with JavaScript enabled won’t see it.

Option 3: Load More Button with Href

<a href="/products?page=2" id="load-more" class="button">Load More</a>

If you use a “Load More” button, include the next page URL in the href attribute. JavaScript can prevent the default link behavior and trigger infinite scroll instead, but crawlers will follow the href to the next page.
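The script side of Option 3 might look like the sketch below. `enhanceLoadMore` and the `loadPage` callback are hypothetical names; `loadPage` stands in for whatever code fetches a component page and appends its items.

```javascript
// Hypothetical helper: upgrade a plain "Load More" link in place.
// `link` is the anchor element; `loadPage` is your own function that
// fetches a component page URL and appends its items to the list.
function enhanceLoadMore(link, loadPage) {
  link.addEventListener("click", (event) => {
    event.preventDefault(); // stay on the current page
    loadPage(link.getAttribute("href")); // reuse the crawlable URL
  });
}

// Browser usage, guarded so the snippet also loads outside a browser.
if (typeof document !== "undefined") {
  const link = document.getElementById("load-more");
  if (link) {
    enhanceLoadMore(link, (url) => console.log("would load", url));
  }
}
```

The link keeps working as ordinary navigation if the script never runs, which is the case a non-rendering crawler represents.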

Each component page should include navigation links to the other pages in the series, for example previous and next links that are present in the initial HTML.

Important: Always link to the main page (page 1) without a page parameter. If your main page is /products, never link to /products?page=1. Instead, ensure that /products?page=1 redirects to /products to maintain a single canonical URL for the first page.
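The first-page rule can be expressed as a small check that runs before rendering. This is a sketch under assumptions: `firstPageRedirect` is a hypothetical name, and the choice of redirect status (a permanent 301 is typical) is left to the caller.

```javascript
// Hypothetical helper: return the URL to redirect to, or null when the
// requested URL is already the canonical one.
function firstPageRedirect(urlString) {
  const url = new URL(urlString);
  if (url.searchParams.get("page") !== "1") return null;
  url.searchParams.delete("page");
  // Keep any other parameters (sorting, filters) intact.
  const rest = url.searchParams.toString();
  return url.origin + url.pathname + (rest ? `?${rest}` : "");
}

console.log(firstPageRedirect("https://example.com/products?page=1"));
// https://example.com/products
```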

While AI crawlers need distinct URLs, human users expect a seamless infinite scroll experience. Use pushState and replaceState from the History API to update the browser URL as users scroll, creating the best of both worlds.

pushState adds a new entry to the browser history, allowing users to navigate back through pages they’ve scrolled through. replaceState updates the current history entry without creating a new one. For infinite scroll, use pushState when users actively scroll to new content, as this allows them to use the back button to return to previous scroll positions.

// When new content loads via infinite scroll

window.history.pushState({page: 2}, '', '/products?page=2');
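A slightly fuller sketch of the same idea follows. The names `urlForPage` and `createUrlSync` are hypothetical, and the history object is passed in as a parameter so the logic can be exercised outside a browser.

```javascript
// Hypothetical helper: build the component URL for a page, keeping
// page 1 on the bare path.
function urlForPage(basePath, page) {
  return page === 1 ? basePath : `${basePath}?page=${page}`;
}

// Update the address bar only when the visible page actually changes.
// `history` is injected; in a browser it would be window.history.
function createUrlSync(history, basePath) {
  let current = 1;
  return function onPageVisible(page) {
    if (page === current) return false; // nothing to update
    current = page;
    history.pushState({ page }, "", urlForPage(basePath, page));
    return true;
  };
}
```

In a browser this could be wired up as `const onPageVisible = createUrlSync(window.history, "/products")` and called from whatever detects that a new batch has scrolled into view, such as an IntersectionObserver callback. Swapping `pushState` for `replaceState` inside the helper gives the variant that does not add history entries.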

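This approach keeps the address bar aligned with the component page whose content is on screen, so a URL that a user bookmarks or shares is one that a crawler can also fetch directly, and the back button returns users to content they have already scrolled past.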

Before launching your infinite scroll solution, thoroughly test that AI crawlers can access all your content.

The simplest test is to disable JavaScript in your browser and navigate through your site. Use a browser extension like “Toggle JavaScript” to turn off scripts, then visit your listing pages. You should be able to access all pages through pagination links without JavaScript. Whatever content disappears when JavaScript is disabled is invisible to AI crawlers.
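The same check can be roughly automated against the raw HTML response. The sketch below assumes pagination uses a `page` query parameter; `extractHrefs` and `hasLinkToPage` are hypothetical helpers, and the regular expression is only suitable for a smoke test, not as a full HTML parser.

```javascript
// Hypothetical helper: collect href values from anchor tags in raw HTML,
// roughly what a non-rendering crawler has to work with.
function extractHrefs(html) {
  const hrefs = [];
  const pattern = /<a\b[^>]*\bhref\s*=\s*["']([^"']+)["']/gi;
  let match;
  while ((match = pattern.exec(html)) !== null) {
    hrefs.push(match[1]);
  }
  return hrefs;
}

// True if any link in the HTML points at the given page number.
function hasLinkToPage(html, page) {
  return extractHrefs(html).some((href) => {
    const query = (href.split("?")[1] || "").split("#")[0];
    return new URLSearchParams(query).get("page") === String(page);
  });
}

const sample = '<ul><li>Item 25</li></ul><a href="/products?page=2">Next</a>';
console.log(hasLinkToPage(sample, 2)); // true
```

Feeding it the unrendered response body of page 1 and asking for page 2 is a quick way to confirm that the next link does not depend on script execution.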

If your site has 50 pages of products, visiting /products?page=999 should return a 404 error, not a blank page or redirect to page 1. This signals to crawlers that the page doesn’t exist, preventing them from wasting crawl budget on non-existent pages.
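As a minimal sketch, the status decision reduces to a range check. `statusForPage` is a hypothetical name, and treating an empty catalogue as still having a page 1 is an assumption made here.

```javascript
// Hypothetical helper: decide the HTTP status for a requested page.
function statusForPage(page, totalItems, perPage) {
  // Assumption: an empty catalogue still serves an (empty) first page.
  const lastPage = Math.max(1, Math.ceil(totalItems / perPage));
  const valid = Number.isInteger(page) && page >= 1 && page <= lastPage;
  return valid ? 200 : 404;
}

console.log(statusForPage(999, 1250, 25)); // 404
console.log(statusForPage(50, 1250, 25)); // 200
```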

As users scroll and new content loads, verify that the URL in the address bar updates correctly. The page parameter should reflect the current scroll position. If users scroll to page 3 content, the URL should show /products?page=3.

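Use Google Search Console’s URL Inspection tool to test how your paginated pages are rendered and indexed. Inspect a few component pages and verify that Google can see all the content. Treat a pass as necessary rather than sufficient: Googlebot renders JavaScript and most AI crawlers do not, so content that shows up only in the rendered view may still be invisible to them.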

Beyond pagination, use Schema.org structured data to help AI crawlers understand your content more deeply. Add markup for products, articles, reviews, or other relevant types to each component page.

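<script type="application/ld+json">
{
  "@context": "https://schema.org",
  "@type": "Product",
  "name": "Premium Coffee Beans",
  "description": "High-quality arabica coffee beans",
  "offers": {
    "@type": "Offer",
    "price": "12.99",
    "priceCurrency": "USD"
  }
}
</script>

In Schema.org, price belongs to an Offer nested under the product’s offers property, and the vocabulary has no pagination field for products, so page position is conveyed by the URL and navigation links instead. The currency shown here is a placeholder. Structured data provides explicit signals about your content’s meaning and context, increasing the likelihood that AI systems accurately represent your information in generated answers.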
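For listing pages, one option is to describe the page's items as a Schema.org ItemList generated from the same data that renders the page. The sketch below is illustrative only: `itemListJsonLd` is a hypothetical helper, the `{ name, url }` item shape is assumed, and numbering positions across the whole series rather than per page is a design choice, not a requirement.

```javascript
// Hypothetical helper: describe one component page as a Schema.org ItemList.
// `items` is assumed to hold that page's items as { name, url } objects.
function itemListJsonLd(items, page, perPage) {
  const offset = (page - 1) * perPage;
  return {
    "@context": "https://schema.org",
    "@type": "ItemList",
    itemListElement: items.map((item, index) => ({
      "@type": "ListItem",
      position: offset + index + 1, // position within the whole series
      name: item.name,
      url: item.url,
    })),
  };
}

const pageTwo = [{ name: "Ethiopian Blend", url: "https://example.com/p/26" }];
console.log(JSON.stringify(itemListJsonLd(pageTwo, 2, 25), null, 2));
```

The serialized object would go inside a `<script type="application/ld+json">` element in the server-rendered HTML of that component page.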

Mistake 1: Relying Solely on JavaScript for Pagination

If pagination links only appear after JavaScript execution, crawlers won’t find them. Always include pagination links in the initial HTML.

Mistake 2: Using URL Fragments for Pagination

URLs like /products#page=2 don’t work for crawlers. Fragments are client-side only and invisible to servers. Use query parameters or path segments instead.

Mistake 3: Creating Overlapping Content

If the same product appears on multiple pages, AI crawlers may index duplicates or struggle to determine the canonical version. Maintain strict page boundaries.

Mistake 4: Ignoring Mobile Crawlers

Ensure your pagination works on mobile viewports. Some AI crawlers may use mobile user agents, and your pagination must function across all screen sizes.

Mistake 5: Not Testing Crawler Accessibility

Don’t assume your pagination works for crawlers. Test by disabling JavaScript and verifying that all pages are accessible through links.

After implementing pagination for infinite scroll, monitor how your content appears in AI search results. Track which pages are indexed by AI crawlers and whether your content appears in ChatGPT, Perplexity, and other AI answer generators. Use tools to audit your site’s crawlability and ensure AI systems can access all your content.

The goal is to create a seamless experience where human users enjoy infinite scroll while AI crawlers can systematically discover and index every page of your content. This hybrid approach maximizes your visibility across both traditional search and emerging AI-powered discovery channels.
