How to Measure Content Performance in AI Search Engines

Learn how to measure AI search performance across ChatGPT, Perplexity, and Google AI Overviews. Discover key metrics, KPIs, and monitoring strategies for tracking brand visibility in AI-generated answers.

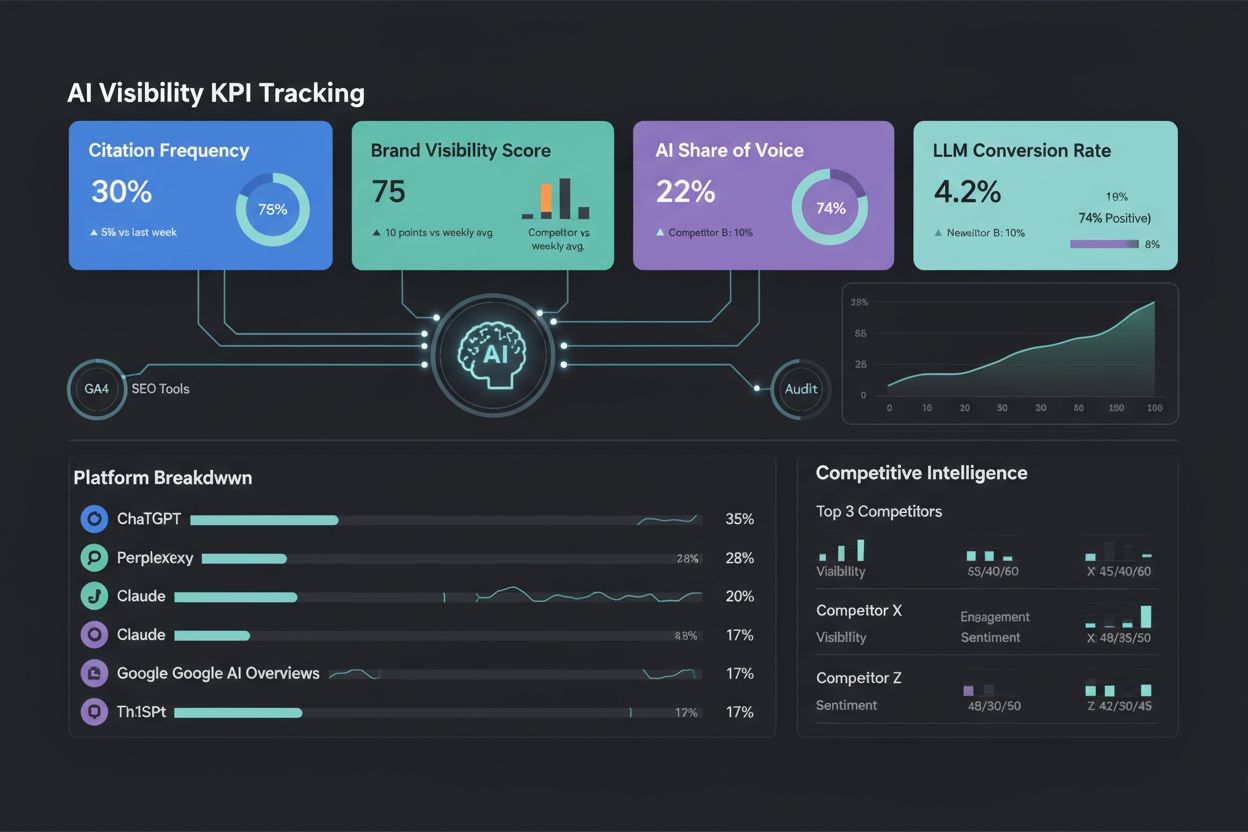

Measure AI search performance using three core KPIs: AI Signal Rate (brand visibility in AI answers), Answer Accuracy Rate (credibility of AI-generated content about your brand), and AI-Influenced Conversion Rate (business impact from AI-sourced traffic). Track these metrics across ChatGPT, Perplexity, Gemini, and Google AI Overviews using dedicated monitoring platforms.

Measuring AI search performance represents a fundamental shift from traditional search engine optimization metrics. Unlike conventional search where users click through to websites, AI-powered search engines like ChatGPT, Perplexity, and Google AI Overviews generate direct answers to user queries, often without requiring users to visit external websites. This shift breaks the traditional clickstream, making legacy KPIs like impressions, rankings, and click-through rates insufficient for understanding your brand’s true visibility and impact in AI-driven discovery environments. The challenge lies in measuring what happens when AI systems answer questions directly about your brand, products, or services without generating the trackable interactions that traditional analytics platforms capture.

The emergence of AI answer generators has created an entirely new discovery channel that marketers must understand and measure. When consumers ask Perplexity about the best solutions in your category or request ChatGPT to compare your brand with competitors, your visibility depends on whether AI systems have access to accurate information about your company and whether they choose to cite your content as a trusted source. This requires a completely different measurement framework than what worked for Google search optimization.

AI Signal Rate represents the foundational metric for understanding your brand’s presence in AI-generated answers. This KPI measures how frequently your brand appears when AI tools answer questions within your category, regardless of whether users click through to your website. The metric answers the critical question: “Is your brand visible when AI tools answer questions that matter to your business?”

The formula for calculating AI Signal Rate is straightforward: divide the number of AI answers that mention your brand by the total number of AI questions asked in your category. For example, if you monitor 100 questions about your industry and your brand appears in 45 of those answers, your AI Signal Rate would be 45 percent. This metric becomes increasingly valuable when tracked over time, allowing you to measure whether your AI optimization efforts are improving your visibility in these critical discovery moments.
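As a minimal sketch of that calculation, the snippet below counts brand mentions across a set of collected answers. The function name `ai_signal_rate`, the brand "Acme", and the sample answers are all hypothetical, and a plain substring match is only a rough stand-in for however you decide an answer "mentions" your brand.

```python
def ai_signal_rate(answers: list[str], brand: str) -> float:
    """Share of monitored AI answers that mention the brand (0.0 to 1.0)."""
    if not answers:
        raise ValueError("need at least one monitored answer")
    needle = brand.casefold()
    # Assumption: a case-insensitive substring match counts as a mention.
    mentions = sum(1 for text in answers if needle in text.casefold())
    return mentions / len(answers)


# Hypothetical sample: 45 of 100 answers mention the made-up brand "Acme".
sample = ["Acme is a popular choice."] * 45 + ["Other vendors lead here."] * 55
rate = ai_signal_rate(sample, "Acme")
print(f"AI Signal Rate: {rate:.0%}")
```

Running the same function on each measurement cycle's answers gives you the trend line the metric is meant to provide.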

AI Signal Rate varies significantly by market position and industry maturity. Leaders in established categories often achieve signal rates between 60 and 80 percent, while challenger brands typically start at 5 to 10 percent. The key is to track direction and improvement rather than pursue perfection immediately. As you optimize your content for AI systems and ensure your brand information is accurate and accessible, your signal rate should gradually increase. This metric also enables competitive benchmarking: you can compare your visibility against direct competitors and understand your relative market position in AI-driven discovery.

Answer Accuracy Rate measures how correctly and credibly AI systems represent your brand when they mention it in generated answers. This metric is critical because visibility without accuracy creates significant risk—if AI systems provide incorrect information about your products, services, or company values, you damage credibility with potential customers who rely on these answers for decision-making. The metric answers: “When AI tools mention your brand, do they represent it accurately and in alignment with your brand identity?”

Measuring answer accuracy requires establishing a Brand Canon—a comprehensive document containing your mission statement, core values, product specifications, service descriptions, and any other information you want AI systems to know about your organization. Once you’ve defined your canon, you evaluate each AI-generated answer mentioning your brand against specific criteria. Each answer is typically scored on three key dimensions: factual correctness (does the AI state accurate facts about your brand?), alignment with canon (does the representation match your official brand positioning?), and hallucination presence (does the AI invent false claims or features?). Each dimension is worth 0 to 2 points, creating a maximum score of 6 points per answer.

Brands with strong content foundations and clear brand documentation typically achieve Answer Accuracy Rates above 85 percent, indicating that AI systems consistently represent them correctly. Scores below 70 percent signal real risk and suggest that your content may be unclear, incomplete, or contradictory, leading AI systems to generate inaccurate representations. This metric directly impacts your brand reputation in AI search environments and should be monitored continuously as AI systems evolve and encounter new information about your organization.
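The rubric and thresholds above can be sketched in code. The text does not spell out how 6-point scores become a percentage, so this example assumes the rate is points earned divided by points possible across all scored answers. It also assumes a higher hallucination score means fewer invented claims. The class name, the function names, and the "watch" label for the band between 70 and 85 percent are illustrative only.

```python
from dataclasses import dataclass

MAX_PER_DIMENSION = 2
DIMENSIONS = 3


@dataclass(frozen=True)
class RubricScore:
    factual_correctness: int  # 0 to 2
    canon_alignment: int      # 0 to 2
    hallucination_free: int   # 0 to 2; assumption: 2 means no invented claims

    def total(self) -> int:
        parts = (self.factual_correctness, self.canon_alignment, self.hallucination_free)
        if any(not 0 <= p <= MAX_PER_DIMENSION for p in parts):
            raise ValueError("each dimension must be scored 0, 1, or 2")
        return sum(parts)


def answer_accuracy_rate(scores: list[RubricScore]) -> float:
    """Assumed definition: points earned / points possible."""
    if not scores:
        raise ValueError("need at least one scored answer")
    possible = MAX_PER_DIMENSION * DIMENSIONS * len(scores)
    return sum(s.total() for s in scores) / possible


def risk_band(rate: float) -> str:
    if rate > 0.85:
        return "strong"
    if rate < 0.70:
        return "at risk"
    return "watch"  # hypothetical label for the middle band


scores = [RubricScore(2, 2, 2), RubricScore(2, 1, 2), RubricScore(1, 1, 0)]
rate = answer_accuracy_rate(scores)
print(f"{rate:.1%} -> {risk_band(rate)}")
```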

AI-Influenced Conversion Rate connects your AI search visibility directly to business outcomes by measuring the conversion rate among users who discovered your brand through AI-powered search engines. This is the metric that resonates with finance teams and executives because it demonstrates concrete return on investment from your AI search optimization efforts. The formula divides conversions from AI-influenced sessions by total AI-influenced sessions, revealing what percentage of users who found you through AI actually complete desired actions like purchases, sign-ups, or inquiries.

Measuring AI-influenced conversions requires implementing proper tracking mechanisms to identify traffic originating from AI platforms. There are three primary approaches: direct tracking using UTM parameters or custom channel groupings to identify AI referrers, behavioral inference by analyzing patterns like branded search occurrences or deep page entry points that suggest AI discovery, and post-conversion surveys asking users “What led you here today?” to capture self-reported AI discovery. Each method has strengths and limitations, and many organizations use a combination to build a comprehensive picture of AI-influenced conversions.
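The direct-tracking approach, combined with the conversion formula, might look like the sketch below. The hostname list is an assumption for illustration, not a verified list of what each platform sends as a referrer, so check it against your own analytics data. The session dictionaries and function names are hypothetical as well.

```python
from typing import Optional
from urllib.parse import parse_qs, urlparse

# Assumption: illustrative hostnames only; confirm against your own referrer logs.
AI_HOSTS = {"chatgpt.com", "perplexity.ai", "gemini.google.com",
            "copilot.microsoft.com", "claude.ai"}


def is_ai_influenced(referrer: str, landing_url: str) -> bool:
    """True if the referrer host or a utm_source value matches a known AI host."""
    host = (urlparse(referrer).hostname or "").removeprefix("www.")
    if host in AI_HOSTS:
        return True
    utm_sources = parse_qs(urlparse(landing_url).query).get("utm_source", [])
    return any(src.lower() in AI_HOSTS for src in utm_sources)


def ai_influenced_conversion_rate(sessions: list[dict]) -> Optional[float]:
    """Conversions from AI-influenced sessions / total AI-influenced sessions."""
    ai_sessions = [s for s in sessions
                   if is_ai_influenced(s["referrer"], s["landing_url"])]
    if not ai_sessions:
        return None  # nothing to measure yet
    return sum(1 for s in ai_sessions if s["converted"]) / len(ai_sessions)


# Hypothetical sessions exported from an analytics tool.
sessions = [
    {"referrer": "https://www.perplexity.ai/search?q=crm",
     "landing_url": "https://example.com/pricing", "converted": True},
    {"referrer": "",
     "landing_url": "https://example.com/?utm_source=chatgpt.com", "converted": False},
    {"referrer": "https://www.google.com/",
     "landing_url": "https://example.com/", "converted": True},
    {"referrer": "", "landing_url": "https://example.com/blog", "converted": False},
]
conversion_rate = ai_influenced_conversion_rate(sessions)
print(conversion_rate)
```

Referrer data alone will miss sessions where the referrer is stripped, which is why the behavioral and survey methods remain useful complements.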

Data from leading organizations shows that AI-influenced sessions often convert at rates between 3 and 16 percent, which frequently exceeds average traffic conversion rates. This higher conversion rate makes sense because users who discover your brand through AI answers have already received credible third-party validation: the AI system itself has recommended or mentioned your solution. This pre-qualification effect means AI-sourced traffic often represents higher-intent users compared to cold search traffic, making it particularly valuable for driving business growth.

| Metric Category | Key Metrics | Purpose | Measurement Method |
|---|---|---|---|
| Visibility | AI Citation Rate, Primary Source Rate, AI Share of Voice, Topic Coverage, Entity Presence, AI Snippet Visibility | Measure how often your brand appears in AI answers | Query monitoring across platforms |
| Credibility | Answer Accuracy Rate, Content Depth, Semantic Relevance, Trust Signal Strength, Source Context Integrity | Evaluate how accurately AI represents your brand | Rubric-based evaluation of answers |
| Outcomes | Zero Click Impact Score, Branded Query Retention, Cross Channel Lift, AI-Influenced Conversion Rate, Revenue per AI Visit | Connect visibility to business results | Analytics integration and attribution |

Implementing effective AI search performance measurement requires a structured approach that moves beyond spot-checking individual answers. Start by building a comprehensive query set of approximately 100 prompts that represent how your target audience actually searches for solutions in your category. Structure these prompts across different intent types: category questions (general information about your industry), comparison queries (how your solution compares to alternatives), educational content (how-to and learning questions), and problem-solving prompts (specific challenges your solution addresses). Allocate roughly 80 percent of your queries to unbranded searches that don’t mention your company and 20 percent to branded searches that specifically reference your brand.
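To make the allocation concrete, here is a small sketch that turns the 100-prompt, 80/20 guideline into per-intent quotas. Splitting evenly across the four intent types is an assumption made for the example, since the text does not prescribe how prompts should be distributed among them. All names are hypothetical.

```python
INTENT_TYPES = ("category", "comparison", "educational", "problem_solving")


def split_evenly(count: int, buckets: int) -> list[int]:
    """Distribute count across buckets, giving any remainder to the first ones."""
    base, extra = divmod(count, buckets)
    return [base + (1 if i < extra else 0) for i in range(buckets)]


def plan_query_set(total: int = 100, unbranded_share: float = 0.8) -> dict:
    unbranded = round(total * unbranded_share)
    branded = total - unbranded
    # Assumption: an even split across intent types.
    unbranded_counts = split_evenly(unbranded, len(INTENT_TYPES))
    branded_counts = split_evenly(branded, len(INTENT_TYPES))
    return {
        intent: {"unbranded": u, "branded": b}
        for intent, u, b in zip(INTENT_TYPES, unbranded_counts, branded_counts)
    }


plan = plan_query_set()
for intent, counts in plan.items():
    print(intent, counts)
```

With the defaults, each intent type gets 20 unbranded and 5 branded prompts. Adjust the weights if, say, comparison queries matter more in your category.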

Once you’ve built your query set, establish a baseline by running these prompts across all relevant AI platforms: ChatGPT, Perplexity, Google AI Overviews, Microsoft Copilot, and Claude. Document your brand’s appearance in answers, the accuracy of the information provided, any misattributions or hallucinations, and which other brands appear in the same answers. This baseline becomes your starting point for measuring improvement and understanding your current position in AI search environments.
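One way to keep baseline observations comparable over time is to record them in a fixed structure. The sketch below is only an illustration: the field names, the sample prompts, and the competitor names "RivalCo" and "OtherCo" are all invented, and it captures only brand presence and co-mentioned competitors, not accuracy notes.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class BaselineObservation:
    platform: str
    prompt: str
    brand_mentioned: bool
    competitors: list[str] = field(default_factory=list)


def summarize_baseline(observations: list[BaselineObservation]):
    """Return (mention rate per platform, how often each competitor appeared)."""
    asked = Counter(o.platform for o in observations)
    mentioned = Counter(o.platform for o in observations if o.brand_mentioned)
    rate_by_platform = {p: mentioned[p] / asked[p] for p in asked}
    competitor_counts = Counter(c for o in observations for c in o.competitors)
    return rate_by_platform, competitor_counts


# Hypothetical baseline run.
observations = [
    BaselineObservation("ChatGPT", "best crm for startups", True, ["RivalCo"]),
    BaselineObservation("ChatGPT", "crm pricing comparison", False, ["RivalCo", "OtherCo"]),
    BaselineObservation("Perplexity", "best crm for startups", True),
    BaselineObservation("Perplexity", "crm pricing comparison", True, ["OtherCo"]),
]
rates, competitor_counts = summarize_baseline(observations)
print(rates)
print(competitor_counts.most_common())
```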

Simultaneously, audit your content foundation to ensure it supports strong AI search performance. Evaluate your website for completeness (do you address all questions your audience asks?), clarity (is your information easy for AI systems to understand and extract?), entity accuracy (are your company details, locations, and key information correct?), and trust signals (do you have credentials, testimonials, and authority indicators that AI systems recognize?). Many visibility problems in AI search stem from incomplete or unclear content rather than from AI system limitations.

Manual evaluation of AI answers works for initial audits but cannot sustain ongoing measurement. Leading organizations implement hybrid monitoring systems that combine automation with human oversight to evaluate hundreds or thousands of AI answers consistently. These systems typically work by automatically generating and executing your query set across AI platforms, feeding the results to an AI agent that evaluates each answer against your established rubrics, and assigning confidence scores to each evaluation. Answers below a specified confidence threshold (typically 75 percent initially) are escalated to human reviewers who verify the evaluation and provide feedback that trains the system for improved accuracy.
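The escalation step can be sketched as a simple triage over automated evaluations. The `Evaluation` record and the `triage` function are hypothetical names, and the 0.75 default mirrors the initial threshold mentioned above. How the confidence score is produced is left to whatever evaluator you use.

```python
from dataclasses import dataclass


@dataclass
class Evaluation:
    answer_id: str
    rubric_score: int   # 0 to 6, from the automated evaluator
    confidence: float   # evaluator's confidence in its own score, 0.0 to 1.0


def triage(evaluations: list[Evaluation], threshold: float = 0.75):
    """Split evaluations into auto-accepted and human-review queues."""
    accepted, needs_review = [], []
    for ev in evaluations:
        # Strictly below the threshold goes to a human reviewer.
        (needs_review if ev.confidence < threshold else accepted).append(ev)
    return accepted, needs_review


# Hypothetical evaluator output.
evaluations = [
    Evaluation("a1", 6, 0.93),
    Evaluation("a2", 3, 0.61),
    Evaluation("a3", 5, 0.75),
]
accepted, needs_review = triage(evaluations)
print([e.answer_id for e in accepted], [e.answer_id for e in needs_review])
```

Reviewer corrections on the escalated answers are the feedback that lets you raise or lower the threshold over time.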

This approach ensures your measurement is scalable, consistent, explainable, and cost-efficient while maintaining high quality. The system learns from human feedback, continuously improving its ability to evaluate answer accuracy and identify credibility issues. Most organizations find that bi-weekly measurement cycles provide sufficient frequency to track performance trends while remaining manageable in terms of resource requirements.

Once you’ve established baseline metrics and implemented ongoing monitoring, the optimization cycle begins. Use your AI Signal Rate data to identify which topics and queries your brand appears in and which gaps exist where competitors are mentioned but you’re not. This reveals content opportunities—topics where you should create or improve content to increase visibility. Use your Answer Accuracy Rate data to identify specific misrepresentations or hallucinations that AI systems generate about your brand, then update your website content to provide clearer, more accurate information that AI systems can reliably extract and cite.
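The gap analysis described here reduces to a filter over your monitoring results: keep the queries where at least one competitor appears and your brand does not. In this sketch the input mapping, the brand "Acme", and the competitor names are made up for illustration.

```python
def visibility_gaps(mentions_by_query: dict[str, set[str]], brand: str) -> dict[str, set[str]]:
    """Queries where other brands were mentioned but `brand` was not."""
    return {
        query: brands
        for query, brands in mentions_by_query.items()
        if brands and brand not in brands
    }


# Hypothetical monitoring results: query -> brands mentioned in the AI answer.
mentions_by_query = {
    "best crm for startups": {"Acme", "RivalCo"},
    "crm with invoicing": {"RivalCo", "OtherCo"},
    "how to migrate crm data": set(),
    "crm pricing comparison": {"OtherCo"},
}
gaps = visibility_gaps(mentions_by_query, "Acme")
for query in sorted(gaps):
    print(query, sorted(gaps[query]))
```

Queries with no brands at all are excluded on purpose, since they point to a topic no one owns yet rather than to a competitive gap.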

Use your AI-Influenced Conversion Rate data to understand which AI platforms and query types drive the most valuable traffic. If you discover that Perplexity users convert at higher rates than ChatGPT users, you might prioritize optimization for Perplexity’s specific indexing and citation patterns. If comparison queries drive higher conversion rates than educational content, you might focus content creation on comparative positioning against alternatives.
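A segment comparison like this can be sketched by grouping AI-influenced sessions on any attribute you record. The session fields `platform`, `query_type`, and `converted` are assumed names, and the sample data is invented. With real data, check that each segment has enough sessions before acting on a difference.

```python
from collections import defaultdict


def conversion_rate_by_segment(sessions: list[dict], key: str) -> dict[str, float]:
    """Conversion rate for each distinct value of `key` (e.g. platform)."""
    totals: dict[str, int] = defaultdict(int)
    conversions: dict[str, int] = defaultdict(int)
    for session in sessions:
        segment = session[key]
        totals[segment] += 1
        conversions[segment] += int(session["converted"])
    return {segment: conversions[segment] / totals[segment] for segment in totals}


# Hypothetical AI-influenced sessions.
ai_sessions = (
    [{"platform": "perplexity", "query_type": "comparison", "converted": True}] * 2
    + [{"platform": "perplexity", "query_type": "educational", "converted": False}] * 2
    + [{"platform": "chatgpt", "query_type": "comparison", "converted": True}] * 1
    + [{"platform": "chatgpt", "query_type": "educational", "converted": False}] * 4
)
by_platform = conversion_rate_by_segment(ai_sessions, "platform")
by_query_type = conversion_rate_by_segment(ai_sessions, "query_type")
print(by_platform)
print(by_query_type)
```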

The optimization process follows a continuous cycle: draft content improvements, measure their impact on your KPIs, learn what works in your specific market, and improve iteratively. This data-driven approach ensures your AI search optimization efforts deliver measurable business results rather than pursuing vanity metrics that don’t connect to actual outcomes.
