How RAG Changes AI Citations

Discover how Retrieval-Augmented Generation transforms AI citations, enabling accurate source attribution and grounded answers across ChatGPT, Perplexity, and G...

Learn how Retrieval-Augmented Generation systems manage knowledge base freshness, prevent stale data, and maintain current information through indexing strategies and refresh mechanisms.

RAG systems handle outdated information through regular knowledge base updates, periodic re-indexing of embeddings, metadata-driven freshness signals, and automated refresh pipelines that keep external data sources synchronized with retrieval indexes.

Retrieval-Augmented Generation (RAG) systems face a fundamental challenge: the external knowledge bases they rely on are not static. Documents get updated, new information emerges, old facts become irrelevant, and without proper management mechanisms, RAG systems can confidently serve outdated or incorrect information to users. This problem, often called the “freshness problem,” is one of the most critical issues in production RAG deployments. Unlike traditional large language models that have a fixed knowledge cutoff date, RAG systems promise access to current information—but only if the underlying data infrastructure is properly maintained and refreshed.

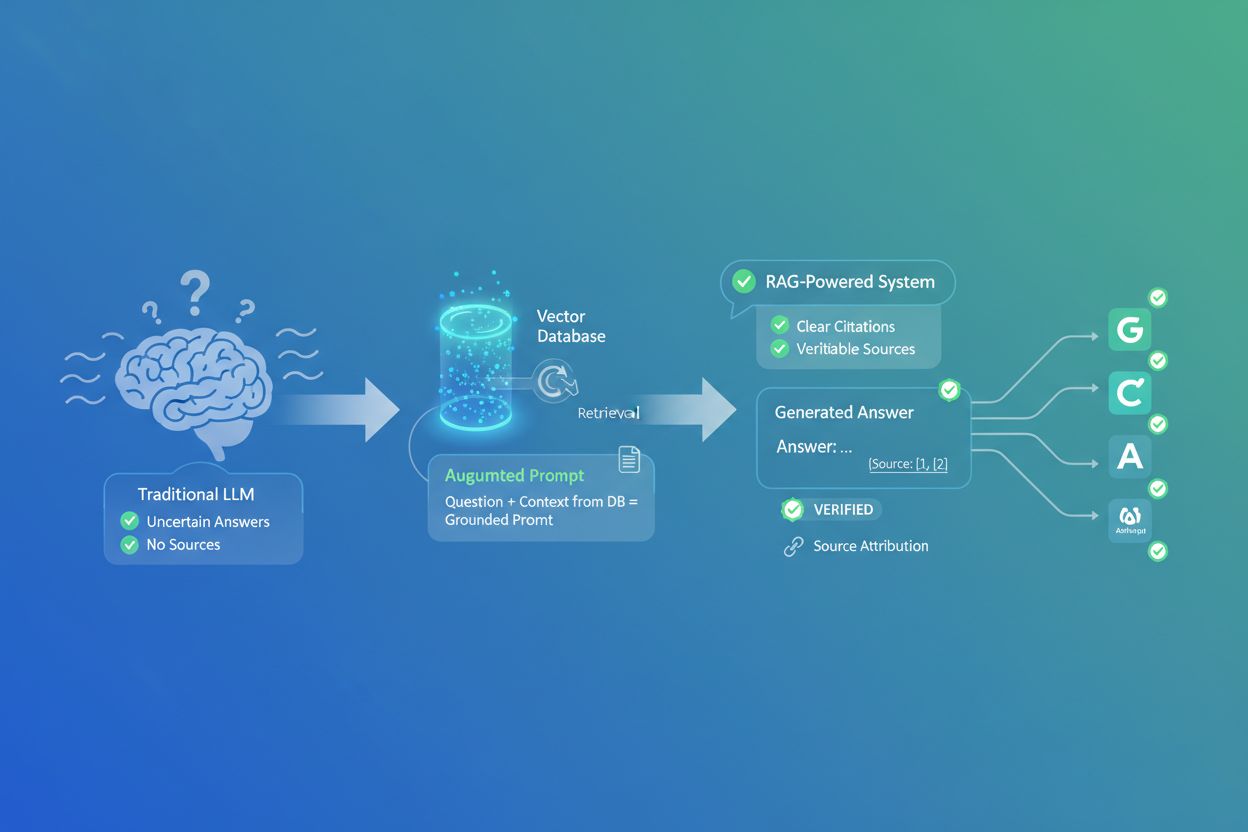

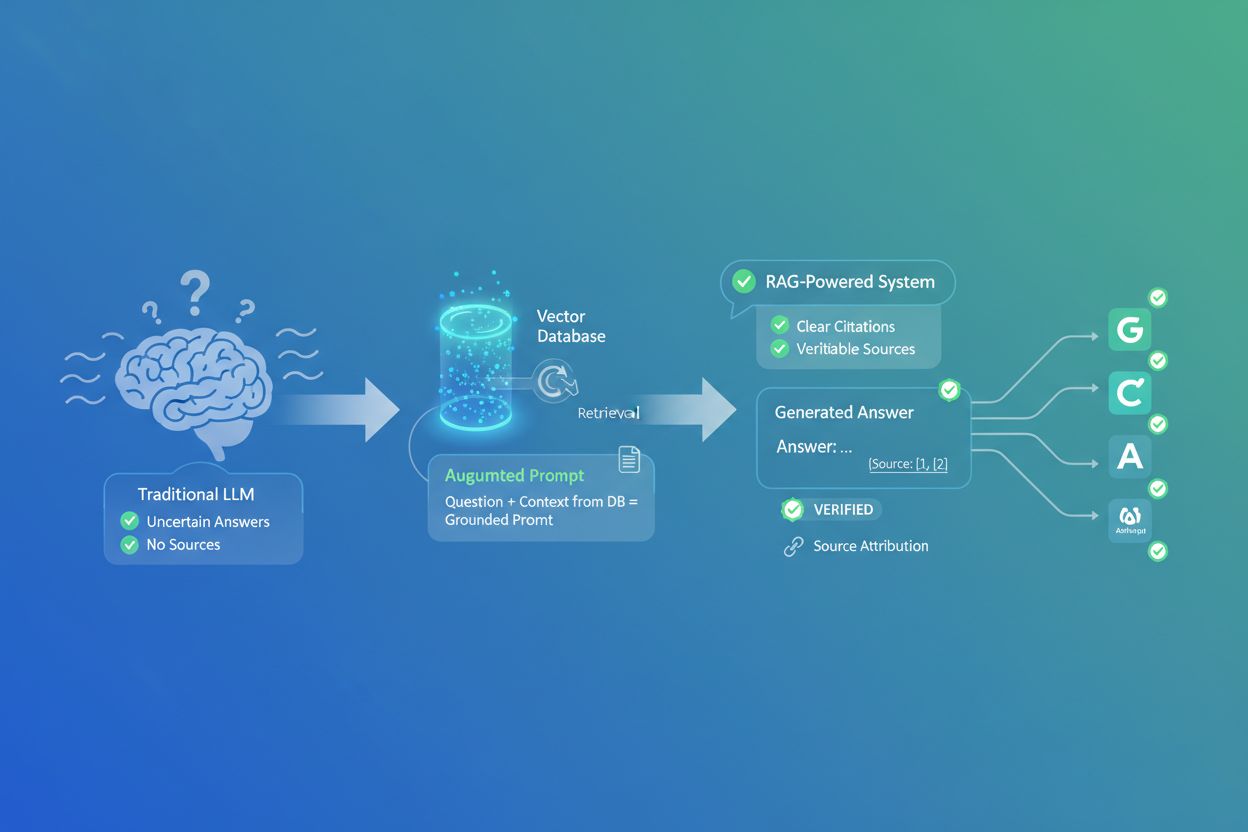

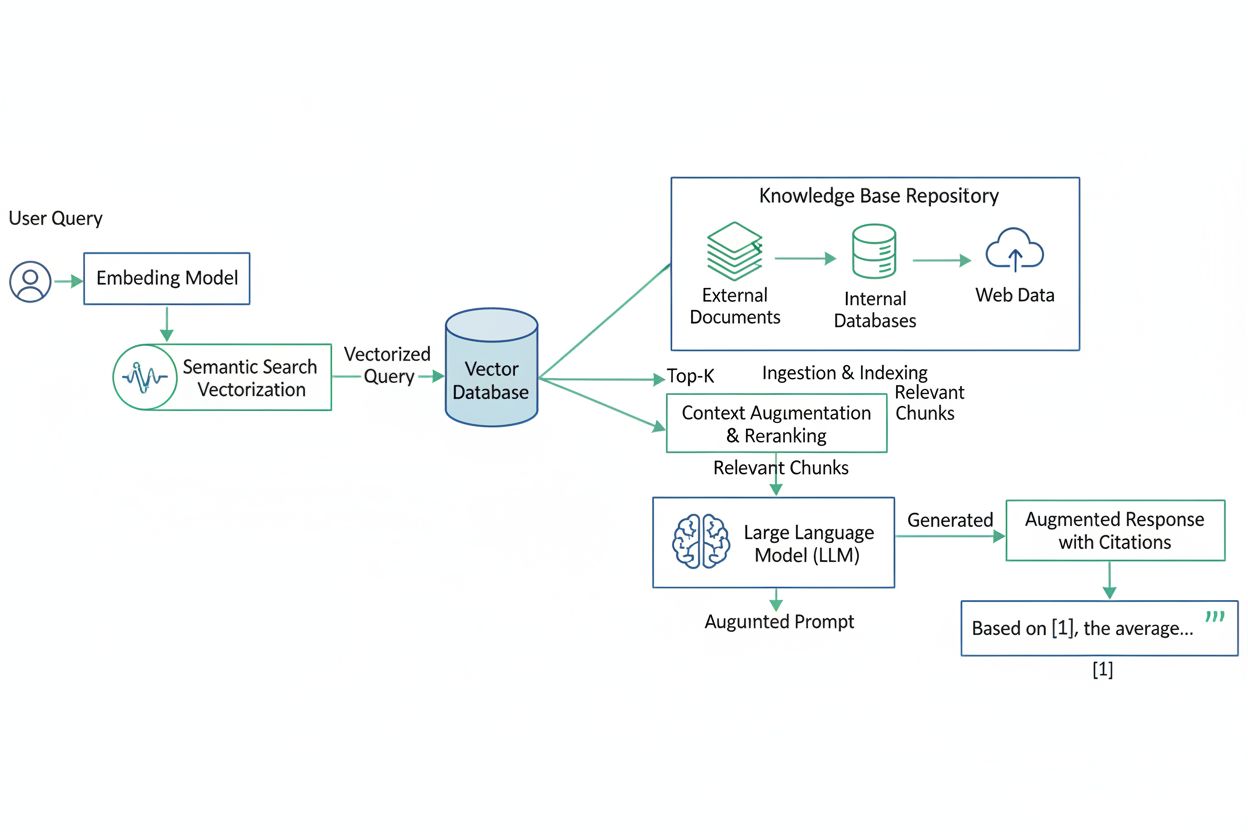

The core issue stems from how RAG systems work. They retrieve relevant documents from an external knowledge base and augment the LLM prompt with this retrieved context before generating answers. If the knowledge base contains stale information, the retrieval step will pull outdated content, and the LLM will generate responses based on that stale data. This creates a false sense of accuracy because the answer appears grounded in external sources, when in reality those sources are no longer current. Organizations deploying RAG systems must implement deliberate strategies to detect, prevent, and remediate outdated information throughout their retrieval pipelines.

Outdated information in RAG systems typically originates from several interconnected sources. The most common cause is incomplete knowledge base updates, where new documents are added to the source system but the vector index used for retrieval is not refreshed. This creates a synchronization gap: the raw data may be current, but the searchable index remains frozen in time. When users query the system, the retriever searches the stale index and cannot find newly added or updated documents, even though they technically exist in the knowledge base.
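One way to surface this synchronization gap is to compare the version of each document in the source system with the version recorded in the index. The sketch below is minimal and assumes both sides expose a per-document version number. `find_sync_gaps` and its inputs are hypothetical names, not part of any particular RAG platform.

```python
from typing import Dict, List, Tuple


def find_sync_gaps(
    source_versions: Dict[str, int],
    index_versions: Dict[str, int],
) -> Tuple[List[str], List[str]]:
    """Return (missing, stale) document ids.

    missing: present in the source system but never indexed.
    stale:   indexed, but at an older version than the source holds.
    """
    missing = sorted(d for d in source_versions if d not in index_versions)
    stale = sorted(
        d
        for d, version in source_versions.items()
        if d in index_versions and index_versions[d] < version
    )
    return missing, stale


# Hypothetical snapshot: faq-1 was edited and policy-7 was added, but neither was re-indexed.
source = {"faq-1": 3, "faq-2": 1, "policy-7": 2}
indexed = {"faq-1": 2, "faq-2": 1}
print(find_sync_gaps(source, indexed))  # (['policy-7'], ['faq-1'])
```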

Another critical source of staleness is embedding drift. Embeddings are numerical representations of text that enable semantic search in RAG systems. Drift takes two forms. When the embedding model itself is updated or replaced, query vectors produced by the new model are no longer directly comparable with document vectors produced by the old one. As language and terminology evolve, embeddings computed long ago may also stop reflecting how users phrase their questions. Some studies report that outdated embeddings can reduce retrieval accuracy by up to 20%. A document that was previously ranked highly for a query may suddenly become invisible because its stored embedding no longer lines up with the embedding of the query.

Metadata staleness represents a third category of problems. RAG systems often use metadata like timestamps, document categories, or source credibility scores to prioritize retrieval results. If this metadata is not updated when documents change, the system may continue ranking outdated documents above newer, more relevant ones. For example, a customer support RAG system might retrieve an old solution article dated 2023 before a newer, corrected solution from 2025, simply because the metadata-driven ranking logic was not updated.

| Staleness Source | Impact | Frequency | Severity |
|---|---|---|---|
| Unrefreshed vector index | Newly added documents invisible to retrieval | High | Critical |
| Outdated embeddings | Reduced semantic matching accuracy | Medium | High |
| Stale metadata signals | Wrong documents ranked first | Medium | High |
| Incomplete knowledge base | Missing information for queries | High | Critical |
| Conflicting information | Multiple versions of same fact | Medium | High |

The most effective approach to managing outdated information is to implement automated refresh pipelines that continuously synchronize the knowledge base with the retrieval index. Rather than triggering updates manually, organizations deploy processes that run on a schedule (daily or hourly) or continuously in near real time, depending on how volatile the data is. These pipelines typically follow a multi-stage process: they fetch fresh data from source systems, process and chunk the content, generate updated embeddings, and finally re-index the vector database.
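The four stages can be sketched end to end as shown below. Every component is a stand-in under stated assumptions. `fetch_documents` fakes a source system. `embed` is a hash-based placeholder that is deterministic but carries no semantic meaning. `VectorIndex` is an in-memory dict, not a real vector database.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict, List


def fetch_documents() -> Dict[str, str]:
    # Stage 1 (hypothetical source system): {doc_id: full text}.
    return {
        "kb-1": "Refunds are processed within 5 business days. "
        "Contact support for exceptions."
    }


def chunk(text: str, max_words: int = 8) -> List[str]:
    # Stage 2: fixed-size word windows; real systems often split by tokens or headings.
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text: str, dim: int = 4) -> List[float]:
    # Stage 3 placeholder: deterministic hash-based vector, NOT a semantic embedding.
    digest = hashlib.sha256(text.encode("utf-8")).digest()
    return [b / 255 for b in digest[:dim]]


@dataclass
class VectorIndex:
    # Stage 4 stand-in for a vector database, keyed by "doc_id#chunk_number".
    vectors: Dict[str, List[float]] = field(default_factory=dict)

    def delete_document(self, doc_id: str) -> None:
        # Remove all old chunks so a shortened document leaves no orphaned vectors.
        prefix = f"{doc_id}#"
        for key in [k for k in self.vectors if k.startswith(prefix)]:
            del self.vectors[key]

    def upsert(self, chunk_id: str, vector: List[float]) -> None:
        self.vectors[chunk_id] = vector


def run_refresh(index: VectorIndex) -> int:
    """Fetch -> chunk -> embed -> re-index. Returns the number of chunks written."""
    written = 0
    for doc_id, text in fetch_documents().items():
        index.delete_document(doc_id)
        for n, piece in enumerate(chunk(text)):
            index.upsert(f"{doc_id}#{n}", embed(piece))
            written += 1
    return written
```

A scheduler such as cron or a workflow orchestrator would call `run_refresh` at the chosen interval. Deleting a document's old chunks before writing new ones keeps the index consistent when a document gets shorter.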

Modern RAG platforms support incremental indexing, which updates only the documents that have changed rather than rebuilding the entire index from scratch. This approach dramatically reduces computational overhead and allows for more frequent refresh cycles. When a document is modified in the source system, the pipeline detects the change, re-embeds just that document, and updates its representation in the vector index. This means new information can be available to the retrieval system within minutes rather than hours or days.
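Change detection is the core of incremental indexing. One common technique is to store a content hash per document and re-embed only when that hash changes. It is shown here as an assumption, not as the method of any specific platform. `reembed` and `remove` are hypothetical callbacks into whatever vector store is in use.

```python
import hashlib
from typing import Callable, Dict, List


def content_hash(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def incremental_update(
    docs: Dict[str, str],
    seen_hashes: Dict[str, str],
    reembed: Callable[[str, str], None],
    remove: Callable[[str], None],
) -> List[str]:
    """Re-embed new or changed documents and drop deleted ones.

    seen_hashes maps doc_id -> hash from the last indexing run and is
    updated in place. Returns the ids that were re-embedded.
    """
    changed: List[str] = []
    for doc_id, text in docs.items():
        digest = content_hash(text)
        if seen_hashes.get(doc_id) != digest:  # new or modified document
            reembed(doc_id, text)
            seen_hashes[doc_id] = digest
            changed.append(doc_id)
    for doc_id in [d for d in seen_hashes if d not in docs]:  # deleted upstream
        remove(doc_id)
        del seen_hashes[doc_id]
    return changed


def noop(doc_id: str, text: str) -> None:
    pass  # stand-in for re-embedding and writing to the vector store


seen: Dict[str, str] = {}
removed: List[str] = []
print(incremental_update({"a": "v1", "b": "v1"}, seen, noop, removed.append))  # ['a', 'b']
print(incremental_update({"a": "v2", "b": "v1"}, seen, noop, removed.append))  # ['a']
print(incremental_update({"a": "v2"}, seen, noop, removed.append), removed)    # [] ['b']
```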

The sophistication of refresh mechanisms varies significantly across implementations. Basic approaches use batch processing where the entire knowledge base is re-indexed on a fixed schedule, typically nightly. More advanced systems implement event-driven updates that trigger re-indexing whenever source documents change, detected through webhooks, database triggers, or polling mechanisms. The most mature implementations combine both approaches: continuous incremental updates for frequently changing data sources plus periodic full re-indexing to catch any missed changes and recalibrate embeddings.

Beyond simply updating the index, RAG systems can leverage metadata to signal document freshness and guide retrieval ranking. By attaching timestamps, version numbers, and source credibility scores to each document, the system can intelligently prioritize newer information over older alternatives. When multiple documents answer the same query, the retriever can boost documents with recent timestamps and demote those marked as archived or superseded.

Implementing metadata-driven prioritization requires careful ranking configuration and, where freshness is surfaced to the LLM, careful prompt design. The retrieval system must be configured to weigh freshness signals alongside semantic relevance. For example, a customer support RAG system might use a hybrid ranking approach. It first retrieves candidate documents by vector similarity. It then re-ranks them by a weighted combination of semantic score (70% weight) and recency score (30% weight). The most semantically relevant document is still generally preferred, but a significantly newer document addressing the same question will rank higher when the semantic scores are comparable.
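The hybrid re-ranking step can be sketched as shown below. The 0.7/0.3 weights come from the example above. The recency score itself is an assumption: exponential decay with a 180-day half-life. The `Candidate` type and the sample dates and scores are also made up for illustration.

```python
from dataclasses import dataclass
from datetime import date
from typing import List

SEMANTIC_WEIGHT = 0.7
RECENCY_WEIGHT = 0.3
HALF_LIFE_DAYS = 180  # assumption: the recency score halves roughly every six months


@dataclass
class Candidate:
    doc_id: str
    semantic: float  # similarity from the vector search, assumed to lie in [0, 1]
    updated: date


def recency_score(updated: date, today: date) -> float:
    # 1.0 for a document updated today, decaying toward 0 as it ages.
    age_days = max((today - updated).days, 0)
    return 0.5 ** (age_days / HALF_LIFE_DAYS)


def rerank(candidates: List[Candidate], today: date) -> List[Candidate]:
    def score(c: Candidate) -> float:
        return SEMANTIC_WEIGHT * c.semantic + RECENCY_WEIGHT * recency_score(c.updated, today)

    return sorted(candidates, key=score, reverse=True)


today = date(2025, 6, 1)
old = Candidate("solution-2023", semantic=0.82, updated=date(2023, 3, 1))
new = Candidate("solution-2025", semantic=0.80, updated=date(2025, 5, 1))
print([c.doc_id for c in rerank([old, new], today)])  # ['solution-2025', 'solution-2023']
```

Recency contributes at most 0.3 to the final score, so a large semantic gap still outweighs a date difference. The half-life controls how quickly an older document loses its freshness bonus.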

Conflict resolution becomes critical when the knowledge base contains multiple versions of the same information. A policy document might exist in three versions: the original from 2023, an updated version from 2024, and the current version from 2025. Without explicit conflict resolution logic, the retriever might return all three, confusing the LLM about which version to trust. Effective RAG systems implement versioning strategies where only the latest version is indexed by default, with older versions archived separately or marked with deprecation flags that instruct the LLM to ignore them.
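Below is a minimal sketch of the "index only the latest version" rule. It assumes each document carries a stable `doc_id` and an integer `version`; both field names are hypothetical. Superseded versions are returned separately, so they can be stored outside the retrieval index or tagged as deprecated.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class DocVersion:
    doc_id: str
    version: int
    year: int


def split_versions(versions: List[DocVersion]) -> Tuple[List[DocVersion], List[DocVersion]]:
    """Return (to_index, to_archive): the newest version per doc_id, and all the rest."""
    latest: Dict[str, DocVersion] = {}
    for v in versions:
        current = latest.get(v.doc_id)
        if current is None or v.version > current.version:
            latest[v.doc_id] = v
    to_index = list(latest.values())
    to_archive = [v for v in versions if v is not latest[v.doc_id]]
    return to_index, to_archive


policy = [
    DocVersion("refund-policy", 1, 2023),
    DocVersion("refund-policy", 2, 2024),
    DocVersion("refund-policy", 3, 2025),
]
to_index, to_archive = split_versions(policy)
print([v.year for v in to_index], [v.year for v in to_archive])  # [2025] [2023, 2024]
```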

The choice and maintenance of embedding models directly impacts how well RAG systems handle information changes. Embedding models convert text into numerical vectors that enable semantic search. When an embedding model is updated—either to a newer version with better semantic understanding or fine-tuned for domain-specific terminology—all existing embeddings become potentially misaligned with the new model’s representation space.

Organizations deploying RAG systems must establish embedding model governance practices. This includes documenting which embedding model version is in use, monitoring for newer or better-performing models, and planning controlled transitions to improved models. When upgrading embedding models, the entire knowledge base must be re-embedded using the new model before the old embeddings are discarded. This is computationally expensive but necessary to maintain retrieval accuracy.
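One way to honor "re-embed everything before discarding the old embeddings" is to build a complete new index generation alongside the old one and swap only when it is finished. The sketch below shows this pattern under stated assumptions. The model names `embed-v1`/`embed-v2` and `fake_embed_v2` are placeholders, and recording `model_name` on each generation covers the documentation part of the governance practice.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class IndexGeneration:
    model_name: str  # records which embedding model produced these vectors
    vectors: Dict[str, List[float]] = field(default_factory=dict)


@dataclass
class EmbeddingStore:
    active: IndexGeneration

    def upgrade(
        self,
        model_name: str,
        embed: Callable[[str], List[float]],
        corpus: Dict[str, str],
    ) -> None:
        """Re-embed the full corpus with a new model, then swap generations.

        Queries keep using the old generation until every document has a new
        vector. If embedding fails partway, the exception propagates before
        the swap and the old generation stays active.
        """
        candidate = IndexGeneration(model_name)
        for doc_id, text in corpus.items():
            candidate.vectors[doc_id] = embed(text)
        self.active = candidate  # the old generation can now be discarded

    def query_model(self) -> str:
        # Queries must be embedded with the same model as the active vectors.
        return self.active.model_name


def fake_embed_v2(text: str) -> List[float]:
    return [float(len(text))]  # placeholder for a real embedding model


store = EmbeddingStore(IndexGeneration("embed-v1", {"a": [0.1]}))
store.upgrade("embed-v2", fake_embed_v2, {"a": "abc", "b": "hello"})
print(store.query_model(), store.active.vectors)  # embed-v2 {'a': [3.0], 'b': [5.0]}
```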

Domain-specific embedding models offer particular advantages for managing information freshness. Generic embedding models trained on broad internet data may struggle with specialized terminology in healthcare, legal, or technical domains. Fine-tuning embedding models on domain-specific question-document pairs improves semantic understanding of evolving terminology within that domain. For example, a legal RAG system might fine-tune its embedding model on pairs of legal questions and relevant case documents, enabling it to better understand how legal concepts are expressed and evolve over time.

Preventing outdated information requires maintaining high-quality, well-curated knowledge bases from the start. Poor data quality—including duplicate documents, conflicting information, and irrelevant content—compounds the staleness problem. When the knowledge base contains multiple versions of the same fact with different answers, the retriever may pull contradictory information, and the LLM will struggle to generate coherent responses.

Effective knowledge base curation involves:

Organizations should implement data freshness pipelines that timestamp documents and automatically archive or flag content that exceeds a defined age threshold. In rapidly changing domains like news, technology, or healthcare, documents older than 6-12 months might be automatically archived unless explicitly renewed. This prevents the knowledge base from accumulating stale information that gradually degrades retrieval quality.
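The age-threshold rule can be sketched as follows. The sketch assumes each document records the date it was created or last explicitly renewed. The 180-day threshold in the example corresponds to the low end of the 6 to 12 month range above and is only illustrative. The document ids are made up.

```python
from datetime import date, timedelta
from typing import Dict, List, Tuple


def split_by_age(
    last_renewed: Dict[str, date],
    today: date,
    max_age: timedelta,
) -> Tuple[List[str], List[str]]:
    """Return (keep, archive) doc ids based on time since last renewal."""
    keep: List[str] = []
    archive: List[str] = []
    for doc_id, renewed_on in sorted(last_renewed.items()):
        if today - renewed_on > max_age:
            archive.append(doc_id)  # too old and not renewed: archive or flag
        else:
            keep.append(doc_id)
    return keep, archive


docs = {"drug-dosing": date(2025, 4, 10), "api-v1-guide": date(2024, 7, 1)}
print(split_by_age(docs, today=date(2025, 6, 1), max_age=timedelta(days=180)))
# (['drug-dosing'], ['api-v1-guide'])
```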

Proactive monitoring is essential for detecting when RAG systems begin serving outdated information. Retrieval quality metrics should be tracked continuously. Useful examples are recall@K (the fraction of relevant documents that appear in the top K results) and mean reciprocal rank (MRR, the average across queries of 1/rank of the first relevant result). Sudden drops in these metrics often indicate that the index has become stale or that embedding drift has occurred.
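Both metrics need a labeled evaluation set: for each test query, the set of document ids a reviewer judged relevant. Assuming such labels exist, the metrics can be computed as shown below (the ids here are made up). Recomputing them over time against a fixed query set is what makes a sudden drop visible.

```python
from typing import List, Sequence, Set


def recall_at_k(retrieved: Sequence[str], relevant: Set[str], k: int) -> float:
    """Fraction of the relevant documents that appear in the top-k results."""
    if not relevant:
        return 0.0
    return len(set(retrieved[:k]) & relevant) / len(relevant)


def mean_reciprocal_rank(runs: List[Sequence[str]], relevant: List[Set[str]]) -> float:
    """Average of 1/rank of the first relevant result per query (0 if none found)."""
    if not runs:
        return 0.0
    total = 0.0
    for retrieved, rel in zip(runs, relevant):
        for rank, doc_id in enumerate(retrieved, start=1):
            if doc_id in rel:
                total += 1.0 / rank
                break
    return total / len(runs)


runs = [["d3", "d1", "d7"], ["d2", "d9", "d4"]]
labels = [{"d1"}, {"d4", "d5"}]
print(recall_at_k(runs[1], labels[1], k=3))  # 0.5
print(round(mean_reciprocal_rank(runs, labels), 3))  # 0.417
```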

Organizations should implement production monitoring that samples retrieved documents and evaluates their freshness. This can be automated by checking document timestamps against a freshness threshold, or through human review of a sample of retrieved results. When monitoring detects that retrieved documents are consistently older than expected, it signals that the refresh pipeline may be failing or that the knowledge base lacks current information on certain topics.
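The automated timestamp check can be as simple as the following sketch. It measures the share of retrieved documents (optionally a random sample) older than a freshness threshold. The 90-day threshold and the 50% alert level are assumptions for illustration, not recommended values.

```python
import random
from datetime import date, timedelta
from typing import List, Optional

ALERT_THRESHOLD = 0.5  # assumption: alert if more than half of sampled results are stale


def stale_fraction(
    retrieved_dates: List[date],
    today: date,
    max_age: timedelta,
    sample_size: Optional[int] = None,
    seed: int = 0,
) -> float:
    """Share of retrieved documents (optionally a random sample) older than max_age."""
    pool = retrieved_dates
    if sample_size is not None and sample_size < len(pool):
        pool = random.Random(seed).sample(pool, sample_size)
    if not pool:
        return 0.0
    return sum(1 for d in pool if today - d > max_age) / len(pool)


dates = [date(2025, 5, 20), date(2024, 1, 1), date(2023, 6, 1), date(2025, 5, 30)]
share = stale_fraction(dates, today=date(2025, 6, 1), max_age=timedelta(days=90))
print(share, share > ALERT_THRESHOLD)  # 0.5 False
```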

User feedback signals provide valuable indicators of staleness. When users report that answers are outdated or incorrect, or when they explicitly state that information contradicts what they know to be current, these signals should be logged and analyzed. Patterns in user feedback can reveal which topics or document categories are most prone to staleness, allowing teams to prioritize refresh efforts.
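Finding those patterns can start with simple counting. The sketch below assumes each feedback event has already been tagged with a document category and a label. The label names and categories are hypothetical.

```python
from collections import Counter
from typing import Iterable, List, Tuple

STALENESS_LABELS = {"outdated", "incorrect"}  # hypothetical feedback labels


def staleness_hotspots(
    feedback: Iterable[Tuple[str, str]], top_n: int = 3
) -> List[Tuple[str, int]]:
    """Count staleness-related reports per document category, most frequent first."""
    counts = Counter(category for category, label in feedback if label in STALENESS_LABELS)
    return counts.most_common(top_n)


events = [
    ("billing", "outdated"),
    ("shipping", "outdated"),
    ("billing", "incorrect"),
    ("billing", "helpful"),
    ("returns", "outdated"),
    ("shipping", "outdated"),
]
print(staleness_hotspots(events))  # [('billing', 2), ('shipping', 2), ('returns', 1)]
```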

When RAG systems retrieve multiple documents containing conflicting information, the LLM must decide which to trust. Without explicit guidance, the model may blend contradictory statements or express uncertainty, reducing answer quality. Conflict detection and resolution mechanisms help manage this challenge.

One approach is to implement explicit conflict labeling in the prompt. When the retriever returns documents with conflicting information, the system can instruct the LLM: “The following documents contain conflicting information. Document A states [X], while Document B states [Y]. Document B is more recent (dated 2025 vs 2023). Prioritize the more recent information.” This transparency helps the LLM make informed decisions about which information to trust.
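Below is a minimal sketch of assembling that kind of instruction from retrieved documents. It assumes conflict detection has already happened upstream and that each document carries a date. The `RetrievedDoc` type and the refund claims are illustrative only.

```python
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass
class RetrievedDoc:
    label: str
    claim: str
    dated: date


def conflict_preamble(docs: List[RetrievedDoc]) -> str:
    """Build prompt text naming conflicting claims and the most recent source.

    Expects at least two documents already judged to be in conflict.
    """
    ordered = sorted(docs, key=lambda d: d.dated)
    newest = ordered[-1]
    lines = ["The following documents contain conflicting information."]
    for d in ordered:
        lines.append(f"Document {d.label} (dated {d.dated.isoformat()}) states: {d.claim}")
    lines.append(
        f"Document {newest.label} is the most recent. Prioritize the most recent information."
    )
    return "\n".join(lines)


print(conflict_preamble([
    RetrievedDoc("B", "Refunds are issued within 5 business days.", date(2025, 2, 1)),
    RetrievedDoc("A", "Refunds are issued within 10 business days.", date(2023, 9, 1)),
]))
```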

Another strategy is to prevent conflicts from reaching the LLM by filtering them out during retrieval. If the system detects that multiple versions of the same document exist, it can return only the latest version. If conflicting policies or procedures are detected, the system can flag this as a knowledge base quality issue requiring human review and resolution before the documents are indexed.
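Detecting conflicts requires some notion of "the same fact." This sketch assumes a hypothetical upstream step, not shown here, that has already extracted `(fact_key, value, doc_id)` triples, for example from structured policy fields. Any key stated with more than one distinct value is returned together with the documents involved, so a reviewer can resolve it before indexing.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple


def find_conflicts(facts: Iterable[Tuple[str, str, str]]) -> Dict[str, Dict[str, Set[str]]]:
    """Return {fact_key: {value: doc_ids}} for keys stated with more than one value."""
    by_key: Dict[str, Dict[str, Set[str]]] = defaultdict(lambda: defaultdict(set))
    for key, value, doc_id in facts:
        by_key[key][value].add(doc_id)
    # Keys with a single agreed value are consistent and need no review.
    return {k: dict(v) for k, v in by_key.items() if len(v) > 1}


facts = [
    ("refund_window", "30 days", "policy-a"),
    ("refund_window", "14 days", "policy-b"),
    ("support_hours", "9-5", "faq-1"),
    ("support_hours", "9-5", "faq-2"),
]
print(find_conflicts(facts))
# {'refund_window': {'30 days': {'policy-a'}, '14 days': {'policy-b'}}}
```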

For use cases requiring the most current information, organizations can implement real-time or near-real-time update mechanisms. Rather than waiting for scheduled batch refreshes, these systems detect changes in source data immediately and update the retrieval index within seconds or minutes.

Real-time updates typically rely on event streaming architectures where source systems emit events whenever data changes. A document management system might emit a “document_updated” event, which triggers a pipeline that re-embeds the document and updates the vector index. This approach requires more sophisticated infrastructure but enables RAG systems to serve information that is current within a few minutes of source data changes.
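On the consuming side, each event from the stream is applied to the index. This sketch leaves the transport out entirely and takes the event as a JSON string. The `document_deleted` type, the event fields, and the `fetch_text`/`embed` callbacks are assumptions for illustration.

```python
import json
from typing import Callable, Dict, List


def handle_change_event(
    raw_event: str,
    fetch_text: Callable[[str], str],
    embed: Callable[[str], List[float]],
    index: Dict[str, List[float]],
) -> None:
    """Apply one change event from the stream to the vector index.

    Assumed event shape: {"type": "document_updated" | "document_deleted", "doc_id": str}
    """
    event = json.loads(raw_event)
    doc_id = event["doc_id"]
    if event["type"] == "document_updated":
        # Pull the current text from the source of truth and re-embed it.
        index[doc_id] = embed(fetch_text(doc_id))
    elif event["type"] == "document_deleted":
        index.pop(doc_id, None)
    # Other event types are ignored here; a production consumer might dead-letter them.


def fake_embed(text: str) -> List[float]:
    return [float(len(text))]  # placeholder for a real embedding model


index: Dict[str, List[float]] = {}
source = {"kb-9": "New holiday support hours."}
handle_change_event('{"type": "document_updated", "doc_id": "kb-9"}', source.__getitem__, fake_embed, index)
print(index)  # {'kb-9': [26.0]}
handle_change_event('{"type": "document_deleted", "doc_id": "kb-9"}', source.__getitem__, fake_embed, index)
print(index)  # {}
```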

Hybrid approaches combine real-time updates for frequently changing data with periodic batch refreshes for stable data. A customer support RAG system might use real-time updates for the knowledge base of current policies and procedures, while using nightly batch refreshes for less frequently updated reference materials. This balances the need for current information with computational efficiency.

Organizations should establish freshness evaluation frameworks that measure how current their RAG systems’ answers actually are. This involves defining what “current” means for different types of information—news might need to be current within hours, while reference materials might be acceptable if updated monthly.
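Per-type freshness targets can be written down as data and checked automatically. The specific numbers below (6 hours for news, 30 days for reference material) are assumptions chosen to match the examples in the text; each organization would set its own. The source names are hypothetical.

```python
from datetime import datetime, timedelta
from typing import Dict, List, Tuple

# Illustrative targets only: news current within hours, reference material monthly.
FRESHNESS_TARGETS: Dict[str, timedelta] = {
    "news": timedelta(hours=6),
    "reference": timedelta(days=30),
}


def sources_out_of_target(
    sources: Dict[str, Tuple[str, datetime]],  # name -> (content_type, last_indexed)
    now: datetime,
) -> List[str]:
    """Names of sources whose last successful indexing is older than their target."""
    late = []
    for name, (content_type, last_indexed) in sorted(sources.items()):
        if now - last_indexed > FRESHNESS_TARGETS[content_type]:
            late.append(name)
    return late


now = datetime(2025, 6, 1, 12, 0)
sources = {
    "newswire": ("news", datetime(2025, 6, 1, 3, 0)),      # 9 hours old
    "product-docs": ("reference", datetime(2025, 5, 20)),  # about 12 days old
}
print(sources_out_of_target(sources, now))  # ['newswire']
```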

Evaluation approaches include:

By implementing comprehensive monitoring and evaluation, organizations can identify freshness problems early and adjust their refresh strategies accordingly.
