Dynamic Rendering

Dynamic rendering serves static HTML to search engine bots while delivering client-side rendered content to users. Learn how this technique improves SEO, crawl ...

Learn how dynamic rendering impacts AI crawlers, ChatGPT, Perplexity, and Claude visibility. Discover why AI systems can’t render JavaScript and how to optimize for AI search.

Dynamic rendering serves fully-rendered HTML to AI crawlers while delivering client-side rendered content to users, improving AI visibility since most AI crawlers like ChatGPT and Claude cannot execute JavaScript. This technique helps ensure AI systems can access and index critical content that would otherwise remain invisible in their training data and search results.

Dynamic rendering is a technical approach that serves different versions of web content to different visitors: fully-rendered HTML to AI crawlers and interactive, client-side rendered content to human users. This distinction has become critically important as AI systems like ChatGPT, Perplexity, Claude, and Google AI Overviews increasingly crawl the web to train their models and generate answers. As a server-side technique, dynamic rendering bridges the gap between how modern web applications are built and how AI systems can actually read them. That matters because it directly affects whether your brand’s content becomes visible in AI-generated responses, which now influence how millions of people discover information online. As AI search grows, dynamic rendering has evolved from a niche SEO technique into a practical requirement for JavaScript-heavy sites that want to stay visible across both traditional search engines and emerging AI platforms.

JavaScript is the programming language that powers interactive web experiences: animations, real-time updates, dynamic forms, and personalized content. However, this same technology creates a critical visibility problem for AI systems. Unlike Google’s Googlebot, which can execute JavaScript after an initial page visit, research from Vercel and MERJ found that none of the major AI crawlers currently render JavaScript. This includes OpenAI’s GPTBot and ChatGPT-User, Anthropic’s ClaudeBot, Perplexity’s PerplexityBot, Meta’s ExternalAgent, and ByteDance’s Bytespider. These crawlers do download JavaScript files as text (JavaScript accounts for 11.50% of ChatGPT’s fetches and 23.84% of Claude’s), but they cannot execute the code to reveal the content it generates. Any critical information loaded dynamically through JavaScript, such as product details, pricing, navigation menus, or article content, therefore remains invisible to them. The consequence is severe: if your website relies heavily on client-side rendering, AI crawlers see only the bare HTML skeleton and miss the actual content that would make your pages valuable sources for AI-generated answers.
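To make the "bare HTML skeleton" point concrete, here is a minimal sketch of what a crawler that does not execute JavaScript can read from a page. The two HTML strings and the `visible_text` helper are hypothetical, invented for illustration; real crawlers use their own parsers, so treat this only as an approximation of the effect.

```python
from html.parser import HTMLParser
from typing import List


class VisibleTextExtractor(HTMLParser):
    """Collects text that is present in the raw HTML without running scripts."""

    SKIPPED_TAGS = {"script", "style"}

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.chunks: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        # Script and style bodies are source code, not page content.
        if text and self._skip_depth == 0:
            self.chunks.append(text)


def visible_text(html: str) -> str:
    """Return the text a non-rendering client could extract from html."""
    parser = VisibleTextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


# Hypothetical client-side rendered page: the price only exists after JS runs.
CSR_SHELL = (
    "<html><head><title>Acme Widget</title></head><body>"
    '<div id="root"></div>'
    '<script>document.getElementById("root").textContent = "Price: $49";</script>'
    "</body></html>"
)

# Hypothetical pre-rendered version of the same page.
PRERENDERED = (
    "<html><head><title>Acme Widget</title></head><body>"
    '<div id="root"><h1>Acme Widget</h1><p>Price: $49</p></div>'
    "</body></html>"
)

print(visible_text(CSR_SHELL))
print(visible_text(PRERENDERED))
```

In the first page the price exists only inside a script body, so a client that never runs the script cannot recover it; in the second it is ordinary markup.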

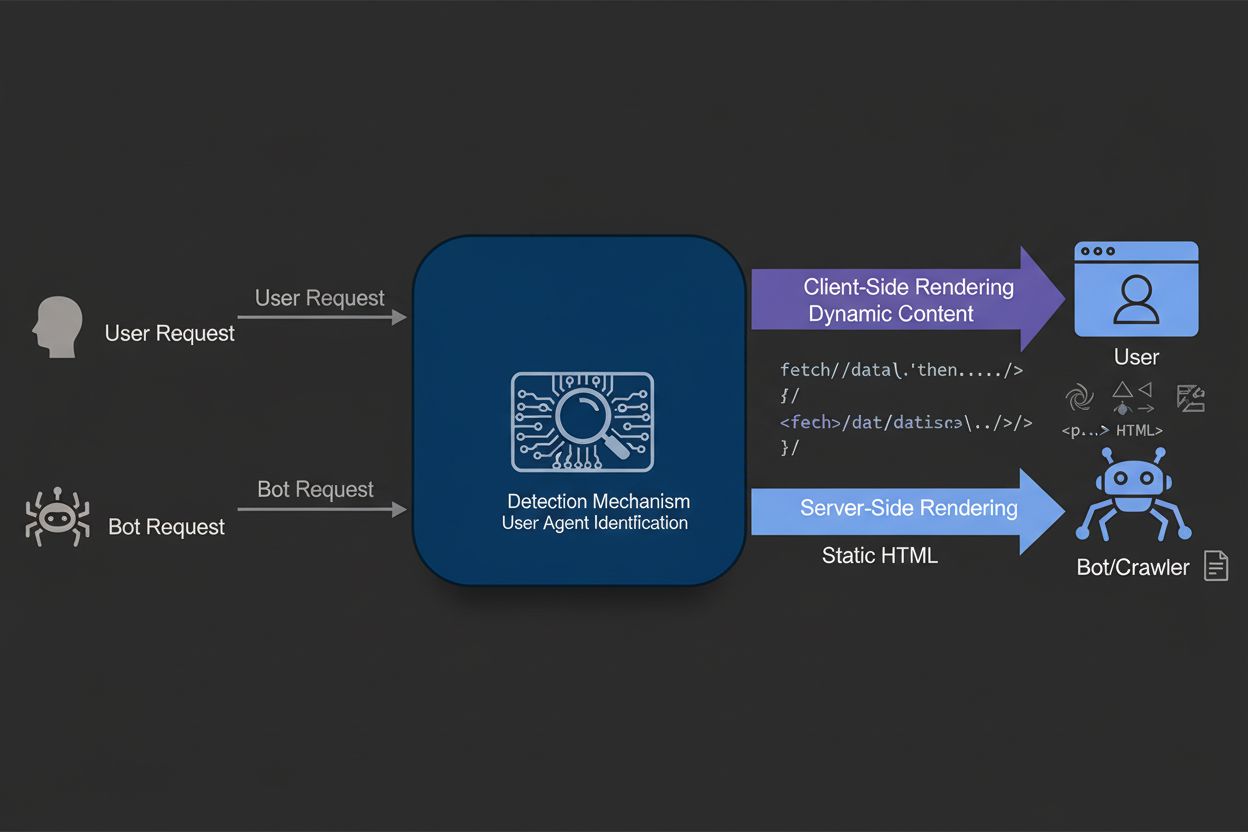

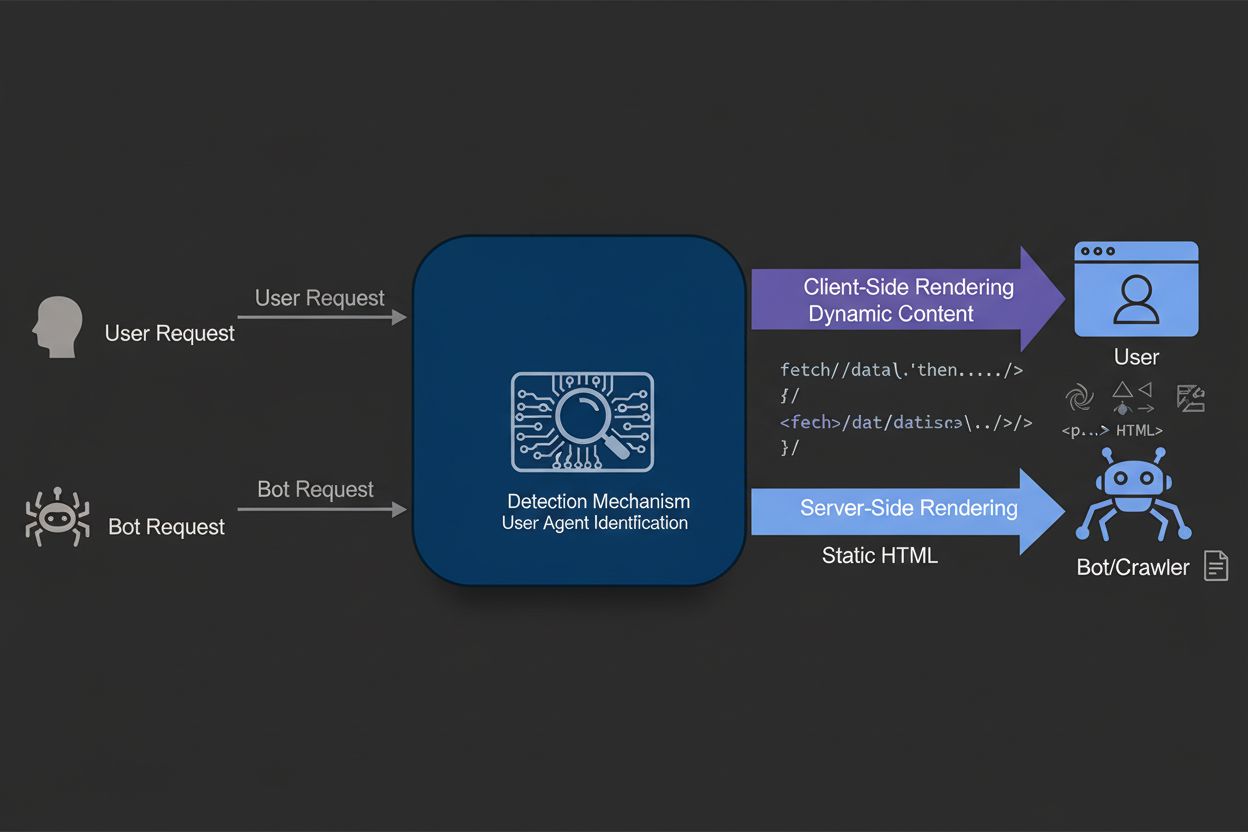

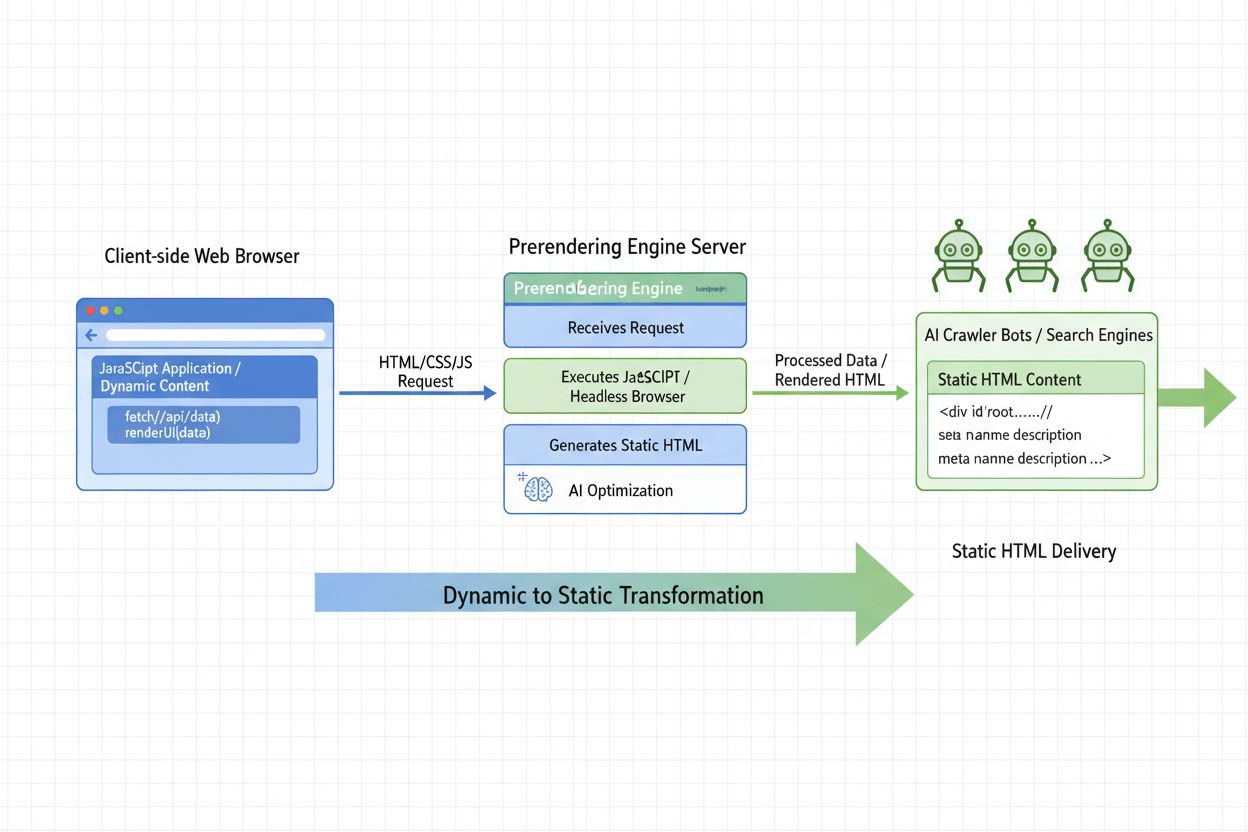

Dynamic rendering operates through a straightforward three-step process that inspects each incoming request and routes it accordingly. First, a rendering server is configured to generate static HTML versions of your pages and cache them for rapid delivery. Second, middleware on your web server identifies whether an incoming request comes from a bot or a human user by examining the user agent string. Third, requests from AI crawlers are routed to the pre-rendered, static HTML version, while human visitors continue receiving the full, interactive client-side rendered experience. This approach ensures that AI crawlers receive fully-formed HTML containing all critical content (text, metadata, structured data, and links) without needing to execute any JavaScript. The rendering happens on demand or on a schedule, and the static version is cached to avoid performance bottlenecks. Tools like Prerender.io, Rendertron, and Nostra AI’s Crawler Optimization service automate this process, making implementation relatively straightforward compared to alternatives like full server-side rendering.
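The detection and routing steps can be sketched in a few lines. This is a framework-agnostic illustration rather than how any particular tool does it: the token list is taken from the crawler names mentioned in this article and is not exhaustive, and `is_ai_crawler`, `select_response`, and the dictionary standing in for the pre-render cache are all hypothetical names.

```python
from typing import Dict, Optional, Tuple

# Illustrative, non-exhaustive list of user agent substrings (an assumption;
# check each vendor's documentation for the tokens it currently sends).
AI_CRAWLER_TOKENS = (
    "GPTBot",
    "ChatGPT-User",
    "ClaudeBot",
    "PerplexityBot",
    "ExternalAgent",
    "Bytespider",
)


def is_ai_crawler(user_agent: Optional[str]) -> bool:
    """Case-insensitive substring match against the known crawler tokens."""
    if not user_agent:
        return False
    ua = user_agent.lower()
    return any(token.lower() in ua for token in AI_CRAWLER_TOKENS)


def select_response(
    user_agent: Optional[str],
    path: str,
    prerender_cache: Dict[str, str],
    csr_shell: str,
) -> Tuple[str, str]:
    """Return (variant, html) for a request.

    Crawlers get the cached static HTML when a snapshot exists for the path;
    everyone else, and crawlers on uncached paths, get the normal CSR shell.
    """
    if is_ai_crawler(user_agent):
        snapshot = prerender_cache.get(path)
        if snapshot is not None:
            return "prerendered", snapshot
    return "csr", csr_shell


cache = {"/pricing": "<h1>Pricing</h1><p>Plans start at $10.</p>"}
shell = '<div id="root"></div><script src="/app.js"></script>'

print(select_response("Mozilla/5.0 (compatible; GPTBot/1.0)", "/pricing", cache, shell)[0])
print(select_response("Mozilla/5.0 (Windows NT 10.0) Chrome/120.0", "/pricing", cache, shell)[0])
```

Falling back to the CSR shell when no snapshot exists keeps the site available to crawlers even if the rendering server is behind; a production setup might instead render on demand at that point.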

| Rendering Method | How It Works | AI Crawler Access | User Experience | Implementation Complexity | Cost |
|---|---|---|---|---|---|
| Client-Side Rendering (CSR) | Content loads in browser via JavaScript | ❌ Limited/None | ✅ Highly interactive | Low | Low |
| Server-Side Rendering (SSR) | Content rendered on server before delivery | ✅ Full access | ✅ Interactive | High | High |
| Static Site Generation (SSG) | Pages pre-built at build time | ✅ Full access | ✅ Fast | Medium | Medium |
| Dynamic Rendering | Separate static version for bots, CSR for users | ✅ Full access | ✅ Interactive | Medium | Medium |
| Hydration | Server renders, then JavaScript takes over | ✅ Partial access | ✅ Interactive | High | High |

Recent data from Vercel’s analysis of crawler behavior shows the scale at which AI systems now access web content. In a single month, GPTBot generated 569 million requests across Vercel’s network, while Claude generated 370 million requests. For perspective, this combined volume of 939 million requests is about 20% of Googlebot’s 4.5 billion requests over the same period, making AI crawlers a significant force in web traffic patterns. Perplexity’s crawler generated 24.4 million requests, demonstrating that even newer AI platforms are crawling at substantial scale. These numbers underscore why dynamic rendering has shifted from optional optimization to strategic necessity: AI systems are crawling your content at a meaningful fraction of the rate of traditional search engines, and if they can’t access it because of JavaScript limitations, you’re losing visibility to a large audience. The geographic concentration of AI crawlers (ChatGPT operates from Des Moines and Phoenix, Claude from Columbus) differs from Google’s distributed approach, but the volume and frequency of visits make optimization equally critical.

The inability of AI crawlers to execute JavaScript stems from resource constraints and architectural decisions. Rendering JavaScript at scale requires significant computational resources—browsers must parse code, execute functions, manage memory, handle asynchronous operations, and render the resulting DOM. For AI companies crawling billions of pages to train large language models, this overhead becomes prohibitively expensive. Google can afford this investment because search ranking is their core business, and they’ve optimized their infrastructure over decades. AI companies, by contrast, are still optimizing their crawling strategies and prioritizing cost efficiency. Research shows that ChatGPT spends 34.82% of its fetches on 404 pages and Claude spends 34.16% on 404s, indicating that AI crawlers are still inefficient at URL selection and validation. This inefficiency suggests that adding JavaScript rendering to their crawling process would compound these problems. Additionally, AI models train on diverse content types—HTML, images, plain text, JSON—and executing JavaScript would complicate the training pipeline without necessarily improving model quality. The architectural choice to skip JavaScript execution is therefore both a technical and economic decision that shows no signs of changing in the near term.

When you implement dynamic rendering, you fundamentally change how AI systems perceive your content. Instead of seeing an empty or incomplete page, AI crawlers receive fully-rendered HTML containing all your critical information. This has direct implications for how your brand appears in AI-generated answers. Research from Conductor shows that AI crawlers visit content more frequently than traditional search engines—in one case, ChatGPT visited a page 8 times more often than Google within five days of publication. This means that when you implement dynamic rendering, AI systems can immediately access and understand your content, potentially leading to faster inclusion in their training data and more accurate citations in their responses. The visibility improvement is substantial: brands using dynamic rendering solutions report up to 100% improvement in AI search visibility compared to JavaScript-heavy sites without rendering solutions. This translates directly to increased likelihood of being cited in ChatGPT responses, Perplexity answers, Claude outputs, and Google AI Overviews. For competitive industries where multiple sources compete for the same queries, this visibility difference can determine whether your brand becomes the authoritative source or remains invisible.

Each AI platform exhibits distinct crawling patterns that affect how dynamic rendering benefits your visibility. ChatGPT’s crawler (GPTBot) prioritizes HTML content (57.70% of fetches) and generates the highest volume of requests, making it the most aggressive AI crawler. Claude’s crawler shows different priorities, focusing heavily on images (35.17% of fetches), which suggests Anthropic is training its model on visual content alongside text. Perplexity’s crawler operates at lower volume but with similar JavaScript limitations, meaning dynamic rendering provides the same visibility benefits. Google’s Gemini, uniquely, leverages Google’s infrastructure and can execute JavaScript like Googlebot, so it doesn’t face the same constraints. However, Google AI Overviews still benefit from dynamic rendering because faster-loading pages improve crawl efficiency and content freshness. The key insight is that dynamic rendering is a platform-agnostic optimization: it makes your content accessible to every AI system regardless of its rendering capabilities, protecting your visibility across the entire AI search landscape.

Successful dynamic rendering implementation requires strategic planning and careful execution. Start by identifying which pages need dynamic rendering, typically your highest-value content such as the homepage, product pages, blog articles, and documentation. These are the pages most likely to be cited in AI responses and most critical for visibility. Next, choose your rendering solution: Prerender.io offers a managed service that handles rendering and caching automatically, Rendertron provides an open-source option for technical teams, and Nostra AI integrates rendering with broader performance optimization. Configure your server middleware to detect AI crawlers by their user agent strings (GPTBot, ClaudeBot, PerplexityBot, etc.) and route their requests to the pre-rendered version. Ensure your cached HTML includes all critical content, structured data (schema markup), and metadata, because this is where AI systems extract information for their responses. Monitor your implementation with server logs or a crawler-monitoring tool such as Conductor Monitoring to verify that AI crawlers are receiving your rendered pages. Google Search Console and its URL Inspection tool cover only Google’s crawlers, so use them to confirm that Googlebot sees the rendered version correctly, not to check AI crawler access. Finally, maintain your dynamic rendering setup by updating cached pages when content changes, monitoring for rendering errors, and adjusting your strategy as AI crawler behavior evolves.
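One way to check that a cached snapshot actually carries the metadata and structured data described above is a small audit script. The sketch below uses only the standard library; `audit_snapshot` and the specific checks (title, meta description, at least one parseable JSON-LD block) are assumptions chosen for illustration, not a complete validation of schema markup.

```python
import json
from html.parser import HTMLParser
from typing import List


class SnapshotAudit(HTMLParser):
    """Extracts the title, meta description, and JSON-LD blocks from HTML."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.meta_description = ""
        self.json_ld_blocks: List[str] = []
        self._in_title = False
        self._in_json_ld = False
        self._buffer: List[str] = []

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attr.get("name") or "").lower() == "description":
            self.meta_description = attr.get("content") or ""
        elif tag == "script" and (attr.get("type") or "").lower() == "application/ld+json":
            self._in_json_ld = True
            self._buffer = []

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False
        elif tag == "script" and self._in_json_ld:
            self._in_json_ld = False
            self.json_ld_blocks.append("".join(self._buffer))

    def handle_data(self, data):
        if self._in_title:
            self.title += data
        elif self._in_json_ld:
            self._buffer.append(data)


def audit_snapshot(html: str) -> List[str]:
    """Return a list of problems; an empty list means every check passed."""
    parser = SnapshotAudit()
    parser.feed(html)
    parser.close()
    problems = []
    if not parser.title.strip():
        problems.append("missing <title>")
    if not parser.meta_description.strip():
        problems.append("missing meta description")
    if not parser.json_ld_blocks:
        problems.append("missing JSON-LD structured data")
    for block in parser.json_ld_blocks:
        try:
            json.loads(block)
        except json.JSONDecodeError:
            problems.append("invalid JSON-LD block")
    return problems


# Hypothetical snapshot that passes, and a bare CSR shell that does not.
GOOD_SNAPSHOT = (
    "<html><head><title>Acme Widget</title>"
    '<meta name="description" content="A sturdy widget.">'
    '<script type="application/ld+json">{"@type": "Product", "name": "Acme Widget"}</script>'
    "</head><body><h1>Acme Widget</h1></body></html>"
)
BARE_SHELL = '<html><head></head><body><div id="root"></div></body></html>'

print(audit_snapshot(GOOD_SNAPSHOT))
print(audit_snapshot(BARE_SHELL))
```

Running a check like this against each snapshot before it enters the cache catches the case where the rendering server captured the page before the application finished loading.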

Content freshness plays a critical role in AI visibility, and dynamic rendering affects this relationship in important ways. AI crawlers visit content more frequently than traditional search engines, sometimes within hours of publication. When you implement dynamic rendering, you must ensure that your cached HTML updates quickly when content changes. Stale cached content can actually harm your AI visibility more than no rendering at all, because AI systems will cite outdated information. This is where real-time monitoring becomes essential—platforms like AmICited can track when AI crawlers visit your pages and whether they’re accessing fresh content. The ideal dynamic rendering setup includes automatic cache invalidation when content updates, ensuring that AI crawlers always receive the latest version. For rapidly changing content like news articles, product inventory, or pricing information, this becomes especially critical. Some dynamic rendering solutions offer on-demand rendering, where pages are rendered fresh for each crawler request rather than served from cache, providing maximum freshness at the cost of slightly higher latency. The trade-off between cache performance and content freshness must be carefully balanced based on your content type and update frequency.
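The freshness trade-off can be illustrated with a small cache that re-renders a page when its content version changes or when the snapshot exceeds a maximum age. This is a sketch under stated assumptions: `PrerenderCache`, the `content_version` argument (for example a CMS revision ID or content hash), and the injected `render` callable are hypothetical and do not correspond to the API of any tool named in this article.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class CachedPage:
    html: str
    content_version: str
    rendered_at: float


class PrerenderCache:
    """Serves cached snapshots and re-renders them when they go stale."""

    def __init__(
        self,
        render: Callable[[str], str],
        max_age_seconds: float,
        clock: Callable[[], float] = time.time,
    ) -> None:
        self._render = render  # hypothetical function: path -> rendered HTML
        self._max_age = max_age_seconds
        self._clock = clock
        self._pages: Dict[str, CachedPage] = {}

    def get(self, path: str, content_version: str) -> str:
        """Return fresh HTML, re-rendering on a version change or expiry."""
        page = self._pages.get(path)
        now = self._clock()
        is_fresh = (
            page is not None
            and page.content_version == content_version
            and now - page.rendered_at <= self._max_age
        )
        if not is_fresh:
            page = CachedPage(self._render(path), content_version, now)
            self._pages[path] = page
        return page.html

    def invalidate(self, path: str) -> None:
        """Drop a snapshot explicitly, e.g. from a publish webhook."""
        self._pages.pop(path, None)
```

Setting `max_age_seconds` to zero approximates on-demand rendering for fast-changing pages such as pricing, while a longer maximum age suits evergreen content where cache hits matter more than minute-level freshness.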

Measuring the effectiveness of dynamic rendering requires tracking metrics specific to AI search visibility. Traditional SEO metrics like organic traffic and rankings don’t capture AI visibility because AI search operates differently—users don’t click through to your site from AI responses in the same way they do from Google results. Instead, focus on citation metrics: how often your content is mentioned or cited in AI-generated responses. Tools like AmICited specifically monitor when your brand, domain, or URLs appear in answers from ChatGPT, Perplexity, Claude, and Google AI Overviews. Track crawler activity using server logs or monitoring platforms to verify that AI crawlers are visiting your pages and accessing the rendered content. Monitor indexation status through each platform’s available tools (though AI platforms provide less transparency than Google). Measure content freshness by comparing when you publish content to when AI crawlers access it—dynamic rendering should reduce this lag. Track Core Web Vitals to ensure rendering doesn’t negatively impact performance. Finally, correlate these metrics with business outcomes—increased brand mentions in AI responses should eventually correlate with increased traffic, leads, or conversions as users discover your brand through AI recommendations.
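Crawler activity and publish-to-crawl lag can both be derived from ordinary access logs. The sketch below assumes logs in the common "combined" format and a short, illustrative list of bot tokens; the `summarize` function, the sample log lines, and the IP addresses are made up for the example.

```python
import re
from collections import Counter
from datetime import datetime, timezone
from typing import Dict, Iterable, Optional, Tuple

# Matches the timestamp, request path, and user agent of a combined-format line.
LOG_PATTERN = re.compile(
    r'\[(?P<time>[^\]]+)\] "(?:GET|HEAD) (?P<path>\S+)[^"]*" '
    r'\d{3} \S+ "[^"]*" "(?P<ua>[^"]*)"'
)

# Illustrative subset of AI crawler tokens (an assumption, not exhaustive).
BOT_TOKENS = ("GPTBot", "ChatGPT-User", "ClaudeBot", "PerplexityBot")


def bot_name(user_agent: str) -> Optional[str]:
    ua = user_agent.lower()
    for token in BOT_TOKENS:
        if token.lower() in ua:
            return token
    return None


def summarize(
    lines: Iterable[str], path: str, published_at: datetime
) -> Tuple[Counter, Dict[str, float]]:
    """Count AI crawler hits on path and hours from publication to first hit."""
    hits: Counter = Counter()
    first_seen: Dict[str, datetime] = {}
    for line in lines:
        match = LOG_PATTERN.search(line)
        if not match or match["path"] != path:
            continue
        bot = bot_name(match["ua"])
        if bot is None:
            continue
        seen_at = datetime.strptime(match["time"], "%d/%b/%Y:%H:%M:%S %z")
        hits[bot] += 1
        if bot not in first_seen or seen_at < first_seen[bot]:
            first_seen[bot] = seen_at
    lag_hours = {
        bot: (seen - published_at).total_seconds() / 3600
        for bot, seen in first_seen.items()
    }
    return hits, lag_hours


SAMPLE_LOG = [
    '203.0.113.5 - - [10/Mar/2025:12:00:00 +0000] "GET /blog/post HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '203.0.113.5 - - [10/Mar/2025:13:30:00 +0000] "GET /blog/post HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '198.51.100.7 - - [10/Mar/2025:15:00:00 +0000] "GET /blog/post HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; ClaudeBot/1.0)"',
    '192.0.2.9 - - [10/Mar/2025:15:05:00 +0000] "GET /blog/post HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (Windows NT 10.0) Chrome/120.0"',
]

published = datetime(2025, 3, 10, 9, 0, 0, tzinfo=timezone.utc)
hits, lag_hours = summarize(SAMPLE_LOG, "/blog/post", published)
print(dict(hits), lag_hours)
```

Comparing the lag before and after enabling dynamic rendering gives a rough freshness signal, though user agent strings can be spoofed, so counts from logs are best treated as estimates.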

The landscape of dynamic rendering will continue evolving as AI systems mature and web technologies advance. Currently, the assumption is that AI crawlers will remain unable to execute JavaScript due to cost and complexity. However, as AI companies scale and optimize their infrastructure, this could change. Some experts predict that within 2-3 years, major AI crawlers might develop JavaScript rendering capabilities, making dynamic rendering less critical. Conversely, the web is moving toward server-side rendering and edge computing architectures that naturally solve the JavaScript problem without requiring separate dynamic rendering solutions. Frameworks like Next.js, Nuxt, and SvelteKit increasingly default to server-side rendering, which benefits both users and crawlers. The rise of React Server Components and similar technologies allows developers to send pre-rendered content in the initial HTML while maintaining interactivity, effectively combining the benefits of dynamic rendering with better user experience. For brands implementing dynamic rendering today, the investment remains worthwhile because it provides immediate AI visibility benefits and aligns with broader web performance best practices. As the web evolves, dynamic rendering may become less necessary, but the underlying principle—ensuring critical content is accessible to all crawlers—will remain fundamental to online visibility strategy.
