Retrieval-Augmented Generation (RAG)

Learn what Retrieval-Augmented Generation (RAG) is, how it works, and why it's essential for accurate AI responses. Explore RAG architecture, benefits, and ente...

Learn how RAG combines LLMs with external data sources to generate accurate AI responses. Understand the five-stage process, components, and why it matters for AI systems like ChatGPT and Perplexity.

Retrieval-Augmented Generation (RAG) works by combining large language models with external knowledge bases through a five-stage process: users submit queries, retrieval models search knowledge bases for relevant data, retrieved information is returned, the system augments the original prompt with context, and the LLM generates an informed response. This approach enables AI systems to provide accurate, current, and domain-specific answers without retraining.

Retrieval-Augmented Generation (RAG) is an architectural approach that enhances large language models (LLMs) by connecting them with external knowledge bases to produce more authoritative and accurate content. Rather than relying solely on static training data, RAG systems dynamically retrieve relevant information from external sources and inject it into the generation process. This hybrid approach combines the strengths of information retrieval systems with generative AI models, enabling AI systems to provide responses grounded in current, domain-specific data. RAG has become essential for modern AI applications because it addresses fundamental limitations of traditional LLMs: outdated knowledge, hallucinations, and lack of domain expertise. According to recent market research, over 60% of organizations are developing AI-powered retrieval tools to improve reliability and personalize outputs using internal data.

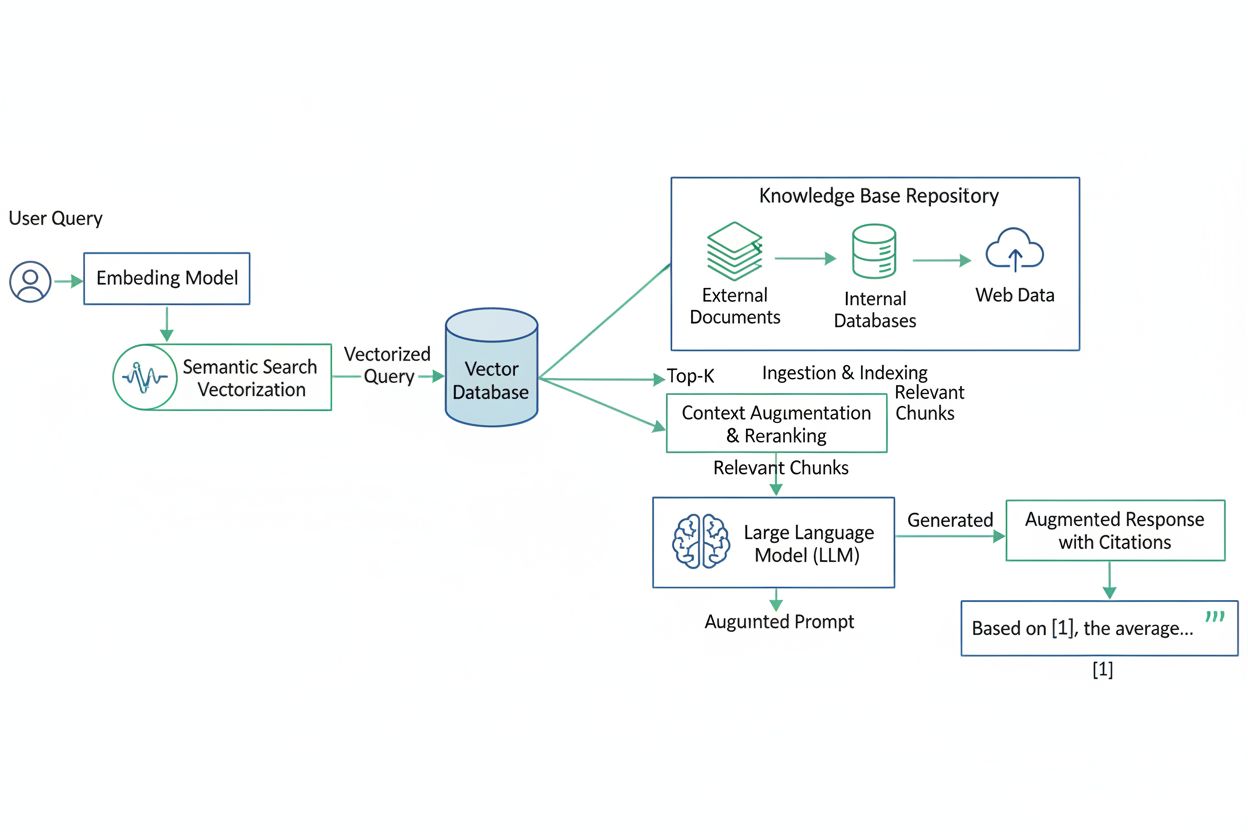

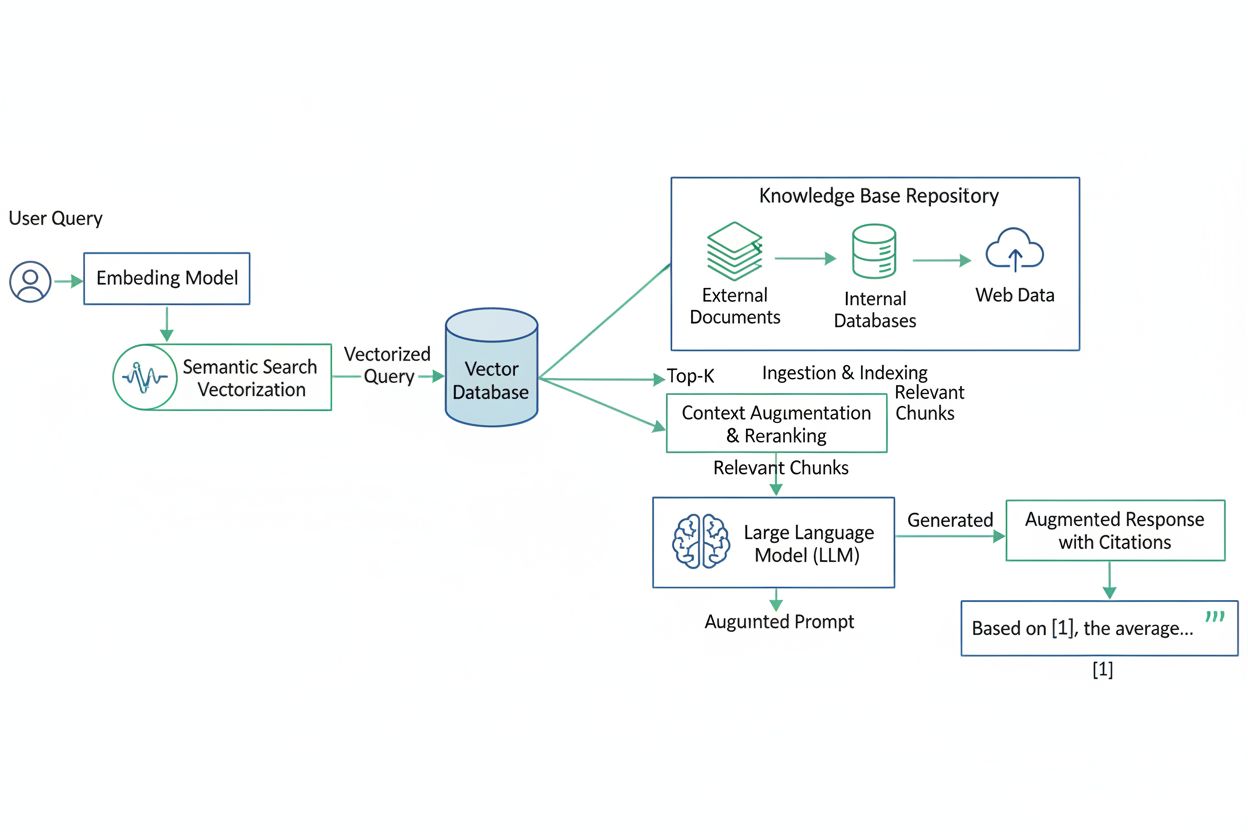

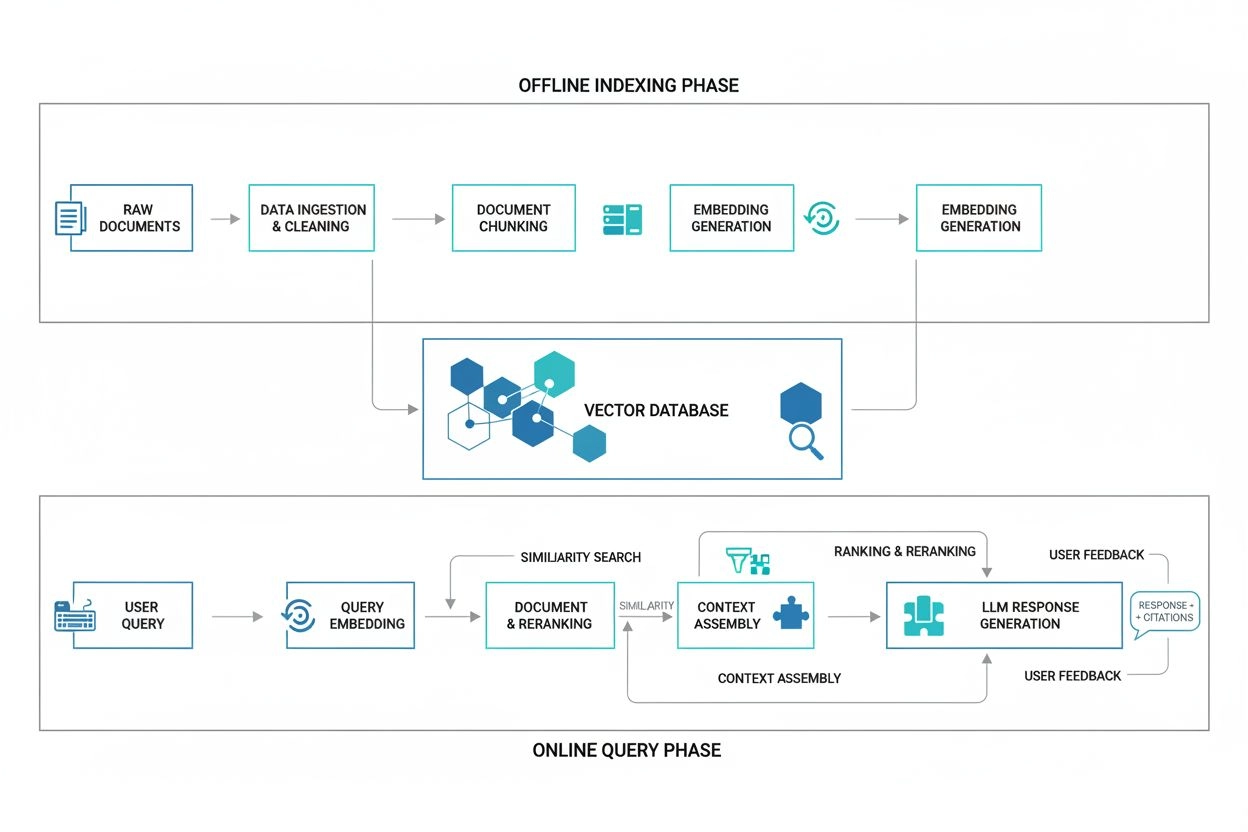

The RAG workflow follows a clearly defined five-stage process that orchestrates how information flows through the system. First, a user submits a prompt or query to the system. Second, the information retrieval model queries the knowledge base using semantic search techniques to identify relevant documents or data points. Third, the retrieval component returns matching information from the knowledge base to an integration layer. Fourth, the system engineers an augmented prompt by combining the original user query with the retrieved context, using prompt engineering techniques to optimize the LLM’s input. Fifth, the generator (typically a pretrained LLM like GPT, Claude, or Llama) produces an output based on this enriched prompt and returns it to the user. This process showcases how RAG derives its name: it retrieves data, augments the prompt with context, and generates a response. The entire workflow enables AI systems to provide answers that are not only coherent but also grounded in verifiable sources, which is particularly valuable for applications requiring accuracy and transparency.
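The five stages above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the tiny `KNOWLEDGE_BASE`, the word-overlap scoring (a stand-in for real semantic search), and the `echo_llm` placeholder generator are all assumptions made for the example.

```python
from typing import Callable, List

# Hypothetical toy knowledge base; a real system would query a vector store.
KNOWLEDGE_BASE = [
    "Employees accrue 20 days of paid leave per year.",
    "The office is closed on public holidays.",
    "Expense reports are due by the fifth of each month.",
]


def retrieve(query: str, top_k: int = 2) -> List[str]:
    """Stages 2-3: score documents by word overlap (a stand-in for semantic search)."""
    query_words = set(query.lower().split())
    scored = [
        (len(query_words & set(doc.lower().rstrip(".").split())), doc)
        for doc in KNOWLEDGE_BASE
    ]
    # Highest overlap first; the sort is stable, so ties keep their original order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:top_k] if score > 0]


def augment(query: str, passages: List[str]) -> str:
    """Stage 4: combine the retrieved context with the original query."""
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return (
        "Answer using only the context below. Cite sources by number.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


def answer(query: str, generate: Callable[[str], str]) -> str:
    """Stages 1-5 end to end; `generate` is whatever LLM call you plug in."""
    passages = retrieve(query)
    prompt = augment(query, passages)
    return generate(prompt)


def echo_llm(prompt: str) -> str:
    """Placeholder generator that only reports the prompt size."""
    return f"(stub response to a {len(prompt)}-character prompt)"
```

Calling `answer("How many days of paid leave do employees get?", echo_llm)` runs all five stages. Swapping `echo_llm` for a real model call changes only the last step.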

A complete RAG architecture consists of four primary components working in concert. The knowledge base serves as the external data repository containing documents, PDFs, databases, websites, and other unstructured data sources. The retriever is an AI model that searches this knowledge base for relevant information using vector embeddings and semantic search algorithms. The integration layer coordinates the overall functioning of the RAG system, managing data flow between components and orchestrating prompt augmentation. The generator is the LLM that synthesizes the user query with retrieved context to produce the final response. Additional components may include a ranker that scores retrieved documents by relevance and an output handler that formats responses for end users. The knowledge base must be continuously updated to maintain relevance, and documents are typically processed through chunking—dividing large documents into smaller, semantically coherent segments—to ensure they fit within the LLM’s context window without losing meaning.
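As a rough illustration of chunking, the sketch below splits text into fixed-size word windows with some overlap between neighbors. It is a simplification: the function name `chunk_words` and its default sizes are assumptions for the example, and real pipelines often split on sentence or section boundaries to keep chunks semantically coherent.

```python
from typing import List


def chunk_words(text: str, chunk_size: int = 50, overlap: int = 10) -> List[str]:
    """Split text into windows of `chunk_size` words that share `overlap` words."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")

    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once a window has reached the end of the text.
        if start + chunk_size >= len(words):
            break
    return chunks
```

The overlap means a sentence that straddles a boundary appears in both neighboring chunks, which lowers the chance of retrieving only half of a relevant passage.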

The technical foundation of RAG relies on vector embeddings and vector databases to enable efficient semantic search. When documents are added to a RAG system, they undergo an embedding process in which text is converted into numerical vectors that represent semantic meaning in a multidimensional space. These vectors are stored in a vector database, which allows the system to perform rapid similarity searches. When a user submits a query, the retrieval model converts that query into an embedding using the same embedding model, then searches the vector database for the vectors most similar to the query embedding. This semantic search approach differs fundamentally from traditional keyword-based search because it compares meaning rather than just matching words. For example, a query about “employee benefits” can retrieve documents about “compensation packages” because the two phrases are close in meaning, even though the exact words differ. The approach is also efficient: with approximate nearest-neighbor indexes, vector databases can search millions of documents in milliseconds, making RAG practical for real-time applications. The quality of embeddings directly affects RAG performance, which is why organizations carefully select embedding models suited to their specific domains and use cases.
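The similarity search described above can be illustrated with cosine similarity over a handful of vectors. In this sketch the three-dimensional vectors in `INDEX` and `QUERY_VECTOR` are made up by hand to stand in for real embeddings, and the search is a brute-force scan; an actual vector database would use a trained embedding model and an approximate nearest-neighbor index.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Hand-assigned vectors standing in for real document embeddings (assumption).
INDEX: Dict[str, List[float]] = {
    "compensation packages": [0.9, 0.1, 0.0],
    "office parking rules": [0.1, 0.9, 0.1],
    "quarterly sales report": [0.0, 0.2, 0.9],
}

# Pretend this is the embedding of the query "employee benefits" (assumption).
QUERY_VECTOR = [0.8, 0.2, 0.1]


def search(
    query_vector: Sequence[float],
    index: Dict[str, List[float]],
    top_k: int = 2,
) -> List[Tuple[str, float]]:
    """Brute-force nearest-neighbor search: score every document, keep the best."""
    scored = [
        (doc_id, cosine_similarity(query_vector, vector))
        for doc_id, vector in index.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]
```

With these made-up vectors, `search(QUERY_VECTOR, INDEX)` ranks "compensation packages" first even though it shares no words with the query, which is the behavior the paragraph above describes.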

| Aspect | RAG | Fine-Tuning |
|---|---|---|
| Approach | Retrieves external data at query time | Retrains model on domain-specific data |
| Cost | Low to moderate; no model retraining | High; requires significant computational resources |
| Implementation Time | Days to weeks | Weeks to months |
| Data Requirements | External knowledge base or vector database | Thousands of labeled training examples |
| Knowledge Cutoff | Eliminates cutoff; uses current data | Frozen at training time |
| Flexibility | Highly flexible; update sources anytime | Requires retraining for updates |
| Use Case | Dynamic data, current information needs | Behavior change, specialized language patterns |
| Hallucination Risk | Reduced through grounding in sources | Still present; depends on training data quality |

RAG and fine-tuning are complementary approaches rather than competing alternatives. RAG is ideal when organizations need to incorporate dynamic, frequently updated data without the expense and complexity of retraining models. Fine-tuning is more appropriate when you want to change how a model behaves or teach it specialized language patterns specific to your domain. Many organizations use both techniques together: fine-tuning a model to understand domain-specific terminology and desired output formats, while using RAG to ensure responses are grounded in current, authoritative information. The global RAG market is growing rapidly, estimated at $1.85 billion in 2025 and projected to reach $67.42 billion by 2034, reflecting the technology’s importance in enterprise AI deployments.

One of the most significant benefits of RAG is its ability to reduce AI hallucinations, instances where models generate plausible-sounding but factually incorrect information. Traditional LLMs rely entirely on patterns learned during training, which can lead them to confidently assert false information when they lack knowledge about a topic. RAG anchors LLMs in specific, authoritative knowledge by instructing the model to base responses on retrieved documents. When the retrieval system successfully identifies relevant, accurate sources, the LLM is steered toward synthesizing information from those sources rather than generating content from its training data alone. This grounding effect significantly reduces hallucinations because the model works within the boundaries of the retrieved information. Additionally, RAG systems can include source citations in their responses, allowing users to verify claims by consulting original documents. Research indicates that RAG implementations achieve approximately 15% improvement in precision, as measured by retrieval metrics such as Mean Average Precision (MAP) and Mean Reciprocal Rank (MRR). However, RAG cannot eliminate hallucinations entirely: if the retrieval system returns irrelevant or low-quality documents, the LLM may still generate inaccurate responses. This is why retrieval quality is critical to RAG success.
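For readers unfamiliar with the two retrieval metrics named above, here is a small sketch of how MRR and MAP are commonly computed. The function names and the `(ranked, relevant)` input shape are choices made for this example, and the code assumes each ranked list contains no duplicate document IDs.

```python
from typing import List, Sequence, Set, Tuple

Run = Tuple[Sequence[str], Set[str]]  # (ranked doc IDs, relevant doc IDs)


def reciprocal_rank(ranked: Sequence[str], relevant: Set[str]) -> float:
    """1 / rank of the first relevant document, or 0.0 if none was retrieved."""
    for rank, doc_id in enumerate(ranked, start=1):
        if doc_id in relevant:
            return 1.0 / rank
    return 0.0


def average_precision(ranked: Sequence[str], relevant: Set[str]) -> float:
    """Mean of precision@k taken at each rank k where a relevant document appears."""
    if not relevant:
        return 0.0
    hits = 0
    precision_sum = 0.0
    for rank, doc_id in enumerate(ranked, start=1):
        if doc_id in relevant:
            hits += 1
            precision_sum += hits / rank
    # Dividing by all relevant documents penalizes the ones never retrieved.
    return precision_sum / len(relevant)


def mean_reciprocal_rank(runs: List[Run]) -> float:
    """MRR: average reciprocal rank across queries."""
    if not runs:
        return 0.0
    return sum(reciprocal_rank(r, rel) for r, rel in runs) / len(runs)


def mean_average_precision(runs: List[Run]) -> float:
    """MAP: average of per-query average precision."""
    if not runs:
        return 0.0
    return sum(average_precision(r, rel) for r, rel in runs) / len(runs)
```

MRR rewards placing the first relevant document near the top, while MAP also accounts for how many relevant documents are found and where, so the two can move differently when a retriever changes.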

Different AI systems implement RAG with varying architectures and capabilities. ChatGPT uses retrieval mechanisms when accessing external knowledge, for example through web search and user-uploaded files, allowing it to reference current information beyond its training cutoff. Perplexity is fundamentally built on RAG principles, retrieving real-time information from the web to ground its responses in current sources, which is why it can cite specific URLs and publications. Claude by Anthropic supports RAG through its API and can be configured to reference external documents provided by users. Google AI Overviews (formerly SGE) integrate retrieval from Google’s search index to provide synthesized answers with source attribution. These platforms demonstrate that RAG has become a standard architecture for modern AI systems that need to provide accurate, current, and verifiable information. The implementation details vary (some systems retrieve from the public web, others from proprietary databases, and enterprise implementations from internal knowledge bases), but the fundamental principle remains consistent: augmenting generation with retrieved context.

Implementing RAG at scale introduces several technical and operational challenges that organizations must address. Retrieval quality is paramount; even the most capable LLM will generate poor responses if the retrieval system returns irrelevant documents. This requires careful selection of embedding models, similarity metrics, and ranking strategies optimized for your specific domain. Context window limitations present another challenge: injecting too much retrieved content can overwhelm the LLM’s context window, leading to truncated sources or diluted responses. The chunking strategy—how documents are divided into segments—must balance semantic coherence with token efficiency. Data freshness is critical because RAG’s primary advantage is accessing current information; without scheduled ingestion jobs or automated updates, document indexes quickly become stale, reintroducing hallucinations and outdated answers. Latency can be problematic when dealing with large datasets or external APIs, as retrieval, ranking, and generation all add processing time. Finally, RAG evaluation is complex because traditional AI metrics fall short; evaluating RAG systems requires combining human judgment, relevance scoring, groundedness checks, and task-specific performance metrics to assess response quality comprehensively.
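The context-window challenge can be made concrete with a simple budgeting step that decides which retrieved chunks go into the prompt. This is a sketch under stated assumptions: `approx_tokens` counts whitespace-separated words as a crude proxy for tokens (a real system would use the model's tokenizer), and the greedy policy shown here is one possible choice among several.

```python
from typing import List


def approx_tokens(text: str) -> int:
    """Crude token estimate: whitespace-separated words (an assumption)."""
    return len(text.split())


def pack_context(ranked_chunks: List[str], budget: int) -> List[str]:
    """Greedily keep chunks, best-ranked first, until the token budget is used.

    Chunks that do not fit are skipped rather than truncated, so a shorter
    lower-ranked chunk can still be included.
    """
    selected: List[str] = []
    used = 0
    for chunk in ranked_chunks:
        cost = approx_tokens(chunk)
        if used + cost > budget:
            continue
        selected.append(chunk)
        used += cost
    return selected
```

Skipping oversized chunks instead of cutting them mid-sentence avoids feeding the model truncated sources, at the cost of sometimes leaving part of the budget unused.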

RAG is rapidly evolving from a workaround into a foundational component of enterprise AI architecture. The technology is moving beyond simple document retrieval toward more sophisticated, modular systems. Hybrid architectures are emerging that combine RAG with tools, structured databases, and function-calling agents, where RAG provides unstructured grounding while structured data handles precise tasks. This hybrid approach enables more reliable end-to-end automation for complex business processes. Retriever-generator co-training represents another major development, where the retrieval and generation components are trained jointly to optimize each other’s performance. This approach reduces the need for manual prompt engineering and fine-tuning while improving overall system quality. As LLM architectures mature, RAG systems are becoming more seamless and contextual, moving beyond fixed document stores to handle real-time data flows, multi-document reasoning, and persistent memory. The integration of RAG with AI agents is particularly significant: agents can use RAG to access knowledge bases while making autonomous decisions about which information to retrieve and how to act on it. This evolution positions RAG as essential infrastructure for trustworthy, intelligent AI systems that can operate reliably in production environments.

For organizations deploying AI systems, understanding RAG is crucial because it influences how your content and brand information appear in AI-generated responses. When AI systems like ChatGPT, Perplexity, Claude, and Google AI Overviews use RAG to retrieve information, they pull from indexed knowledge bases that may include your website, documentation, or other published content. This makes brand monitoring in AI systems increasingly important. Tools like AmICited track how your domain, brand, and specific URLs appear in AI-generated answers across multiple platforms, helping you understand whether your content is being properly attributed and whether your brand messaging is accurately represented. As RAG becomes a standard architecture for AI systems, the ability to monitor and optimize your presence in these retrieval-augmented responses becomes a critical component of your digital strategy. Organizations can use this visibility to identify opportunities to improve their content’s relevance for AI retrieval, ensure proper attribution, and understand how their brand is being represented in the AI-powered search landscape.

Track how your content appears in AI system responses powered by RAG. AmICited monitors your domain across ChatGPT, Perplexity, Claude, and Google AI Overviews to ensure your brand gets proper attribution.
