Does Page Speed Affect AI Search Visibility? Complete Guide to AEO Performance

Learn how page speed impacts your visibility in AI search engines like ChatGPT, Perplexity, and Gemini. Discover optimization strategies and metrics that matter...

Discover the critical factors affecting AI indexing speed including site performance, crawl budget, content structure, and technical optimization. Learn how to optimize your website for faster AI search engine indexing.

AI indexing speed is affected by multiple factors including site performance and load times, crawl budget availability, content quality and structure, technical SEO configuration, database indexing efficiency, schema markup implementation, and the complexity of your website architecture. Optimizing these elements ensures AI crawlers can efficiently discover, process, and index your content.

AI indexing speed determines how quickly your content becomes discoverable in AI-powered search engines like ChatGPT, Perplexity, and Google’s AI Overviews. Unlike traditional search engines that simply match keywords to pages, AI systems must crawl, understand, and synthesize your content to generate accurate responses. The speed at which this happens depends on numerous interconnected factors that directly impact your visibility in AI-generated answers and your ability to capture traffic from AI search platforms.

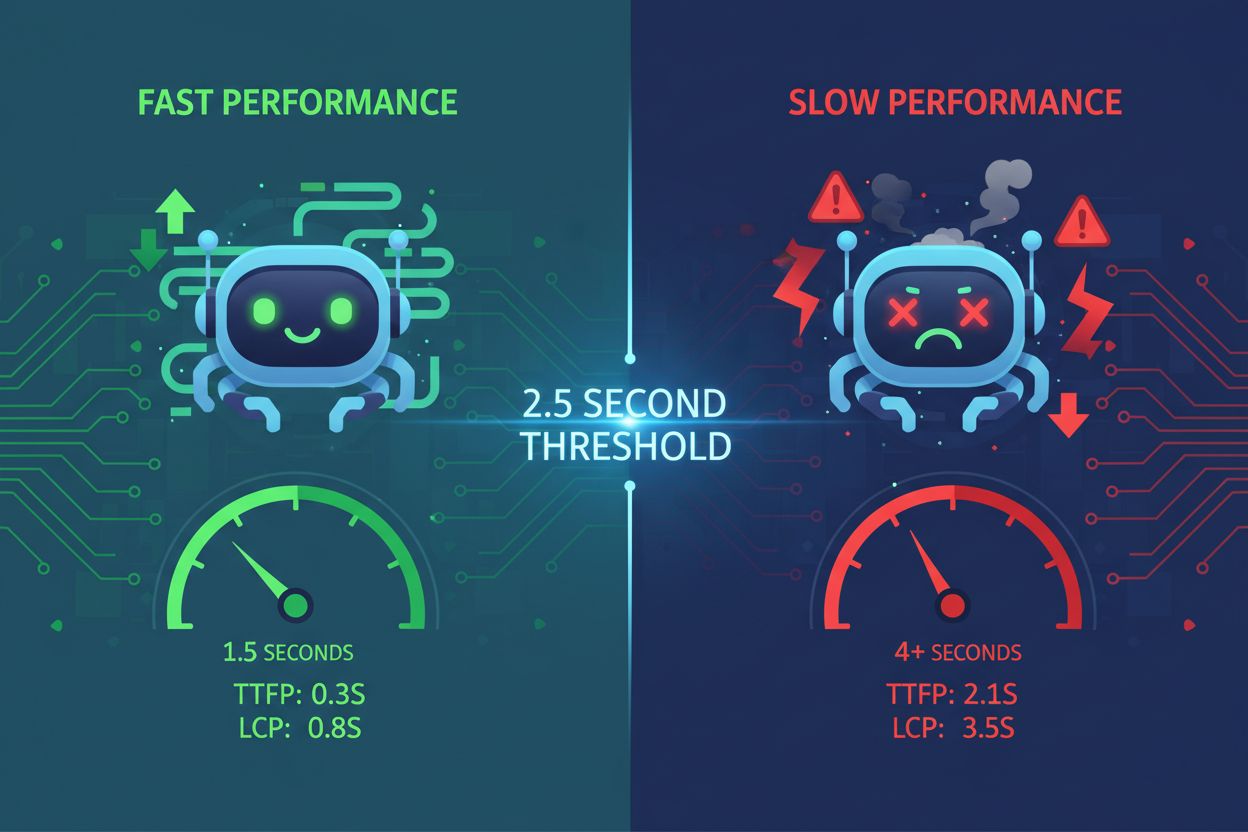

Page load speed is one of the most critical factors affecting AI indexing speed. AI crawlers operate within strict resource constraints and time limits, typically between 1 and 5 seconds per page request. When your website loads slowly, crawlers spend more time retrieving each page, so fewer pages get indexed within the allocated crawl window. Slow performance therefore directly reduces the number of your pages that AI systems can process and index.

Slow-loading sites can also signal poor maintenance to AI systems. When pages take too long to render, AI crawlers may time out before reaching your actual content, leaving only the HTML skeleton indexed. This is particularly problematic for JavaScript-heavy websites where content loads dynamically on the client side: the two-step process of fetching the page and then rendering its JavaScript adds significant latency, making it harder for AI systems to extract meaningful information. Compressing images, minifying code, implementing lazy loading, and using Content Delivery Networks (CDNs) can substantially improve load times and let AI crawlers process more pages.

| Performance Metric | Target | Impact on AI Indexing |
|---|---|---|
| Page Load Time | Under 3 seconds | Allows more pages to be crawled per session |
| Largest Contentful Paint (LCP) | Under 2.5 seconds | Ensures AI sees meaningful content quickly |
| First Input Delay (FID), since replaced by Interaction to Next Paint (INP) in Core Web Vitals | Below 100 ms (INP: under 200 ms) | Improves crawler responsiveness |
| Cumulative Layout Shift (CLS) | Close to zero | Prevents content extraction errors |
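
The targets in the table can be turned into a simple pass/fail check. The sketch below is illustrative only: the metric keys are hypothetical names, and the 0.1 cutoff for CLS is an assumption standing in for "close to zero".

```python
# Ceilings taken from the table above. Keys are hypothetical names chosen
# for this sketch; measure the values with whatever tool you already use.
TARGETS = {
    "page_load_s": 3.0,  # Page Load Time: under 3 seconds
    "lcp_s": 2.5,        # Largest Contentful Paint: under 2.5 seconds
    "fid_ms": 100.0,     # First Input Delay: below 100 ms
    "cls": 0.1,          # CLS: "close to zero"; 0.1 is an assumed cutoff
}


def failing_metrics(measured: dict[str, float]) -> list[str]:
    """Return the names of measured metrics that reach or exceed their ceiling."""
    return sorted(
        name
        for name, ceiling in TARGETS.items()
        if name in measured and measured[name] >= ceiling
    )


if __name__ == "__main__":
    sample = {"page_load_s": 3.4, "lcp_s": 2.1, "fid_ms": 80, "cls": 0.02}
    print(failing_metrics(sample))
```

Metrics missing from the input are skipped rather than treated as failures, so a partial measurement still produces a usable report.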

Crawl budget refers to the limited number of pages AI systems can visit within a specific timeframe. Every website receives a finite crawl allocation from AI search engines, similar to how Google allocates crawl budget. When your crawl budget is depleted, AI systems stop indexing new content, regardless of quality. This limitation becomes especially problematic for large websites with hundreds or thousands of pages competing for limited indexing resources.

Optimizing crawl budget allocation is essential for faster AI indexing. You should prioritize high-value pages—those that generate revenue, attract traffic, or contain critical information—over low-value pages like duplicate category filters or redundant tag pages. Using robots.txt files strategically, you can block AI crawlers from accessing pages that don’t need indexing, preserving your crawl budget for content that matters. Additionally, maintaining an updated XML sitemap and using proper internal linking structure helps AI systems discover your most important pages faster, ensuring they get indexed before your crawl budget runs out.
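
Keeping the XML sitemap current is easier when it is generated rather than edited by hand. The following is a minimal sketch using only the Python standard library; the URLs and dates are placeholders, and it assumes you already have a list of high-value pages with their last-modified dates.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Build sitemap XML from (url, last_modified) pairs.

    last_modified is expected in YYYY-MM-DD form; this sketch does not validate it.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, lastmod in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        ET.SubElement(entry, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")


if __name__ == "__main__":
    # Placeholder pages; list only the pages you want crawled first.
    print(build_sitemap([
        ("https://example.com/pricing", "2024-01-15"),
        ("https://example.com/blog/ai-indexing", "2024-02-02"),
    ]))
```

Listing only priority pages here, and leaving filter and tag pages out, mirrors the prioritization described above.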

AI systems heavily penalize duplicate and thin content, which slows indexing significantly. When AI crawlers encounter content that lacks originality or substance, they deprioritize crawling similar pages on your site. This is because AI systems evaluate content quality using E-E-A-T signals (Experience, Expertise, Authoritativeness, and Trustworthiness). Content that appears to be low-effort, AI-generated without human review, or duplicated from other sources receives lower priority in the indexing queue.

High-quality, comprehensive content tends to get indexed faster by AI systems. Long-form content (the guideline here is more than 3,000 words) that thoroughly answers user questions from multiple angles is more likely to receive priority indexing. AI systems favor content that includes supporting data, statistics, real-world examples, and case studies because these elements demonstrate expertise and trustworthiness. When you create original, well-researched content that provides genuine value, AI crawlers allocate more resources to indexing your pages, resulting in faster discovery and inclusion in AI-generated answers.

Improper robots.txt configuration can accidentally block AI crawlers from indexing your content. Many websites inadvertently prevent AI search bots from accessing their pages through misconfigured robots.txt files. Different AI systems use different crawler identifiers: ChatGPT uses OAI-SearchBot, Perplexity uses PerplexityBot, and others use AndiBot or ExaBot. Crawlers that honor robots.txt treat a path as allowed unless a rule disallows it, so the usual culprit is a broad rule, such as a blanket Disallow under User-agent: *, that these bots inherit. If your site has such a rule, add explicit Allow rules for the AI crawlers you want; otherwise they will skip your content regardless of its quality or relevance.
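
One way to catch an accidental block is to test a robots.txt file against the crawler names listed above before deploying it. This sketch uses Python's standard `urllib.robotparser`; the sample rules and the example URL are hypothetical, and real crawlers may interpret edge cases differently from this parser.

```python
from urllib.robotparser import RobotFileParser

# Crawler identifiers as named in this article.
AI_CRAWLERS = ["OAI-SearchBot", "PerplexityBot", "AndiBot", "ExaBot"]


def crawler_access(robots_txt: str, url: str, agents=AI_CRAWLERS) -> dict[str, bool]:
    """Report, per user agent, whether the given robots.txt text permits fetching url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


# Hypothetical rules: two AI crawlers are allowed explicitly, everything else is blocked.
ROBOTS = """\
User-agent: OAI-SearchBot
Allow: /

User-agent: PerplexityBot
Allow: /

User-agent: *
Disallow: /
"""

if __name__ == "__main__":
    for agent, allowed in crawler_access(ROBOTS, "https://example.com/blog/post").items():
        print(f"{agent}: {'allowed' if allowed else 'blocked'}")
```

With these sample rules, the two crawlers without their own group fall back to the wildcard group and are blocked, which is the kind of inherited restriction worth checking for.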

Clean HTML structure and semantic markup accelerate AI indexing. AI crawlers struggle with JavaScript-heavy implementations and deeply nested structures. Using semantic HTML5 tags (article, section, nav), a proper heading hierarchy (H1-H6), descriptive link text, and alt text for images makes your content immediately accessible to AI systems. Frameworks like Next.js or Gatsby can pre-render content through server-side rendering (SSR) or static generation, so AI crawlers receive fully formed pages without having to execute JavaScript, which significantly speeds up indexing.
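
A heading hierarchy is easy to audit automatically. The sketch below, which relies only on the standard-library `html.parser`, flags places where a heading skips a level (for example an H2 followed directly by an H4). It is a rough check under the assumption that the server-delivered HTML already contains the headings; it does not execute JavaScript.

```python
from html.parser import HTMLParser


class HeadingOutline(HTMLParser):
    """Collect heading levels (1-6) in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.levels: list[int] = []

    def handle_starttag(self, tag, attrs):
        # Matches h1..h6 only; tags such as <hr> or <html> are ignored.
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def skipped_levels(html: str) -> list[tuple[int, int]]:
    """Return (previous, current) level pairs where a heading jumps down more than one level."""
    parser = HeadingOutline()
    parser.feed(html)
    return [(a, b) for a, b in zip(parser.levels, parser.levels[1:]) if b > a + 1]


if __name__ == "__main__":
    page = "<article><h1>Guide</h1><h2>Setup</h2><h4>Details</h4></article>"
    print(skipped_levels(page))
```

Running the same check against the raw server response and against the browser-rendered DOM is one way to spot headings that only exist after client-side rendering.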

Over-indexing in your database creates latency that slows AI indexing speed. When databases have excessive, redundant, or overlapping indexes, every insert, update, and delete operation must update multiple indexes, creating write performance bottlenecks. This overhead directly impacts how quickly your content management system can serve pages to AI crawlers. Redundant indexes consume storage space, introduce latency, and force query planners to make suboptimal decisions, all of which slow down content delivery to AI systems.

Optimizing database indexes improves content delivery speed to AI crawlers. Regularly audit your database to identify unused or redundant indexes using statistics views such as pg_stat_user_indexes (PostgreSQL) or sys.schema_unused_indexes (MySQL). Remove indexes that have not been used in weeks or months, consolidate overlapping indexes, and make sure your schema matches current query patterns. A well-optimized database serves content faster to AI crawlers, enabling quicker indexing and inclusion in AI-generated responses.
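
Overlapping indexes can often be spotted from their column lists alone. As a minimal sketch, the function below flags an index whose columns form a leading prefix of another index on the same table, since the longer index can usually serve the same lookups. The index and column names are hypothetical, and the check ignores uniqueness constraints, partial indexes, and sort order, so treat its output as candidates for review rather than a drop list.

```python
def redundant_indexes(indexes: dict[str, tuple[str, ...]]) -> list[tuple[str, str]]:
    """Return (candidate, covering) pairs for indexes on a single table.

    An index is a candidate when its column tuple is a strict leading prefix
    of another index's columns. Exact duplicates are not reported.
    """
    pairs = []
    for name, cols in indexes.items():
        for other, other_cols in indexes.items():
            if name == other:
                continue
            if len(cols) < len(other_cols) and other_cols[: len(cols)] == cols:
                pairs.append((name, other))
    return sorted(pairs)


if __name__ == "__main__":
    # Hypothetical indexes on a CMS "posts" table.
    posts_indexes = {
        "idx_posts_author": ("author_id",),
        "idx_posts_author_date": ("author_id", "published_at"),
        "idx_posts_slug": ("slug",),
    }
    print(redundant_indexes(posts_indexes))
```

Combining this structural check with usage statistics from the database gives a stronger signal than either one alone.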

Missing or incomplete schema markup delays AI content understanding and indexing. Schema markup provides structured data that helps AI systems quickly understand your content’s context, meaning, and relationships. Without proper schema implementation, AI crawlers must spend additional processing time inferring content structure and extracting key information. This additional processing time reduces indexing speed because crawlers allocate more resources per page.

Implementing comprehensive schema markup accelerates AI indexing. FAQ schema, How-To schema, Article schema, and Product schema all provide AI systems with pre-structured information that can be immediately understood and indexed. When you include author information, publication dates, ratings, and other structured data, AI systems can quickly categorize and index your content without additional processing. By one reported figure, 36.6% of search keywords trigger featured snippets. Featured snippets are extracted from page content rather than from schema markup itself, but the figure illustrates how often search systems surface clearly structured answers, which is the same quality structured data reinforces.
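
Article schema is typically embedded as a JSON-LD script tag. The following sketch builds one with the standard `json` module; the field selection is a small illustrative subset of schema.org's Article type, and the headline, author, and date values are placeholders.

```python
import json

SCRIPT_OPEN = '<script type="application/ld+json">'
SCRIPT_CLOSE = "</script>"


def article_json_ld(headline: str, author: str, date_published: str) -> str:
    """Return a JSON-LD script tag describing an Article."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,
    }
    # Escape "</" so a value containing "</script>" cannot end the tag early;
    # "\/" is a valid JSON escape for "/".
    payload = json.dumps(data).replace("</", "<\\/")
    return SCRIPT_OPEN + payload + SCRIPT_CLOSE


if __name__ == "__main__":
    print(article_json_ld("What Affects AI Indexing Speed?", "Jane Doe", "2024-05-01"))
```

Generating the tag from the same data your CMS uses for the visible byline and date helps keep the structured data consistent with what is on the page.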

Weak internal linking prevents AI crawlers from discovering your content efficiently. Internal links act as a roadmap that guides AI crawlers through your website architecture. Without strategic internal linking, AI systems may struggle to discover important pages, especially newer content buried deep in your site structure. This discovery delay directly translates to slower indexing because crawlers must spend more time finding pages to index.

Strategic internal linking accelerates AI content discovery and indexing. Linking new content from relevant existing pages helps AI crawlers find and index it faster. Using descriptive anchor text that clearly indicates what the linked page contains helps AI systems understand content relationships and context. Proper internal linking structure ensures that high-value pages receive more crawl attention, resulting in faster indexing and higher priority in AI-generated responses.
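
How deeply a page is buried can be measured as its click depth from the home page. The sketch below runs a breadth-first search over an internal link graph; the graph here is a hypothetical hand-written dictionary, whereas in practice it would come from a crawl of your own site.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str) -> dict[str, int]:
    """Return the minimum number of clicks from home to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


if __name__ == "__main__":
    # Hypothetical site structure: page -> pages it links to.
    site = {
        "/": ["/blog", "/pricing"],
        "/blog": ["/blog/post-1"],
        "/blog/post-1": ["/blog/post-2"],
    }
    for page, depth in click_depths(site, "/").items():
        print(depth, page)
```

Pages that never appear in the result are orphans with no internal path from the home page, and pages with a large depth are candidates for a link from a more prominent page.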

Poor user experience signals to AI systems that your content may not be valuable. High bounce rates, short session durations, and low engagement suggest that your content does not meet user needs. Crawlers do not observe these metrics directly, but AI systems increasingly use behavioral signals to assess content quality, and pages with poor UX receive lower indexing priority. When users quickly leave your pages, AI systems interpret this as a sign that the content lacks value, slowing its inclusion in AI-generated answers.

Reader-friendly content structure improves AI indexing speed. Content formatted with short paragraphs (2-3 sentences), descriptive subheadings, bullet points, numbered lists, and tables is easier for both users and AI systems to process. This improved scannability allows AI crawlers to quickly extract key information and understand content structure without extensive processing. When your content is well-organized and accessible, AI systems can index it faster and with higher confidence in its quality and relevance.

Slow hosting infrastructure creates bottlenecks that delay AI indexing. Shared hosting environments often have limited resources and slower response times, which means AI crawlers must wait longer for each page to load. This waiting time reduces the number of pages that can be indexed within the crawler’s allocated time window. Server response time directly impacts crawl efficiency—every millisecond of delay reduces the total pages indexed per session.
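
The effect of response time on crawl volume can be approximated with simple arithmetic. This sketch assumes a deliberately simplified model in which a crawler fetches pages one at a time within a fixed time window; the window length and response times are hypothetical, and real crawlers fetch in parallel and adapt their rate.

```python
def pages_per_window(window_ms: int, response_ms: int) -> int:
    """Pages fetched sequentially within a window, under the simplified model above."""
    if response_ms <= 0:
        raise ValueError("response_ms must be positive")
    return window_ms // response_ms


if __name__ == "__main__":
    window = 60_000  # hypothetical 60-second crawl window
    for response in (200, 800):
        print(f"{response} ms per page: {pages_per_window(window, response)} pages")
```

Under these assumptions, cutting response time from 800 ms to 200 ms quadruples the number of pages fetched in the same window (75 versus 300).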

Upgrading to fast, scalable hosting accelerates AI indexing. Managed WordPress hosts, VPS solutions, and cloud platforms like Google Cloud or AWS provide faster server response times and better resource allocation. CDNs like Cloudflare cache content globally and serve pages faster to crawlers regardless of their geographic location. When your hosting infrastructure delivers pages quickly, AI crawlers can process more content per session, resulting in faster overall indexing and better visibility in AI-generated answers.

Continuous monitoring of AI indexing performance enables proactive optimization. Tools like Google Search Console, SE Ranking’s AI Results Tracker, and Peec.ai help you track how quickly your content appears in AI search results and identify pages that aren’t being indexed. By monitoring these metrics, you can identify bottlenecks and implement targeted improvements that directly impact indexing speed.

Regular audits and updates maintain optimal AI indexing speed. Conduct periodic performance audits using tools like Google PageSpeed Insights, GTmetrix, and WebPageTest to identify speed bottlenecks. Update your XML sitemap regularly, refresh content to maintain freshness signals, and continuously optimize your database and infrastructure. Consistent optimization ensures that your AI indexing speed remains competitive and your content continues to appear quickly in AI-generated answers.
