Understanding Vector Embeddings: How AI Matches Content to Queries

Learn how vector embeddings enable AI systems to understand semantic meaning and match content to queries. Explore the technology behind semantic search and AI ...

Learn how embeddings work in AI search engines and language models. Understand vector representations, semantic search, and their role in AI-generated answers.

Embeddings are numerical vector representations of text, images, or other data that capture semantic meaning and relationships. They enable AI systems to understand context and perform efficient similarity searches, making them fundamental to how modern AI search engines and language models retrieve and generate relevant information.

In AI search, embeddings transform complex information such as text, images, or documents into a format that machine learning models can process efficiently. The resulting vectors live in a high-dimensional space where similar items sit closer together, reflecting their semantic relationships. This technology underpins how AI answer engines such as ChatGPT and Perplexity interpret queries and retrieve relevant information from large knowledge bases.

The core purpose of embeddings is to bridge the gap between human language and machine understanding. When you search for information or ask a question in an AI search engine, your query is converted into an embedding—a numerical representation that captures the meaning of your words. The AI system then compares this query embedding against embeddings of documents, articles, or other content in its knowledge base to find the most semantically similar and relevant results. This process happens in milliseconds, enabling the rapid retrieval of information that powers AI-generated answers.

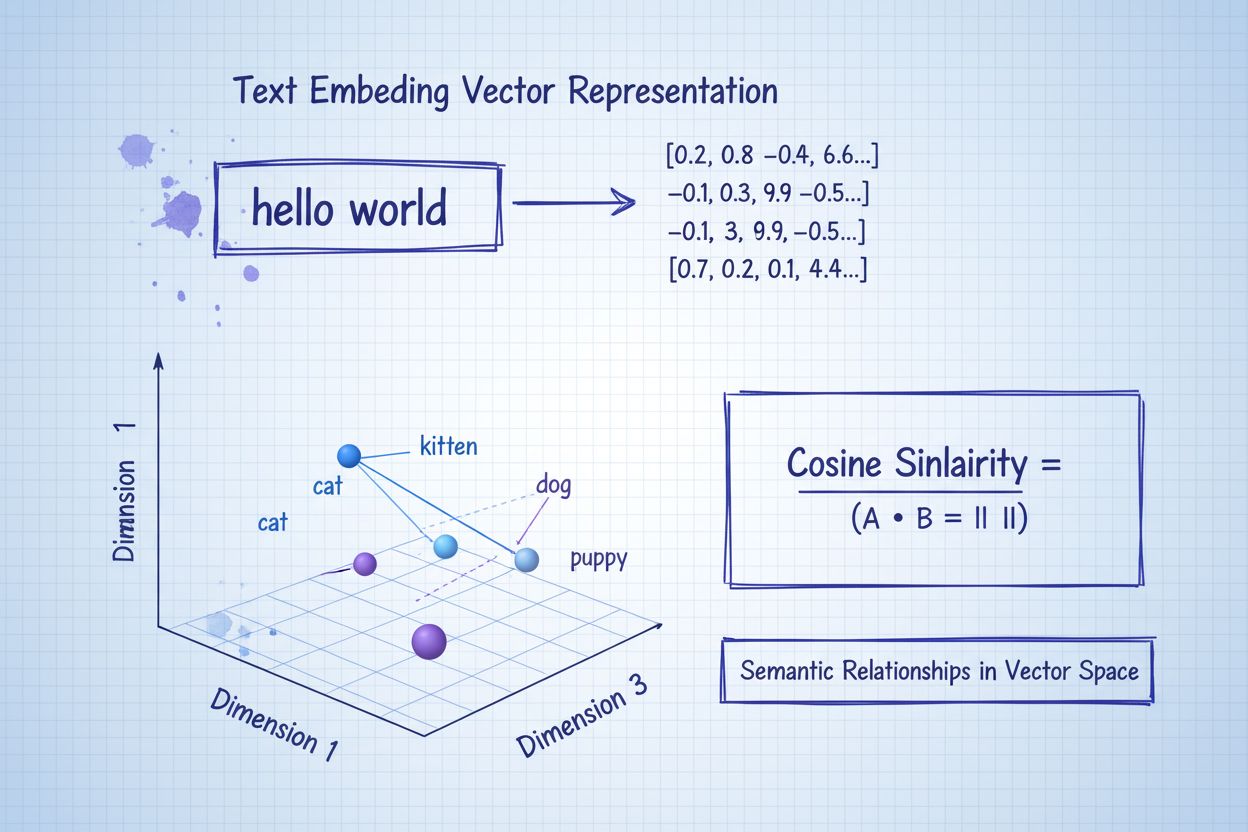

Embeddings encode semantic information into vectors of numbers, typically with hundreds to a few thousand dimensions. As an intuition, you can picture one dimension capturing whether a text relates to technology, another representing sentiment, and another indicating formality level. In trained models, however, individual dimensions are rarely this cleanly interpretable; meaning is distributed across many dimensions at once. What matters in practice is that semantically similar content produces embeddings that are mathematically close to each other in vector space.

The process of creating embeddings involves training neural networks, particularly transformer-based models, on large datasets of text or images. These models learn to recognize patterns and relationships in the data, gradually developing the ability to represent meaning numerically. Modern embedding models like Sentence-BERT (SBERT), OpenAI’s text-embedding-ada-002, and Universal Sentence Encoder have been fine-tuned specifically for semantic similarity tasks. They can process entire sentences or paragraphs and generate embeddings that accurately reflect the semantic content, rather than just individual words.

When an AI search engine receives your query, it uses the same embedding model that was used to embed the knowledge base content. This consistency is crucial: vectors produced by different embedding models live in different spaces, so mixing models for queries and stored documents would yield misaligned vectors and poor retrieval accuracy. The system then performs a similarity search by scoring your query embedding against the stored embeddings, typically using a metric such as cosine similarity. Documents with embeddings closest to your query embedding are returned as the most relevant results.
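The similarity search described above can be sketched in a few lines. This is a minimal illustration, not how any particular search engine implements it: the three-dimensional vectors are hand-made stand-ins for real embeddings (which would come from an embedding model), and `top_k` is a hypothetical helper that scans every stored vector.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def top_k(query_vec, doc_vecs, k=2):
    """Score every stored vector and return the k best (doc_id, score) pairs."""
    scored = [(doc_id, cosine_similarity(query_vec, vec))
              for doc_id, vec in doc_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]

# Assumption: toy vectors chosen by hand so the two food documents point in a
# similar direction and the tax document points elsewhere.
doc_vecs = {
    "pasta_recipe": [0.9, 0.1, 0.0],
    "pizza_guide": [0.8, 0.3, 0.1],
    "tax_filing": [0.0, 0.2, 0.9],
}
query_vec = [1.0, 0.2, 0.0]  # stand-in for an embedded food-related query

results = top_k(query_vec, doc_vecs, k=2)
for doc_id, score in results:
    print(f"{doc_id}: {score:.3f}")
```

Cosine similarity compares direction rather than length, which is why it is a common default for text embeddings. Scanning every vector like this is fine for small collections; larger ones usually rely on an index.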

Retrieval-Augmented Generation (RAG) is a technique that combines large language models with external knowledge bases, and embeddings are absolutely essential to this process. In RAG systems, embeddings enable the retrieval component to find relevant documents or passages from a knowledge base before the language model generates an answer. This approach allows AI systems to provide more accurate, current, and domain-specific information than they could generate from training data alone.

| Component | Function | Role of Embeddings |
|---|---|---|
| Query Processing | Convert user question to vector | Enables semantic understanding of the question |
| Document Retrieval | Find relevant documents | Matches query embedding against document embeddings |
| Context Provision | Supply relevant information to LLM | Ensures LLM has accurate source material |
| Answer Generation | Create response based on context | Uses retrieved context to generate accurate answers |

In a typical RAG workflow, when you ask a question, the system first converts your query into an embedding. It then searches a vector database containing embeddings of all available documents or passages. The system retrieves the documents with embeddings most similar to your query embedding, providing the language model with relevant context. The language model then uses this context to generate a more accurate and informed answer. This two-stage process—retrieval followed by generation—significantly improves the quality and reliability of AI-generated answers.
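The retrieval-then-generation flow can be sketched as follows. Several pieces here are assumptions made for illustration: `toy_embed` is a word-count stand-in for a real embedding model (it matches shared words, not meaning), the passages are invented, and the generation step is left as a prompt string rather than an actual language model call.

```python
import math
import re
from collections import Counter

def toy_embed(text):
    """Stand-in for an embedding model: a sparse word-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0

def retrieve(question, passages, k=1):
    """Stage 1: rank passages by similarity to the question embedding."""
    q = toy_embed(question)
    ranked = sorted(passages, key=lambda p: cosine(q, toy_embed(p)), reverse=True)
    return ranked[:k]

def build_prompt(question, context_passages):
    """Stage 2 input: the retrieved context plus the original question."""
    context = "\n".join(f"- {p}" for p in context_passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

passages = [
    "HNSW is a graph-based index for approximate nearest neighbor search.",
    "Chunking splits long documents into smaller passages before embedding.",
    "Quantization compresses vectors to reduce storage.",
]
question = "What does chunking do to long documents?"

context = retrieve(question, passages, k=1)
prompt = build_prompt(question, context)
print(prompt)  # in a real system this prompt would be sent to a language model
```

Swapping `toy_embed` for a real embedding model and the final `print` for a model call would leave the overall shape of the pipeline unchanged.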

Different types of data require different embedding approaches. For text data, sentence-level embeddings have become the standard in modern AI systems. Sentence-BERT generates high-quality embeddings by fine-tuning BERT specifically for semantic similarity tasks, capturing the meaning of entire sentences rather than individual words. OpenAI’s embedding models produce embeddings suitable for various text lengths, from short queries to long documents. These models have been trained on billions of text examples, enabling them to understand nuanced semantic relationships across different domains and languages.

For image data, models like CLIP (Contrastive Language-Image Pretraining) create embeddings that represent visual features and semantic content. CLIP is particularly powerful because it aligns visual and textual information in a shared embedding space, enabling multimodal retrieval where you can search for images using text queries or vice versa. This capability is increasingly important as AI search engines become more multimodal, handling not just text but also images, videos, and other media types.

For audio data, deep learning models such as Wav2Vec 2.0 generate embeddings that capture higher-level semantic content, making them suitable for voice search and audio-based AI applications. For graph data and structured relationships, techniques like Node2Vec and Graph Convolutional Networks create embeddings that preserve network neighborhoods and relationships. The choice of embedding technique depends on the specific type of data and the requirements of the AI application.

One of the most powerful applications of embeddings is semantic search, which goes beyond simple keyword matching. Traditional search engines look for exact word matches, but semantic search understands the meaning behind words and finds results based on conceptual similarity. When you search for “best restaurants near me” in an AI search engine, the system doesn’t just look for pages containing those exact words. Instead, it understands that you’re looking for dining establishments in your geographic area and retrieves relevant results based on semantic meaning.

Embeddings enable this semantic understanding by representing meaning as mathematical relationships in vector space. Two documents might use completely different words but express similar ideas—their embeddings would still be close together in vector space. This capability is particularly valuable in AI search because it allows systems to find relevant information even when the exact terminology differs. For example, a query about “vehicle transportation” would retrieve results about “cars” and “automobiles” because these concepts have similar embeddings, even though the words are different.

Semantic search through embeddings is also efficient. Rather than comparing your query against every document word by word, the system compares vectors, and each comparison is a single operation such as a dot product. Modern vector databases go further and avoid scanning every vector: they use Approximate Nearest Neighbor (ANN) search with index structures such as HNSW (Hierarchical Navigable Small World) and IVF (Inverted File Index), which keeps searches fast even across billions of embeddings, in exchange for occasionally missing a true nearest neighbor.
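To give a feel for how an inverted-file (IVF) index avoids scanning everything, here is a deliberately tiny sketch. It assumes the centroids are already known (real systems typically learn them with k-means) and uses a hypothetical `TinyIVF` class; it is not the implementation of any particular vector database.

```python
import math

def l2(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

class TinyIVF:
    """Toy inverted-file index: vectors are bucketed by nearest centroid."""

    def __init__(self, centroids):
        self.centroids = centroids
        self.lists = {i: [] for i in range(len(centroids))}

    def _nearest_centroids(self, vec, n):
        order = sorted(range(len(self.centroids)),
                       key=lambda i: l2(vec, self.centroids[i]))
        return order[:n]

    def add(self, item_id, vec):
        cell = self._nearest_centroids(vec, 1)[0]
        self.lists[cell].append((item_id, vec))

    def search(self, query, k=1, nprobe=1):
        """Scan only the nprobe closest cells; return (ids, vectors scanned)."""
        candidates = []
        for cell in self._nearest_centroids(query, nprobe):
            candidates.extend(self.lists[cell])
        candidates.sort(key=lambda item: l2(query, item[1]))
        return [item_id for item_id, _ in candidates[:k]], len(candidates)

# Assumption: two hand-picked centroids and four toy 2-D vectors.
index = TinyIVF(centroids=[[0.0, 0.0], [10.0, 10.0]])
index.add("a", [1.0, 0.0])
index.add("b", [0.0, 2.0])
index.add("c", [9.0, 10.0])
index.add("d", [11.0, 9.0])

ids, scanned = index.search([8.0, 9.0], k=1, nprobe=1)
print(ids, scanned)
```

Raising `nprobe` scans more cells, which improves recall at the cost of speed. That trade-off is the "approximate" part of ANN search.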

As AI systems process increasingly large amounts of data, storing and managing embeddings efficiently becomes critical. Vector databases are specialized databases designed specifically for storing and searching high-dimensional vectors. Popular vector databases include Pinecone, which offers cloud-native architecture with low-latency search; Weaviate, an open-source solution with GraphQL and RESTful APIs; and Milvus, a scalable open-source platform supporting various indexing algorithms.

These databases use optimized data structures and algorithms to enable rapid similarity searches across millions or billions of embeddings. Without them, every query would require a linear scan that checks each stored embedding, which becomes prohibitively slow at scale. Indexing brings search time down to sublinear, roughly logarithmic in the collection size for graph-based indexes such as HNSW. Quantization is another important technique: vectors are compressed to reduce storage requirements and speed up computation, at the cost of some accuracy.
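As an illustration of the storage and accuracy trade-off, the sketch below applies simple 8-bit scalar quantization to one vector. This is only one of several quantization schemes (product quantization is another common one), and the function names and sample values are assumptions for the example.

```python
def quantize(vec):
    """Map each float to one of 256 evenly spaced levels (one byte each)."""
    lo, hi = min(vec), max(vec)
    scale = (hi - lo) / 255 or 1.0  # fall back to 1.0 if all values are equal
    codes = bytes(round((x - lo) / scale) for x in vec)
    return codes, lo, scale

def dequantize(codes, lo, scale):
    """Recover approximate floats from the byte codes."""
    return [lo + c * scale for c in codes]

# Assumption: a short toy vector; real embeddings have far more dimensions.
vec = [0.12, -0.53, 0.98, 0.0, -1.0, 0.45]
codes, lo, scale = quantize(vec)
restored = dequantize(codes, lo, scale)

# Each dimension now takes 1 byte instead of 4 (float32), plus lo and scale.
max_error = max(abs(a - b) for a, b in zip(vec, restored))
print(len(codes), max_error)
```

The reconstruction error per dimension is bounded by half a quantization step, which is why similarity rankings usually change only slightly.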

The scalability of vector databases is essential for modern AI search engines. They support horizontal scaling through sharding and replication, allowing systems to handle massive datasets distributed across multiple servers. Some vector databases support incremental updates, enabling new documents to be added to the knowledge base without requiring a complete reindexing of all existing data. This capability is crucial for AI search engines that need to stay current with new information.
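Sharding and incremental updates can be sketched as below. Everything here is a simplified assumption: shards are in-process dictionaries rather than separate servers, documents are routed by a CRC32 hash of their ID, and scoring uses a plain dot product with a full scan inside each shard.

```python
import heapq
import zlib

class ShardedIndex:
    """Toy sharded store: each shard holds a slice of the vectors."""

    def __init__(self, num_shards):
        self.shards = [{} for _ in range(num_shards)]

    def add(self, doc_id, vec):
        # Deterministic routing, so a new document touches only one shard.
        shard_no = zlib.crc32(doc_id.encode("utf-8")) % len(self.shards)
        self.shards[shard_no][doc_id] = vec

    def search(self, query, k):
        partial = []
        for shard in self.shards:
            scored = ((sum(q * x for q, x in zip(query, vec)), doc_id)
                      for doc_id, vec in shard.items())
            partial.extend(heapq.nlargest(k, scored))  # local top-k per shard
        # Merge the per-shard winners into a global top-k.
        return [doc_id for _, doc_id in heapq.nlargest(k, partial)]

index = ShardedIndex(num_shards=2)
index.add("a", [1.0, 0.0])
index.add("b", [0.5, 0.5])
index.add("c", [0.9, 0.1])
index.add("d", [0.0, 1.0])
before = index.search([1.0, 0.0], k=2)

index.add("e", [2.0, 0.0])  # incremental update: no rebuild of other shards
after = index.search([1.0, 0.0], k=1)
print(before, after)
```

Because every shard returns its own top `k`, merging those partial results gives the same answer as searching one large index with the same scoring.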

Before data can be embedded and used in AI search systems, it must be properly prepared. This process involves extraction, curation, and chunking. Unstructured data like PDFs, Word documents, emails, and web pages must first be parsed to extract text and metadata. Data curation ensures that extracted text accurately reflects the original content and is suitable for embedding generation. Chunking divides long documents into smaller, contextually meaningful sections—a critical step because embedding models have input length limits and because smaller chunks often retrieve more precisely than entire documents.

The quality of data preparation directly impacts the quality of embeddings and the accuracy of AI search results. If documents are chunked too small, important context is lost. If chunks are too large, they may contain irrelevant information that dilutes the semantic signal. Effective chunking strategies preserve information flow while ensuring each chunk is focused enough to be retrieved accurately. Modern platforms automate much of this preprocessing, extracting information from various file formats, cleaning data, and formatting it for embedding generation.
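One common way to balance these two failure modes is fixed-size chunking with overlap, so that text near a boundary appears in both neighboring chunks. The sketch below splits on whitespace and counts words; the function name and default sizes are assumptions, and production systems often count model tokens or split on sentence and section boundaries instead.

```python
def chunk_words(text, chunk_size=50, overlap=10):
    """Split text into chunks of up to chunk_size words, sharing overlap words."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks

# Tiny example with ten placeholder words, 4 words per chunk, 1 word shared.
sample = " ".join(f"w{i}" for i in range(10))
chunks = chunk_words(sample, chunk_size=4, overlap=1)
for chunk in chunks:
    print(chunk)
```

Larger overlaps preserve more context across boundaries but store and embed more duplicated text.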

Metadata enrichment is another important aspect of data preparation. Extracting and preserving metadata like document titles, authors, dates, and source information helps improve retrieval accuracy and allows AI systems to provide better citations and context. When an AI search engine retrieves information to answer your question, having rich metadata enables it to tell you exactly where that information came from, improving transparency and trustworthiness of AI-generated answers.
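A simple way to picture this is a record that keeps each chunk's text together with its metadata, so a retrieved chunk can always be traced back to its source. The field names and the sample values below are placeholders chosen for illustration, not a standard schema.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ChunkRecord:
    """A chunk of text stored alongside the metadata needed to cite it."""
    text: str
    title: str
    author: str
    published: str
    source_url: str

def format_citation(record):
    """Build a human-readable citation from a chunk's metadata."""
    return (f'{record.author}, "{record.title}" '
            f"({record.published}), {record.source_url}")

# Placeholder values for illustration only.
record = ChunkRecord(
    text="Embeddings map text to vectors.",
    title="Intro to Embeddings",
    author="A. Writer",
    published="2024-01-15",
    source_url="https://example.com/embeddings",
)
citation = format_citation(record)
print(citation)
```

In a retrieval pipeline, records like this would be stored next to each embedding so that the answer can cite the title, author, and URL of whatever was retrieved.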
