BERT Update

Learn about Google's BERT Update, a major 2019 algorithm change using bidirectional transformers to improve natural language understanding in search queries and...

Learn about BERT, its architecture, applications, and current relevance. Understand how BERT compares to modern alternatives and why it remains essential for NLP tasks.

BERT (Bidirectional Encoder Representations from Transformers) is a machine learning model for natural language processing released by Google in 2018. While newer models like ModernBERT have emerged, BERT remains highly relevant, with more than 68 million monthly downloads on the Hugging Face Hub, and it serves as the foundation for many NLP applications in production systems worldwide.

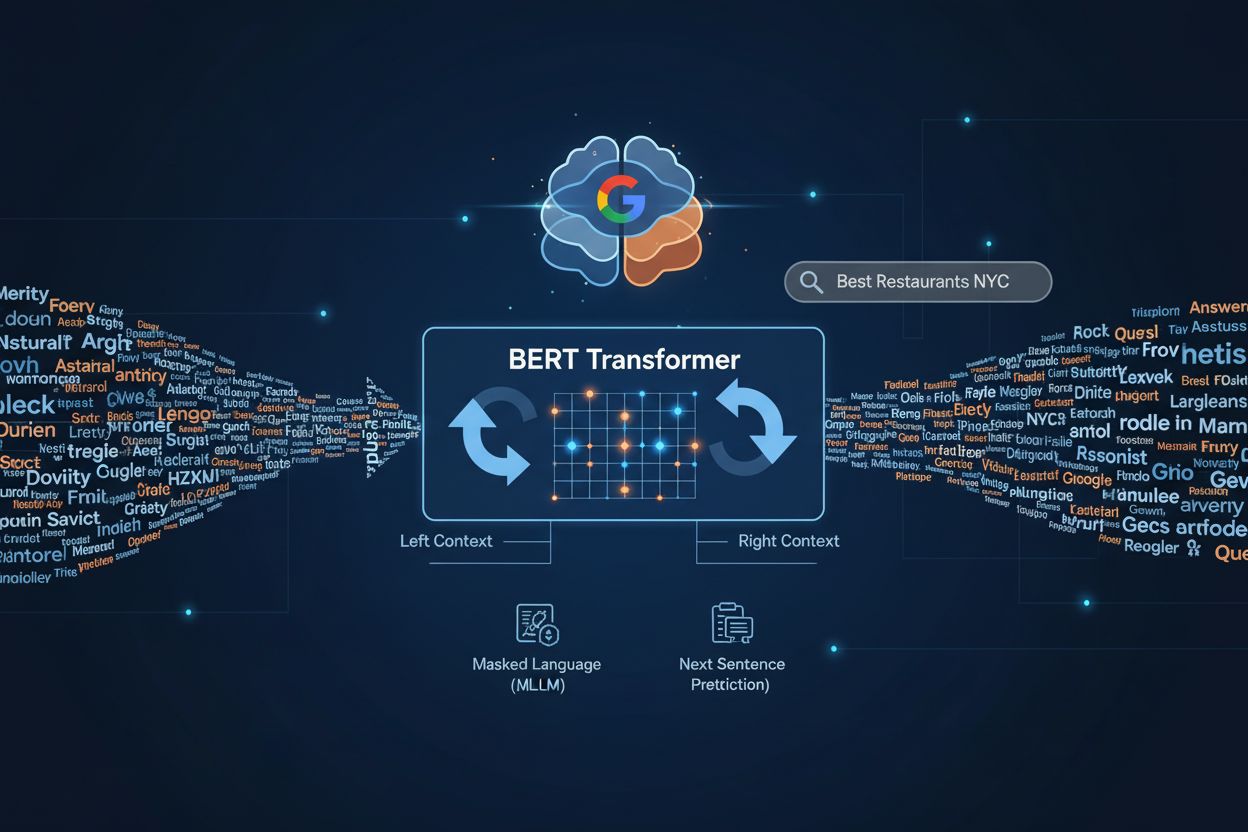

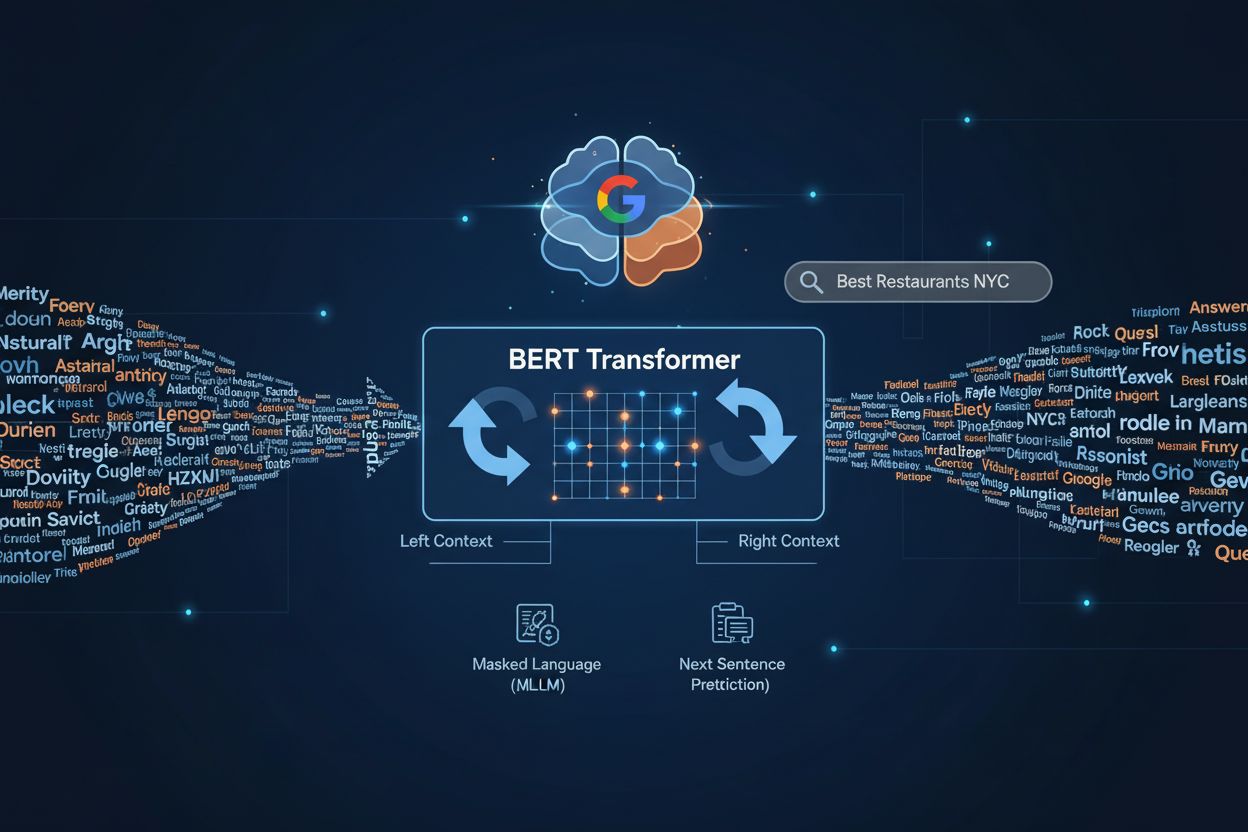

BERT, which stands for Bidirectional Encoder Representations from Transformers, is an open-source language model developed by Google AI Language in 2018. It lets computers process human language with contextual awareness. Unlike earlier language models that read text in a single direction, left to right or right to left, BERT is bidirectional: it looks at all the words in a sentence at once to work out their relationships and meanings. This shift made BERT a landmark in the NLP field. On release it set new state-of-the-art results on 11 common language tasks, and on some benchmarks its scores exceeded the reported human baseline.

The core innovation of BERT lies in its ability to understand context from both directions. When you read a sentence, your brain naturally considers words before and after a target word to understand its meaning. BERT mimics this human cognitive process through its Transformer architecture, which uses an attention mechanism to observe relationships between words. This bidirectional understanding is particularly powerful for tasks where context is crucial, such as determining the meaning of ambiguous words like “bank” (financial institution vs. riverbank) based on surrounding text.
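The difference between reading in one direction and reading in both can be sketched with a toy attention calculation. This is a minimal illustration, not BERT's actual implementation: the two-dimensional token vectors are hypothetical, and the same vector is used as both query and key, whereas a real Transformer applies learned projections and several attention heads.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(vectors, causal=False):
    """Return one row of attention weights per token.

    vectors: list of equal-length float lists (toy token vectors).
    causal=True hides tokens to the right, as a left-to-right model would.
    """
    dim = len(vectors[0])
    rows = []
    for i, query in enumerate(vectors):
        scores = []
        for j, key in enumerate(vectors):
            if causal and j > i:
                scores.append(float("-inf"))  # token to the right is masked out
            else:
                dot = sum(q * k for q, k in zip(query, key))
                scores.append(dot / math.sqrt(dim))  # scaled dot product
        rows.append(softmax(scores))
    return rows

# Hypothetical vectors for the phrase "bank of river".
tokens = ["bank", "of", "river"]
vectors = [[1.0, 0.5], [0.1, 0.1], [0.9, 0.8]]

bidirectional = attention_weights(vectors)
left_to_right = attention_weights(vectors, causal=True)

for name, rows in (("bidirectional", bidirectional), ("left-to-right", left_to_right)):
    weights = ", ".join(f"{t}={w:.2f}" for t, w in zip(tokens, rows[0]))
    print(f"{name:>13}: 'bank' attends to {weights}")
```

In the left-to-right case the row for "bank" puts all of its weight on "bank" itself, because "river" comes later and is hidden. In the bidirectional case "river" receives a share of the weight, which is what allows the surrounding context to disambiguate the word.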

BERT operates through a two-step process: pre-training on massive unlabeled data, followed by fine-tuning on task-specific labeled data. During pre-training, BERT learns general language patterns from two corpora: English Wikipedia (~2.5 billion words) and BooksCorpus (~800 million words). This combined dataset of about 3.3 billion words gave BERT broad knowledge not only of the English language but also of world facts and contextual relationships.

The pre-training process employs two innovative training strategies that make BERT unique:

| Training Strategy | Description | Purpose |
|---|---|---|
| Masked Language Model (MLM) | 15% of words are randomly masked, and BERT predicts them using surrounding context | Teaches bidirectional understanding by forcing the model to use context from both directions |
| Next Sentence Prediction (NSP) | BERT predicts whether a second sentence follows the first in the original document | Helps the model understand relationships and coherence between sentences |

The Masked Language Model works by hiding random words in sentences and forcing BERT to predict them based on context clues from surrounding words. For example, if the sentence reads “The capital of France is [MASK],” BERT learns to predict “Paris” by understanding the contextual relationship between “capital,” “France,” and the missing word. This training method is inspired by the cloze procedure, a linguistic technique dating back to 1953, but BERT applies it at scale with modern deep learning.
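The masking step can be sketched in a few lines. This is a simplified illustration under stated assumptions: it splits on whitespace, whereas BERT works on WordPiece tokens and never selects special tokens such as `[CLS]` and `[SEP]`. The 80/10/10 split among selected positions follows the original BERT paper and is not described in the text above. The function name `mask_tokens` and its parameters are hypothetical.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, vocab, mask_prob=0.15, seed=None):
    """Return (corrupted_tokens, labels) for masked language modelling.

    labels[i] holds the original token at selected positions and None
    elsewhere, so a loss would only be computed where a prediction is needed.
    """
    rng = random.Random(seed)
    corrupted = list(tokens)
    labels = [None] * len(tokens)
    for i, token in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue  # position not selected; leave it untouched
        labels[i] = token
        roll = rng.random()
        if roll < 0.8:
            corrupted[i] = MASK               # 80%: replace with [MASK]
        elif roll < 0.9:
            corrupted[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token in place
    return corrupted, labels

sentence = "the capital of france is paris".split()
corrupted, labels = mask_tokens(sentence, vocab=sentence, mask_prob=0.15, seed=0)
print(corrupted)
print(labels)
```

Keeping some selected tokens unchanged or swapping them for random ones means the model cannot rely on `[MASK]` being present, which matters because that symbol never appears in the text it sees during fine-tuning.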

BERT’s architecture comes in two main configurations: BERT-base with 12 transformer layers, 768 hidden units, and 110 million parameters, and BERT-large with 24 transformer layers, 1024 hidden units, and 340 million parameters. The Transformer architecture is the backbone that makes this scale practical, because its attention mechanism allows training to be parallelized very efficiently. That parallelization made it feasible to train BERT on massive amounts of data in a relatively short period. Each of the original models was pre-trained in about 4 days, BERT-base on 4 Cloud TPUs (16 TPU chips) and BERT-large on 16 Cloud TPUs (64 TPU chips).
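The parameter counts follow from the layer and hidden-size figures. The sketch below tallies them under a few assumptions that are not stated above: a vocabulary of 30,522 WordPiece tokens (the size used by the uncased English checkpoints), 512 position embeddings, 2 segment types, a feed-forward width of four times the hidden size, and a pooler layer on top. The function name is hypothetical.

```python
def bert_parameter_count(layers, hidden, vocab_size=30522,
                         max_positions=512, segment_types=2):
    """Count trainable parameters of a BERT encoder plus pooler.

    Assumes the feed-forward width is 4 * hidden and that every linear
    layer carries a bias vector.
    """
    layer_norm = 2 * hidden  # scale and shift vectors

    # Token, position and segment embedding tables, then one LayerNorm.
    embeddings = (vocab_size + max_positions + segment_types) * hidden + layer_norm

    # Query, key, value and output projections, each with a bias.
    attention = 4 * (hidden * hidden + hidden)
    # Two linear layers: hidden -> 4*hidden and 4*hidden -> hidden.
    feed_forward = (hidden * 4 * hidden + 4 * hidden) + (4 * hidden * hidden + hidden)
    per_layer = attention + layer_norm + feed_forward + layer_norm

    pooler = hidden * hidden + hidden
    return embeddings + layers * per_layer + pooler

base = bert_parameter_count(layers=12, hidden=768)
large = bert_parameter_count(layers=24, hidden=1024)
print(f"BERT-base:  {base:,}")   # 109,482,240
print(f"BERT-large: {large:,}")  # 335,141,888
```

Under these assumptions the totals come to roughly 109 million and 335 million, in line with the rounded 110 million and 340 million figures usually quoted. Most of the weight sits in the stacked encoder layers rather than in the embedding tables.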

BERT’s versatility makes it applicable to numerous real-world NLP tasks that organizations encounter daily. The model excels at sentiment analysis, where it determines whether text expresses positive, negative, or neutral sentiment—crucial for analyzing customer reviews and social media monitoring. In question-answering systems, BERT helps chatbots and virtual assistants understand user queries and retrieve relevant information from knowledge bases. Named Entity Recognition (NER) is another critical application where BERT identifies and classifies entities like person names, organizations, locations, and dates within text, essential for information extraction and compliance tasks.

Text classification remains one of BERT’s most deployed applications, handling tasks like spam detection, content moderation, and topic categorization. Google itself has used BERT in Search since October 2019, helping the search engine better understand user intent and surface more relevant results. For instance, BERT understands that “prescription for someone” in a search query refers to picking up medication for another person, not just general prescription information. Semantic similarity is another strong application: BERT embeddings support duplicate-content detection, paraphrase detection, and information retrieval.

Beyond classification, BERT has been adapted for machine translation, text summarization, and conversational AI applications. The model’s ability to generate contextual embeddings (numerical representations that capture semantic meaning) makes it valuable for retrieval systems and recommendation engines. Organizations use BERT-based models for content moderation, privacy compliance (identifying sensitive information), and entity extraction for regulatory requirements.
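How embeddings feed a retrieval system can be sketched without loading a model. In the example below, the three-dimensional token vectors are hypothetical stand-ins for encoder output (BERT-base produces 768-dimensional vectors). Mean pooling is one common way to turn token vectors into a sentence vector, not the only one. The document IDs and function names are made up for illustration.

```python
import math

def mean_pool(token_vectors):
    """Average token vectors into a single fixed-size sentence vector."""
    dim = len(token_vectors[0])
    count = len(token_vectors)
    return [sum(vec[d] for vec in token_vectors) / count for d in range(dim)]

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def rank_documents(query_vec, doc_vecs):
    """Return (doc_id, score) pairs sorted from most to least similar."""
    scored = [(doc_id, cosine_similarity(query_vec, vec))
              for doc_id, vec in doc_vecs.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)

# Hypothetical token vectors standing in for encoder output.
query = mean_pool([[0.9, 0.1, 0.0], [0.7, 0.3, 0.0]])
docs = {
    "refund-policy": mean_pool([[0.8, 0.2, 0.1], [0.9, 0.0, 0.1]]),
    "shipping-times": mean_pool([[0.1, 0.9, 0.2], [0.0, 0.8, 0.3]]),
}

ranking = rank_documents(query, docs)
for doc_id, score in ranking:
    print(f"{doc_id}: {score:.3f}")
```

In a real pipeline the document vectors would be computed once and stored, so answering a query costs a single encoder pass plus a similarity search.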

Despite being released in 2018, BERT remains widely deployed. As of late 2024, it was the second most downloaded model on the Hugging Face Hub, with over 68 million monthly downloads, surpassed only by another encoder model fine-tuned for retrieval. More broadly, encoder-only models like BERT accumulate over 1 billion downloads per month, nearly three times the 397 million monthly downloads of decoder-only models (generative models like GPT). This adoption reflects BERT’s continued importance in production systems worldwide.

The practical reasons for BERT’s enduring relevance are substantial. Encoder-only models are lean, fast, and cost-effective compared to large language models, which suits real-world applications where latency and compute matter. While generative models like GPT-3 or Llama require significant computational resources or API costs, BERT can run efficiently on consumer-grade hardware and even on CPUs. The difference shows at scale: the FineWeb-Edu project filtered 15 trillion tokens with a BERT-based model for about $60,000 in compute, whereas the same job with decoder-only models would have cost over one million dollars.
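The FineWeb-Edu figures are easier to compare when normalized per billion tokens. The short calculation below uses only the numbers quoted above and treats the decoder-only cost as a lower bound, since the text says "over one million dollars".

```python
TOKENS_FILTERED = 15e12        # 15 trillion tokens, from the text
ENCODER_COST_USD = 60_000      # BERT-based filtering cost, from the text
DECODER_COST_USD = 1_000_000   # lower bound: the text says "over one million"

def cost_per_billion_tokens(total_cost_usd, tokens):
    """Normalize a total compute bill to dollars per billion tokens."""
    return total_cost_usd / (tokens / 1e9)

encoder_rate = cost_per_billion_tokens(ENCODER_COST_USD, TOKENS_FILTERED)
decoder_rate = cost_per_billion_tokens(DECODER_COST_USD, TOKENS_FILTERED)
ratio = DECODER_COST_USD / ENCODER_COST_USD

print(f"Encoder: ${encoder_rate:.2f} per billion tokens")
print(f"Decoder: at least ${decoder_rate:.2f} per billion tokens")
print(f"Decoder is at least {ratio:.1f}x more expensive")
```

On these numbers the encoder route works out to about $4 per billion tokens, against at least $66 for a decoder-only model, a gap of more than 16 times.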

However, BERT’s landscape has evolved. ModernBERT, released in December 2024, represents the first significant replacement for BERT in six years. ModernBERT is a Pareto improvement over BERT, meaning it is better on both speed and accuracy without tradeoffs. It features an 8,192 token context length (compared to BERT’s 512), is 2-4x faster than BERT, and achieves superior performance on downstream tasks. ModernBERT incorporates modern architectural improvements like rotary positional embeddings (RoPE) and alternating attention patterns, and it was trained on 2 trillion tokens, including code data. Despite these advances, BERT remains relevant for the adoption and efficiency reasons described above.

The emergence of newer models has created an important distinction in the NLP landscape. Decoder-only models (GPT, Llama, Claude) excel at text generation and few-shot learning but are computationally expensive and slower for discriminative tasks. Encoder-only models like BERT are optimized for understanding and classification tasks, offering superior efficiency for non-generative applications.

| Aspect | BERT | GPT (Decoder-only) | ModernBERT |
|---|---|---|---|
| Architecture | Bidirectional encoder | Unidirectional decoder | Bidirectional encoder (modernized) |
| Primary Strength | Text understanding, classification | Text generation, few-shot learning | Understanding + efficiency + long context |
| Context Length | 512 tokens | 2,048-4,096+ tokens | 8,192 tokens |
| Inference Speed | Fast | Slow | 2-4x faster than BERT |
| Computational Cost | Low | High | Very low |
| Fine-tuning Requirement | Required for most tasks | Optional (zero-shot capable) | Required for most tasks |
| Code Understanding | Limited | Good | Excellent (trained on code) |

RoBERTa, released after BERT, improved upon the original by training longer on more data and removing the Next Sentence Prediction objective. DeBERTaV3 achieved superior performance on GLUE benchmarks but sacrificed efficiency and retrieval capabilities. DistilBERT offers a lighter alternative, running 60% faster while retaining about 97% of BERT’s language-understanding performance, making it well suited to resource-constrained environments. Specialized BERT variants have been trained for specific domains: BioClinicalBERT for clinical text, BERTweet for English tweets, and various models for code understanding.

Organizations deciding whether to use BERT in 2024-2025 should consider their specific use case. BERT remains the optimal choice for applications requiring fast inference, low computational overhead, and proven reliability on classification and understanding tasks. If you’re building a retrieval system, content moderation tool, or classification pipeline, BERT or its modern variants provide excellent performance-to-cost ratios. For long-document processing (beyond 512 tokens), ModernBERT is now the superior choice with its 8,192 token context length.

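The decision between BERT and its alternatives depends on the factors covered above: input length, latency and compute budget, and whether the task is generative or discriminative.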

While BERT itself may not receive major updates, the encoder-only model category continues to evolve. ModernBERT’s success demonstrates that encoder models can benefit from modern architectural improvements and training techniques. The future likely involves specialized encoder models for specific domains (code, medical text, multilingual content) and hybrid systems where encoder models work alongside generative models in RAG (Retrieval Augmented Generation) pipelines.

The practical reality is that encoder-only models will remain essential infrastructure for AI systems. Every RAG pipeline needs an efficient retriever, every content moderation system needs a fast classifier, and every recommendation engine needs embeddings. As long as these needs exist—which they will—BERT and its successors will remain relevant. The question isn’t whether BERT is still relevant, but rather which modern variant (BERT, ModernBERT, RoBERTa, or domain-specific alternatives) best fits your specific requirements.

