Conversational Context Window

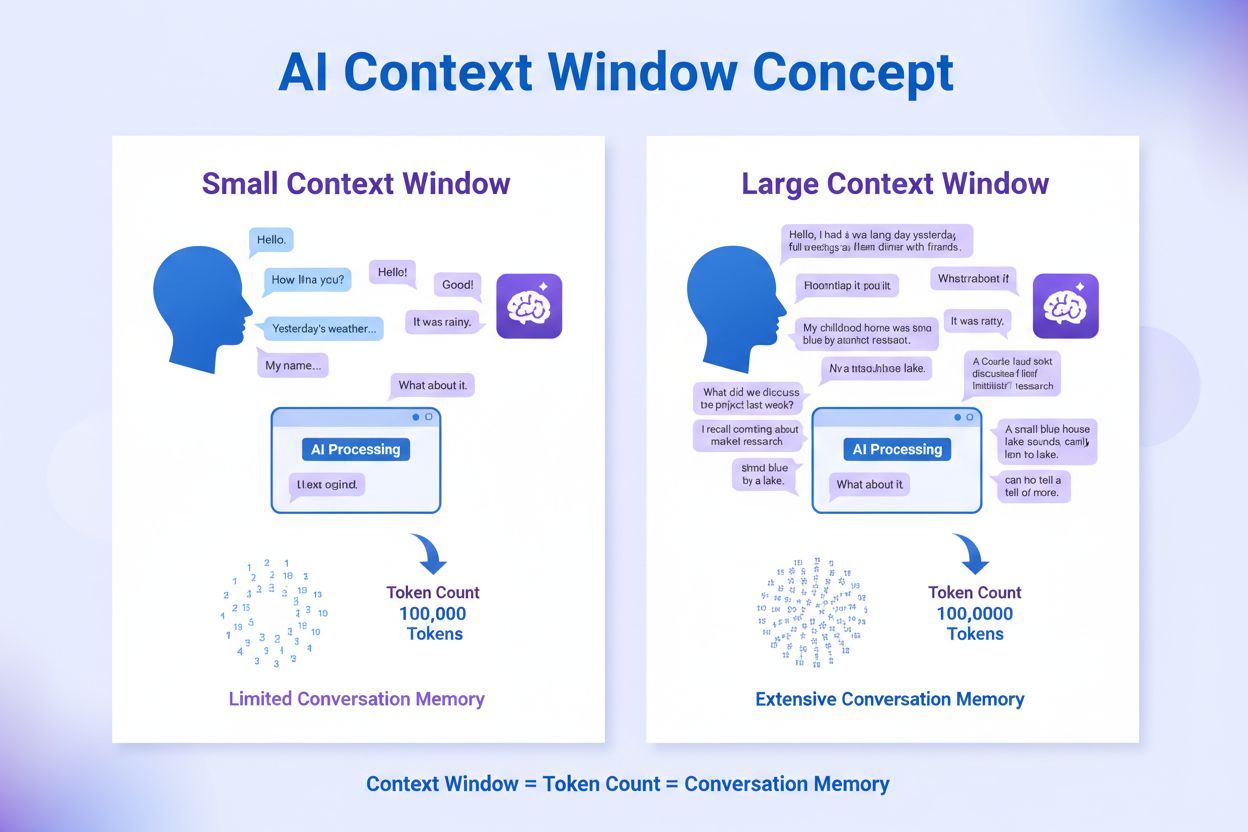

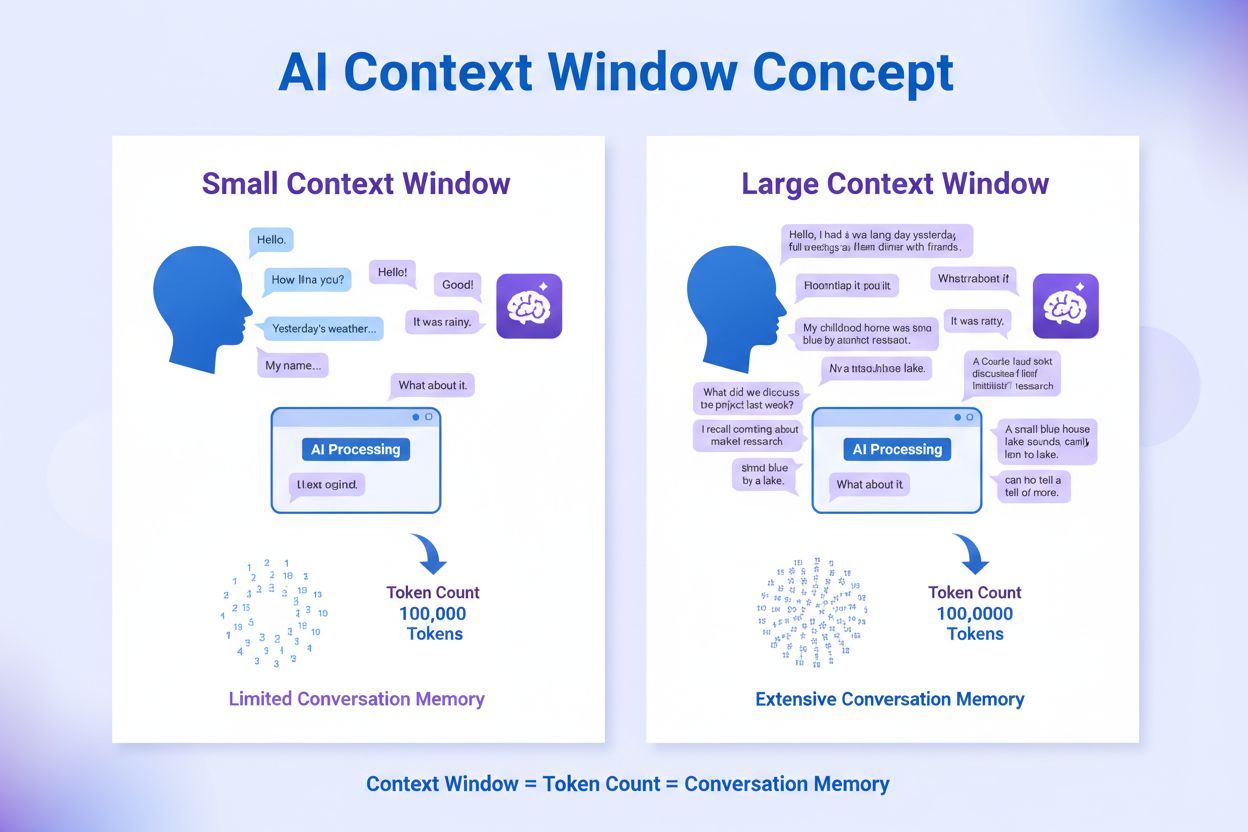

A context window is the working memory of an AI model that determines how much information it can process and remember while generating responses. It's measured in tokens and directly affects the model's ability to understand complex tasks, maintain conversation coherence, and provide accurate answers.

A context window is the working memory of an artificial intelligence model, representing the maximum amount of information it can process and retain simultaneously. Think of it as the AI’s short-term memory—just as humans can only hold a limited amount of information in their minds at once, AI models can only “see” and work with a specific number of tokens within their context window. This fundamental limitation shapes how AI models understand prompts, maintain conversation coherence, and generate accurate responses across various applications.

The context window functions as the space where a language model processes text, measured in tokens rather than words. A token is the smallest unit of language that an AI model processes. It can represent a single character, part of a word, or a whole word. When you interact with an AI model, it processes your current query together with as much of the previous conversation history as fits within the context window, and uses both to generate contextually aware answers. The model’s self-attention mechanism, a core component of transformer-based architectures, computes relationships between all tokens within this window. This lets the model understand dependencies and connections across the sequence.
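The following minimal pure-Python sketch makes "relationships between all tokens" concrete by computing scaled dot-product attention weights. It assumes the query and key vectors are already given, although real models derive them with learned projections. It also omits value vectors, causal masking, and multiple heads. `self_attention_weights` is a hypothetical helper name.

```python
import math


def self_attention_weights(queries, keys):
    """Return the n x n matrix of attention weights for n tokens.

    queries, keys: lists of n vectors (lists of floats) of the same dimension d.
    Row i holds how strongly token i attends to every token j in the window.
    """
    d = len(keys[0])
    weights = []
    for q in queries:
        # One score per token in the window: scaled dot product q . k / sqrt(d)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        # Softmax over the row (subtracting the max keeps exp() numerically stable)
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights


# Toy example: 4 tokens with arbitrary 2-dimensional vectors.
vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]
w = self_attention_weights(vectors, vectors)
print(len(w), len(w[0]))  # 4 4: one weight for every pair of tokens
```

Because every token gets a weight for every other token, the matrix has n × n entries. This is why the window size drives attention cost.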

The relationship between tokens and context windows is crucial to understanding AI performance. For example, a model with a 3,000-token context window can attend to at most 3,000 tokens at once, and the input and the response the model generates share that budget. Depending on the application, text beyond the limit is either rejected or truncated, so it is effectively forgotten. A larger window allows the AI to process more tokens, improving its understanding and response generation for lengthy inputs. Conversely, a smaller window limits the AI’s ability to retain context, directly affecting output quality and coherence. The conversion from words to tokens is not one-to-one. English text typically contains roughly 30 percent more tokens than words (about 1.3 tokens per word), though this varies with the document type and the tokenizer used.
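Truncation of a conversation can be sketched as follows. The hypothetical helper `fit_history` keeps the newest turns that fit after reserving room for the reply. The hypothetical `estimate_tokens` applies the rough 1.3-tokens-per-word rule of thumb in place of a real tokenizer. Dropping the oldest turns first is only one possible policy. Applications may instead summarize or reject over-long input.

```python
def estimate_tokens(text):
    """Rough token count: about 1.3 tokens per whitespace-separated word (heuristic)."""
    words = len(text.split())
    return (words * 13 + 9) // 10  # ceil(words * 1.3) using integer arithmetic


def fit_history(turns, window, reserve_for_output):
    """Keep the most recent turns whose estimated size fits in the window.

    turns: conversation turns as strings, oldest first.
    window: the model's context window size in tokens.
    reserve_for_output: tokens kept free for the model's reply.
    Returns (kept_turns, tokens_used).
    """
    budget = window - reserve_for_output
    kept, used = [], 0
    for turn in reversed(turns):  # walk from newest to oldest
        cost = estimate_tokens(turn)
        if used + cost > budget:
            break  # this turn and everything older falls outside the window
        kept.append(turn)
        used += cost
    kept.reverse()  # restore chronological order
    return kept, used


turns = ["word " * 1000, "word " * 1500, "word " * 500]
kept, used = fit_history(turns, window=3000, reserve_for_output=500)
print(len(kept), used)  # 1 650: only the newest turn fits in the 2,500-token budget
```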

The size of a context window plays a critical role in how well large language models perform, with both significant advantages and important trade-offs depending on the size chosen. Larger context windows enable AI models to handle longer texts by remembering earlier parts of conversations or documents, which proves especially useful for complex tasks like legal document reviews, extended dialogues, and comprehensive code analysis. Access to broader context improves the AI’s comprehension of intricate tasks and allows it to maintain semantic coherence across multiple sections of lengthy documents. This capability is particularly valuable when working with research papers, technical specifications, or multi-file codebases where maintaining long-range dependencies is essential for accuracy.

However, larger context windows require significantly more computational resources, which can slow performance and increase infrastructure costs. The self-attention computation in transformer models scales quadratically with the number of tokens, meaning that doubling the token count requires roughly four times the computational effort. This quadratic scaling impacts inference latency, memory usage, and overall system costs, especially when serving enterprise-scale workflows with strict response time requirements. Smaller context windows, while faster and more efficient, are ideal for short tasks like answering simple questions but struggle to retain context in longer conversations or complex analytical tasks.
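The quadratic argument can be sketched as a back-of-the-envelope calculation. It assumes standard scaled dot-product attention over $n$ tokens, with query and key matrices $Q, K \in \mathbb{R}^{n \times d}$:

$$
S = \frac{QK^\top}{\sqrt{d}} \in \mathbb{R}^{n \times n}, \qquad
\text{cost}_{\text{attn}}(n) \propto n^2 d, \qquad
\frac{\text{cost}_{\text{attn}}(2n)}{\text{cost}_{\text{attn}}(n)} = \frac{(2n)^2 d}{n^2 d} = 4
$$

Only the attention-score computation grows this way. The per-token feed-forward layers grow linearly in $n$, so at moderate lengths the whole model's cost grows by somewhat less than 4×.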

| Model | Context Window Size (approx.) | Use Case Suitability |
|---|---|---|
| GPT-3 | 2,000 tokens | Simple Q&A, short tasks |
| GPT-3.5 Turbo | 4,000 tokens | Basic conversations, summaries |
| GPT-4 | 8,000 tokens | Complex reasoning, moderate documents |
| GPT-4 Turbo | 128,000 tokens | Full documents, code analysis, extended conversations |
| Claude 2 | 100,000 tokens | Long-form content, comprehensive analysis |
| Claude 3 Opus | 200,000 tokens | Enterprise documents, complex workflows |
| Gemini 1.5 Pro | 1,000,000 tokens | Entire codebases, multiple documents, advanced reasoning |

The practical implications of context window size become evident in real-world applications. Google researchers demonstrated the power of extended context windows by using their Gemini 1.5 Pro model to translate from English to Kalamang, a critically endangered language with fewer than 200 speakers. The model received only a single grammar manual as context—information it had never encountered during training—yet performed translation tasks at a skill level comparable to humans using the same resource. This example illustrates how larger context windows enable models to reason over entirely new information without prior training, opening possibilities for specialized and domain-specific applications.

In software development, context window size directly influences code analysis capabilities. AI-powered coding assistants with expanded context windows can handle entire project files rather than focusing on isolated functions or snippets. When working with large web applications, these assistants can analyze relationships between backend APIs and frontend components across multiple files, suggesting code that integrates seamlessly with existing modules. This holistic view of the codebase enables the AI to identify bugs by cross-referencing related files and recommend optimizations such as refactoring large-scale class structures. Without sufficient context, the same assistant would struggle to understand inter-file dependencies and might suggest incompatible changes.

Despite their advantages, large context windows introduce several significant challenges that organizations must address. The “lost in the middle” phenomenon is one of the most critical limitations. Empirical studies show that models attend more reliably to content at the beginning and end of long inputs, while content in the middle has a weaker, noisier effect on the output. This U-shaped performance curve means that crucial information buried in the middle of a lengthy document may be overlooked or misinterpreted, leading to incomplete or inaccurate responses. The effect is strongest when inputs fill up to about 50 percent of the model’s context window. Beyond that point, the bias shifts toward favoring the most recent content.
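One commonly used mitigation is to reorder retrieved passages. The most relevant passages go at the start and end of the prompt, where models attend more reliably, and the least relevant land in the middle. The source does not describe this technique, so the code below is only a sketch. `reorder_for_long_context` is a hypothetical helper. It does not remove the positional bias; it only works around it.

```python
def reorder_for_long_context(docs_by_relevance):
    """Put the most relevant items at the edges and the least relevant in the middle.

    docs_by_relevance: list sorted from most to least relevant.
    """
    front, back = [], []
    for i, doc in enumerate(docs_by_relevance):
        if i % 2 == 0:
            front.append(doc)  # ranks 1, 3, 5, ... fill in from the start
        else:
            back.append(doc)   # ranks 2, 4, 6, ... fill in from the end
    return front + back[::-1]


print(reorder_for_long_context(["d1", "d2", "d3", "d4", "d5"]))
# ['d1', 'd3', 'd5', 'd4', 'd2']: d1 first, d2 last, least relevant d5 in the middle
```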

Increased computational cost is another substantial downside of large context windows. Processing more data requires disproportionately more computing power. Attention cost grows quadratically with input length, so doubling the token count from 1,000 to 2,000 can quadruple the attention computation. This means slower response times and higher costs, which can quickly become a financial strain for businesses using cloud-based services billed per token. For example, GPT-4o launched at 5 USD per million input tokens and 15 USD per million output tokens, and with large context windows these costs accumulate rapidly. Larger context windows also leave more room for error. If a long document contains conflicting information, the model may generate inconsistent answers. Identifying and fixing these errors becomes challenging when the problem is hidden within vast amounts of data.
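The following arithmetic sketch shows how per-token pricing adds up. It uses the GPT-4o launch prices quoted above, which change over time, so check the provider's current price list. The sketch also assumes a stateless chat API in which every request resends the full history, and `tokens_per_turn` counts both the user message and the previous reply. Both helper names are hypothetical.

```python
# GPT-4o launch list prices in USD per million tokens (assumption: may be outdated).
INPUT_USD_PER_M = 5.0
OUTPUT_USD_PER_M = 15.0


def request_cost_usd(input_tokens, output_tokens):
    """Cost of one API call at per-token list prices."""
    return (input_tokens * INPUT_USD_PER_M + output_tokens * OUTPUT_USD_PER_M) / 1_000_000


def chat_cost_usd(turns, tokens_per_turn, reply_tokens):
    """Total cost of a conversation in which each request resends the whole history.

    Request k carries k * tokens_per_turn input tokens, so input grows every turn.
    """
    return sum(request_cost_usd(k * tokens_per_turn, reply_tokens) for k in range(1, turns + 1))


print(f"{request_cost_usd(100_000, 1_000):.3f}")  # 0.515 for one large-context call
print(f"{chat_cost_usd(20, 2_000, 500):.2f}")      # 2.25 for a 20-turn conversation
```

In the 20-turn example, the conversation bills 420,000 input tokens even though no single request exceeds 40,000 tokens.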

Distractibility from irrelevant context is another critical concern. A longer window does not guarantee better focus; including irrelevant or contradictory data can actually lead the model astray, exacerbating hallucination rather than preventing it. Key reasoning may be overshadowed by noisy context, reducing the quality of responses. Furthermore, broader context creates an expanded attack surface for security risks, as malicious instructions can be buried deeper in the input, complicating detection and mitigation. This “attack surface expansion” increases the risk of unintended behaviors or toxic outputs that could compromise system integrity.

Organizations have developed several sophisticated strategies to overcome the inherent limitations of fixed context windows. Retrieval-Augmented Generation (RAG) combines traditional language processing with dynamic information retrieval, allowing models to pull relevant information from external sources before generating responses. Instead of depending on the memory space of the context window to hold everything, RAG lets the model gather extra data as needed, making it much more flexible and capable of tackling complex tasks. This approach excels in situations where accuracy is critical, such as educational platforms, customer service, summarizing long legal or medical documents, and enhancing recommendation systems.
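The retrieve-then-generate flow can be sketched in a few lines. This toy version scores chunks by word overlap with the query, as a stand-in for the embedding similarity search that RAG systems typically use. It then places only the top-scoring chunks in the prompt. No model is called, and `retrieve` and `build_prompt` are hypothetical names.

```python
import re


def tokenize(text):
    """Lowercase word list; a crude stand-in for real text processing."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query, chunks, k=2):
    """Return the k chunks sharing the most distinct words with the query."""
    q = set(tokenize(query))
    ranked = sorted(chunks, key=lambda c: len(q & set(tokenize(c))), reverse=True)
    return ranked[:k]


def build_prompt(query, chunks, k=2):
    """Put only the retrieved chunks into the context window, not the whole corpus."""
    context = "\n".join(f"- {c}" for c in retrieve(query, chunks, k))
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"


chunks = [
    "Refunds are issued within 14 days of a return.",
    "Our office is closed on public holidays.",
    "A return must include the original receipt.",
]
query = "When are refunds issued after a return?"
print(build_prompt(query, chunks))
```

The unrelated holiday chunk never reaches the prompt. This keeps the context small and avoids the distraction problem described above.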

Memory-augmented models like MemGPT overcome context window limits by incorporating external memory systems that mimic how computers manage data between fast and slow memory. This virtual memory system allows the model to store information externally and retrieve it when needed, enabling analysis of lengthy texts and retention of context over multiple sessions. Parallel context windows (PCW) solve the challenge of long text sequences by breaking them into smaller chunks, with each chunk operating within its own context window while reusing positional embeddings. This method allows models to process extensive text without retraining, making it scalable for tasks like question answering and document analysis.
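The parallel-context-windows layout can be sketched as a simplified example. It rests on three assumptions about the PCW method. First, every chunk reuses the same position ids. Second, context tokens attend only within their own chunk. Third, the final task tokens are positioned after the longest chunk and can attend to all chunks. `pcw_layout` is a hypothetical helper, and the sketch does not show how its output is fed into an actual transformer as position ids and an attention mask.

```python
def pcw_layout(window_lengths, task_length):
    """Position ids and attention mask for parallel context windows (simplified).

    window_lengths: token count of each context chunk.
    task_length: token count of the final prompt/question segment.
    Returns (position_ids, mask), where mask[i][j] is True if token i may attend to token j.
    """
    max_len = max(window_lengths)
    position_ids, segment = [], []
    for w, length in enumerate(window_lengths):
        position_ids += list(range(length))  # every chunk reuses positions 0..length-1
        segment += [w] * length
    # Task tokens continue numbering after the longest chunk.
    position_ids += list(range(max_len, max_len + task_length))
    segment += [-1] * task_length  # -1 marks task tokens

    n = len(position_ids)
    mask = [[False] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):  # causal: a token sees itself and earlier tokens only
            if segment[i] == -1:
                mask[i][j] = True  # task tokens see every chunk and earlier task tokens
            else:
                mask[i][j] = segment[j] == segment[i]  # context tokens stay in their chunk
    return position_ids, mask


pos, mask = pcw_layout([3, 2], task_length=2)
print(pos)  # [0, 1, 2, 0, 1, 3, 4]
```

Because position ids never exceed the longest chunk plus the task length, the model only sees positions it was trained on, even though the total input is longer.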

Positional skip-wise training (PoSE) helps models manage long inputs by adjusting how they interpret positional data. Instead of fully retraining models on extended inputs, PoSE divides the text in a fixed-length training window into chunks. It then adds skipping bias terms to their position indices to simulate longer contexts. Each training example keeps its original length, so this technique extends the model’s ability to process lengthy inputs without increasing the cost of fine-tuning. For example, it allows models like LLaMA to handle up to 128k tokens while fine-tuning with only a 2k-token window. Dynamic in-context learning (DynaICL) enhances how LLMs use examples to learn from context. It adjusts the number of examples dynamically based on task complexity, reducing token usage by up to 46 percent while improving performance.
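The PoSE position trick can be sketched in simplified form. Only the position ids are generated: the example still holds `train_len` tokens, but non-decreasing skip biases spread its ids over `[0, target_len)`. Sampling chunk boundaries and biases uniformly is an assumption made for illustration. `pose_position_ids` is a hypothetical helper and not the authors' code.

```python
import random


def pose_position_ids(train_len, target_len, num_chunks=2, rng=None):
    """Sample PoSE-style position ids for one training example (simplified sketch).

    The example contains train_len tokens; adding a skipping bias to each chunk
    spreads its position ids over [0, target_len).
    """
    rng = rng or random.Random()
    # Random chunk boundaries: split train_len into num_chunks non-empty pieces.
    cuts = sorted(rng.sample(range(1, train_len), num_chunks - 1))
    lengths = [b - a for a, b in zip([0] + cuts, cuts + [train_len])]

    position_ids, start, bias = [], 0, 0
    for i, length in enumerate(lengths):
        if i > 0:
            # Non-decreasing skip bias, capped so the last id stays below target_len.
            bias = rng.randint(bias, target_len - train_len)
        position_ids += [start + bias + k for k in range(length)]
        start += length
    return position_ids


ids = pose_position_ids(8, 32, rng=random.Random(0))
print(ids)  # 8 increasing ids, with a jump ("skip") between the two chunks
```

Attention cost per example is still governed by the 8 actual tokens. This is why the fine-tuning cost stays flat while the model is exposed to position ids up to 31.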

Understanding context windows is particularly important for organizations monitoring their brand presence in AI-generated answers. AI systems such as ChatGPT, Perplexity, and other AI search engines generate responses using underlying models. The context windows of those models determine how much information they can consider when deciding whether to mention your domain, brand, or content. A model with a limited context window might miss relevant information about your brand if it’s buried in a larger document or conversation history. Conversely, models with larger context windows can consider more comprehensive information sources, potentially improving the accuracy and completeness of citations to your content.

The context window also affects how AI models handle follow-up questions and maintain conversation coherence when discussing your brand or domain. If a user asks multiple questions about your company or product, the model’s context window determines how much of the previous conversation it can remember, influencing whether it provides consistent and accurate information across the entire exchange. This makes context window size a critical factor in how your brand appears across different AI platforms and in different conversation contexts.

The context window remains one of the most fundamental concepts in understanding how modern AI models work and perform. As models continue to evolve with increasingly larger context windows—from GPT-4 Turbo’s 128,000 tokens to Gemini 1.5’s 1 million tokens—they unlock new possibilities for handling complex, multi-step tasks and processing vast amounts of information simultaneously. However, larger windows introduce new challenges including increased computational costs, the “lost in the middle” phenomenon, and expanded security risks. The most effective approach combines strategic use of extended context windows with sophisticated retrieval and orchestration techniques, ensuring that AI systems can reason accurately and efficiently across complex domains while maintaining cost-effectiveness and security.
