Crawl Budget Optimization for AI

Learn how to optimize crawl budget for AI bots like GPTBot and Perplexity. Discover strategies to manage server resources, improve AI visibility, and control ho...

Learn what crawl budget for AI means, how it differs from traditional search crawl budgets, and why it matters for your brand’s visibility in AI-generated answers and AI search engines.

Crawl budget for AI refers to the amount of resources and time that AI crawlers (like GPTBot, ClaudeBot, and Perplexity bots) allocate to crawl and index your website. It determines how many pages are discovered, how frequently they're visited, and ultimately whether your content appears in AI-generated answers.

Crawl budget for AI is fundamentally different from traditional Google crawl budget, yet equally critical for your online visibility. While Googlebot has spent decades refining its crawling behavior and respecting server capacity, AI crawlers like GPTBot, ClaudeBot, and Perplexity bots are newer, more aggressive, and often less refined in their approach. These AI bots are consuming an unprecedented amount of bandwidth and server resources, with some sites reporting that OpenAI’s crawlers hit their infrastructure 12 times more frequently than Google does. Understanding and managing this emerging crawl budget is essential for brands that want to appear in AI-generated answers and maintain control over how their content is used by artificial intelligence systems.

The concept of crawl budget for AI extends beyond simple page discovery. It encompasses the allocation of computational resources, bandwidth, and server capacity that AI training systems dedicate to crawling your website. Unlike traditional search engines that primarily aim to index and rank content, AI crawlers are gathering training data, extracting information for answer generation, and building knowledge models. This means your crawl budget for AI directly impacts whether your brand’s information reaches the AI systems that millions of users interact with daily, from ChatGPT to Google’s AI Overviews.

The distinction between AI crawl budget and traditional search crawl budget is crucial for modern SEO and content strategy. Traditional crawl budget, managed by Googlebot, operates within established protocols and respects server capacity limits through sophisticated algorithms developed over two decades. Googlebot slows down when it detects server strain, follows robots.txt directives reliably, and generally behaves as a “good citizen” on the internet. In contrast, AI crawlers are often less sophisticated in their resource management: they crawl aggressively, frequently do not render JavaScript-driven content, and do not always respect robots.txt rules as consistently as Google does.

| Aspect | Traditional Search Crawl Budget | AI Crawl Budget |
|---|---|---|
| Primary Purpose | Indexing for search rankings | Training data collection and answer generation |
| Crawler Sophistication | Highly refined, 20+ years of optimization | Newer, less refined, more aggressive |
| JavaScript Rendering | Executes JavaScript to understand content | Often skips JavaScript, grabs raw HTML only |
| robots.txt Compliance | Highly reliable adherence | Variable compliance across different AI providers |
| Server Load Consideration | Actively throttles to prevent overload | Less considerate of server capacity |
| Crawl Frequency | Adaptive based on content freshness | Often more frequent and resource-intensive |
| Impact on Visibility | Determines search rankings and indexation | Determines appearance in AI-generated answers |
| Bandwidth Consumption | Moderate and predictable | High and often unpredictable |

This table illustrates why managing AI crawl budget requires a different strategy than optimizing for traditional search. While you might block certain pages from Googlebot to preserve crawl budget, you may want to allow AI crawlers to access your most authoritative content to ensure it appears in AI answers. The stakes are different: traditional crawl budget affects search visibility, while AI crawl budget affects whether your brand gets cited as a source in AI-generated responses.

The emergence of AI crawl budget as a critical metric reflects a fundamental shift in how information is discovered and consumed online. AI crawler traffic surged by 96% between May 2024 and May 2025, and GPTBot’s share of total crawler traffic jumped from 5% to 30% over the same period. This growth means that AI systems are now competing with traditional search engines for your server resources and bandwidth. For many websites, AI crawlers now consume more bandwidth than Google, creating a new category of technical challenges that didn’t exist just two years ago.

The importance of managing AI crawl budget extends beyond server performance. When AI crawlers efficiently discover and understand your content, they’re more likely to cite your brand in AI-generated answers. This is particularly valuable for Answer Engine Optimization (AEO), where the goal shifts from ranking in search results to being selected as a source in AI responses. If your crawl budget for AI is wasted on low-value pages, outdated content, or pages that don’t render properly for AI systems, your most authoritative and valuable content may never reach the AI models that generate answers for millions of users daily.

Understanding the mechanics of AI crawl budget requires examining its two fundamental components: crawl capacity limit and crawl demand. These elements work together to determine how much of your website’s content gets discovered and processed by AI systems.

Crawl Capacity Limit represents the technical ceiling—the maximum number of simultaneous connections and requests that AI crawlers can make to your server without causing performance degradation. This limit is influenced by your server’s response time, available bandwidth, and ability to handle concurrent requests. Unlike Googlebot, which actively monitors server health and throttles itself when it detects strain, many AI crawlers are less considerate of server capacity, potentially causing unexpected spikes in resource consumption. If your server responds slowly or returns errors, the crawl capacity limit may be reduced, but this happens less predictably with AI bots than with Google.

Crawl Demand for AI systems is driven by different factors than traditional search. While Google’s crawl demand is influenced by content freshness, popularity, and perceived quality, AI crawl demand is driven by the perceived value of your content for training and answer generation. AI systems prioritize content that is factual, well-structured, authoritative, and relevant to common questions. If your site contains comprehensive, well-organized information on topics that AI systems need to answer user queries, your crawl demand will be higher. Conversely, if your content is thin, outdated, or poorly structured, AI crawlers may deprioritize your site.

The behavioral differences between AI crawlers and Googlebot have significant implications for how you should manage your crawl budget for AI. Googlebot has evolved to be highly respectful of server resources and follows established web standards meticulously. It respects robots.txt directives, understands canonical tags, and actively manages its crawl rate to avoid overwhelming servers. AI crawlers, by contrast, often operate with less sophistication and more aggression.

Many AI crawlers do not fully render JavaScript, meaning they only see the raw HTML that’s initially served. This distinction matters because if your key content is loaded via JavaScript, AI crawlers may not see it at all. They grab the initial HTML response and move on, missing important information that Googlebot would discover through its Web Rendering Service. Additionally, AI crawlers are less consistent in respecting robots.txt rules. While some AI providers like Anthropic have published guidelines for their crawlers, others are less transparent about their crawling behavior, making it difficult to control your AI crawl budget through traditional directives.

The crawl patterns of AI bots also differ significantly. Some AI crawlers, like ClaudeBot, have been observed crawling with an extremely unbalanced crawl-to-referral ratio: for every visitor that Claude refers back to a website, the bot crawls tens of thousands of pages. This means AI crawlers are consuming large amounts of your server resources while sending minimal traffic in return, creating a one-sided resource drain that traditional search engines don’t exhibit to the same degree.

Effective management of AI crawl budget requires a multi-layered approach that balances allowing AI systems to discover your best content while protecting server resources and preventing crawl waste. The first step is identifying which AI crawlers are accessing your site and understanding their behavior patterns. Tools like Cloudflare Firewall Analytics allow you to filter traffic by user-agent strings to see exactly which AI bots are visiting and how frequently. By examining your server logs, you can determine whether AI crawlers are spending their budget on high-value content or wasting resources on low-priority pages.
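As a rough illustration of the log examination described above, the following Python sketch counts requests per AI crawler in an access log. It assumes the server writes the common "combined" log format, and the user-agent tokens in `AI_BOT_TOKENS` are taken from the bot names mentioned in this article; the exact strings each provider sends should be checked against that provider's own documentation. The sample log lines are invented for the example.

```python
import re
from collections import Counter

# Assumed user-agent tokens, based on the bot names used in this article.
# Verify the exact strings against each provider's documentation.
AI_BOT_TOKENS = ("GPTBot", "ClaudeBot", "PerplexityBot", "OAI-SearchBot")

# Combined log format: ... "METHOD path PROTO" status bytes "referer" "user-agent"
LOG_LINE = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<ua>[^"]*)"'
)


def count_ai_bot_hits(lines):
    """Return a Counter mapping each matched bot token to its request count."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if not match:
            continue  # skip lines that are not in the expected format
        user_agent = match.group("ua").lower()
        for token in AI_BOT_TOKENS:
            if token.lower() in user_agent:
                hits[token] += 1
                break
    return hits


# Invented sample lines (documentation-reserved IP addresses).
SAMPLE_LOG = [
    '203.0.113.5 - - [10/May/2025:10:00:00 +0000] "GET /guides/a HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '203.0.113.5 - - [10/May/2025:10:00:01 +0000] "GET /search?q=x HTTP/1.1" 200 900 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '198.51.100.7 - - [10/May/2025:10:00:02 +0000] "GET /guides/b HTTP/1.1" 200 4096 "-" "Mozilla/5.0 (compatible; ClaudeBot/1.0)"',
    '192.0.2.9 - - [10/May/2025:10:00:03 +0000] "GET / HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (X11; Linux x86_64) Firefox/126.0"',
]

if __name__ == "__main__":
    for bot, count in count_ai_bot_hits(SAMPLE_LOG).most_common():
        print(bot, count)
```

In practice the input would be the lines of a real access log file rather than `SAMPLE_LOG`. User-agent strings can be spoofed, so counts like these are an estimate rather than proof of which provider made the request.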

Once you understand your AI crawl patterns, you can implement strategic controls to optimize your crawl budget. This might include using robots.txt to block AI crawlers from accessing low-value sections like internal search results, pagination beyond the first few pages, or outdated archive content. However, this strategy must be balanced carefully—blocking AI crawlers entirely from your site means your content won’t appear in AI-generated answers, which could represent a significant loss of visibility. Instead, selective blocking of specific URL patterns or directories allows you to preserve crawl budget for your most important content.
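A minimal sketch of selective blocking, checked with Python's standard `urllib.robotparser`. The robots.txt content, the `/search/` and `/archive/` paths, and the `example.com` URLs are hypothetical placeholders; the user-agent names follow the bots named in this article. The check only shows how a compliant parser would read the rules, not whether a given crawler actually honors them.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: keep two AI crawlers out of low-value sections
# while leaving everything else open to all crawlers.
ROBOTS_TXT = """\
User-agent: GPTBot
User-agent: ClaudeBot
Disallow: /search/
Disallow: /archive/

User-agent: *
Disallow:
"""


def is_allowed(robots_txt, user_agent, url):
    """Return True if the given rules permit user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    checks = [
        ("GPTBot", "https://example.com/search/widgets"),
        ("GPTBot", "https://example.com/guides/crawl-budget"),
        ("Googlebot", "https://example.com/search/widgets"),
    ]
    for agent, url in checks:
        print(agent, url, is_allowed(ROBOTS_TXT, agent, url))
```

Running a check like this before deploying a robots.txt change helps confirm that high-value URLs stay reachable for the crawlers you want to keep.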

Server-level controls provide another powerful mechanism for managing AI crawl budget. Using reverse proxy rules in Nginx or Apache, you can implement rate limiting specifically for AI crawlers, controlling how aggressively they can access your site. Cloudflare and similar services offer bot management features that allow you to set different rate limits for different crawlers, ensuring that AI bots don’t monopolize your server resources while still allowing them to discover your important content. These controls are more effective than robots.txt because they operate at the infrastructure level and don’t rely on crawler compliance.
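To make the idea of per-crawler rate limiting concrete, here is a small token-bucket sketch in Python. It is an illustration of the mechanism only, not a substitute for the rate-limiting features of a reverse proxy or CDN; the class names, the limits, and the choice of a token bucket are all assumptions made for this example.

```python
class TokenBucket:
    """Allow `rate` requests per second on average, with bursts up to `capacity`."""

    def __init__(self, rate, capacity, now=0.0):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.updated = now

    def allow(self, now):
        # Refill in proportion to elapsed time, capped at the bucket capacity.
        elapsed = now - self.updated
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


class BotRateLimiter:
    """Apply a separate token bucket to each listed crawler (hypothetical helper)."""

    def __init__(self, limits):
        # limits maps a user-agent token to (requests_per_second, burst_size).
        self.buckets = {
            token.lower(): TokenBucket(rate, capacity)
            for token, (rate, capacity) in limits.items()
        }

    def allow(self, user_agent, now):
        ua = user_agent.lower()
        for token, bucket in self.buckets.items():
            if token in ua:
                return bucket.allow(now)
        return True  # agents without a configured limit are not throttled here


if __name__ == "__main__":
    limiter = BotRateLimiter({"GPTBot": (1.0, 2)})
    agent = "Mozilla/5.0 (compatible; GPTBot/1.0)"
    # Three requests in the same instant, then one a second later.
    print([limiter.allow(agent, t) for t in (0.0, 0.0, 0.0, 1.0)])
```

A request that is refused would typically receive an HTTP 429 response. Passing the timestamp explicitly keeps the sketch deterministic; a real deployment would read the clock itself.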

The question of whether to block AI crawlers entirely is one of the most important strategic decisions facing modern website owners. The answer depends entirely on your business model and competitive positioning. For publishers and brands that depend heavily on organic visibility and want to appear in AI-generated answers, blocking AI crawlers is generally counterproductive. If you prevent AI systems from accessing your content, your competitors’ content will be used instead, potentially giving them an advantage in AI-driven search results.

However, there are legitimate scenarios where blocking certain AI crawlers makes sense. Legal and compliance-sensitive content may need to be protected from AI training. For example, a law firm with archived legislation from previous years might not want AI systems to cite outdated legal information that could mislead users. Similarly, proprietary or confidential information should be blocked from AI crawlers to prevent unauthorized use. Some businesses may also choose to block AI crawlers if they’re experiencing significant server strain and don’t see a clear business benefit from AI visibility.

The more nuanced approach is selective blocking: allowing AI crawlers to access your most authoritative, high-value content while blocking them from low-priority sections. This strategy maximizes the likelihood that your best content appears in AI answers while minimizing crawl waste on pages that don’t deserve AI attention. You can implement this through careful robots.txt configuration or through server-level controls that give different crawlers different levels of access. The proposed llms.txt file can complement these by pointing AI systems toward your preferred content, though it does not block anything by itself and adoption is still limited.

Beyond managing crawl budget allocation, you should optimize your content to be easily discoverable and understandable by AI crawlers. This involves several technical and content-level considerations. First, ensure critical content is in static HTML rather than JavaScript-rendered content. Since many AI crawlers don’t execute JavaScript, content that’s loaded dynamically after page render will be invisible to these bots. Server-side rendering (SSR) or static HTML generation ensures that AI crawlers see your full content in their initial request.
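One way to check this is to look at the HTML exactly as it is served, before any JavaScript runs, and confirm that your key phrases are present. The sketch below does that with Python's standard `html.parser`; the sample page and phrases are invented, and the approach assumes that a crawler which skips JavaScript reads only the text in the initial response.

```python
from html.parser import HTMLParser


class StaticTextExtractor(HTMLParser):
    """Collect text from served HTML, ignoring <script> and <style> contents."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def missing_from_static_html(html, phrases):
    """Return the phrases that do not appear in the page's static text."""
    parser = StaticTextExtractor()
    parser.feed(html)
    parser.close()
    text = " ".join(parser.chunks).lower()
    return [phrase for phrase in phrases if phrase.lower() not in text]


# Invented page: the heading is static, the plan details are injected by script.
SAMPLE_HTML = """
<html><body>
<h1>Pricing</h1>
<div id="app"></div>
<script>document.getElementById("app").textContent = "Enterprise plan details";</script>
</body></html>
"""

if __name__ == "__main__":
    print(missing_from_static_html(SAMPLE_HTML, ["Pricing", "Enterprise plan details"]))
```

Any phrase this reports as missing is a candidate for server-side rendering or static generation.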

Structured data markup is increasingly important for AI crawlers. Using Schema.org markup for FAQPage, HowTo, Article, and other relevant types helps AI systems quickly understand the purpose and content of your pages. This structured information makes it easier for AI crawlers to extract answers and cite your content appropriately. When you provide clear, machine-readable structure, you’re essentially making your content more valuable to AI systems, which increases the likelihood they’ll prioritize crawling and citing your pages.
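As an illustration, the following Python sketch builds Schema.org `FAQPage` markup as JSON-LD and wraps it in a script tag for the page's HTML. The helper names and the sample question are hypothetical; the property names (`mainEntity`, `acceptedAnswer`, `text`) follow the Schema.org vocabulary.

```python
import json


def faq_jsonld(qa_pairs):
    """Build a Schema.org FAQPage structure from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in qa_pairs
        ],
    }


def as_script_tag(data):
    """Serialize the structure as a JSON-LD script tag for static HTML."""
    # Escape "</" so answer text cannot close the script element early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


if __name__ == "__main__":
    pairs = [
        (
            "What is crawl budget for AI?",
            "The resources and time AI crawlers allocate to crawling a website.",
        )
    ]
    print(as_script_tag(faq_jsonld(pairs)))
```

Emitting the tag in the server-rendered HTML, rather than injecting it with JavaScript, keeps it visible to crawlers that read only the initial response.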

Content clarity and factual accuracy directly impact how AI systems treat your content. AI crawlers are looking for reliable, well-sourced information that can be used to generate accurate answers. If your content is thin, contradictory, or poorly organized, AI systems will deprioritize it. Conversely, comprehensive, well-researched content with clear formatting, bullet points, and logical structure is more likely to be crawled frequently and cited in AI answers. This means that optimizing for AI crawl budget is inseparable from optimizing content quality.

Effective management of AI crawl budget requires ongoing monitoring and measurement. Google Search Console provides valuable data about traditional crawl activity, but it doesn’t currently offer detailed insights into AI crawler behavior. Instead, you need to rely on server log analysis to understand how AI bots are interacting with your site. Tools like the Screaming Frog Log File Analyser or enterprise solutions like Splunk allow you to filter server logs to isolate AI crawler requests and analyze their patterns.

Key metrics to monitor include:

By tracking these metrics over time, you can identify patterns and make data-driven decisions about how to optimize your AI crawl budget. If you notice that AI crawlers are spending 80% of their time on low-value pages, you can implement robots.txt blocks or server-level controls to redirect that budget toward your most important content.
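The 80% figure in the example above can be computed directly from the paths AI crawlers requested. The Python sketch below is a hedged illustration: the function name, the sample paths, and the choice of which URL prefixes count as "low-value" are all assumptions to adapt to your own site.

```python
def low_value_share(paths, low_value_prefixes):
    """Return the fraction of crawled paths that fall under low-value prefixes."""
    if not paths:
        return 0.0
    prefixes = tuple(low_value_prefixes)
    wasted = sum(1 for path in paths if path.startswith(prefixes))
    return wasted / len(paths)


# Invented paths requested by AI crawlers, e.g. extracted from server logs.
CRAWLED_PATHS = [
    "/search/widgets",
    "/search/gadgets",
    "/archive/2019/old-post",
    "/tag/misc",
    "/guides/crawl-budget",
]

# Hypothetical definition of low-value sections for this site.
LOW_VALUE_PREFIXES = ["/search/", "/archive/", "/tag/"]

if __name__ == "__main__":
    share = low_value_share(CRAWLED_PATHS, LOW_VALUE_PREFIXES)
    print(f"{share:.0%} of AI crawler requests hit low-value sections")
```

Tracking this share week over week shows whether robots.txt or server-level changes are actually shifting crawler attention toward the content you care about.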

As AI systems become increasingly sophisticated and prevalent, managing AI crawl budget will become as important as managing traditional search crawl budget. The emergence of new AI crawlers, the increasing aggressiveness of existing ones, and the growing importance of AI-generated answers in search results all point to a future where AI crawl budget optimization is a core technical SEO discipline.

Proposed conventions like llms.txt (a file that, like robots.txt, sits at the root of your site, but that points AI systems to curated content rather than restricting access) may eventually provide better tools for guiding how AI systems use your site. However, adoption is currently limited, and it’s unclear whether all AI providers will respect these conventions. In the meantime, server-level controls and strategic content optimization remain your most reliable tools for managing how AI systems interact with your site.

The competitive advantage will go to brands that proactively manage their AI crawl budget, ensuring their best content is discovered and cited by AI systems while protecting server resources from unnecessary crawl waste. This requires a combination of technical implementation, content optimization, and ongoing monitoring—but the payoff in terms of visibility in AI-generated answers makes it well worth the effort.

Track how your content appears in AI-generated answers across ChatGPT, Perplexity, and other AI search engines. Ensure your brand gets proper visibility where AI systems cite sources.

Learn how to optimize crawl budget for AI bots like GPTBot and Perplexity. Discover strategies to manage server resources, improve AI visibility, and control ho...

Community discussion on AI crawl budget management. How to handle GPTBot, ClaudeBot, and PerplexityBot without sacrificing visibility.

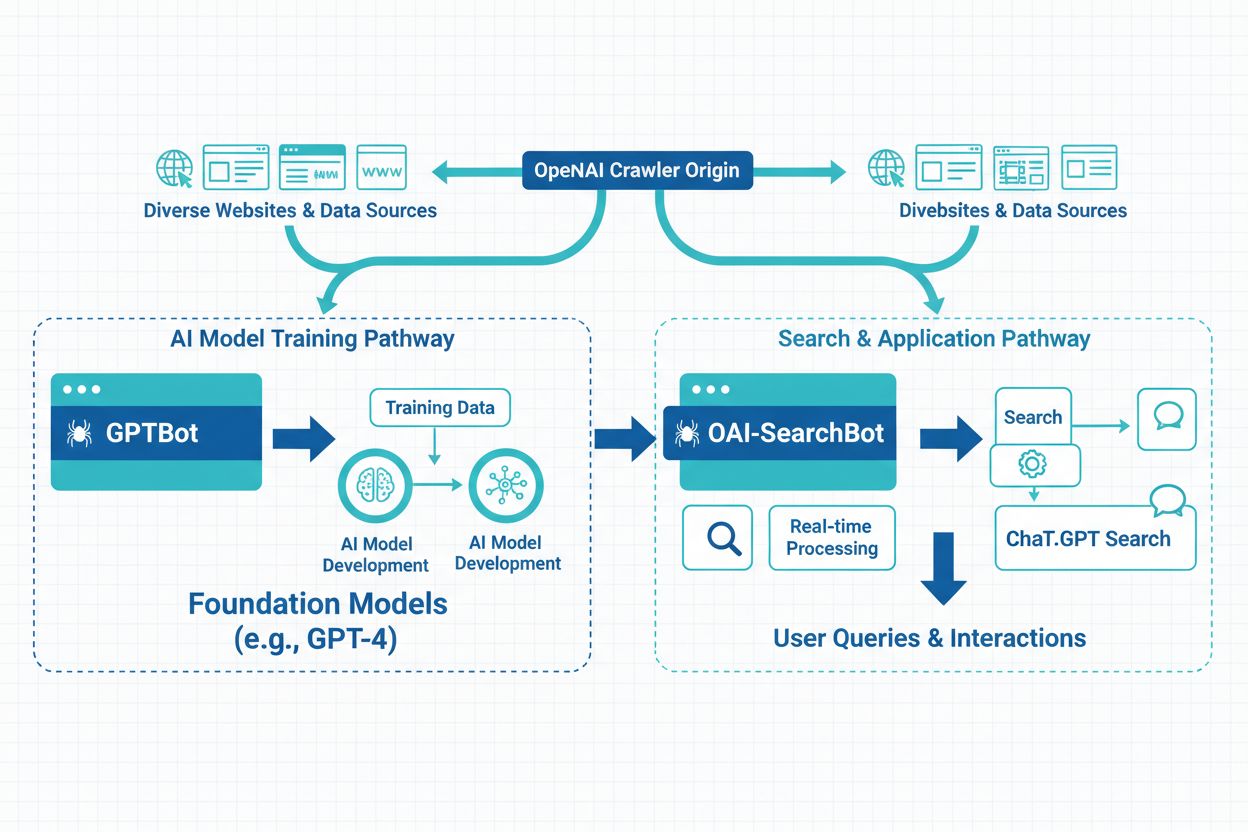

Learn the key differences between GPTBot and OAI-SearchBot crawlers. Understand their purposes, crawl behaviors, and how to manage them for optimal content visi...