GPTBot


Learn what GPTBot is, how it works, and whether you should allow or block OpenAI’s web crawler. Understand the impact on your brand visibility in AI search engines and ChatGPT.

GPTBot is OpenAI's web crawler that collects data from publicly accessible websites to train AI models like ChatGPT. Whether to allow it depends on your priorities: allow it for better brand visibility in AI search results and ChatGPT responses, or block it if you have concerns about content usage, intellectual property, or server resources.

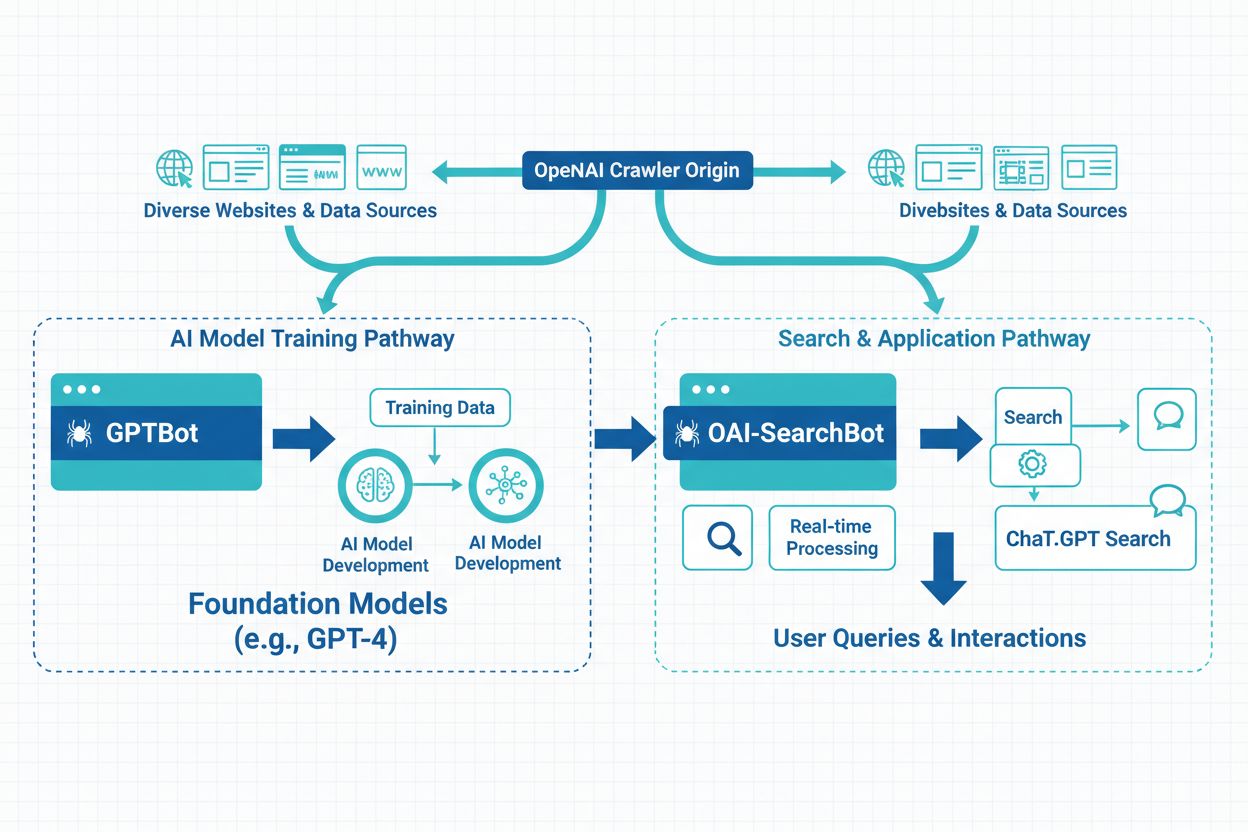

GPTBot is OpenAI’s official web crawler designed to systematically scan publicly accessible websites and collect data for training large language models like ChatGPT and GPT-4. Unlike traditional search engine crawlers such as Googlebot, which index content for search results, GPTBot serves a fundamentally different purpose: gathering information to improve the AI’s understanding of language patterns, current events, and real-world knowledge. When GPTBot visits your website, it identifies itself with a clear user agent string that appears in your server logs as Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; GPTBot/1.3; +https://openai.com/gptbot), making it easy for webmasters to recognize and monitor its activity.
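To recognize the crawler programmatically, you can look for the GPTBot token in the User-Agent header. The following is a minimal sketch, not an official OpenAI utility; the function name `gptbot_version` and the regular expression are assumptions made for illustration, and the sample string is the one quoted above.

```python
import re
from typing import Optional

# Matches the "GPTBot/<version>" token anywhere in a User-Agent header.
GPTBOT_TOKEN = re.compile(r"\bGPTBot/(\d+(?:\.\d+)*)")


def gptbot_version(user_agent: str) -> Optional[str]:
    """Return the GPTBot version a User-Agent string claims, or None."""
    match = GPTBOT_TOKEN.search(user_agent)
    return match.group(1) if match else None


gptbot_ua = (
    "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; "
    "GPTBot/1.3; +https://openai.com/gptbot)"
)
browser_ua = "Mozilla/5.0 (X11; Linux x86_64; rv:128.0) Gecko/20100101 Firefox/128.0"

print(gptbot_version(gptbot_ua))   # 1.3
print(gptbot_version(browser_ua))  # None
```

Keep in mind that any client can send this header, so a match only tells you what the request claims to be, not who actually sent it.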

The crawler operates with transparency and respect for established web standards. Before accessing any content on your site, GPTBot checks your robots.txt file, which is the standard mechanism webmasters use to tell automated bots which parts of a site they may or may not access. If you include a disallow rule for GPTBot in your robots.txt file, the crawler will respect your preference and stay out of the paths you disallow (or the entire site, if you disallow /). Note that robots.txt is advisory rather than enforced: compliance is voluntary, so it ultimately depends on the crawler operator honoring the file.
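The robots.txt check described above can be approximated with Python's standard `urllib.robotparser` module. This is a sketch of how a compliant crawler might evaluate rules, not OpenAI's actual implementation; the rule set, the `allowed` helper, and the example.com URLs are assumptions chosen for illustration.

```python
from urllib.robotparser import RobotFileParser

# Illustrative rules (an assumption): keep GPTBot out of /private/ only.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /private/
"""


def allowed(user_agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return True if robots_txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


print(allowed("GPTBot", "https://example.com/private/report.html"))     # False
print(allowed("GPTBot", "https://example.com/blog/post"))               # True
print(allowed("Googlebot", "https://example.com/private/report.html"))  # True
```

Because the rule group names only GPTBot, other crawlers are unaffected by it.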

GPTBot only scans publicly accessible content and cannot bypass paywalls, login pages, or restricted sections of your website. The crawler does not attempt to access private information, authenticated areas, or content marked as private. This limitation means that sensitive data, member-only content, and subscription-based material remain protected from GPTBot’s reach. The information gathered by GPTBot is used exclusively to improve the AI’s understanding of language, context, and current events, with no direct impact on your traditional search engine rankings or how your site appears in Google Search results.

Recent data reveals the dramatic rise of GPTBot as a dominant force in web crawling. Between May 2024 and May 2025, GPTBot’s share of AI crawler traffic surged from just 5% to 30%, and its raw request volume grew by 305% over the same period. This explosive growth reflects OpenAI’s massive investment in training data collection and the increasing importance of AI models in the digital ecosystem. GPTBot has become the second-most blocked crawler on the web today and the most blocked crawler via robots.txt files, with more than 3.5% of websites currently implementing blocking rules against it.

Major publishers and content creators have taken notice of this trend. The New York Times, CNN, and more than 30 of the top 100 websites have already implemented blocking rules against GPTBot, signaling growing concerns about content usage and intellectual property rights. However, this blocking trend doesn’t tell the complete story. While some sites view GPTBot as a threat to their business model, others recognize it as an opportunity to ensure their content reaches the billions of users who interact with ChatGPT and other AI systems daily. The decision to allow or block GPTBot has become a strategic choice that reflects each organization’s values, business model, and long-term vision for their digital presence.

| Metric | Value | Significance |
|---|---|---|
| GPTBot Growth (May 2024 - May 2025) | +305% | Fastest-growing AI crawler |
| Current Share of AI Crawler Traffic | 30% | Dominant AI crawler by volume |
| Websites Blocking GPTBot | 3.5%+ | Second-most blocked crawler |
| Top 100 Websites Blocking | 30+ | Major publishers blocking access |
| ChatGPT Weekly Users | 800 million | Potential audience reach |

Website owners choose to block GPTBot for several legitimate and interconnected reasons that reflect genuine concerns about content usage, business sustainability, and data protection. The most prominent concern centers on content usage without compensation. Publishing high-quality content requires significant time, resources, and expertise. When AI systems scrape that work to train models that answer user questions—often without linking back to the original source—the arrangement feels fundamentally unfair to many content creators. This concern is particularly acute for publishers, journalists, and specialized content creators who depend on traffic and attribution to sustain their operations. The fear is that as AI systems become more sophisticated at answering questions directly, users may have less incentive to visit original websites, thereby eroding traffic and devaluing the original content investment.

Security and server resource concerns represent another significant factor in blocking decisions. While GPTBot respects robots.txt rules like other crawlers, questions remain about the cumulative impact of multiple AI crawlers accessing your content simultaneously. AI crawlers like GPTBot and ClaudeBot can consume substantial bandwidth, with some websites reporting surges of up to 30 terabytes of traffic, placing significant strain on servers, especially those in shared hosting environments. Even if GPTBot itself isn’t malicious, the addition of one more automated system accessing your content adds complexity to site monitoring, firewall configurations, and bot management strategies. There’s also concern about data exposure through pattern matching, where seemingly benign pieces of content reveal more than intended when combined and analyzed by machine learning systems.

Legal uncertainty creates additional hesitation for many website owners. AI-driven tools like GPTBot exist in a gray area regarding data privacy, copyright laws, and intellectual property rights. Some marketers worry that allowing GPTBot to scrape content could unintentionally violate regulations like GDPR or CCPA, particularly if personal data or user-generated content is involved. Even though the content is publicly accessible, the legal argument around fair use in AI training remains unsettled and contested. The intellectual property angle adds another layer of complexity: if your original writing ends up paraphrased in a ChatGPT answer, who owns that output? Right now, no clear legal precedent exists to answer this question definitively. For brands operating in regulated industries like finance, healthcare, or law, the conservative approach of blocking access while the legal landscape continues to evolve makes strategic sense.

Despite the legitimate concerns about blocking, compelling reasons exist for allowing GPTBot access to your content. The most significant advantage is brand visibility in ChatGPT and AI-powered search results. ChatGPT has approximately 800 million weekly users and handles billions of queries monthly. Many of those users ask questions that your content can answer. If GPTBot cannot access your site, the model relies on secondhand information or outdated sources to discuss your brand, products, or expertise. This represents a missed opportunity and a potential risk to your reputation. Allowing GPTBot to crawl your content helps ensure that ChatGPT’s responses reflect your messaging, offerings, and expertise accurately. This is essentially reputation management on autopilot—your content gets represented in one of the world’s most widely used AI systems.

AI search traffic converts significantly better than traditional organic search traffic. Early data shows that visitors from AI search platforms convert 23 times better than traditional organic search visitors. While AI search currently drives less than 1% of total web traffic, the quality of those visits tells a compelling story. AI search users typically arrive further along the decision-making journey. They have already used AI to research options, compare features, and narrow down choices before clicking through to your website. This means they’re more qualified, more informed, and more likely to convert into customers or take desired actions. As AI tools become a primary way people search, discover, and engage with content, ignoring AI search completely could mean falling behind competitors who are actively optimizing for this emerging channel.

Future-proofing your digital presence is another critical consideration. As AI tools become increasingly central to how people discover information, blocking AI crawlers entirely could mean opting out of the future of search. Generative engine optimization represents the next evolution of search visibility, and ChatGPT accounts for over 80% of AI referral traffic, making OpenAI’s crawler particularly important for long-term visibility. The web and search landscape are changing rapidly, and organizations that position themselves now to be part of the AI ecosystem will have significant advantages as these technologies mature and become even more central to how people find information.

Blocking GPTBot is straightforward and reversible through your robots.txt file, which is the standard mechanism for communicating with web crawlers. To block GPTBot completely from your entire website, add these lines to your robots.txt file:

    User-agent: GPTBot
    Disallow: /

This tells OpenAI’s crawler to avoid your entire site. If you want more granular control, you can allow partial access by replacing the / with the specific directories or pages you want to keep off-limits; everything else remains crawlable. For example, to block GPTBot from accessing your /private/ directory while allowing access to the rest of your site:

    User-agent: GPTBot
    Disallow: /private/

If you want to block all OpenAI-related crawling activities, you should add rules for the three different bots that OpenAI operates:

    User-agent: GPTBot
    Disallow: /

    User-agent: ChatGPT-User
    Disallow: /

    User-agent: OAI-SearchBot
    Disallow: /
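If you maintain these rules by script, a small generator can keep the three groups consistent and let you sanity-check the result before deploying it. The sketch below uses Python's standard `urllib.robotparser`; the helper name `render_block_rules` and the example.com URL are assumptions for illustration, and the agent names are the three listed above.

```python
from urllib.robotparser import RobotFileParser

OPENAI_AGENTS = ["GPTBot", "ChatGPT-User", "OAI-SearchBot"]


def render_block_rules(agents, path="/"):
    """Render one robots.txt group per agent, separated by blank lines."""
    groups = [f"User-agent: {agent}\nDisallow: {path}" for agent in agents]
    return "\n\n".join(groups) + "\n"


robots_txt = render_block_rules(OPENAI_AGENTS)
print(robots_txt)

# Parse the generated text back and confirm each agent is actually blocked.
parser = RobotFileParser()
parser.parse(robots_txt.splitlines())
blocked = {
    agent: not parser.can_fetch(agent, "https://example.com/")
    for agent in OPENAI_AGENTS
}
print(blocked)
```

Each `User-agent` line sits directly above its `Disallow` line, with blank lines only between groups. Some parsers, including Python's, treat a blank line as the end of a group, so a blank line between the two directives can cause the rule to be ignored.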

Alternative blocking methods offer greater control but require more technical expertise. IP blocking allows you to deny OpenAI’s IP address ranges from your server firewall or hosting control panel, though this method requires keeping the IP list updated as OpenAI’s infrastructure changes. Rate limiting sets restrictions on the number of requests per minute or hour to prevent server overload. Web Application Firewalls (WAFs) implement server-side blocking rules based on a bot’s IP address or user agent string, offering more sophisticated control over bot traffic. You can monitor crawler activity in your server logs or through bot-management tools like Cloudflare to confirm that GPTBot respects your instructions.
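To make the rate-limiting idea concrete, here is a minimal sliding-window limiter keyed by client name. It is an illustrative sketch only: the class name, the limit of three requests per 60 seconds, and the choice to key on the crawler name are all assumptions, and in practice this logic usually lives in your web server, CDN, or WAF configuration rather than in application code.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most max_requests per key within any window_seconds span."""

    def __init__(self, max_requests: int, window_seconds: float):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)  # key -> timestamps of allowed requests

    def allow(self, key: str, now: float) -> bool:
        hits = self._hits[key]
        # Drop timestamps that have aged out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False  # over the limit: reject (e.g. respond with HTTP 429)
        hits.append(now)
        return True


limiter = SlidingWindowLimiter(max_requests=3, window_seconds=60)
# Timestamps are passed in explicitly so the example is deterministic;
# a real deployment would use a clock such as time.monotonic().
decisions = [limiter.allow("GPTBot", t) for t in (0, 10, 20, 30, 61)]
print(decisions)  # [True, True, True, False, True]
```

The fourth request is rejected because three requests already fall inside the 60-second window; by the fifth, the oldest has expired and capacity is available again.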

Certain industries have particularly strong reasons to limit bot access to protect data, revenue, and user interests. Publishing and media companies face direct threats to their business model, as they depend on traffic and ad revenue. Publishers want users visiting their sites directly, not being redirected to AI-generated summaries. Major examples include The New York Times, Associated Press, and Reuters, which have all implemented blocking rules. E-commerce platforms shield unique product descriptions and pricing from competitors and data scraping tools, protecting their competitive advantages. User-generated content platforms like Reddit protect community-created content and licensed data from unrestricted scraping that could devalue their assets. High-authority data sites in sensitive industries like law, medicine, and finance control access to specialized, research-based content to maintain compliance and protect proprietary information.

You can confirm whether GPTBot is visiting your site through several methods. Checking server logs is the most direct approach—look for user agent strings containing “GPTBot” in your access logs to see when and how frequently the crawler visits. Using analytics tools provides another avenue, as many analytics platforms show bot traffic and allow filtering via user agent, making identification straightforward. SEO monitoring software reports on crawler activity, including OpenAI’s bots, giving you visibility into how often GPTBot accesses your content. Regular monitoring helps you understand the frequency of GPTBot visits and whether the crawler impacts your site performance. If you notice GPTBot activity and want to control access, you can easily manage permissions through your robots.txt file or implement more sophisticated blocking methods through your hosting provider or web application firewall.
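As a rough illustration of the server-log approach, the sketch below counts GPTBot requests per path. It assumes your server writes the common "combined" access log format used by Apache and Nginx; the regular expression, the function name `count_gptbot_hits`, and the sample log lines are invented for the example, so adapt them to your own log layout.

```python
import re
from collections import Counter

# Combined Log Format: we need the request path and the quoted User-Agent field.
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)

GPTBOT_UA = (
    "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; "
    "GPTBot/1.3; +https://openai.com/gptbot)"
)

# Hypothetical sample entries using documentation-reserved IP addresses.
SAMPLE_LOG = [
    '203.0.113.7 - - [10/May/2025:13:55:36 +0000] "GET /blog/post HTTP/1.1" '
    '200 5123 "-" "' + GPTBOT_UA + '"',
    '198.51.100.4 - - [10/May/2025:13:56:01 +0000] "GET /blog/post HTTP/1.1" '
    '200 5123 "-" "Mozilla/5.0 (X11; Linux x86_64; rv:128.0) Firefox/128.0"',
    '203.0.113.7 - - [10/May/2025:13:57:12 +0000] "GET /pricing HTTP/1.1" '
    '200 2048 "-" "' + GPTBOT_UA + '"',
]


def count_gptbot_hits(lines):
    """Count requests per path whose User-Agent contains 'GPTBot'."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match and "GPTBot" in match.group("agent"):
            hits[match.group("path")] += 1
    return hits


hits = count_gptbot_hits(SAMPLE_LOG)
print(hits.most_common())
```

To run this against a real file, pass an open file object instead of `SAMPLE_LOG`, since iterating over a file yields one line at a time.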

The decision to allow or block GPTBot should align with your specific business goals, content strategy, and long-term vision.

Block GPTBot if:

- You publish proprietary content or operate in a tightly regulated space where data protection is paramount.
- You’re not ready to feed the AI ecosystem and prefer to maintain complete control over your content usage.
- You prioritize content control, legal compliance, or security over potential AI visibility.
- Your server resources are limited and bot traffic causes measurable performance issues.
- You have strong concerns about intellectual property and content ownership rights.

Allow GPTBot if:

- You want to boost your AI-era visibility, brand influence, and relevance across generative platforms.
- You want accurate brand representation to ChatGPT’s 800 million weekly users.
- You’re building for the future and want to be part of the AI search ecosystem.
- You want to improve your site’s generative engine optimization and capture high-converting AI search traffic.
- You’re aiming for long-term visibility and brand reach in an increasingly AI-driven digital landscape.

The web and search are changing rapidly, and either way, you need to decide where your content fits into that future and act accordingly. The choice between allowing and blocking GPTBot is not permanent—you can adjust your robots.txt file at any time to change your preference. What matters most is making an informed decision based on your business priorities, understanding the implications for your brand visibility in AI systems, and monitoring the results of your choice over time.
