MUM (Multitask Unified Model)

MUM is Google's Multitask Unified Model—a multimodal AI that processes text, images, video, and audio across 75+ languages. Learn how it transforms search and i...


MUM (Multitask Unified Model) is Google's advanced AI model that understands complex search queries across text, images, and video in 75+ languages. It affects AI search by reducing the need for multiple searches, providing richer multimodal results, and enabling more contextual understanding of user intent.

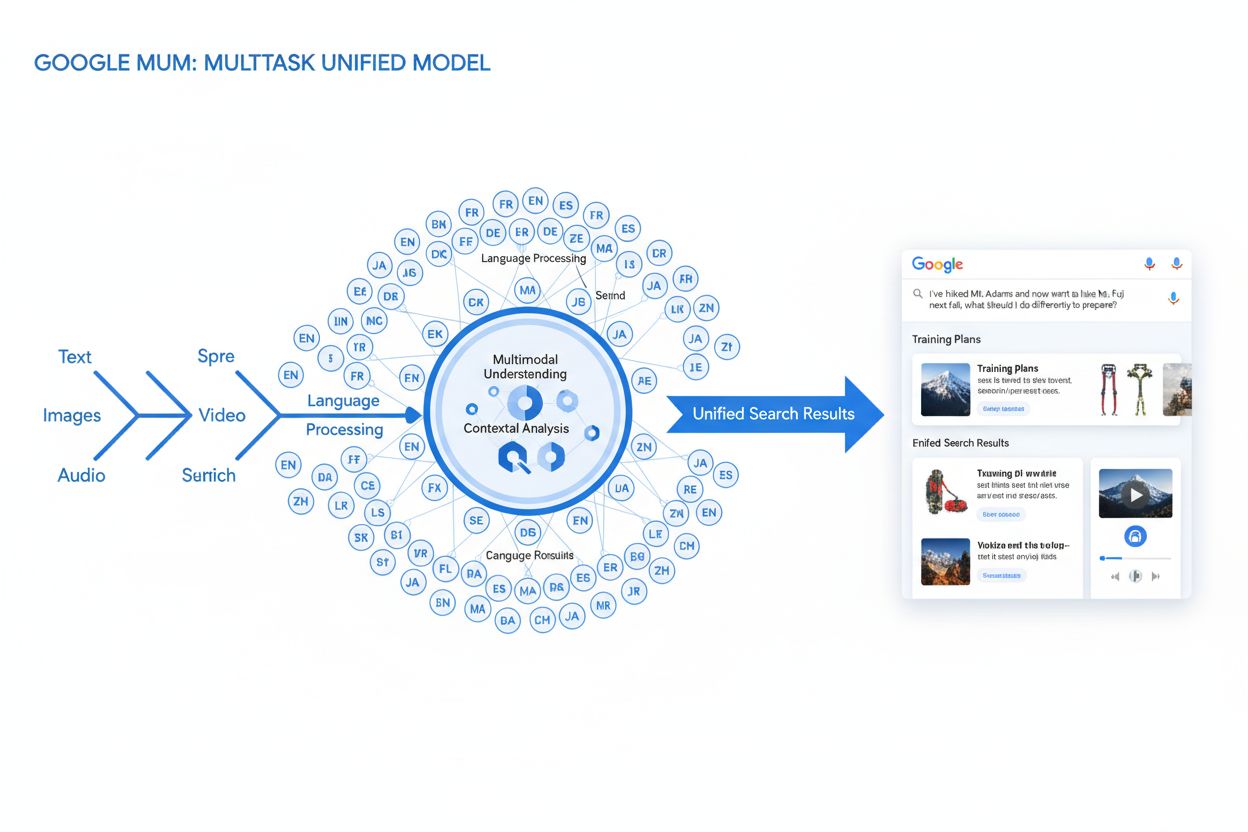

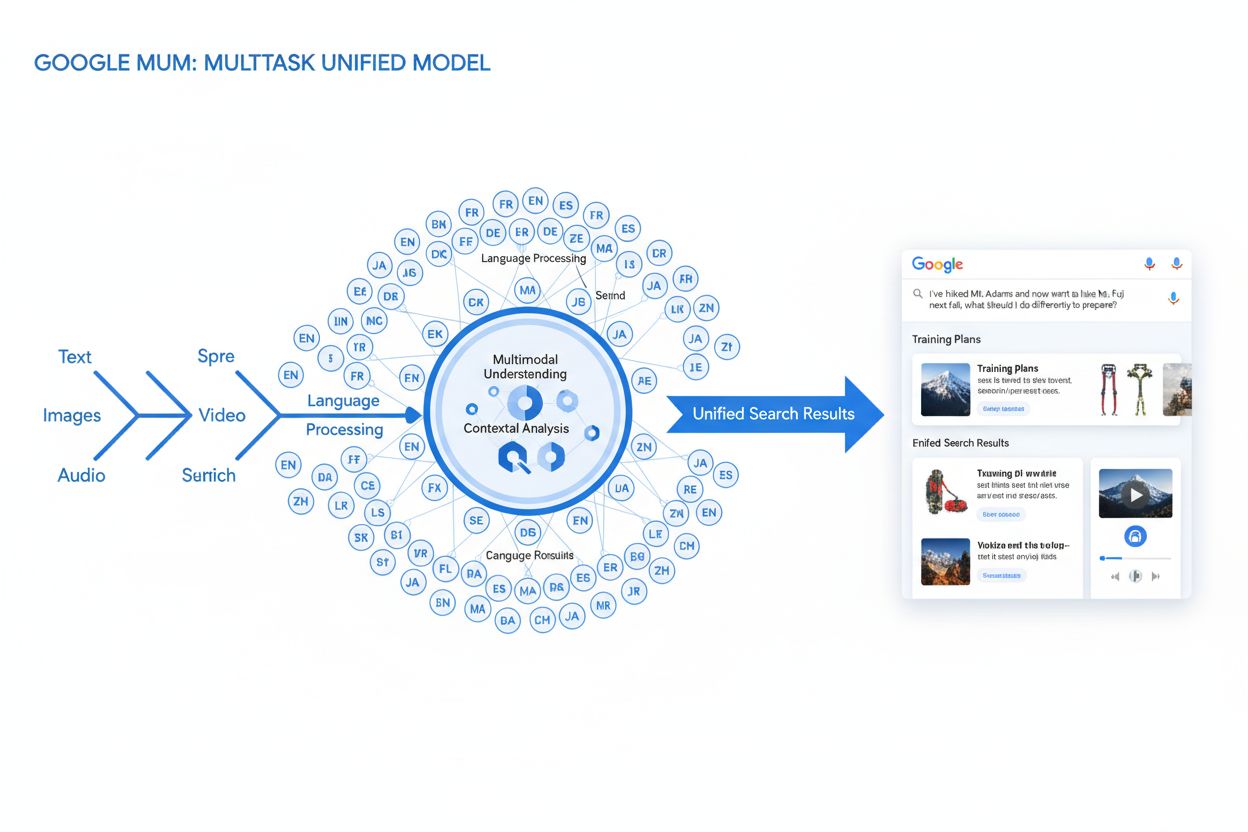

MUM (Multitask Unified Model) is an artificial intelligence model developed by Google and announced in May 2021. It marks a significant step forward in how search engines understand and process complex user queries. Unlike earlier models that focused primarily on text, MUM is multimodal and multilingual: at announcement it understood information across text and images, and Google said it could expand to more modalities such as video and audio. This shift changes how AI search engines deliver results and how users interact with search platforms.

The core innovation behind MUM lies in its ability to understand context and nuance in ways that previous models could not. Google’s research team built MUM on the T5 text-to-text framework, and Google describes it as 1,000 times more powerful than BERT, its predecessor. MUM not only understands language but also generates it. The model was trained across 75 different languages and many different tasks simultaneously, which gives it a more comprehensive understanding of information and world knowledge and of how information relates across contexts, cultures, and formats.

The way MUM processes search queries differs fundamentally from traditional search algorithms. When a user submits a complex query, MUM analyzes multiple possible interpretations in parallel rather than narrowing down to a single understanding. This parallel processing capability means the system can surface insights based on deep knowledge of the world while simultaneously identifying related questions, comparisons, and diverse content sources. For example, if someone asks “I’ve hiked Mt. Adams and now want to hike Mt. Fuji next fall, what should I do to prepare?”, MUM understands that this query involves comparing two mountains, requires elevation and trail information, and includes preparation aspects like fitness training and gear selection.

MUM is described as using sequence-to-sequence matching, which analyzes entire queries as complete sequences rather than matching individual keywords to database entries. In this approach, the system converts search input into high-dimensional vectors that represent semantic meaning, then compares these vectors against content in Google’s index. This vector-based semantic understanding lets MUM retrieve results based on meaning rather than simple term matching. MUM also transfers knowledge across languages: it can learn from sources written in a language other than the user’s search language and bring that information to them in their preferred language.
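The vector comparison described above can be illustrated with a minimal sketch. It is not Google's implementation: the three-dimensional vectors, the document IDs, and the function names `cosine_similarity` and `rank_by_similarity` are all invented for illustration, and cosine similarity is assumed here only because it is a common way to compare embedding vectors.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_by_similarity(query_vec, doc_vecs):
    """Return (doc_id, score) pairs sorted from most to least similar to the query."""
    scored = [(doc_id, cosine_similarity(query_vec, vec))
              for doc_id, vec in doc_vecs.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Toy vectors, made up for this example. Real embeddings have hundreds
# or thousands of dimensions and come from a trained model.
query = [0.9, 0.1, 0.3]
documents = {
    "fuji-trail-guide": [0.8, 0.2, 0.4],
    "crm-pricing": [0.1, 0.9, 0.0],
    "hiking-gear-list": [0.6, 0.0, 0.7],
}

ranking = rank_by_similarity(query, documents)
for doc_id, score in ranking:
    print(f"{doc_id}: {score:.3f}")
```

In this toy data the two hiking documents score well above the unrelated one even though no keywords are compared, which is the point of matching on meaning rather than terms.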

| Feature | Traditional Search | MUM-Powered Search |
|---|---|---|
| Input Types | Text only | Text, images, video, audio |
| Language Support | Limited multilingual | 75+ languages natively |
| Query Understanding | Keyword matching | Contextual intent analysis |
| Result Format | Text links primarily | Multimodal rich results |
| Processing Speed | Sequential | Parallel processing |
| Context Awareness | Single query focus | Cross-document understanding |

One of MUM’s most transformative capabilities is its multimodal understanding, which means it can process and understand information from different formats simultaneously. This is fundamentally different from previous search technologies that treated text, images, and video as separate data streams. With MUM, a user could theoretically take a photo of their hiking boots and ask “can I use these to hike Mt. Fuji?” and the system would understand both the image and the question together, providing an integrated answer that connects visual information with contextual knowledge.

This multimodal approach has significant implications for how content appears in search results. Rather than displaying a simple list of blue links, MUM-powered search results are becoming increasingly visual and interactive. Users now see integrated carousels of images, embedded videos with timestamps, zoomable product photos, and contextual overlays that provide information without requiring clicks. The search experience itself becomes more immersive and exploratory, with features like “Things to Know” panels that break down complex queries into digestible subtopics, each with relevant snippets and visual elements.

Language has traditionally been a significant barrier to accessing information, but MUM fundamentally changes this dynamic. The model’s ability to transfer knowledge across languages means that helpful information written in Japanese about Mt. Fuji can now inform search results for English-language queries about the same topic. This cross-lingual knowledge transfer doesn’t simply translate content; instead, it understands concepts and information in one language and applies that understanding to deliver results in another language.

This capability has profound implications for global information access. When searching for information about visiting Mt. Fuji, users might now see results about where to enjoy the best views, local onsen (hot springs), and popular souvenir shops—information that is more commonly found when searching in Japanese. The system essentially democratizes access to information that was previously locked behind language barriers. For content creators and brands, this means that multilingual content strategies become increasingly important, as your content in one language can now influence search results in other languages.

One of MUM’s primary design goals is to reduce the number of searches users need to perform to get complete answers. According to Google, people issue eight queries on average for complex tasks. Before MUM, if someone wanted to compare hiking Mt. Adams with Mt. Fuji, they would need to search for elevation differences, average temperatures, trail difficulty, required gear, training recommendations, and more. Each search would require clicking through multiple results and synthesizing information from different sources.

With MUM, the system attempts to anticipate these follow-up questions and provide comprehensive information in a single search result. The SERP becomes a unified information hub that addresses multiple aspects of the user’s underlying need. This shift has important implications for how brands and content creators think about visibility. Rather than optimizing for individual keyword rankings, success increasingly depends on being part of comprehensive topic clusters that address user intent from multiple angles. Content that provides deep, layered information addressing various aspects of a topic is more likely to be surfaced by MUM.

Structured data and entity recognition are widely treated as important inputs for MUM-powered search. Schema markup and other structured information help search systems understand what content is about and how different pieces of information relate to each other. Implementing proper schema markup, such as FAQPage, HowTo, Article, and VideoObject schemas, therefore becomes increasingly important for visibility in MUM-powered search results.
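As one example of the schema markup mentioned above, FAQPage markup is usually embedded as JSON-LD. The sketch below builds it with the standard schema.org structure (`FAQPage`, `Question`, `acceptedAnswer`, `Answer`). The helper name `build_faq_jsonld` and the sample question are illustrative assumptions, not part of any library.

```python
import json


def build_faq_jsonld(qa_pairs):
    """Build a schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in qa_pairs
        ],
    }


# Sample content, invented for this example.
faq = build_faq_jsonld([
    ("What is MUM?",
     "MUM is Google's Multitask Unified Model, announced in May 2021."),
])

# JSON-LD is typically placed in a script tag in the page's HTML.
script_tag = (
    '<script type="application/ld+json">\n'
    + json.dumps(faq, indent=2)
    + "\n</script>"
)
print(script_tag)
```

The same pattern extends to HowTo, Article, and VideoObject by changing `@type` and supplying the properties each type requires.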

Beyond schema implementation, optimizing for MUM centers on entity building and topical authority. Rather than thinking about individual keywords, successful content strategies now emphasize establishing key topics or entities relevant to your industry. For example, instead of optimizing for the single keyword “CRM for small business,” a comprehensive approach would establish related entities like customer relationship management, sales automation, lead management, customer support, and customer data management. This entity-based approach helps MUM understand the full scope of your expertise and surface your content across a broader range of related queries.
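One way to make the entity-based approach concrete is to check which target entities a site already covers. The sketch below uses the CRM entities from the example above. The page paths, the page-to-entity mapping, and the `coverage_report` helper are hypothetical; in practice the mapping would come from a content audit.

```python
# Target entities taken from the CRM example in the text.
TARGET_ENTITIES = {
    "customer relationship management",
    "sales automation",
    "lead management",
    "customer support",
    "customer data management",
}

# Hypothetical pages and the entities each one covers.
pages = {
    "/blog/crm-for-small-business": {
        "customer relationship management",
        "lead management",
    },
    "/guides/sales-automation-basics": {"sales automation"},
}


def coverage_report(target, page_entities):
    """Summarize which target entities are covered by at least one page."""
    covered = set().union(*page_entities.values()) & target
    missing = target - covered
    return {
        "covered": sorted(covered),
        "missing": sorted(missing),
        "ratio": len(covered) / len(target) if target else 0.0,
    }


report = coverage_report(TARGET_ENTITIES, pages)
print(report["missing"])
print(f"coverage: {report['ratio']:.0%}")
```

The entities listed as missing are candidates for new pages or sections in the topic cluster.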

The rise of MUM and similar multimodal AI models has significant implications for how brands appear in AI-powered search results. Traditional SEO metrics like click-through rates and individual page rankings become less relevant when users can consume comprehensive information directly in search results without clicking through to websites. This creates both challenges and opportunities for content creators and brands.

The challenge is that users may find answers to their questions without ever visiting your website. The opportunity is that being featured prominently in these rich, multimodal search results—through featured snippets, video carousels, image galleries, and knowledge panels—provides brand visibility and authority even without direct traffic. This requires a fundamental shift in how success is measured. Rather than focusing solely on traffic metrics, brands need to develop new KPIs that reflect visibility within search results, brand mentions in AI-generated answers, and engagement with multimodal content formats.
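A visibility-oriented KPI of the kind described above could be computed as the share of tracked queries in which the brand appears, broken down by result feature. The following is a rough sketch under stated assumptions: the observation records, their field names (`query`, `feature`, `brand_present`), and the `visibility_share` function are invented for illustration, and the data would need to come from whatever rank-tracking or monitoring tool you use.

```python
from collections import defaultdict


def visibility_share(observations):
    """Per result feature, the fraction of observations where the brand appeared."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for obs in observations:
        totals[obs["feature"]] += 1
        if obs["brand_present"]:
            hits[obs["feature"]] += 1
    return {feature: hits[feature] / totals[feature] for feature in totals}


# Hypothetical observations, one per (query, result feature) checked.
observations = [
    {"query": "hike mt fuji preparation", "feature": "featured_snippet", "brand_present": True},
    {"query": "mt fuji gear list", "feature": "featured_snippet", "brand_present": False},
    {"query": "mt fuji trail video", "feature": "video_carousel", "brand_present": True},
    {"query": "mt fuji", "feature": "knowledge_panel", "brand_present": False},
]

shares = visibility_share(observations)
for feature, share in shares.items():
    print(f"{feature}: {share:.0%}")
```

Tracked over time, these shares give a view of presence inside search results that traffic metrics alone do not capture.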

To optimize for MUM and similar AI models, content strategies must evolve in several key directions. First, content must become genuinely multimodal, incorporating high-quality images, videos, infographics, and interactive elements alongside text. Second, content should be structured with clear semantic relationships, using proper heading hierarchies, schema markup, and internal linking to establish topical connections. Third, content creators should focus on comprehensive topic coverage rather than individual keyword optimization, addressing the full spectrum of user questions and needs related to a topic.

Additionally, brands should consider multilingual content strategies that recognize MUM’s ability to transfer knowledge across languages. This doesn’t necessarily mean translating every piece of content, but rather understanding how information in different languages can complement each other and serve global audiences. Finally, content should be created with user intent and journey mapping in mind, addressing questions users might ask at different stages of their decision-making process, from initial awareness through final purchase decisions.

The emergence of MUM and similar multimodal AI models represents a fundamental shift in how search engines understand and deliver information. By processing multiple formats and languages simultaneously, these systems can provide more comprehensive, contextual, and helpful results. For brands and content creators, success in this new landscape requires moving beyond traditional keyword optimization to embrace multimodal, topically comprehensive, and semantically rich content strategies that serve user intent across multiple formats and languages.
