AI Source Selection

Learn how AI systems select and rank sources for citations. Discover the algorithms, signals, and factors that determine which websites AI platforms like ChatGP...

Learn about source selection bias in AI, how it affects machine learning models, real-world examples, and strategies to detect and mitigate this critical fairness issue.

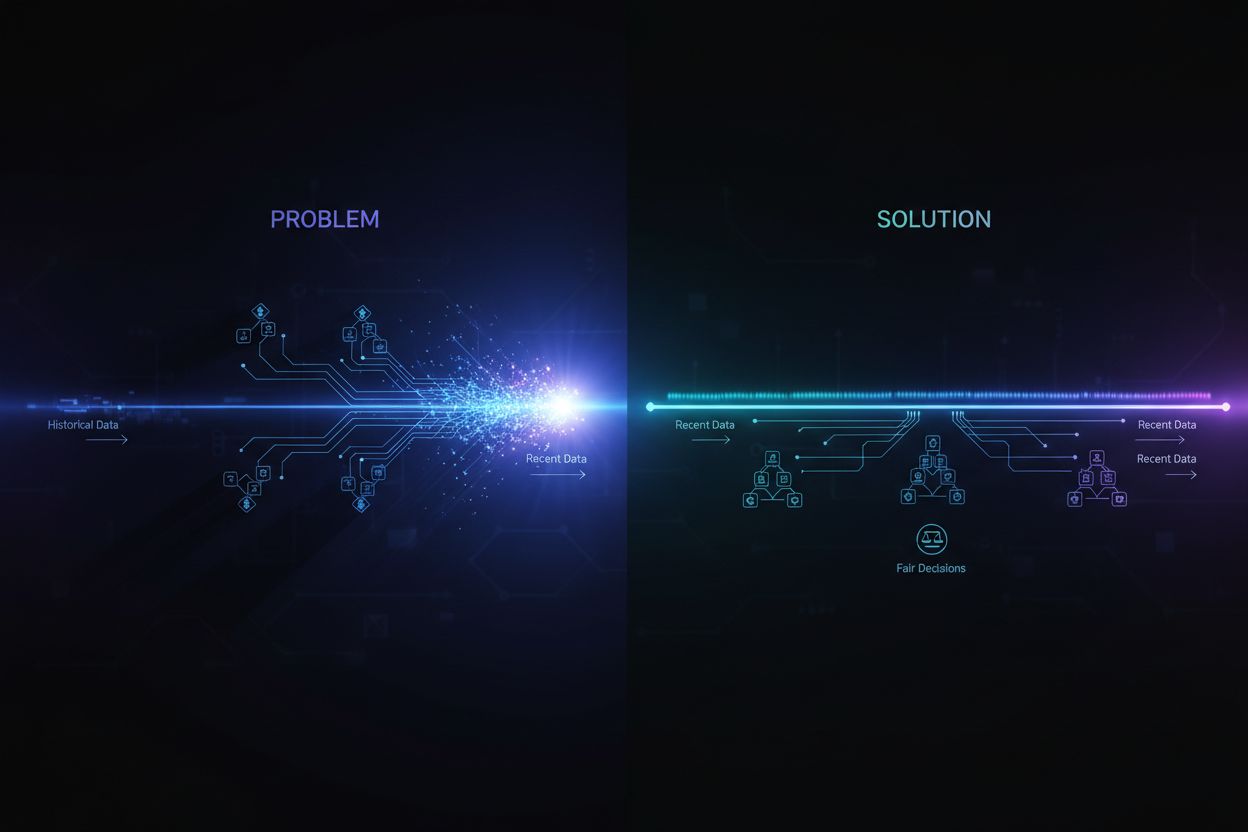

Source selection bias occurs when training data is not representative of the overall population or real-world distribution, causing AI models to make inaccurate predictions for underrepresented groups or scenarios.

Source selection bias is a fundamental problem in artificial intelligence that occurs when the data used to train machine learning models does not accurately represent the real-world population or distribution they are intended to serve. This type of bias emerges when datasets are chosen in ways that systematically exclude or underrepresent certain groups, scenarios, or characteristics. The consequence is that AI models learn patterns from incomplete or skewed data, leading to predictions that are inaccurate, unfair, or discriminatory toward underrepresented populations. Understanding this bias is critical for anyone developing, deploying, or relying on AI systems, as it directly impacts the fairness, accuracy, and reliability of automated decision-making across industries.

Source selection bias is distinct from other forms of bias because it originates at the data collection stage itself. Rather than emerging from algorithmic choices or human assumptions during model development, source selection bias is baked into the foundation of the training dataset. This makes it particularly insidious because models trained on biased source data will perpetuate and amplify these biases in their predictions, regardless of how sophisticated the algorithm is. The problem becomes even more critical when AI systems are deployed in high-stakes domains like healthcare, finance, criminal justice, and hiring, where biased predictions can have serious consequences for individuals and communities.

Source selection bias develops through several distinct mechanisms during the data collection and curation process. The most common pathway occurs through coverage bias, where certain populations or scenarios are systematically excluded from the training dataset. For example, if a facial recognition system is trained primarily on images of light-skinned individuals, it will have poor coverage of darker-skinned faces, leading to higher error rates for those populations. This happens because data collectors may have limited access to diverse populations, or they may unconsciously prioritize certain groups when gathering data.

Another critical mechanism is non-response bias, also called participation bias, which occurs when certain groups are less likely to participate in data collection processes. Consider a survey-based dataset for predicting consumer preferences: if certain demographic groups are significantly less likely to respond to surveys, their preferences will be underrepresented in the training data. This creates a dataset that appears balanced but actually reflects participation patterns rather than true population characteristics. In healthcare, for instance, if clinical trial data comes predominantly from urban populations with access to advanced medical facilities, the resulting AI models may not generalize well to rural or underserved communities.

Sampling bias represents a third mechanism where proper randomization is not used during data collection. Instead of randomly selecting data points, collectors might choose the first available samples or use convenience sampling methods. This introduces systematic errors because the samples chosen are not representative of the broader population. For example, if an AI model for predicting loan defaults is trained on data collected from a specific geographic region or time period, it may not accurately predict defaults in other regions or during different economic conditions.
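The difference between convenience sampling and random sampling can be illustrated with a small sketch. Everything here is hypothetical: the `region` field, the record counts, and the `group_shares` helper are assumptions made for illustration, not part of any real lending dataset.

```python
import random

def group_shares(rows, key):
    """Return the fraction of rows that fall into each value of `key`."""
    counts = {}
    for row in rows:
        counts[row[key]] = counts.get(row[key], 0) + 1
    total = len(rows)
    return {value: count / total for value, count in counts.items()}

# Hypothetical population: 1,000 loan records stored region by region.
population = (
    [{"region": "north"} for _ in range(500)]
    + [{"region": "south"} for _ in range(300)]
    + [{"region": "west"} for _ in range(200)]
)

# Convenience sample: take the first 200 records in storage order.
convenience_sample = population[:200]

# Simple random sample: every record has the same chance of selection.
rng = random.Random(0)  # fixed seed so the sketch is repeatable
random_sample = rng.sample(population, 200)

convenience_shares = group_shares(convenience_sample, "region")
random_shares = group_shares(random_sample, "region")
```

Because the records are stored region by region, the convenience sample contains only one region, while the random sample draws from all three in roughly their population proportions.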

| Bias Type | Mechanism | Real-World Example |
|---|---|---|
| Coverage Bias | Systematic exclusion of populations | Facial recognition trained on light-skinned faces only |
| Non-Response Bias | Participation gaps in data collection | Healthcare models trained on urban populations only |
| Sampling Bias | Improper randomization in selection | Loan prediction models trained on single geographic region |
| Temporal Bias | Data from specific time periods | Models trained on pre-pandemic data applied post-pandemic |
| Source Diversity Bias | Limited data sources | Medical imaging datasets from single hospital system |

The consequences of source selection bias in AI systems are profound and far-reaching, affecting both individuals and organizations. In healthcare, source selection bias has led to diagnostic systems that perform significantly worse for certain patient populations. Research has documented that AI algorithms for diagnosing skin cancer are substantially less accurate for patients with darker skin tones, with some studies reporting roughly half the diagnostic accuracy achieved for light-skinned patients. This disparity translates directly into delayed diagnoses, inappropriate treatment recommendations, and worse health outcomes for underrepresented populations. When training data comes predominantly from patients of one demographic group, the resulting models learn patterns specific to that group and fail to generalize to others.

In financial services, source selection bias in credit scoring and lending algorithms has perpetuated historical discrimination. Models trained on historical loan approval data that reflects past discriminatory lending practices will reproduce those same biases when making new lending decisions. If certain groups were historically denied credit due to systemic discrimination, and this historical data is used to train AI models, the models will learn to deny credit to similar groups in the future. This creates a vicious cycle where historical inequities become embedded in algorithmic decision-making, affecting individuals’ access to capital and economic opportunities.

Hiring and recruitment represent another critical domain where source selection bias causes significant harm. AI tools used in resume screening have been found to exhibit biases based on perceived race and gender, with studies showing that White-associated names were favored 85% of the time in some systems. When training data comes from historical hiring records that reflect past discrimination or homogeneous hiring patterns, the resulting AI models learn to replicate those patterns. This means that source selection bias in hiring data perpetuates workplace discrimination at scale, limiting opportunities for underrepresented groups and reducing workforce diversity.

In criminal justice, source selection bias in predictive policing systems has led to disproportionate targeting of certain communities. When training data comes from historical arrest records that are themselves biased against marginalized groups, the resulting models amplify these biases by predicting higher crime rates in those communities. This creates a feedback loop where biased predictions lead to increased policing in certain areas, which generates more arrest data from those areas, further reinforcing the bias in the model.

Detecting source selection bias requires a systematic approach that combines quantitative analysis, qualitative evaluation, and continuous monitoring throughout the model lifecycle. The first step is to conduct a comprehensive data audit that examines the sources, collection methods, and representativeness of your training data. This involves documenting where data came from, how it was collected, and whether the collection process might have systematically excluded certain populations or scenarios. Ask critical questions: Were all relevant demographic groups represented in the data collection process? Were there participation barriers that might have discouraged certain groups from participating? Did the data collection period or geographic scope limit representation?

Demographic parity analysis, in the sense used here, is a quantitative check on how representative the training data is. It compares the distribution of key characteristics in your training data against the distribution in the real-world population your model will serve. (This is different from demographic parity as a model fairness metric, which compares positive prediction rates across groups.) If your training data significantly underrepresents certain demographic groups, age ranges, geographic regions, or other relevant characteristics, you have evidence of source selection bias. For example, if your training data is only 5% female but the target population is 50% female, this indicates a serious coverage bias that will likely result in poor model performance for women.
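The comparison described above can be sketched in a few lines. This is a minimal illustration, not a standard API: the `representation_gaps` helper, the group labels, and the 10-percentage-point flagging threshold are all assumptions chosen for the example.

```python
from collections import Counter

def representation_gaps(training_groups, population_shares):
    """Compare group shares in training data with reference population shares.

    Returns {group: training_share - population_share}. Negative values mean
    the group is underrepresented in the training data.
    """
    counts = Counter(training_groups)
    total = len(training_groups)
    return {
        group: counts.get(group, 0) / total - expected
        for group, expected in population_shares.items()
    }

# Hypothetical data mirroring the example above: 5% female in the training
# data versus 50% female in the target population.
training_groups = ["female"] * 5 + ["male"] * 95
population_shares = {"female": 0.50, "male": 0.50}

gaps = representation_gaps(training_groups, population_shares)

# Flag groups that fall more than 10 percentage points short (assumed threshold).
underrepresented = [group for group, gap in gaps.items() if gap < -0.10]
```

In practice the reference shares would come from a trusted source such as census data or customer records, and the threshold would depend on how much underrepresentation the application can tolerate.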

Performance slice analysis is another critical detection technique where you evaluate your model’s performance separately for different demographic groups and subpopulations. Even if overall model accuracy appears acceptable, performance may vary dramatically across groups. If your model achieves 95% accuracy overall but only 70% accuracy for a particular demographic group, this suggests that source selection bias in the training data has resulted in the model learning patterns specific to the majority group while failing to learn patterns relevant to the minority group. This analysis should be conducted not just on overall accuracy but on fairness metrics like equalized odds and disparate impact.
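A minimal sketch of slice analysis follows, assuming you already have true labels, predictions, and a group label for each evaluation row. The `accuracy_by_group` helper and the data are hypothetical, constructed to reproduce the 95% overall versus 70% per-group situation described above.

```python
def accuracy_by_group(y_true, y_pred, groups):
    """Return overall accuracy and a {group: accuracy} mapping."""
    correct = {}
    total = {}
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] = total.get(group, 0) + 1
        correct[group] = correct.get(group, 0) + int(truth == pred)
    overall = sum(correct.values()) / sum(total.values())
    per_group = {group: correct[group] / total[group] for group in total}
    return overall, per_group

# Hypothetical evaluation set: 90 majority-group rows, 10 minority-group rows.
groups = ["majority"] * 90 + ["minority"] * 10
y_true = [1] * 100
# The model is right on 88 of 90 majority rows but only 7 of 10 minority rows.
y_pred = [1] * 88 + [0] * 2 + [1] * 7 + [0] * 3

overall, per_group = accuracy_by_group(y_true, y_pred, groups)

# Largest accuracy difference between any two groups.
gap = max(per_group.values()) - min(per_group.values())
```

Here the overall accuracy works out to 95% while the minority group sits at 70%, which the aggregate number hides. The same slicing pattern applies to other metrics, such as the true and false positive rates that equalized odds compares.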

Adversarial testing involves deliberately creating test cases designed to expose potential biases. This might include testing your model on data from underrepresented populations, edge cases, or scenarios not well-represented in the training data. By stress-testing your model against diverse inputs, you can identify where source selection bias has created blind spots. For instance, if your model was trained primarily on data from urban areas, test it extensively on rural data to see if performance degrades. If your training data came from a specific time period, test the model on data from different time periods to detect temporal bias.

Mitigating source selection bias requires intervention at multiple stages of the AI development lifecycle, starting with data collection and continuing through model evaluation and deployment. The most effective approach is data-centric mitigation, which addresses bias at its source by improving the quality and representativeness of training data. This begins with diverse data collection where you actively work to include underrepresented populations and scenarios in your training dataset. Rather than relying on convenience sampling or existing datasets, organizations should conduct targeted data collection efforts to ensure adequate representation of all relevant demographic groups and use cases.

Resampling and reweighting techniques provide practical methods for addressing imbalances in existing datasets. Random oversampling duplicates examples from underrepresented groups, while random undersampling reduces examples from overrepresented groups. More sophisticated approaches like stratified sampling ensure proportional representation across multiple dimensions simultaneously. Reweighting assigns higher importance to underrepresented samples during model training, effectively telling the algorithm to pay more attention to patterns in minority groups. These techniques work best when combined with data collection efforts to gather more diverse data, rather than relying solely on resampling of limited data.
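Reweighting and random oversampling can be sketched as follows. The two helpers are illustrative assumptions rather than a library API; the weighting scheme shown (inverse group frequency, scaled so that each group carries equal total weight) is one common choice among several.

```python
import random
from collections import Counter

def inverse_frequency_weights(groups):
    """Give each row a weight inversely proportional to its group's size.

    Weights are scaled so that every group contributes the same total weight
    and all weights sum to the number of rows.
    """
    counts = Counter(groups)
    n_rows, n_groups = len(groups), len(counts)
    return [n_rows / (n_groups * counts[group]) for group in groups]

def random_oversample(rows, groups, seed=0):
    """Duplicate randomly chosen rows until every group matches the largest."""
    rng = random.Random(seed)
    by_group = {}
    for row, group in zip(rows, groups):
        by_group.setdefault(group, []).append(row)
    target = max(len(members) for members in by_group.values())
    balanced = []
    for group, members in by_group.items():
        extra = [rng.choice(members) for _ in range(target - len(members))]
        balanced.extend((row, group) for row in members + extra)
    return balanced

# Hypothetical dataset: 8 rows from group "a", 2 rows from group "b".
groups = ["a"] * 8 + ["b"] * 2
rows = list(range(10))

weights = inverse_frequency_weights(groups)
balanced = random_oversample(rows, groups)
```

Weights like these are typically passed to the training routine, for example through the `sample_weight` argument that many scikit-learn estimators accept in `fit`. Oversampling only repeats existing rows, so it cannot add information that the minority group's data never contained.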

Synthetic data generation offers another avenue for addressing source selection bias, particularly when collecting real data from underrepresented populations is difficult or expensive. Techniques like Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs) can create realistic synthetic examples for underrepresented groups. More targeted approaches like SMOTE (Synthetic Minority Over-sampling Technique) create synthetic examples by interpolating between existing minority instances. However, synthetic data should be validated thoroughly before use, because a generator built from a small or skewed sample can reproduce or even amplify the biases it was meant to correct.
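The interpolation idea behind SMOTE can be sketched in plain Python. This is a simplified illustration under stated assumptions, not the reference implementation: the `smote_like` function, its parameters, and the feature vectors are hypothetical, and production work would normally use a maintained library instead.

```python
import math
import random

def smote_like(minority, n_new, k=2, seed=0):
    """Create n_new synthetic points, each interpolated between a minority
    point and one of its k nearest minority neighbours."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # Nearest neighbours of `base` among the other minority points.
        others = [p for p in minority if p is not base]
        neighbours = sorted(others, key=lambda p: math.dist(base, p))[:k]
        neighbour = rng.choice(neighbours)
        t = rng.random()  # position along the segment from base to neighbour
        synthetic.append(
            tuple(b + t * (n - b) for b, n in zip(base, neighbour))
        )
    return synthetic

# Hypothetical minority-group feature vectors (two numeric features each).
minority = [(1.0, 1.0), (1.2, 0.9), (0.8, 1.1), (1.1, 1.3)]
synthetic = smote_like(minority, n_new=6)
```

Every synthetic point lies on a line segment between two real minority points, so the method fills in the region the minority data already occupies and cannot represent parts of the population that were never sampled.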

Fairness-aware algorithms represent another mitigation strategy that operates at the model training stage. These algorithms explicitly incorporate fairness constraints into the learning process, ensuring that the model achieves acceptable performance across all demographic groups, not just the majority group. Adversarial debiasing, for example, uses an adversarial network to ensure that the model’s predictions cannot be used to infer protected characteristics like race or gender. Fairness-aware regularization adds penalty terms to the loss function that discourage discriminatory behavior. These approaches allow you to explicitly trade off between overall accuracy and fairness, choosing the balance that aligns with your ethical priorities.
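One way to picture fairness-aware regularization is a loss function with an added penalty term. The sketch below assumes binary labels, predicted probabilities, and a single group attribute; the `penalized_loss` function, the squared-gap penalty, and the `lam` weight are illustrative choices, not a specific published method.

```python
import math

def penalized_loss(y_true, y_prob, groups, lam=1.0):
    """Binary cross-entropy plus a penalty on the gap in mean predicted
    probability between groups.

    Returns (total_loss, cross_entropy, parity_gap).
    """
    eps = 1e-12  # avoids log(0)
    bce = -sum(
        y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
        for y, p in zip(y_true, y_prob)
    ) / len(y_true)

    # Mean predicted probability per group.
    by_group = {}
    for p, g in zip(y_prob, groups):
        by_group.setdefault(g, []).append(p)
    means = [sum(ps) / len(ps) for ps in by_group.values()]
    parity_gap = max(means) - min(means)

    return bce + lam * parity_gap ** 2, bce, parity_gap

# Hypothetical predictions for two groups of two rows each.
y_true = [1, 0, 1, 0]
y_prob = [0.9, 0.2, 0.6, 0.1]
groups = ["a", "a", "b", "b"]

total, bce, parity_gap = penalized_loss(y_true, y_prob, groups, lam=2.0)
```

The `lam` parameter is the trade-off knob: at zero the loss reduces to ordinary cross-entropy, and larger values push the optimizer toward similar average predictions across groups at some cost in raw accuracy.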

Continuous monitoring and retraining ensure that source selection bias does not emerge or worsen over time. Even if your initial training data is representative, the real-world distribution your model serves may change due to demographic shifts, economic changes, or other factors. Implementing performance monitoring systems that track model accuracy separately for different demographic groups allows you to detect when bias emerges. When performance degradation is detected, retraining with updated data that reflects current population distributions can restore fairness. This ongoing process recognizes that bias mitigation is not a one-time effort but a continuous responsibility.
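A per-group monitoring check can be as simple as comparing current accuracy against a stored baseline. The sketch below is hypothetical throughout: the `flag_degraded_groups` helper, the group names, the accuracy figures, and the 5-point tolerance are assumptions for illustration.

```python
def flag_degraded_groups(baseline, current, max_drop=0.05):
    """Return groups whose accuracy fell more than max_drop below baseline.

    A group missing from `current` is treated as having accuracy 0.0, so it
    is flagged rather than silently ignored.
    """
    return sorted(
        group
        for group, base_acc in baseline.items()
        if base_acc - current.get(group, 0.0) > max_drop
    )

# Hypothetical per-group accuracy at deployment time and in the latest window.
baseline = {"urban": 0.94, "rural": 0.91}
current = {"urban": 0.93, "rural": 0.82}

needs_retraining = flag_degraded_groups(baseline, current)
```

In this example only the rural group crosses the tolerance, which would be the signal to investigate and, if the shift is real, retrain on data that reflects the current population.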

Understanding source selection bias becomes increasingly important in the context of AI answer monitoring and brand presence tracking. As AI systems like ChatGPT, Perplexity, and other AI answer generators become primary sources of information for users, the sources these systems cite and the information they present are shaped by their training data. If the training data used to build these AI systems exhibits source selection bias, the answers they generate will reflect that bias. For example, if an AI system’s training data overrepresents certain websites, publications, or perspectives while underrepresenting others, the AI system will be more likely to cite and amplify information from the overrepresented sources.

This has direct implications for brand monitoring and content visibility. If your brand, domain, or URLs are underrepresented in the training data of major AI systems, your content may be systematically excluded from or underrepresented in AI-generated answers. Conversely, if competing brands or misinformation sources are overrepresented in the training data, they will receive disproportionate visibility in AI answers. Monitoring how your brand appears in AI-generated answers across different platforms helps you understand whether source selection bias in these systems is affecting your visibility and reputation. By tracking which sources are cited, how frequently your content appears, and whether the information presented is accurate, you can identify potential biases in how AI systems represent your brand and industry.
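Tracking how often each source is cited can start with a simple tally. The sketch below assumes you have already collected the URLs cited in a sample of AI-generated answers; the `citation_share` helper and the example URLs are hypothetical placeholders.

```python
from collections import Counter
from urllib.parse import urlparse

def citation_share(cited_urls):
    """Return each domain's share of all citations in the sample."""
    domains = [urlparse(url).netloc.lower() for url in cited_urls]
    counts = Counter(domains)
    total = len(domains)
    return {domain: count / total for domain, count in counts.items()}

# Hypothetical citations gathered from a set of AI-generated answers.
cited_urls = [
    "https://example.com/guide",
    "https://example.org/report",
    "https://example.com/faq",
    "https://example.net/post",
]

shares = citation_share(cited_urls)
```

Comparing these shares across platforms and over time gives a rough view of whether a domain is cited more or less often than its competitors.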

Source selection bias in AI is a critical fairness issue that originates at the data collection stage and propagates through all downstream applications of machine learning models. It occurs when training data systematically excludes or underrepresents certain populations, scenarios, or characteristics, resulting in models that make inaccurate or unfair predictions for underrepresented groups. The consequences are severe and far-reaching, affecting healthcare outcomes, financial access, employment opportunities, and criminal justice decisions. Detecting source selection bias requires comprehensive data audits, demographic parity analysis, performance slice analysis, and adversarial testing. Mitigating this bias demands a multi-faceted approach including diverse data collection, resampling and reweighting, synthetic data generation, fairness-aware algorithms, and continuous monitoring. Organizations must recognize that addressing source selection bias is not optional but essential for building AI systems that are fair, accurate, and trustworthy across all populations and use cases.

