Vector Search

Vector search is a technique that uses machine learning to convert data into numerical representations called vectors, enabling systems to find similar items based on meaning and context rather than exact keyword matches.

Vector search is a search technique that finds similar items or data points by comparing their numerical representations, called vectors or embeddings. Unlike traditional keyword-based search engines that look for exact word matches, vector search understands the meaning and context behind queries, enabling more intelligent and relevant results. This technology has become fundamental to modern artificial intelligence systems, from semantic search engines to the retrieval components behind AI answer engines such as ChatGPT and Perplexity.

The core principle of vector search is that similar items have similar vector representations. When you search for information, the system converts both your query and the data into vectors in a high-dimensional space, then calculates the distance between them to determine relevance. This approach captures semantic relationships and hidden patterns in data that traditional keyword matching cannot detect, making it essential for applications ranging from recommendation systems to retrieval-augmented generation (RAG) frameworks used in modern AI.

Traditional keyword search operates by matching exact terms or phrases in documents. If you search for “best pizza restaurant,” the system returns pages containing those exact words. However, this approach has significant limitations when dealing with variations in language, synonyms, or when users don’t know the precise terminology. Vector search overcomes these limitations by understanding intent and meaning rather than relying on exact word matches.

In vector search, the system understands that “top-rated pizza places” and “best pizza restaurant” convey similar meaning, even though they use different words. This semantic understanding allows vector search to return contextually relevant results that traditional systems would miss. For example, a vector search might return articles about highly recommended pizza establishments in various locations, even if those articles never use the exact phrase “best pizza restaurant.” The difference is profound: traditional search focuses on matching keywords, while vector search focuses on matching meaning.
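To make the keyword limitation concrete, here is a minimal inverted index with AND semantics, a simplified model of keyword retrieval (real engines add stemming, synonyms, and ranking functions such as BM25). The helper names and documents are illustrative only:

```python
import re
from collections import defaultdict

def tokenize(text: str) -> list[str]:
    # Lowercase and split on non-alphanumeric characters ("top-rated" -> "top", "rated").
    return re.findall(r"[a-z0-9]+", text.lower())

def build_inverted_index(docs: dict[str, str]) -> dict[str, set[str]]:
    # Map each token to the set of document IDs that contain it.
    index: dict[str, set[str]] = defaultdict(set)
    for doc_id, text in docs.items():
        for token in tokenize(text):
            index[token].add(doc_id)
    return index

def keyword_search(index: dict[str, set[str]], query: str) -> set[str]:
    # AND semantics: a document must contain every query term.
    postings = [index.get(token, set()) for token in tokenize(query)]
    if not postings:
        return set()
    return set.intersection(*postings)

docs = {
    "d1": "The best pizza restaurant in town",
    "d2": "Top-rated pizza places downtown",
    "d3": "Best burger restaurant near the station",
}
index = build_inverted_index(docs)
print(keyword_search(index, "best pizza restaurant"))  # {'d1'}
```

The query matches `d1` but not `d2`, even though "top-rated pizza places" means nearly the same thing. An embedding-based search would be expected to place `d2` close to `d1` because their vectors, not their words, are compared.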

| Aspect | Traditional Keyword Search | Vector Search |
|---|---|---|
| Matching Method | Exact word or phrase matches | Semantic similarity based on meaning |
| Data Representation | Discrete tokens, keywords, tags | Dense numerical vectors in high-dimensional space |
| Scalability | Inverted indexes scale to very large text collections | ANN indexes scale to millions or billions of vectors, trading some accuracy for speed |
| Unstructured Data | Limited capability (mostly text and metadata) | Handles text, images, audio, and video through embeddings |
| Context Understanding | Minimal | Captures semantic relationships and context |
| Search Speed | Fast term lookups via inverted indexes | Typically milliseconds with ANN indexes; exact brute-force search slows linearly with dataset size |

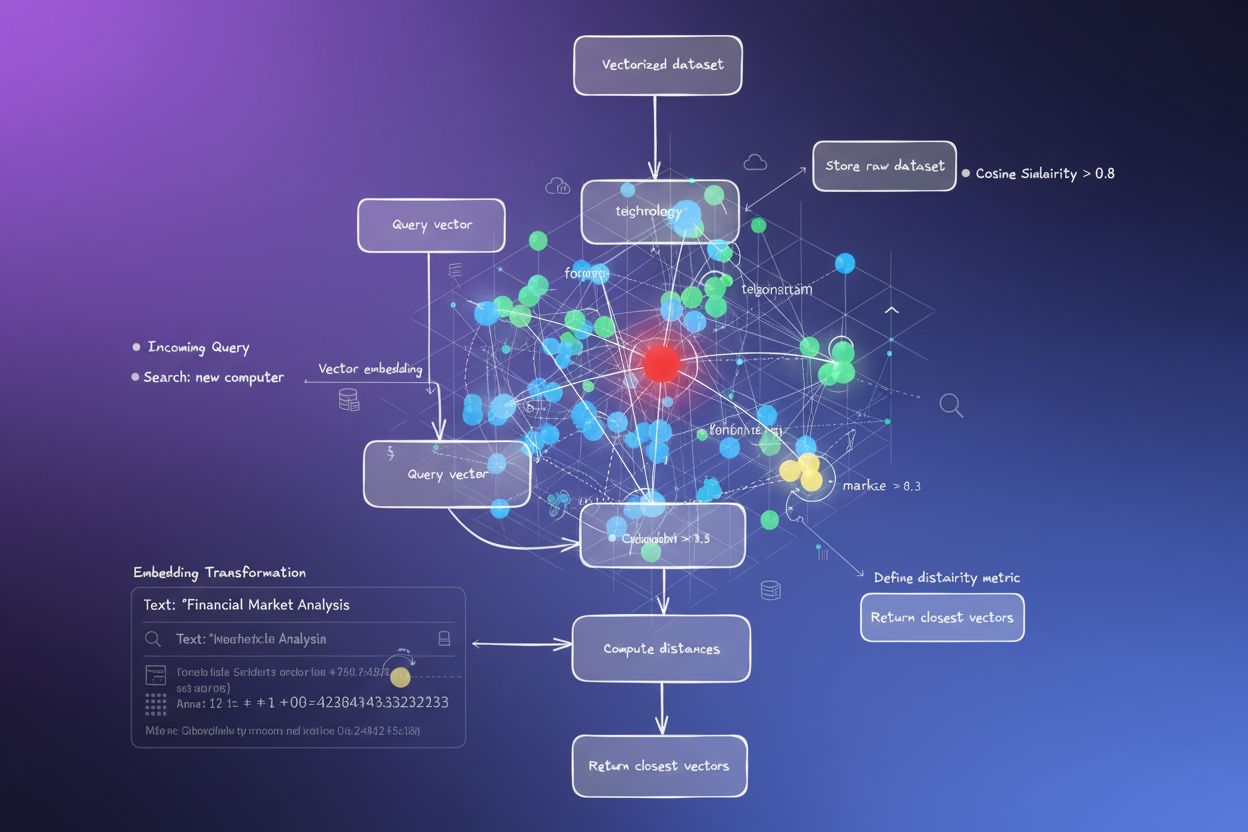

The foundation of vector search is the vectorization process, which converts raw data into numerical representations. This process begins with data preparation, where raw text or other data types are cleaned and standardized. Next, an embedding model is chosen (usually a pretrained model, optionally fine-tuned on domain data) and run over the dataset to generate an embedding for each data point. Popular embedding models include word-level models such as Word2Vec, GloVe, and FastText, and transformer-based models such as BERT or RoBERTa.
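The two steps, preparation and embedding, can be sketched in plain Python. `preprocess` and `toy_embed` are hypothetical names, and `toy_embed` is a signed hashing-trick stand-in rather than a trained model: unlike a real embedding, its vector is mostly zeros and reflects only which words occur, not their meaning. In practice you would replace it with a call to an embedding model such as a BERT-based encoder.

```python
import hashlib
import math
import re
import unicodedata

def preprocess(text: str) -> str:
    # Data preparation: Unicode-normalize, lowercase, replace punctuation with spaces,
    # and collapse runs of whitespace.
    text = unicodedata.normalize("NFKC", text).lower()
    text = re.sub(r"[^\w\s]", " ", text)
    return " ".join(text.split())

def toy_embed(text: str, dim: int = 64) -> list[float]:
    # HYPOTHETICAL stand-in for a trained embedding model: a signed "hashing trick"
    # vector. It only records which words occur, NOT what they mean.
    vec = [0.0] * dim
    for token in text.split():
        digest = hashlib.blake2b(token.encode("utf-8"), digest_size=8).digest()
        bucket = int.from_bytes(digest[:4], "little") % dim
        sign = 1.0 if digest[4] % 2 == 0 else -1.0
        vec[bucket] += sign
    # L2-normalize so vectors can be compared with cosine similarity or dot product.
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec

corpus = ["  Vector SEARCH finds similar items!", "Keyword search matches exact terms."]
# Prepare, embed, and keep (original text, vector) pairs ready for indexing.
store = [(doc, toy_embed(preprocess(doc))) for doc in corpus]
```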

Vector embeddings are dense numerical arrays in which most or all elements are non-zero, so they pack more information into fewer dimensions than sparse representations such as one-hot or bag-of-words vectors. The dimensions correspond to latent features: underlying characteristics of the data that aren't directly observed but are inferred by the model. In text embeddings, concepts such as sentiment, topic, or entity type are typically spread across many dimensions rather than stored in a single, human-readable dimension. The embeddings are then stored in a vector database or a vector search plugin, which builds an index, for example a Hierarchical Navigable Small World (HNSW) graph, to support fast similarity queries.

Vector search determines relevance by measuring how similar the query vector is to each document vector. The most common measures are Euclidean distance and cosine similarity, along with the closely related dot product. Euclidean distance is the straight-line distance between two points, computed as the square root of the sum of squared differences between corresponding coordinates. It works well in lower-dimensional spaces but can become less discriminative in high-dimensional spaces, where the distances between points tend to concentrate around similar values.
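Written out for $n$-dimensional vectors $\mathbf{a}$ and $\mathbf{b}$, with a small worked example on two 2-dimensional vectors chosen purely for illustration:

$$
d(\mathbf{a}, \mathbf{b}) = \sqrt{\sum_{i=1}^{n} (a_i - b_i)^2}
$$

$$
\mathbf{a} = (1, 2),\ \mathbf{b} = (4, 6): \quad d(\mathbf{a}, \mathbf{b}) = \sqrt{(1-4)^2 + (2-6)^2} = \sqrt{9 + 16} = 5
$$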

Cosine similarity measures the angle between two vectors, indicating how closely they point in the same direction. It is the cosine of that angle and ranges from -1 to 1: 1 means the vectors point the same way, 0 means they are orthogonal, and -1 means they point in opposite directions. Because it compares direction and ignores magnitude, cosine similarity is well suited to comparing high-dimensional text embeddings, and it (or the dot product on length-normalized vectors) is a common default in vector search systems. For vectors normalized to unit length, cosine similarity and Euclidean distance produce the same ranking, so the choice often depends on how the embedding model was trained.
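The contrast between the two metrics can be checked numerically. The helper functions below are written for illustration in plain Python; `b` points in the same direction as `a` with twice the length, and `c` points the opposite way:

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def euclidean_distance(a, b):
    # Square root of the sum of squared coordinate differences.
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def cosine_similarity(a, b):
    # Cosine of the angle: dot product divided by the product of the vector lengths.
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))

def normalize(v):
    # Scale a vector to unit length.
    n = math.sqrt(dot(v, v))
    return [x / n for x in v]

a = [1.0, 2.0, 3.0]
b = [2.0, 4.0, 6.0]     # same direction as a, twice the magnitude
c = [-1.0, -2.0, -3.0]  # opposite direction

print(cosine_similarity(a, b))   # ≈ 1.0: identical direction
print(euclidean_distance(a, b))  # ≈ 3.742: the magnitudes differ
print(cosine_similarity(a, c))   # ≈ -1.0: opposite direction

# For unit-length vectors, squared Euclidean distance equals 2 - 2 * cosine similarity.
u, v = normalize([3.0, 4.0]), normalize([1.0, 0.0])
print(euclidean_distance(u, v) ** 2, 2 - 2 * cosine_similarity(u, v))  # both ≈ 0.8
```

The last identity is why ranking normalized embeddings by Euclidean distance or by cosine similarity yields the same order: one is a decreasing function of the other.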

Comparing every vector in a database against a query vector would be computationally expensive and impractical for large datasets. To solve this problem, vector search systems use Approximate Nearest Neighbor (ANN) algorithms, which efficiently find vectors that are approximately closest to a query without computing exact distances to every vector. ANN algorithms trade a small amount of accuracy for massive gains in speed and computational efficiency, making vector search practical at scale.
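For contrast, exact (brute-force) nearest-neighbor search is easy to write but scores every stored vector on every query, which is the O(N·d) per-query cost that ANN indexes avoid. A sketch using cosine similarity, with hypothetical item IDs and 3-dimensional vectors:

```python
import heapq
import math

def normalize(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]

def exact_top_k(query, vectors, k=2):
    # Brute-force ("flat") search: score EVERY stored vector, O(N * d) per query.
    q = normalize(query)
    scored = (
        (sum(x * y for x, y in zip(q, normalize(v))), item_id)
        for item_id, v in vectors.items()
    )
    # Keep the k highest cosine similarities, best first.
    return [item_id for _, item_id in heapq.nlargest(k, scored)]

vectors = {
    "cat": [0.9, 0.1, 0.0],
    "kitten": [0.85, 0.15, 0.05],
    "car": [0.1, 0.9, 0.2],
    "truck": [0.05, 0.95, 0.3],
}
print(exact_top_k([1.0, 0.1, 0.0], vectors, k=2))  # ['cat', 'kitten']
```

This is the baseline that ANN results are measured against: recall is the fraction of these exact top-k results that an approximate index also returns.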

One of the most popular ANN algorithms is HNSW (Hierarchical Navigable Small World), which organizes vectors into a hierarchical, multi-layered proximity graph in which each vector is linked to nearby vectors. The upper layers are sparse and contain long-range links that let a search cross the dataset in a few hops, while the lower layers are denser and contain short-range links for precise, local search. A query starts at the top layer, greedily moves toward closer neighbors, and descends layer by layer. With appropriate parameters, HNSW can reach high recall (often above 95%) at millisecond-level query latency on large datasets, although its memory use grows with the number of vectors and links. Other ANN methods include tree-based approaches like ANNOY, clustering-based inverted-file (IVF) indexes such as those provided by the FAISS library, and hashing techniques like LSH, each with different tradeoffs between latency, throughput, accuracy, and build time.
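The greedy routing at the heart of HNSW can be sketched on a single layer. This is not full HNSW: there is one layer instead of several, no candidate list, and the graph is built by brute force. `build_neighbor_graph` and `greedy_search` are hypothetical helpers, and the points are toy data:

```python
import math

def build_neighbor_graph(points, m=2):
    # Simplified, single-layer graph: connect each point to its m nearest
    # neighbours (found by brute force) and keep links in both directions.
    graph = {i: set() for i in range(len(points))}
    for i, p in enumerate(points):
        others = sorted((j for j in range(len(points)) if j != i),
                        key=lambda j: math.dist(p, points[j]))
        for j in others[:m]:
            graph[i].add(j)
            graph[j].add(i)
    return graph

def greedy_search(graph, points, query, entry=0):
    # Greedy routing: hop to the neighbour closest to the query until no
    # neighbour is closer than the current node (a local minimum).
    current = entry
    current_d = math.dist(points[current], query)
    while True:
        best = min(graph[current], key=lambda j: math.dist(points[j], query), default=current)
        best_d = math.dist(points[best], query)
        if best_d >= current_d:
            return current
        current, current_d = best, best_d

points = [(0, 0), (1, 0), (2, 0), (3, 0), (4, 0), (5, 0)]
graph = build_neighbor_graph(points, m=2)
print(greedy_search(graph, points, query=(4.2, 0.1)))  # 4
```

Because greedy routing stops at the first local minimum, it can miss the true nearest neighbor on less regular data. HNSW's upper layers and its wider candidate search (controlled by a parameter commonly called `ef`) are what raise recall, at some cost in latency.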

Vector search powers numerous applications across different domains and industries. Retrieval-Augmented Generation (RAG) is one of the most important, combining vector search with large language models (LLMs) to generate accurate, contextually relevant responses. In RAG systems, vector search retrieves relevant documents or passages from a knowledge base, and these are provided to an LLM so that it generates responses based on actual data rather than relying solely on its training data. This grounding can substantially reduce hallucinations and improve factual accuracy in AI-generated answers, although it does not eliminate them.
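The retrieval half of a RAG pipeline can be sketched as follows. `embed`, `retrieve`, and `build_prompt` are hypothetical, `embed` is a word-count stand-in for a real embedding model, and the call to an actual LLM is deliberately left out:

```python
import math
import re
from collections import Counter

def embed(text):
    # HYPOTHETICAL stand-in for a real embedding model: a term-count vector.
    # A production RAG system would call a trained encoder here instead.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    num = sum(a[t] * b[t] for t in a.keys() & b.keys())
    den = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return num / den if den else 0.0

def retrieve(query, passages, k=2):
    # Rank passages by similarity to the query and keep the top k.
    q = embed(query)
    return sorted(passages, key=lambda p: cosine(q, embed(p)), reverse=True)[:k]

def build_prompt(query, passages, k=2):
    # Ground the model: place retrieved passages in the prompt and ask it to use only them.
    context = "\n".join(f"- {p}" for p in retrieve(query, passages, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )

knowledge_base = [
    "HNSW builds a multi-layer proximity graph for approximate search.",
    "Cosine similarity compares the angle between two vectors.",
    "Pizza dough needs flour, water, yeast, and salt.",
]
prompt = build_prompt("How does HNSW search work?", knowledge_base, k=1)
print(prompt)  # this string would then be sent to an LLM
```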

Recommendation systems leverage vector search to suggest products, movies, music, or content based on user preferences and behavior. By finding items with similar vector representations, recommendation engines can suggest products users haven’t interacted with but would likely enjoy. Semantic search applications use vector search to power search engines that understand user intent, enabling users to find relevant information even without exact keyword matches. Image and video search systems use vector embeddings to index visual content, allowing users to search for visually similar images or videos across large datasets. Additionally, vector search enables multimodal search capabilities, where users can search across different data types simultaneously, such as finding images based on text descriptions or vice versa.
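One simple way to turn "similar vectors" into recommendations is to average the vectors of the items a user liked and rank the remaining items by cosine similarity to that profile. This is one common baseline among many, and the item IDs and 3-dimensional vectors below are invented for illustration:

```python
import math

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))

def recommend(item_vectors, liked, k=2):
    # Hypothetical user profile: the mean of the vectors of the items the user liked.
    dims = len(next(iter(item_vectors.values())))
    profile = [sum(item_vectors[i][d] for i in liked) / len(liked) for d in range(dims)]
    # Score only items the user has not interacted with yet.
    candidates = [i for i in item_vectors if i not in liked]
    candidates.sort(key=lambda i: cosine(profile, item_vectors[i]), reverse=True)
    return candidates[:k]

# Hypothetical item embeddings; dimensions loosely read as [sci-fi, romance, documentary].
items = {
    "sci_fi_1": [0.9, 0.1, 0.0],
    "sci_fi_2": [0.8, 0.2, 0.1],
    "sci_fi_3": [0.95, 0.05, 0.0],
    "romance_1": [0.05, 0.95, 0.0],
    "nature_doc_1": [0.1, 0.0, 0.9],
}
print(recommend(items, liked={"sci_fi_1", "sci_fi_2"}, k=1))  # ['sci_fi_3']
```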

Vector search has become important infrastructure for AI answer engines such as Perplexity, and for ChatGPT when it searches the web or uploaded files. These systems typically use a retrieval step, which often includes vector search over indexed documents, to find passages relevant to a query before generating an answer; an answer produced without retrieval relies only on what the model learned during training. In such a retrieval step, the system converts your query into a vector, searches indexed content for the most similar passages, and passes those passages to the language model to produce a contextually appropriate response.

For businesses and content creators, understanding vector search is essential for ensuring brand visibility in AI-generated answers. As AI systems increasingly become a primary way people search for information, having your content indexed and retrievable through vector search becomes crucial. Monitoring platforms like AmICited track how your brand, domain, and URLs appear in AI-generated answers across multiple AI systems, helping you understand your visibility in this new search paradigm. By monitoring where and how AI systems cite your content, you can identify opportunities to improve its relevance and help your brand appear when AI systems generate answers related to your industry or expertise.

Vector search offers significant advantages over traditional search methods, particularly for handling unstructured data like documents, images, audio, and video. It enables faster search across massive datasets, more relevant results based on semantic understanding, and the ability to search across multiple data types simultaneously. The technology is continuously evolving, with improvements in embedding models, ANN algorithms, and vector database capabilities making vector search faster, more accurate, and more accessible to developers and organizations of all sizes.

As artificial intelligence becomes increasingly integrated into search and information retrieval, vector search will continue to play a central role in how people discover information. Organizations that understand and leverage vector search technology will be better positioned to ensure their content is discoverable in AI-generated answers and to build intelligent applications that provide superior user experiences. The shift from keyword-based to semantic search represents a fundamental change in how information is organized and retrieved, making vector search literacy essential for anyone involved in content creation, SEO, or AI application development.
