AI Visibility Reporting


Learn what metrics and data should be included in an AI visibility report to track brand presence across ChatGPT, Perplexity, Google AI Overviews, and Claude. Complete guide to GEO monitoring.

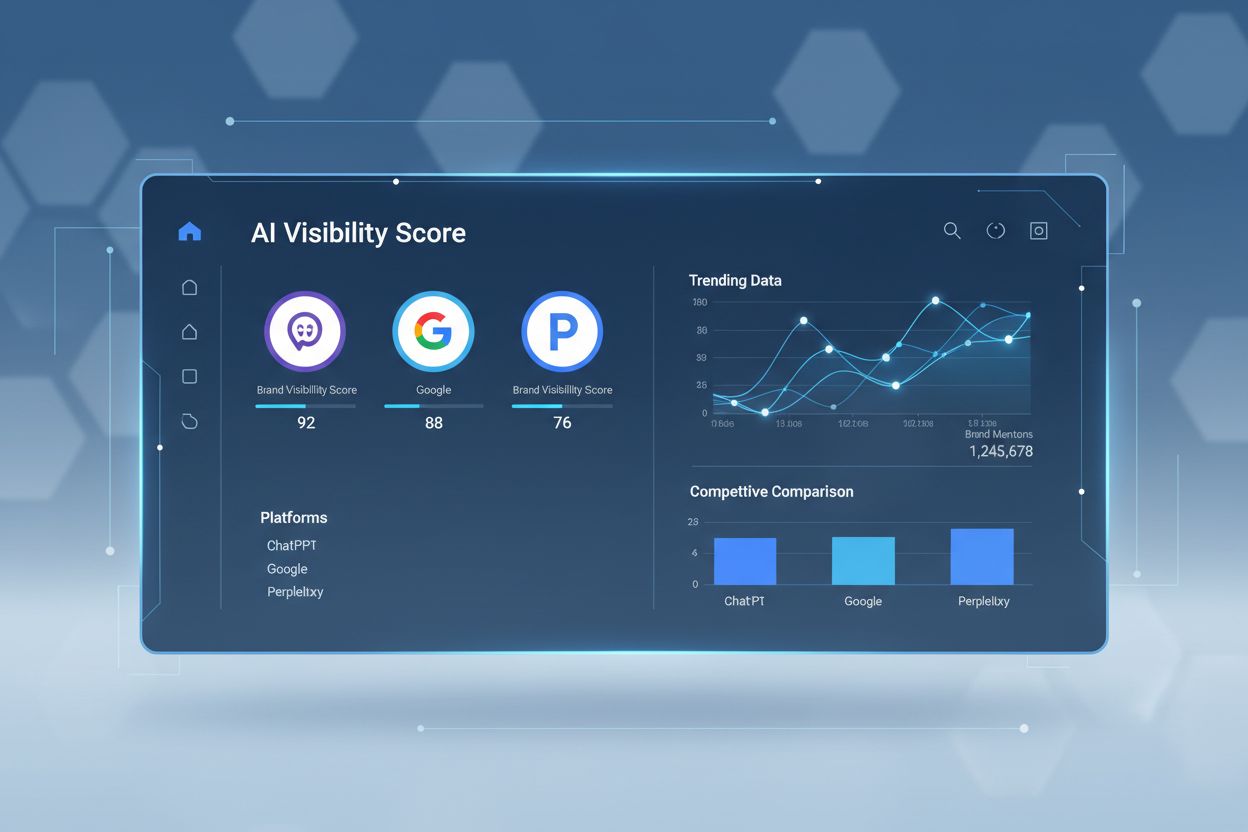

An AI visibility report should track brand mentions, citation frequency, share of voice across AI platforms (ChatGPT, Perplexity, Google AI Overviews, Claude), sentiment analysis, and competitive benchmarking. It measures how often your brand appears in AI-generated answers and recommendations, providing metrics like visibility percentage, citation rates, and platform-specific performance data that traditional analytics cannot capture.

An AI visibility report is a comprehensive analysis document that measures how often your brand appears in AI-generated answers across platforms like ChatGPT, Perplexity, Google AI Overviews, and Claude. Unlike traditional SEO reports that track keyword rankings and organic traffic, AI visibility reports focus on whether and how AI systems mention, cite, and recommend your brand when users ask relevant questions. This represents a fundamental shift in how brands achieve discovery, as 58% of consumers have replaced traditional search engines with generative AI tools for product recommendations according to Capgemini research. An effective AI visibility report transforms opaque AI responses into measurable, actionable data that reveals your competitive position in the emerging AI-powered search landscape. Without this visibility, brands remain blind to a critical discovery channel that increasingly influences purchasing decisions.

Brand Visibility Score represents the percentage of relevant AI responses that mention your brand when users ask questions in your category. This foundational metric reveals whether AI platforms consider your brand relevant enough to recommend. A visibility score above 70% represents exceptional performance, while anything below 30% indicates significant missed opportunities. This metric directly correlates with how often your target audience encounters your brand in AI-generated recommendations, making it essential for understanding your competitive position in the AI search landscape.
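The visibility score is a simple ratio, so it can be sketched in a few lines. The sketch below assumes you already store each sampled AI answer as text. The `Response` record, the brand aliases, and the example answers are hypothetical. Matching is a case-insensitive whole-word search, which is only an approximation of real entity detection.

```python
import re
from dataclasses import dataclass

@dataclass
class Response:
    """One sampled AI answer for one tracked prompt (hypothetical record shape)."""
    platform: str
    prompt: str
    text: str

def mentions_brand(text: str, aliases: list[str]) -> bool:
    # Case-insensitive whole-word match on any alias; brands whose names are
    # common words would need stricter disambiguation than this.
    return any(
        re.search(rf"\b{re.escape(alias)}\b", text, flags=re.IGNORECASE)
        for alias in aliases
    )

def visibility_score(responses: list[Response], aliases: list[str]) -> float:
    """Percentage (0-100) of sampled responses that mention the brand."""
    if not responses:
        return 0.0
    hits = sum(mentions_brand(r.text, aliases) for r in responses)
    return 100.0 * hits / len(responses)

def benchmark_band(score: float) -> str:
    # Bands follow the benchmarks used in this guide.
    if score >= 70:
        return "exceptional"
    if score >= 30:
        return "competitive"
    return "opportunity"

# Hypothetical sample: four answers to the same tracked prompt.
responses = [
    Response("chatgpt", "best crm for startups", "Popular picks include Acme CRM and Globex."),
    Response("perplexity", "best crm for startups", "Globex is the most common choice."),
    Response("claude", "best crm for startups", "acme crm offers a generous free tier."),
    Response("copilot", "best crm for startups", "Many teams choose Initech."),
]
score = visibility_score(responses, ["Acme CRM", "Acme"])
print(score, benchmark_band(score))
```

Two of the four answers mention the brand, so this sample scores 50% and lands in the competitive band. In practice, the denominator should be the full query panel sampled over a reporting period, not a single prompt.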

Citation Frequency measures how often AI platforms reference your specific content as a credible source when answering user questions. Unlike brand mentions, which simply acknowledge your existence, citations can drive direct traffic from users who click links in AI responses, and they establish your content as authoritative. Research analyzing 7,000+ citations found that brand search volume had a 0.334 correlation with AI visibility, the strongest predictor identified, while traditional backlinks showed weak or neutral correlation. This counterintuitive finding suggests that brand-building activities influence AI visibility in ways that traditional SEO signals do not.
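Citation frequency can be computed from the URLs each AI answer cites. The minimal sketch below assumes you already extract cited URLs per answer. It reports two numbers: the share of answers that cite your domain at least once, and the total number of citations pointing to it. Subdomains count as your domain, and a leading `www.` is ignored. The domain and URLs are placeholders.

```python
from urllib.parse import urlparse

def normalized_host(url: str) -> str:
    # Lower-cased hostname with a leading "www." removed.
    host = (urlparse(url).hostname or "").lower()
    return host[4:] if host.startswith("www.") else host

def is_on_domain(url: str, domain: str) -> bool:
    # Matches the domain itself and any subdomain, but not look-alikes
    # such as "notexample.com".
    host = normalized_host(url)
    return host == domain or host.endswith("." + domain)

def citation_frequency(answers: list[list[str]], domain: str) -> tuple[float, int]:
    """Return (percent of answers citing the domain, total citations of it).

    `answers` holds the list of cited URLs for each sampled AI answer.
    """
    if not answers:
        return 0.0, 0
    per_answer = [sum(is_on_domain(u, domain) for u in urls) for urls in answers]
    citing = sum(1 for n in per_answer if n > 0)
    return 100.0 * citing / len(answers), sum(per_answer)

answers = [
    ["https://www.example.com/guide", "https://blog.example.com/post"],
    ["https://other.org/review"],
    [],
    ["https://example.com/pricing", "https://notexample.com/x"],
]
print(citation_frequency(answers, "example.com"))
```

Tracking both numbers month over month helps you tell whether more answers cite you, or whether the same answers simply cite more of your pages.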

Share of Voice (SOV) calculates your brand’s mention rate compared to competitors in AI-generated answers for your target topics. If competitors appear in 60% of relevant responses while you appear in only 15%, that gap represents lost opportunity. Top brands typically capture approximately 15% share of voice, while enterprise leaders reach 25-30%. This competitive metric reveals whether you’re gaining or losing ground in AI search visibility and identifies specific topics where optimization efforts could yield the highest returns.
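The paragraph uses two related measures: each brand's mention rate (the percentage of answers it appears in) and its share of all tracked-brand mentions. Tools define share of voice differently, so the sketch below computes both. It assumes each answer has already been reduced to the set of tracked brands it names. Brand names are hypothetical, and a brand counts at most once per answer.

```python
from collections import Counter

def _brand_counts(answers):
    # Count each brand at most once per answer.
    return Counter(brand for brands in answers for brand in set(brands))

def mention_rates(answers):
    """Percent of answers in which each brand appears."""
    n = len(answers)
    return {b: 100.0 * c / n for b, c in _brand_counts(answers).items()} if n else {}

def share_of_voice(answers):
    """Each brand's percentage of all tracked-brand mentions (sums to 100)."""
    counts = _brand_counts(answers)
    total = sum(counts.values())
    return {b: 100.0 * c / total for b, c in counts.items()} if total else {}

answers = [{"Acme", "Globex"}, {"Globex"}, {"Globex", "Initech"}, {"Acme"}]
print(mention_rates(answers))   # Acme 50.0, Globex 75.0, Initech 25.0
print(share_of_voice(answers))  # Globex holds half of all mentions
```

Mention rate shows the kind of gap the paragraph describes (a competitor in 75% of answers versus your 50%). Share of voice is the number to compare against the 15% and 25-30% benchmarks, assuming those benchmarks use the share-of-mentions definition.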

Sentiment Analysis evaluates whether AI platforms characterize your brand positively, negatively, or neutrally when mentioning it. A mention offers little value if the context is negative, and negative mentions can be worse than no mentions at all. Research shows that positive sentiment from trusted sources increases how often AI platforms recommend a brand. Brands with consistently positive sentiment tend to achieve higher visibility scores than competitors with mixed or negative sentiment, even when total mention volume is similar.
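Classifying each mention is the hard part. It usually requires a human reviewer or a sentiment model, and neither is shown here. Once you have per-mention labels, the report only needs a breakdown to compare against the >70% positive benchmark. The sketch below assumes the labels are exactly `positive`, `neutral`, or `negative`.

```python
from collections import Counter

ALLOWED = ("negative", "neutral", "positive")

def sentiment_breakdown(labels):
    """Percent of brand mentions per sentiment label.

    `labels` holds one label per mention, produced upstream by a reviewer
    or classifier (assumed, not shown).
    """
    counts = Counter(labels)
    unknown = set(counts) - set(ALLOWED)
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    total = sum(counts.values())
    if total == 0:
        return {label: 0.0 for label in ALLOWED}
    return {label: 100.0 * counts[label] / total for label in ALLOWED}

def meets_positive_benchmark(breakdown, threshold=70.0):
    # The guide's benchmark is strictly greater than 70% positive.
    return breakdown["positive"] > threshold

labels = ["positive"] * 7 + ["neutral"] * 2 + ["negative"]
breakdown = sentiment_breakdown(labels)
print(breakdown, meets_positive_benchmark(breakdown))
```

This sample sits exactly at 70% positive, so it does not yet clear the ">70%" bar.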

Platform-Specific Performance Data breaks down your visibility across individual AI platforms, because each one sources its answers differently. ChatGPT relies heavily on Wikipedia and parametric knowledge, with 47.9% of its citations coming from Wikipedia. Perplexity emphasizes real-time retrieval, with Reddit leading its citations at 46.7%. Google AI Overviews favor a diversified cross-platform presence, while Claude uses a Brave Search backend with Constitutional AI preferences for trustworthy sources. Only 11% of domains are cited by both ChatGPT and Perplexity, so visibility on one platform does not carry over to another and cross-platform optimization is essential.
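To check cross-platform overlap on your own data, compare the sets of domains each platform cites for your query panel. The sketch below uses overlap relative to the union of both sets (Jaccard similarity). The 11% figure above may have been computed differently, so treat this as one reasonable definition rather than the study's method. The domain sets are hypothetical.

```python
def citation_overlap(domains_a, domains_b):
    """Percent of all distinct cited domains that both platforms cite."""
    a, b = set(domains_a), set(domains_b)
    union = a | b
    return 100.0 * len(a & b) / len(union) if union else 0.0

chatgpt = {"wikipedia.org", "example.com", "forbes.com"}
perplexity = {"reddit.com", "example.com", "g2.com"}
print(citation_overlap(chatgpt, perplexity))  # one shared domain out of five
```

A low overlap for your category indicates that each platform needs its own source strategy.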

| Metric | Definition | Benchmark | Why It Matters |
|---|---|---|---|
| Brand Visibility Score | % of AI responses mentioning your brand for target queries | 70%+ (exceptional), 30-70% (competitive), <30% (opportunity) | Shows whether AI systems recognize you as relevant |
| Citation Frequency | How often your URLs appear as sources in AI answers | Track monthly trends | Drives direct traffic and establishes authority |
| Share of Voice | Your mentions vs. competitors in AI responses | 15% (top brands), 25-30% (enterprise leaders) | Reveals competitive position and gaps |
| Brand Sentiment | Positive/negative/neutral characterization in AI answers | >70% positive | Determines recommendation likelihood |
| Average Rank Position (ARP) | Average position your brand appears in AI responses | Lower is better (1.0 = first mention) | Shows prominence within AI answers |
| Citation Drift | Monthly volatility in citation frequency | 40-60% normal variation | Indicates stability of AI visibility |
| Cross-Platform Coverage | Presence across ChatGPT, Perplexity, Google AI, Claude | 4+ platforms = 2.8x higher citation likelihood | Ensures comprehensive AI presence |
| Content Recency Impact | % of citations from recently published content | 65% from past year, 79% from past 2 years | Guides content refresh strategy |
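Two of the table's metrics are less obvious to compute: Average Rank Position and Citation Drift. The sketch below assumes ARP is the 1-based order in which a brand is first mentioned, averaged over the answers that mention it. It also assumes drift is the month-over-month percentage change in citation count. Both are plausible readings of the table, not confirmed definitions. Brand names and counts are hypothetical.

```python
from statistics import mean

def average_rank_position(ranked_mentions, brand):
    """Mean 1-based mention position over answers that name the brand.

    `ranked_mentions` lists, per answer, brands in order of first mention.
    Returns None if the brand never appears.
    """
    positions = [names.index(brand) + 1 for names in ranked_mentions if brand in names]
    return mean(positions) if positions else None

def citation_drift(prev_count, curr_count):
    """Month-over-month change in citation count, in percent of last month."""
    if prev_count == 0:
        return None  # undefined when there were no citations last month
    return 100.0 * (curr_count - prev_count) / prev_count

mentions = [["Acme", "Globex"], ["Globex", "Acme", "Initech"], ["Initech"], ["Acme"]]
print(average_rank_position(mentions, "Acme"))  # positions 1, 2, 1
print(citation_drift(50, 75), citation_drift(40, 20))
```

Swings of 40-60% in either direction fall within the normal range the table describes, which is why single-month drift should not trigger action on its own.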

Executive Summary should provide a high-level overview of your AI visibility performance, including your overall visibility score, key trends, and primary opportunities. This section answers the critical question: “How visible is my brand in AI search?” in clear, actionable terms. Include your current visibility percentage, month-over-month changes, and how you rank against top competitors. The executive summary enables leadership to quickly understand your AI search position without diving into detailed metrics.

Platform-Specific Analysis breaks down performance across each major AI platform separately, because optimization strategies differ significantly. For ChatGPT, focus on parametric knowledge signals and Wikipedia presence, since 60% of ChatGPT queries are answered purely from training data without triggering a web search. For Perplexity, emphasize content freshness and Reddit engagement, since it retrieves answers in real time from an index of 200+ billion URLs. For Google AI Overviews, track correlation with traditional rankings, since 93.67% of citations link to at least one top-10 organic result. For Claude, highlight trustworthiness signals and Brave Search optimization. This granular breakdown reveals which platforms offer the greatest opportunity for your brand.

Competitive Benchmarking compares your metrics against your top three to five competitors, revealing where you’re winning and where you’re losing ground. Identify which competitors appear most frequently in AI responses for your target topics, analyze the sources AI platforms cite when recommending them, and determine what content formats and topics drive their visibility. This intelligence directly informs your optimization strategy by showing exactly what works in your competitive landscape.

Citation Source Analysis identifies which websites, publications, and platforms AI systems reference most frequently when discussing your brand or category. Research analyzing 680 million+ citations revealed that comparative listicles account for 32.5% of all AI citations, making them the highest-performing format. Track which industry publications, review sites, Reddit communities, YouTube channels, and professional forums appear most frequently in AI responses. This reveals your target publication list for earned media and partnership efforts.

Content Performance Data shows which of your pages, articles, and resources generate the most AI citations and mentions. Identify your highest-performing content by citation frequency, visibility score, and sentiment. Analyze what makes these pieces citation-worthy: Are they comprehensive guides? Do they include statistics and expert quotes? Are they structured with clear headings and self-contained sections? This analysis reveals content patterns that AI systems prefer, informing your content creation strategy.

Sentiment and Positioning Analysis examines how AI systems describe your brand, products, and positioning compared to competitors. Look for patterns in how AI characterizes your strengths, weaknesses, pricing, and differentiators. Identify any misalignments between your intended positioning and how AI systems actually describe you. This qualitative analysis often reveals perception gaps that require content or messaging adjustments.

Trend Analysis and Forecasting tracks visibility changes over time, identifying whether your AI search presence is improving, declining, or stagnating. Monthly visibility fluctuations of 40-60% are normal due to AI system volatility, so focus on multi-month trends rather than week-to-week changes. Include forward-looking analysis that projects future visibility based on current trajectory and planned optimization efforts.

Query Panel Definition forms the foundation of accurate AI visibility measurement. Identify 25-30 tracked prompts per industry category that represent your target audience’s most likely questions. Focus on prompts combining your industry with qualifiers like “best,” “top,” “recommended,” or “how to.” These prompts should span different customer journey stages: awareness (“What is X?”), consideration (“Best X for Y”), and decision (“X vs. Y”). Document your query panel clearly so measurements remain consistent across reporting periods.
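A query panel can be generated from templates so it stays consistent between reporting periods. The sketch below covers the three journey stages described above and lands in the 25-30 prompt range. The category, audience segments, brand names, and `PANEL_VERSION` label are all hypothetical. Real panels should also include the phrasings your customers actually use.

```python
from itertools import combinations

def build_query_panel(category, audiences, brands):
    """Return (journey_stage, prompt) pairs for one industry category."""
    panel = [
        ("awareness", f"What is {category}?"),
        ("awareness", f"How to choose {category}"),
    ]
    for audience in audiences:
        for qualifier in ("Best", "Top", "Recommended"):
            panel.append(("consideration", f"{qualifier} {category} for {audience}"))
    for a, b in combinations(brands, 2):
        panel.append(("decision", f"{a} vs. {b}"))
    return panel

PANEL_VERSION = "2025-01"  # hypothetical label; change it whenever prompts change
panel = build_query_panel(
    "CRM software",
    ["startups", "small businesses", "enterprises", "nonprofits", "real estate agents"],
    ["Acme", "Globex", "Initech", "Umbrella", "Hooli"],
)
print(PANEL_VERSION, len(panel))
```

Storing the panel alongside its version label makes it obvious when a change in the numbers comes from a change in the prompts rather than in AI behavior.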

Sampling Methodology requires testing each query across all target platforms on a regular cadence, typically weekly or bi-weekly. Because AI responses vary significantly for identical queries, a single data point tells you little. Aggregate data across multiple time periods and query variations to identify genuine trends. The 30-day minimum rule governs initial data collection: expect significant fluctuations during the first month that don’t reflect actual performance changes. Weeks 6-8 mark the actionable threshold, when enough data exists to identify reliable patterns.
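Repeated samples can be pooled into one number per week, then smoothed and gated on the 6-week threshold. The sketch below assumes each sample records only whether the brand appeared in one answer. The 4-week window for the rolling mean is an arbitrary choice, and the sample data is hypothetical.

```python
from collections import defaultdict

def weekly_visibility(samples):
    """Pool repeated samples into one visibility percentage per week.

    Each sample is (week, platform, prompt, mentioned), where `mentioned`
    records whether the brand appeared in that single AI answer.
    """
    hits, totals = defaultdict(int), defaultdict(int)
    for week, _platform, _prompt, mentioned in samples:
        totals[week] += 1
        hits[week] += int(mentioned)
    return {week: 100.0 * hits[week] / totals[week] for week in sorted(totals)}

def rolling_mean(values, window=4):
    """Trailing moving average; returns one value per full window."""
    return [sum(values[i - window + 1 : i + 1]) / window
            for i in range(window - 1, len(values))]

def is_actionable(weekly, min_weeks=6):
    # This guide treats weeks 6-8 as the point where patterns become reliable.
    return len(weekly) >= min_weeks

samples = [
    (1, "chatgpt", "best crm", True), (1, "chatgpt", "best crm", False),
    (1, "perplexity", "best crm", True), (1, "perplexity", "best crm", True),
    (2, "chatgpt", "best crm", False), (2, "perplexity", "best crm", True),
]
weekly = weekly_visibility(samples)
print(weekly, is_actionable(weekly))
```

With only two weeks of data, `is_actionable` returns `False`, which matches the advice to wait before acting on early fluctuations.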

Data Validation and Quality Assurance ensures measurement accuracy by regularly spot-checking parsed data and extracted citations. Watch for sampling bias from running queries at fixed times or from a single location, and validate that engines aren’t personalizing results based on prior activity. Document your data collection methodology clearly so stakeholders understand measurement limitations and confidence levels.

Attribution and Tracking connects AI visibility to business outcomes by monitoring referral traffic from AI platforms and correlating it with lead generation, demo requests, and sales pipeline impact. Configure GA4 to report referrals from perplexity.ai, chatgpt.com (formerly chat.openai.com), and other AI platforms separately from traditional search traffic. This lets you model the influence of AI visibility on downstream business metrics, even when direct attribution is incomplete.
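Before configuring GA4, it helps to agree on which referrer hostnames count as AI traffic. The hostname list below is an assumption, not an exhaustive or authoritative list, so check it against the referrers that actually appear in your own data. The same list is also rendered as a single regex, which you could adapt for a GA4 custom channel group condition after confirming GA4's regex syntax.

```python
import re
from urllib.parse import urlparse

# Assumed referrer hostnames per platform; verify against your own traffic.
AI_REFERRERS = {
    "chatgpt.com": "ChatGPT",
    "chat.openai.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "claude.ai": "Claude",
    "copilot.microsoft.com": "Copilot",
}

def classify_referrer(referrer_url):
    """Return the AI platform name for a referrer URL, or None."""
    host = (urlparse(referrer_url).hostname or "").lower()
    for domain, platform in AI_REFERRERS.items():
        if host == domain or host.endswith("." + domain):
            return platform
    return None

# The same hostnames as one alternation, with dots escaped.
AI_REFERRER_REGEX = "|".join(re.escape(domain) for domain in AI_REFERRERS)

print(classify_referrer("https://www.perplexity.ai/search?q=best+crm"))
print(AI_REFERRER_REGEX)
```

Keeping the mapping in one place means the analytics configuration and any offline log analysis classify traffic the same way.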

ChatGPT Visibility Tracking requires understanding that responses operate in two distinct modes. Without web browsing, responses draw exclusively on parametric knowledge, so entity mentions depend entirely on how often the brand appears in training data. With web browsing enabled, ChatGPT queries Bing and selects 3-10 diverse sources; 87% of SearchGPT citations match Bing’s top 10 organic results, compared with only 56% correlation with Google results. Track parametric knowledge visibility (brand mentions without citations) and retrieved knowledge visibility (actual citations) separately.
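The two modes can be tracked by labeling each answer before aggregating. The sketch below treats a citation to your domain as retrieved visibility and a brand mention without such a citation as parametric visibility. This is only a proxy, since a browsing answer can also name you because a third-party source did. Aliases, domains, and answers are hypothetical.

```python
from collections import Counter
from urllib.parse import urlparse

def _host(url):
    host = (urlparse(url).hostname or "").lower()
    return host[4:] if host.startswith("www.") else host

def classify_answer(text, cited_urls, aliases, domain):
    """Label one answer 'retrieved', 'parametric', or 'absent'.

    'retrieved'  = your domain is among the cited URLs
    'parametric' = brand named, but your domain is not cited (proxy only)
    """
    if any(_host(u) == domain or _host(u).endswith("." + domain) for u in cited_urls):
        return "retrieved"
    lowered = text.lower()
    if any(alias.lower() in lowered for alias in aliases):
        return "parametric"
    return "absent"

answers = [
    ("Acme is a solid pick for startups.", []),
    ("See Acme's pricing page.", ["https://www.acme.com/pricing"]),
    ("Globex leads this category.", ["https://globex.com/"]),
]
tally = Counter(classify_answer(text, urls, ["Acme"], "acme.com") for text, urls in answers)
print(tally)
```

Reporting the two counts separately shows whether improvements come from training-data presence or from retrievable content.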

Perplexity Optimization Tracking focuses on real-time retrieval signals since Perplexity triggers web search for every query against its 200+ billion URL index. Monitor content freshness metrics closely since 65% of AI bot hits target content published within the past year. Track Reddit engagement separately since Reddit dominates Perplexity citations at 46.7%. Measure how quickly new content gets indexed and cited, as Perplexity’s real-time architecture rewards fresh, relevant content more aggressively than other platforms.
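Content freshness can be reported as the share of cited pages published within a window, for example the past 365 days, and compared against the 65% past-year benchmark. The sketch below assumes you have already looked up a publication date for each cited page, which in practice is the hard part. The dates are hypothetical.

```python
from datetime import date, timedelta

def recency_share(published_dates, as_of, days=365):
    """Percent of cited pages published within `days` before `as_of`."""
    if not published_dates:
        return 0.0
    cutoff = as_of - timedelta(days=days)
    recent = sum(1 for d in published_dates if d >= cutoff)
    return 100.0 * recent / len(published_dates)

cited = [date(2025, 1, 15), date(2024, 8, 1), date(2023, 3, 1), date(2025, 6, 1)]
print(recency_share(cited, as_of=date(2025, 6, 30)))  # 3 of 4 within the year
```

Running the same calculation with `days=730` gives the two-year figure used in the recency benchmark.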

Google AI Overview Tracking should start from traditional rankings, since AI Overviews show the strongest correlation with organic search: 93.67% of citations link to at least one top-10 organic result. However, only 4.5% of AI Overview URLs directly matched a Page 1 organic URL, suggesting that Google draws from deeper pages on authoritative domains. Track your traditional rankings and your AI Overview appearances separately, as the two don’t always move together. Monitor how often your brand appears in AI Overviews for your target keywords and whether appearance rates rise as your traditional rankings improve.
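The gap between a high domain-level match and a low exact-URL match is easy to reproduce on your own keywords. The sketch below compares AI Overview citations with the top-10 organic results at two levels: the exact page (host plus path) and the domain. Whether the cited studies measured overlap this way is an assumption. The URLs are hypothetical.

```python
from urllib.parse import urlparse

def _host(url):
    return (urlparse(url).hostname or "").lower().removeprefix("www.")

def _page_key(url):
    # Host plus path, ignoring scheme, query string, and a trailing slash.
    return _host(url) + urlparse(url).path.rstrip("/")

def aio_overlap(aio_urls, organic_top10):
    """Return (% exact page matches, % same-domain matches) for AIO citations."""
    if not aio_urls:
        return 0.0, 0.0
    organic_pages = {_page_key(u) for u in organic_top10}
    organic_hosts = {_host(u) for u in organic_top10}
    exact = sum(_page_key(u) in organic_pages for u in aio_urls)
    same_domain = sum(_host(u) in organic_hosts for u in aio_urls)
    n = len(aio_urls)
    return 100.0 * exact / n, 100.0 * same_domain / n

organic = ["https://www.example.com/guide", "https://rival.com/blog/best-crm", "https://news.site/crm"]
aio = ["https://example.com/guide/", "https://example.com/docs/setup",
       "https://reddit.com/r/crm/comments/abc", "https://rival.com/pricing"]
print(aio_overlap(aio, organic))
```

In this sample, only one cited page is an exact top-10 result, but three of the four come from domains that rank, which is consistent with Google drawing on deeper pages of ranking domains.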

Claude and Microsoft Copilot Tracking requires understanding their distinct architectures. Claude uses a Brave Search backend with Constitutional AI preferences for helpful, harmless, and honest content. Microsoft Copilot grounds its answers in Bing, which makes IndexNow, a protocol for notifying search engines as soon as URLs are added or updated, critical for getting new content indexed quickly. Track how quickly your content appears in Copilot responses after publication, and monitor whether implementing IndexNow improves your visibility on Microsoft’s AI platform.
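An IndexNow submission is a small JSON POST listing changed URLs. The sketch below only builds the request. It assumes the shared `api.indexnow.org` endpoint and the `host`/`key`/`keyLocation`/`urlList` body described in the IndexNow documentation, and it assumes you host your key file on your site as that documentation requires. Check the current protocol docs before relying on it. The host, key, and URLs are placeholders.

```python
import json
import urllib.request

INDEXNOW_ENDPOINT = "https://api.indexnow.org/indexnow"  # assumed shared endpoint

def build_indexnow_request(host, key, urls, key_location=None):
    """Build (but do not send) an IndexNow POST for a batch of changed URLs."""
    body = {"host": host, "key": key, "urlList": list(urls)}
    if key_location:
        body["keyLocation"] = key_location
    return urllib.request.Request(
        INDEXNOW_ENDPOINT,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json; charset=utf-8"},
        method="POST",
    )

req = build_indexnow_request(
    "www.example.com",
    "placeholder-key",  # hypothetical; must match the key file you host
    ["https://www.example.com/new-comparison-guide"],
)
# To submit for real: urllib.request.urlopen(req, timeout=10)
print(req.get_method(), req.full_url)
```

Logging the submission time next to the first date the page appears in Copilot answers gives you the "time to visibility" measure described above.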

Your AI visibility report should conclude with specific, prioritized recommendations for improving performance. Quick wins identify opportunities where competitors have weak presence or where small content improvements could earn citations. High-impact targets focus on sources that drive multiple competitor citations, representing the most valuable earned media opportunities. Long-term investments outline relationship-building efforts with key publications and platforms that require sustained effort but deliver compounding returns.
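The "high-impact targets" above can be found by looking for sources that AI answers cite for several competitors but never for you. The sketch below assumes you have already recorded (source domain, recommended brand) pairs from sampled answers. The threshold of two competitors, the domains, and the brand names are hypothetical choices.

```python
from collections import defaultdict

def source_gaps(pairs, you, min_competitors=2):
    """Sources cited for >= min_competitors competitors but never for you.

    `pairs` holds (source_domain, brand) tuples from sampled AI answers.
    Returns (source, competitor_count) sorted by count, then name.
    """
    brands_by_source = defaultdict(set)
    for source, brand in pairs:
        brands_by_source[source].add(brand)
    gaps = [
        (source, len(brands))
        for source, brands in brands_by_source.items()
        if you not in brands and len(brands) >= min_competitors
    ]
    return sorted(gaps, key=lambda item: (-item[1], item[0]))

pairs = [
    ("g2.com", "Globex"), ("g2.com", "Initech"), ("g2.com", "Acme"),
    ("reddit.com", "Globex"), ("reddit.com", "Initech"), ("reddit.com", "Hooli"),
    ("forbes.com", "Globex"),
    ("techradar.com", "Initech"), ("techradar.com", "Hooli"),
]
print(source_gaps(pairs, "Acme"))
```

Sources at the top of this list are candidates for earned media outreach. Sources that cite a single competitor may still be quick wins, depending on their authority.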

Content optimization recommendations should specify which pages need restructuring for AI consumption, which topics need expanded coverage, and which content formats (comparative listicles, FAQs, how-to guides) would improve citation likelihood. Research shows that adding citations increases visibility by 115.1% for rank #5 sites, adding quotations improves visibility by 37%, and adding statistics improves it by 22%. Recommend specific content enhancements with expected impact estimates.

Entity and authority building recommendations address how to strengthen your brand’s recognition across AI training data and retrieval systems. This includes creating or optimizing Wikidata entries, pursuing a Wikipedia article if your brand meets Wikipedia’s notability guidelines, engaging authentically on Reddit in relevant communities, and securing placements on frequently cited platforms. Brands mentioned on 4+ platforms are 2.8x more likely to appear in ChatGPT responses, making distributed presence essential.
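One concrete way to tie your site to your Wikidata entry and other profiles is the schema.org `sameAs` property on an `Organization`. The sketch below renders a minimal JSON-LD block. The brand name, URLs, and Wikidata ID are placeholders, and the property set is deliberately minimal. Whether a given AI system reads this markup is not something this example can confirm.

```python
import json

def organization_jsonld(name, url, logo, same_as):
    """Render a minimal schema.org Organization block for a page's HTML."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "logo": logo,
        # sameAs points to the same entity's profiles elsewhere
        # (Wikidata, Wikipedia, social accounts).
        "sameAs": list(same_as),
    }
    payload = json.dumps(data, indent=2, ensure_ascii=False)
    return f'<script type="application/ld+json">\n{payload}\n</script>'

snippet = organization_jsonld(
    "Acme CRM",
    "https://www.example.com",
    "https://www.example.com/logo.png",
    [
        "https://www.wikidata.org/wiki/Q00000",  # placeholder, not a real item
        "https://www.linkedin.com/company/example",
    ],
)
print(snippet)
```

Keeping the `sameAs` list in sync with the profiles you actually maintain helps avoid conflicting entity signals.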

Technical optimization recommendations cover robots.txt configuration for AI crawler access, schema markup implementation, and IndexNow setup for Bing/Copilot visibility. Recommend allowing search-focused bots like OAI-SearchBot and PerplexityBot while potentially blocking training-only bots like GPTBot. Implement Organization, Person, and FAQPage schema as Tier 1 essentials, with Product, LocalBusiness, and Review schema as Tier 2 high-value additions.
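The crawler policy above can be generated and sanity-checked before deployment. The sketch below writes a robots.txt that allows OAI-SearchBot and PerplexityBot, blocks GPTBot, and leaves all other agents allowed. It then checks the result with Python's standard `urllib.robotparser`. That parser's matching rules are simpler than those of some real crawlers, so treat the check as a smoke test. The site URL is a placeholder.

```python
from urllib.robotparser import RobotFileParser

def build_robots_txt(allow_bots, block_bots):
    """Emit one group per bot, plus a permissive default group."""
    lines = []
    for bot in allow_bots:
        lines += [f"User-agent: {bot}", "Allow: /", ""]
    for bot in block_bots:
        lines += [f"User-agent: {bot}", "Disallow: /", ""]
    lines += ["User-agent: *", "Allow: /"]
    return "\n".join(lines) + "\n"

robots = build_robots_txt(["OAI-SearchBot", "PerplexityBot"], ["GPTBot"])
parser = RobotFileParser()
parser.parse(robots.splitlines())

page = "https://www.example.com/blog/ai-visibility-report"
for agent in ("OAI-SearchBot", "PerplexityBot", "GPTBot", "SomeOtherBot"):
    print(agent, parser.can_fetch(agent, page))
```

Generating the file from explicit lists keeps the allow/block decision reviewable, and rerunning the check after each edit catches accidental blanket disallows.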

Monthly reporting provides sufficient frequency to identify trends while accounting for normal AI volatility. Monthly reports should include updated metrics, trend analysis, competitive changes, and progress against optimization recommendations. Quarterly deep-dive reports provide comprehensive analysis with strategic recommendations and forward-looking projections. Annual reports assess year-over-year progress and inform long-term strategy adjustments.

Executive dashboards should visualize key metrics (visibility score, share of voice, citation frequency) with month-over-month trends and competitive benchmarks. Detailed analytical reports provide the granular data, methodology documentation, and strategic recommendations that inform optimization efforts. Stakeholder-specific views tailor information to different audiences: executives need business impact, content teams need content performance data, and technical teams need implementation requirements.

Alerting and escalation procedures flag significant changes requiring immediate attention. Set alerts for visibility drops exceeding normal volatility thresholds, sudden sentiment shifts, or competitive changes that indicate market movement. Document clear escalation procedures so teams can respond quickly to emerging opportunities or threats.
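"Drops exceeding normal volatility" can be turned into a concrete rule. The sketch below flags a new reading that falls more than `k` standard deviations below the trailing mean. Both `k = 2` and the three-month minimum history are arbitrary starting points to tune against your own data, and the history values are hypothetical.

```python
from statistics import mean, stdev

def should_alert(history, current, k=2.0, min_history=3):
    """Flag `current` if it falls more than k standard deviations below
    the mean of `history` (earlier readings of the same metric)."""
    if len(history) < min_history:
        return False  # not enough data to estimate normal volatility
    mu, sigma = mean(history), stdev(history)
    return current < mu - k * sigma

history = [42, 45, 40, 44, 43]  # monthly visibility scores, in percent
print(should_alert(history, 40))  # within normal variation
print(should_alert(history, 30))  # well below the trailing range
```

Because AI visibility is volatile, a wider band (larger `k`) or a longer history will reduce false alarms at the cost of slower detection.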

As AI search continues to evolve, reporting frameworks must adapt to capture emerging opportunities and risks. Multimodal search is beginning to process images, voice, and video alongside text, requiring expanded measurement approaches. Real-time integration with live data sources enables fresher, more accurate AI answers, making content recency even more critical. Platform fragmentation with new AI search options emerging continuously means monitoring requirements will expand beyond today’s major platforms.

The most forward-thinking brands are already building AI visibility measurement as core infrastructure rather than treating it as a side project. They’re integrating AI visibility metrics into their broader marketing dashboards, connecting them to revenue impact, and embedding them into regular decision cycles. This systematic approach transforms AI visibility from a curiosity into a strategic capability that drives competitive advantage. As traditional organic search traffic is expected to decline by 50% by 2028 according to Gartner, the brands that establish comprehensive AI visibility measurement and optimization now will be best positioned to capture the discovery opportunities that emerge in the AI-powered search era.

