AI Crawler Access Audit: Are the Right Bots Seeing Your Content?

Learn how to audit AI crawler access to your website. Discover which bots can see your content and fix blockers preventing AI visibility in ChatGPT, Perplexity,...

Discover the critical technical SEO factors affecting your visibility in AI search engines like ChatGPT, Perplexity, and Google AI Mode. Learn how page speed, schema markup, content structure, and infrastructure impact AI citations.

Technical SEO factors affecting AI visibility include page speed and Core Web Vitals, structured data markup (schema), content structure with logical hierarchy, crawlability and accessibility, HTTPS security, freshness signals, and infrastructure reliability. Unlike traditional search engines, AI crawlers strip away formatting and rely heavily on clean, accessible content and structured data to understand and cite your pages.

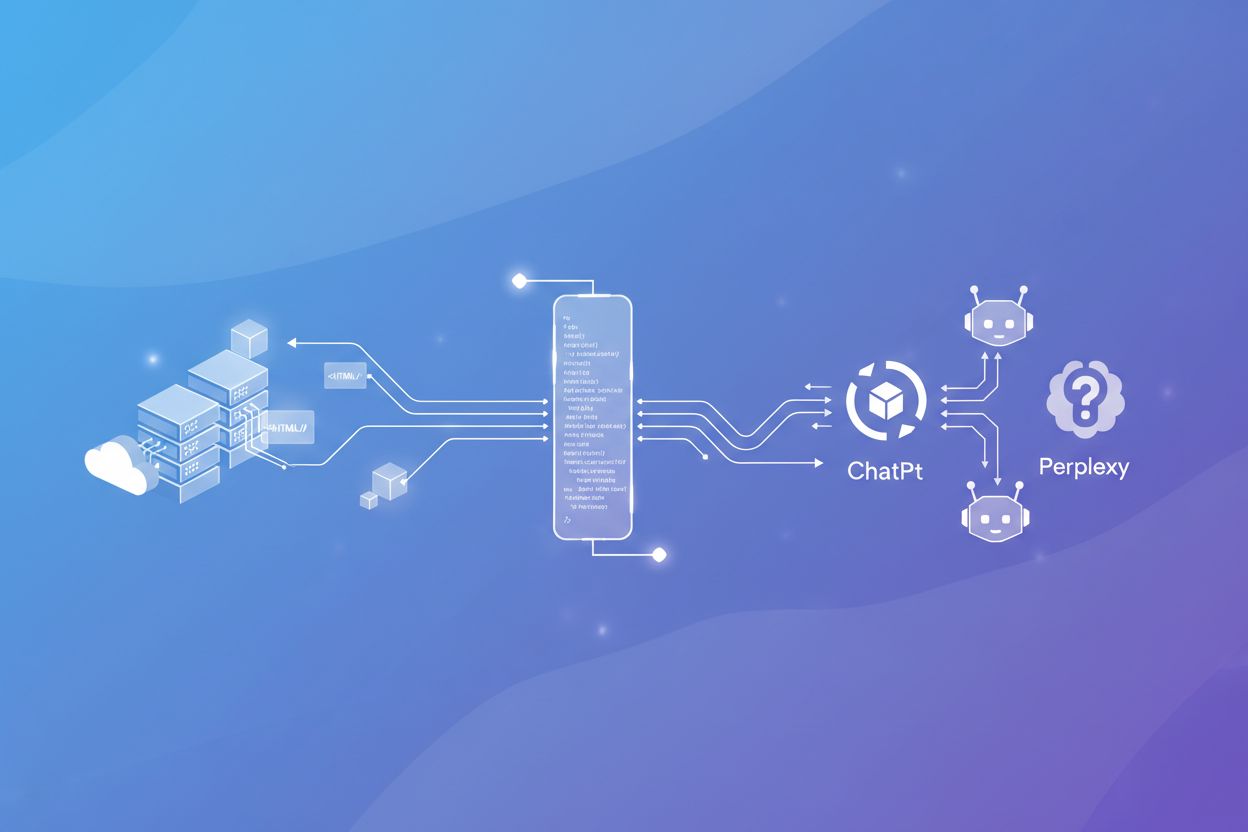

The fundamental difference between traditional search engines and AI-powered platforms lies in how they process and evaluate content. While Google’s sophisticated search infrastructure considers hundreds of ranking signals including authority, backlinks, and user engagement metrics, AI crawlers operate with a fundamentally different approach. They strip away code, formatting, and visual elements to ingest only raw text content, making technical debt far more visible and impactful. This means that technical issues which might be masked or compensated for by Google’s complex algorithm can severely damage your visibility in AI search results. The stakes are higher because AI platforms have fewer signals to balance out technical problems, leaving nowhere for your technical debt to hide.

When AI crawlers such as OpenAI's GPTBot or ChatGPT-User visit your website, they're not evaluating your site the way Googlebot does. Depending on the bot, they're either collecting text to train language models (GPTBot's role) or fetching pages in real time to cite in AI-generated answers (ChatGPT-User fetches pages in response to a user's request). Either way, the extraction process is unforgiving: if your content is difficult to access, parse, or understand, AI systems will simply move on to a competitor's site that offers the same information more efficiently. Understanding this distinction is critical for optimizing your presence in AI search results.
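Your server access logs are the most direct record of which AI crawlers actually reach your pages. The sketch below counts AI crawler requests in logs written in the common Combined Log Format. It assumes the user agent is the last double-quoted field on each line, and the token list is illustrative rather than exhaustive, so check each vendor's crawler documentation before relying on it. User-agent strings can also be spoofed, so treat the counts as a first pass.

```python
import re
from collections import Counter
from typing import Optional

# Illustrative, non-exhaustive user-agent tokens; confirm against vendor docs.
AI_AGENT_TOKENS = (
    "GPTBot",           # OpenAI, model training
    "OAI-SearchBot",    # OpenAI, search indexing
    "ChatGPT-User",     # OpenAI, fetches triggered by a user's request
    "ClaudeBot",        # Anthropic
    "PerplexityBot",    # Perplexity, indexing
    "Perplexity-User",  # Perplexity, fetches triggered by a user's request
    "CCBot",            # Common Crawl
)

# In the Combined Log Format the user agent is the last double-quoted field.
USER_AGENT_FIELD = re.compile(r'"([^"]*)"\s*$')


def classify_agent(user_agent: str) -> Optional[str]:
    """Return the first AI crawler token found in a user-agent string, else None."""
    lowered = user_agent.lower()
    for token in AI_AGENT_TOKENS:
        if token.lower() in lowered:
            return token
    return None


def count_ai_hits(log_lines) -> Counter:
    """Count requests per AI crawler token across access-log lines."""
    counts = Counter()
    for line in log_lines:
        match = USER_AGENT_FIELD.search(line)
        if match:
            token = classify_agent(match.group(1))
            if token:
                counts[token] += 1
    return counts
```

Run `count_ai_hits` over a day or a week of logs: an AI crawler that never shows up may be blocked somewhere upstream of your content.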

Page speed has evolved from a ranking factor into a qualifying factor for AI search visibility. Research analyzing over 2,000 websites cited in Google's AI Mode reveals a clear correlation between faster load times and citation frequency. Websites with poor Largest Contentful Paint (LCP) scores, which measure how quickly the main content loads, show significantly fewer citations than faster competitors. Cumulative Layout Shift (CLS) scores, which measure visual stability, show a similar relationship with AI citation rates.

The reason is straightforward: AI platforms crawl billions of pages daily, consuming enormous computational resources. OpenAI estimates its expansion plans will require 10 gigawatts of power, roughly the output of 10 nuclear reactors. When an AI crawler encounters a slow website, it burns more resources to extract the same information that a faster site provides almost instantly. As AI platforms optimize for cost efficiency, they naturally deprioritize slow sites in favor of faster, more efficient sources: a website that requires extra processing power to crawl is less attractive than a competitor offering identical information with minimal resource consumption.

| Performance Metric | Impact on AI Visibility | Target Benchmark |
|---|---|---|
| Largest Contentful Paint (LCP) | Correlates with citation frequency | Under 2.5 seconds |
| Cumulative Layout Shift (CLS) | Affects crawl efficiency and content extraction | Below 0.1 |
| Interaction to Next Paint (INP), which replaced First Input Delay (FID) as a Core Web Vital in March 2024 | Reflects overall page responsiveness | Under 200 milliseconds (the former FID target was under 100 milliseconds) |
| Server Response Time (TTFB) | Critical for crawler access speed | Under 200 milliseconds |
| Image Optimization | Reduces crawl burden and bandwidth | WebP/AVIF format with lazy loading |
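To turn field measurements into pass/fail ratings, you can encode Google's published "good" and "poor" boundaries for the three current Core Web Vitals. This is a minimal sketch. The metric keys (`lcp_s`, `cls`, `inp_ms`) and the shape of the input dictionary are hypothetical, and Google assesses these metrics at the 75th percentile of real-user data, so feed it p75 values from PageSpeed Insights or the Chrome User Experience Report rather than a single lab run.

```python
# Google's published boundaries for the current Core Web Vitals, as
# (good_max, needs_improvement_max); anything above the second value is "poor".
THRESHOLDS = {
    "lcp_s": (2.5, 4.0),   # Largest Contentful Paint, seconds
    "cls": (0.1, 0.25),    # Cumulative Layout Shift, unitless
    "inp_ms": (200, 500),  # Interaction to Next Paint, milliseconds
}


def rate(metric: str, value: float) -> str:
    """Rate one measurement against the thresholds for its metric."""
    good_max, needs_improvement_max = THRESHOLDS[metric]
    if value <= good_max:
        return "good"
    if value <= needs_improvement_max:
        return "needs improvement"
    return "poor"


def rate_page(measurements: dict) -> dict:
    """Rate every recognized metric in a page's measurements; ignore other keys."""
    return {
        metric: rate(metric, value)
        for metric, value in measurements.items()
        if metric in THRESHOLDS
    }
```

For example, `rate_page({"lcp_s": 3.1, "cls": 0.05, "inp_ms": 650})` flags LCP as needing improvement and INP as poor, which tells you where to start.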

Improving page speed requires addressing multiple factors simultaneously. Compress images aggressively using modern formats like WebP or AVIF, implement lazy loading to defer off-screen image loading, eliminate render-blocking CSS and JavaScript, and optimize your server response time. Using a Content Delivery Network (CDN) reduces latency by serving content from geographically distributed servers. These optimizations don't just improve AI visibility; they also enhance user experience and traditional search rankings, making them essential investments for any digital presence.

Structured data markup is the Rosetta Stone of AI search optimization. While traditional search engines use schema to trigger rich results and understand content context, AI systems rely on structured data to reduce ambiguity and accelerate information extraction. When your content includes proper JSON-LD markup, AI crawlers can instantly identify what type of content you’re offering, extract key information, and determine whether it’s relevant to user queries without parsing through unstructured text.

The most impactful schema types for AI visibility include FAQPage schema for question-and-answer content, HowTo schema for step-by-step processes, Article schema with proper authorship attribution, Product or Service schema for commercial offerings, Organization or LocalBusiness schema for entity clarity, Review and AggregateRating schema for social proof, and BreadcrumbList schema for hierarchy mapping. Each schema type serves a specific purpose in helping AI systems understand your content’s structure and relevance.
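As an example of what this markup looks like, the sketch below builds FAQPage JSON-LD from question-and-answer pairs using the schema.org `FAQPage`, `Question`, and `Answer` types, then wraps it in the `<script type="application/ld+json">` element that goes into the page. The helper names `faq_page_jsonld` and `script_tag` are hypothetical. The one non-obvious step escapes `</` so that answer text cannot accidentally close the script element.

```python
import json


def faq_page_jsonld(pairs) -> dict:
    """Build a schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


def script_tag(data: dict) -> str:
    """Serialize JSON-LD into the script element embedded in the page's HTML."""
    payload = json.dumps(data, ensure_ascii=False, indent=2)
    # "\/" is a valid JSON escape for "/", so this changes nothing for parsers
    # but stops a literal "</script>" in the text from ending the element early.
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'
```

Generating the markup from the same source as the visible FAQ, rather than hand-writing it, makes it much harder for the two to drift apart.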

Implementation requires more than simply adding markup; it demands accuracy and synchronization with visible content. Outdated or misaligned schema erodes trust with AI systems, potentially disqualifying your content from citations. Use Google's Rich Results Test and Schema Markup Validator to verify your implementation. Test rigorously before deployment, and maintain a regular audit schedule to ensure schema stays synchronized with content updates. Avoid over-marking content; only implement schema for elements that genuinely help explain your content's purpose and structure.
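The validators check syntax, but they don't tell you whether your markup still matches what the page says. One way to automate that audit is to compare FAQPage answers in the JSON-LD against the page's visible text. This is a minimal sketch built on Python's standard `html.parser`. It assumes answers appear verbatim on the page (ignoring case and whitespace), and it only inspects top-level FAQPage objects, not arrays or `@graph` containers. The function name `unsynced_faq_answers` is hypothetical.

```python
import json
from html.parser import HTMLParser
from typing import List


class _PageParser(HTMLParser):
    """Collect visible text and raw JSON-LD blocks from an HTML page."""

    def __init__(self):
        super().__init__()
        self.visible_text = []
        self.jsonld_blocks = []
        self._current = None  # "jsonld", "skip", or None

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._current = "jsonld"
            self.jsonld_blocks.append("")
        elif tag in ("script", "style"):
            self._current = "skip"

    def handle_endtag(self, tag):
        if tag in ("script", "style"):
            self._current = None

    def handle_data(self, data):
        if self._current == "jsonld":
            self.jsonld_blocks[-1] += data
        elif self._current is None:
            self.visible_text.append(data)


def _normalize(text: str) -> str:
    return " ".join(text.split()).lower()


def unsynced_faq_answers(html: str) -> List[str]:
    """Return FAQPage answers that do not appear in the page's visible text."""
    parser = _PageParser()
    parser.feed(html)
    parser.close()
    visible = _normalize(" ".join(parser.visible_text))
    missing = []
    for block in parser.jsonld_blocks:
        data = json.loads(block)
        # Sketch limitation: arrays and @graph containers are skipped.
        if not isinstance(data, dict) or data.get("@type") != "FAQPage":
            continue
        for question in data.get("mainEntity", []):
            answer = question.get("acceptedAnswer", {}).get("text", "")
            if _normalize(answer) not in visible:
                missing.append(answer)
    return missing
```

Any answer this returns is a candidate for either updating the markup or restoring the visible copy.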

AI systems excel at parsing well-organized, logically structured content. The way you organize information directly affects whether AI crawlers can extract, understand, and cite your material. Logical URL structures communicate hierarchy and topic relationships: a URL like /services/website-design/ immediately signals the content's purpose and position within your site's architecture. This clarity helps AI systems understand topical relationships and determine whether your content comprehensively covers a subject.
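A clean URL hierarchy also maps directly onto the BreadcrumbList schema mentioned above. The sketch below derives one from a URL's path segments. It assumes your URLs use trailing slashes (as in /services/website-design/) and that every intermediate path is a real page. By default it title-cases each segment, but you can pass hypothetical display names through the `names` argument when a slug doesn't read well.

```python
from typing import Dict, Optional
from urllib.parse import urlsplit


def breadcrumb_jsonld(url: str, names: Optional[Dict[str, str]] = None) -> dict:
    """Derive a schema.org BreadcrumbList from a URL's path segments."""
    names = names or {}
    parts = urlsplit(url)
    origin = f"{parts.scheme}://{parts.netloc}"
    segments = [segment for segment in parts.path.split("/") if segment]
    items = []
    path = ""
    for position, segment in enumerate(segments, start=1):
        path += f"/{segment}"
        items.append({
            "@type": "ListItem",
            "position": position,
            # Fall back to the slug with hyphens turned into spaces.
            "name": names.get(segment, segment.replace("-", " ").title()),
            "item": f"{origin}{path}/",  # assumes trailing-slash URLs
        })
    return {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": items,
    }
```

For https://example.com/services/website-design/ this yields two items, "Services" and "Website Design". If you can't generate sensible breadcrumbs from a URL, the URL itself is probably not communicating hierarchy well.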

Header tag hierarchy serves as a content roadmap for AI crawlers. Following a logical progression from H1 to H2 to H3 without skipping levels helps AI systems understand content organization and identify key topics. Each header should represent a distinct concept or question, making it easy for AI systems to extract relevant sections for citations. Avoid using headers purely for styling; they should reflect genuine content structure.
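You can spot skipped heading levels automatically. This minimal sketch collects h1 to h6 tags in document order with Python's standard `html.parser`. It flags two problems: a page whose first heading is not an H1, and any step that goes more than one level deeper (for example, H2 straight to H4). Moving back up the hierarchy, such as H4 to H2, is treated as normal. The function name `heading_problems` is hypothetical.

```python
from html.parser import HTMLParser
from typing import List


class _HeadingCollector(HTMLParser):
    """Record the level (1-6) of every h1-h6 start tag, in document order."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def heading_problems(html: str) -> List[str]:
    """Describe heading-hierarchy problems found in an HTML document."""
    collector = _HeadingCollector()
    collector.feed(html)
    collector.close()
    levels = collector.levels
    problems = []
    if levels and levels[0] != 1:
        problems.append(f"first heading is h{levels[0]}, not h1")
    for previous, current in zip(levels, levels[1:]):
        # Going deeper by more than one level skips a step; going back up is fine.
        if current > previous + 1:
            problems.append(f"h{previous} followed by h{current} skips a level")
    return problems
```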

Internal linking strategy reinforces topical authority and helps AI systems understand content relationships. Bidirectional linking, where pillar pages link to subpages and subpages link back to pillar pages, creates a web of topical relevance that signals comprehensive expertise. When AI systems encounter multiple related articles on your site, they're more likely to cite your content as an authoritative source on that topic. This likely helps explain why the American Kennel Club appears multiple times in Perplexity results for dog training queries: its comprehensive coverage across many related articles signals topical authority.
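Given a crawl of your internal links, checking that every pillar-subpage pair links in both directions is a simple set lookup. In this sketch, `links` maps each page to the set of internal URLs it links to, and `clusters` maps each pillar page to its subpages. Both are hypothetical inputs you would build from your own crawler or CMS export, and the example URLs are placeholders.

```python
from typing import Dict, Iterable, List, Set, Tuple


def missing_cluster_links(
    links: Dict[str, Set[str]],
    clusters: Dict[str, Iterable[str]],
) -> List[Tuple[str, str]]:
    """Return (from_page, to_page) pairs for pillar/subpage links that are absent."""
    missing = []
    for pillar, subpages in clusters.items():
        for subpage in subpages:
            if subpage not in links.get(pillar, set()):
                missing.append((pillar, subpage))  # pillar should link down
            if pillar not in links.get(subpage, set()):
                missing.append((subpage, pillar))  # subpage should link back up
    return missing
```

Each returned pair is a specific link to add, which makes the output easy to hand off to whoever maintains the content.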

Structured elements like tables, lists, and ordered steps are far easier for AI systems to extract than long paragraphs of prose. When presenting information, use tables to compare options, bullet points to list related items, and numbered steps for processes. This formatting doesn’t just improve readability for human visitors—it dramatically improves your chances of being cited in AI-generated answers because the information is immediately parseable and extractable.

Your technical infrastructure forms the foundation for AI visibility. If AI crawlers cannot access your content, verify its freshness, or establish trust, no amount of content optimization will result in citations. Crawlability is the first requirement—ensure your important pages are accessible to search engine crawlers and, by extension, AI crawlers that often rely on search engine indexes. Check your robots.txt file to confirm you’re not accidentally blocking crawlers, and verify that critical content isn’t hidden behind authentication walls or JavaScript rendering that prevents initial access.
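Python's standard `urllib.robotparser` can answer the robots.txt question per crawler. The sketch below reports, for one URL, which AI user-agent tokens your robots.txt allows. The token list is illustrative, so confirm it against each vendor's documentation. Note that `Google-Extended` is a robots.txt control token only and never appears as a request user agent. Two further caveats: Python's parser applies rules in file order rather than Google's longest-match precedence, and it doesn't support `*` or `$` wildcards in paths, so treat the results as a first pass and confirm edge cases with the crawler operators' own tools.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt tokens for AI crawlers; confirm against vendor docs.
AI_AGENTS = ("GPTBot", "OAI-SearchBot", "ChatGPT-User", "ClaudeBot",
             "PerplexityBot", "Google-Extended", "CCBot")


def ai_access_report(robots_txt: str, url: str, agents=AI_AGENTS) -> dict:
    """Map each agent token to whether robots.txt lets it fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    parser.modified()  # record a load time explicitly (harmless if parse() already did)
    return {agent: parser.can_fetch(agent, url) for agent in agents}
```

To check your live file, download `https://your-domain/robots.txt` with any HTTP client and pass its text in, along with a few of your most important URLs.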

Freshness signals carry significant weight in AI search. AI systems heavily prioritize current, up-to-date information over stale content. Implement accurate publication and modification dates in your content metadata, use XML sitemaps with <lastmod> tags to signal when pages were last updated, and include visible update notes on your pages. When you update existing content, ensure the modification date reflects the actual update time. This signals to AI systems that your information is current and reliable.
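A sitemap's `<lastmod>` value is only useful if it reflects real content changes. This sketch builds a sitemap in the sitemaps.org format with Python's standard `xml.etree.ElementTree`. It assumes you pass timezone-aware datetimes that record when each page's content last actually changed, not when the file was regenerated, and it writes them in UTC using the W3C Datetime format. The function name `build_sitemap` is hypothetical.

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timezone
from typing import Iterable, Tuple

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: Iterable[Tuple[str, datetime]]) -> str:
    """Build sitemap XML from (url, timezone-aware last-modified datetime) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, last_modified in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # ElementTree escapes "&" etc.
        # W3C Datetime in UTC; use the time the content actually changed.
        ET.SubElement(entry, "lastmod").text = (
            last_modified.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")
        )
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body
```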

Security infrastructure establishes trust with AI systems. HTTPS encryption, valid SSL certificates, and proper security headers (Content Security Policy, X-Content-Type-Options, X-Frame-Options) demonstrate that you maintain professional security standards. AI systems are more likely to cite content from secure, trustworthy sources than from sites with security vulnerabilities or warnings.
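A quick way to audit these basics is to check a page's final URL and response headers. This minimal sketch flags a non-HTTPS URL and any missing header from the three named above. For `X-Content-Type-Options` it also checks for `nosniff`, the header's only defined value. The function name `security_gaps` is hypothetical. The sketch only checks that the headers are present; it doesn't judge whether your Content Security Policy is well designed.

```python
from typing import Dict, List

# Headers listed in the article; None means any value is accepted.
EXPECTED = {
    "content-security-policy": None,
    "x-content-type-options": "nosniff",
    "x-frame-options": None,
}


def security_gaps(url: str, headers: Dict[str, str]) -> List[str]:
    """List basic HTTPS and security-header problems for one response."""
    problems = []
    if not url.lower().startswith("https://"):
        problems.append("not served over HTTPS")
    # Header names are case-insensitive, so compare them in lowercase.
    present = {name.lower(): value.strip() for name, value in headers.items()}
    for name, required in EXPECTED.items():
        value = present.get(name)
        if value is None:
            problems.append(f"missing {name}")
        elif required is not None and value.lower() != required:
            problems.append(f"{name} is '{value}', expected '{required}'")
    return problems
```

With the standard library, `urllib.request.urlopen(url)` returns a response whose `geturl()` and `dict(response.headers.items())` can be passed straight in.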

JavaScript rendering presents a critical challenge for AI crawlers. Heavy client-side rendering that hides core content behind JavaScript can prevent AI systems from accessing your content during initial crawls. Use server-side rendering for essential content, ensuring that the HTML delivered to crawlers contains the actual content rather than placeholder elements that only render in browsers. This is particularly important for dynamic content that changes based on user interaction.
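A crawler that doesn't execute JavaScript only sees the HTML your server sends. You can approximate that view by extracting text from the raw HTML while ignoring `<script>` and `<style>` bodies, then checking whether your key phrases are present. In this sketch, `missing_from_initial_html` and the phrases you pass to it are hypothetical. Pick a few sentences that must be citable, such as prices, definitions, or product names.

```python
from html.parser import HTMLParser
from typing import Iterable, List


class _InitialHtmlText(HTMLParser):
    """Collect the text present in raw HTML, ignoring <script> and <style> bodies."""

    def __init__(self):
        super().__init__()
        self.parts = []
        self._inside = None  # name of the script/style element being skipped

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._inside = tag

    def handle_endtag(self, tag):
        if tag == self._inside:
            self._inside = None

    def handle_data(self, data):
        if self._inside is None:
            self.parts.append(data)


def missing_from_initial_html(html: str, key_phrases: Iterable[str]) -> List[str]:
    """Return the key phrases that a non-rendering crawler would not find."""
    extractor = _InitialHtmlText()
    extractor.feed(html)
    extractor.close()
    text = " ".join(" ".join(extractor.parts).split()).lower()
    return [p for p in key_phrases if " ".join(p.split()).lower() not in text]
```

Fetch the page with a plain HTTP client such as `urllib.request`, which never runs JavaScript. A phrase that is missing from the raw HTML but visible in your browser is being rendered client-side.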

A critical but often overlooked technical factor affecting AI visibility is third-party infrastructure decisions. In July 2025, Cloudflare began blocking known AI crawlers by default, starting with newly added domains, fundamentally changing how AI systems access websites. If your site runs on Cloudflare and you haven't explicitly configured its crawler access settings, your website may be invisible to ChatGPT, Claude, Perplexity, and other AI platforms, not because your content is poor but because a third-party platform made an infrastructure decision you might not even be aware of.

This situation illustrates a broader principle: you cannot assume that decisions affecting your AI visibility happen within your organization. Infrastructure providers, CDN services, and other third-party platforms can make changes that dramatically impact your AI search visibility. Regularly audit your infrastructure settings, particularly with major CDN providers, to ensure you haven’t inadvertently blocked AI crawlers. Document who is responsible for monitoring these settings and ensure clear communication channels exist for alerting relevant teams when infrastructure changes occur.
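One rough, scheduled check is to request a key page with a normal browser user agent and with AI crawler user agents, then flag any crawler that gets a different status code. This sketch carries a strong caveat. CDNs often identify real crawlers by their published IP ranges, so a request from your own machine with a borrowed user agent may be treated differently from the real crawler. Treat a mismatch as a prompt to review your CDN's bot settings and server logs, not as proof. The user-agent strings are simplified approximations, and `TEST_AGENTS`, `blocked_agents`, and `audit` are hypothetical names.

```python
import urllib.error
import urllib.request
from typing import Dict, List

# Simplified approximations for illustration; check vendors' docs for real strings.
TEST_AGENTS = {
    "browser": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
    "GPTBot": "Mozilla/5.0 (compatible; GPTBot/1.1)",
    "ClaudeBot": "Mozilla/5.0 (compatible; ClaudeBot/1.0)",
    "PerplexityBot": "Mozilla/5.0 (compatible; PerplexityBot/1.0)",
}


def fetch_status(url: str, user_agent: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code for a GET request sent with `user_agent`."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code  # 4xx/5xx responses arrive as exceptions


def blocked_agents(statuses: Dict[str, int], baseline: str = "browser") -> List[str]:
    """Agents whose status differs from a successful baseline request."""
    base = statuses[baseline]
    if not 200 <= base < 300:
        return []  # the baseline failed too, so the problem is not agent-specific
    return sorted(agent for agent, status in statuses.items()
                  if agent != baseline and status != base)


def audit(url: str) -> List[str]:
    """Fetch `url` once per test agent and report suspected blocks."""
    statuses = {name: fetch_status(url, ua) for name, ua in TEST_AGENTS.items()}
    return blocked_agents(statuses)
```

Running `audit` on a schedule and alerting on a non-empty result gives the team that owns these settings an early warning when an upstream change starts blocking crawlers.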

A critical insight from recent data analysis reveals that strong Google rankings do not guarantee AI visibility. Comparing two major accommodation platforms shows that while one dominates traditional search results, the other receives significantly more citations in AI answers. This disconnect occurs because AI systems evaluate content differently than search engines. A page might rank well in Google due to authority and backlinks while remaining invisible to AI systems due to poor content structure, slow load times, or inaccessible information architecture.

This distinction has profound implications for your optimization strategy. You cannot assume that your existing SEO efforts automatically translate to AI visibility. A site with excellent traditional SEO might have accumulated technical debt that doesn’t significantly impact Google rankings but severely damages AI visibility. Conversely, a site with modest Google rankings might achieve strong AI visibility by focusing on content clarity, structure, and accessibility.

Begin by assessing your current technical foundation against AI-specific requirements. Audit your Core Web Vitals using Google’s PageSpeed Insights and Chrome User Experience Report, identifying pages with poor performance. Test your schema markup implementation across all major content types. Evaluate your content structure, ensuring logical header hierarchies and strategic internal linking. Verify crawlability by checking robots.txt, testing JavaScript rendering, and confirming that important content is accessible to crawlers.

Prioritize fixes based on impact and effort. Page speed improvements typically deliver the highest ROI because they affect both AI visibility and user experience. Schema markup implementation is relatively straightforward and provides immediate benefits. Content restructuring requires more effort but compounds over time as AI systems recognize your improved organization and topical authority.

Establish clear ownership and accountability for maintaining technical SEO health. Assign responsibility for monitoring infrastructure changes, updating freshness signals, maintaining schema accuracy, and conducting regular technical audits. Without clear ownership, technical debt accumulates silently until it suddenly impacts your AI visibility.
