AI Search Optimization

Learn AI Search Optimization strategies to improve brand visibility in ChatGPT, Google AI Overviews, and Perplexity. Optimize content for LLM citation and AI-po...

Techniques and strategies for ensuring new content is quickly found, crawled, indexed, and made available to artificial intelligence systems, including LLMs, AI search engines, and chatbots. Unlike traditional SEO, which focuses on search rankings, AI Discovery Optimization targets content inclusion in AI-generated answers across platforms like ChatGPT, Gemini, Perplexity, and Claude.

AI Discovery Optimization is the practice of ensuring that your content is quickly discovered, crawled, indexed, and made available to artificial intelligence systems, including large language models (LLMs), AI search engines, and chatbots. Whereas traditional search engine optimization focuses on ranking pages in search results, AI Discovery Optimization aims to get your content included in AI-generated answers on platforms such as ChatGPT, Google Gemini, Perplexity, Claude, and Microsoft Copilot. The stakes are significant: AI referrals to top websites increased 357% year-over-year in June 2025, reaching 1.13 billion visits, which makes AI visibility a critical component of any modern content strategy. The core difference lies in how AI systems consume content. Rather than simply indexing pages, they break content into semantic chunks and synthesize answers by combining information from multiple sources, so optimization shifts toward content clarity, structure, and authority. Tools like AmICited.com help brands monitor how AI systems reference and cite their content across different platforms, providing visibility into this new discovery landscape.

AI systems use specialized crawlers to discover and index web content. These bots resemble traditional search engine crawlers but have distinct purposes and behaviors. Major AI crawlers include GPTBot (OpenAI), ClaudeBot (Anthropic), PerplexityBot (Perplexity), Googlebot (Google), and Bingbot (Microsoft), each with different crawling patterns and priorities. Google-Extended is not a separate crawler but a robots.txt product token: according to Google's documentation, it controls whether content crawled by Google may be used for Gemini model training and grounding, and it does not affect a site's inclusion in Google Search, where AI Overviews and AI Mode appear. These crawlers operate under a “crawl budget”, a limited allocation of resources that determines which pages get crawled and how frequently. Unlike traditional search engines, which index entire pages for ranking, AI systems typically break content into semantic chunks during indexing, extracting self-contained passages that can be retrieved and synthesized into answers. Crawl budget may be especially constrained for AI systems because fetched content must also be processed before it can be used in answers, which makes efficient content structure important. Understanding which crawlers access your site and how they prioritize content is essential for effective AI Discovery Optimization.

| AI Crawler | Source | Primary Purpose | Crawl Type | Frequency |

|---|---|---|---|---|

| GPTBot | OpenAI | Training & RAG for ChatGPT | Broad coverage | Periodic |

| ClaudeBot | Anthropic | Training & RAG for Claude | Selective | Periodic |

| PerplexityBot | Perplexity | Real-time retrieval for answers | High-frequency | Continuous |

| Googlebot (Google-Extended) | AI Overviews & AI Mode | Selective | Continuous | |

| Bingbot | Microsoft | Copilot & AI search | Selective | Continuous |

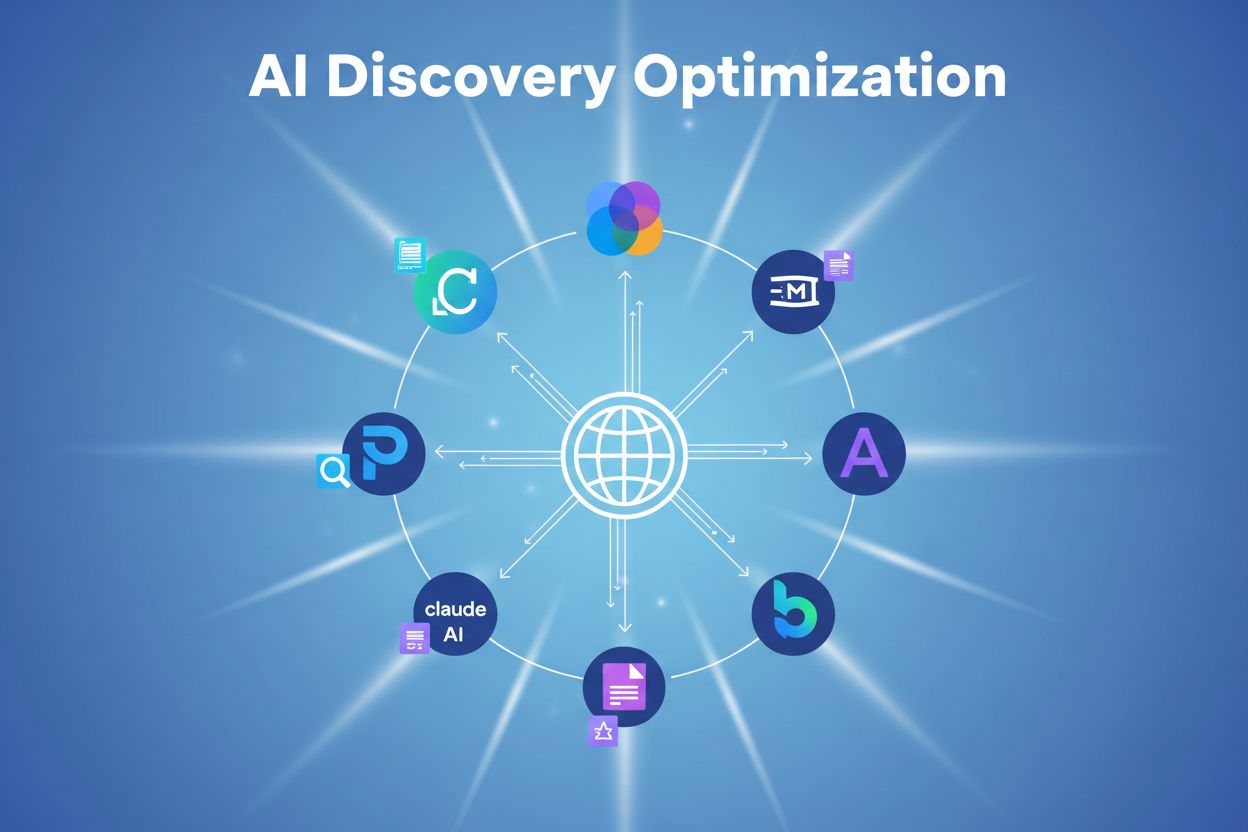

| CCBot | Common Crawl | Research & training data | Broad coverage | Periodic |
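To see which of these crawlers are hitting your site, one option is to classify the User-Agent header of each request. This is a minimal, hypothetical Python sketch: `AI_CRAWLER_TOKENS` and `classify_user_agent` are illustrative names, and the substrings are assumptions to check against each vendor's documentation. Google-Extended is deliberately absent because Google documents it as a robots.txt control token with no user-agent string of its own, so it never appears in request headers.

```python
from typing import Optional

# Hypothetical lookup table based on the crawler table above:
# user-agent substring -> operator. Verify tokens against vendor docs.
AI_CRAWLER_TOKENS = {
    "GPTBot": "OpenAI",
    "ClaudeBot": "Anthropic",
    "PerplexityBot": "Perplexity",
    "Googlebot": "Google",
    "bingbot": "Microsoft",
    "CCBot": "Common Crawl",
}


def classify_user_agent(user_agent: str) -> Optional[str]:
    """Return the first crawler token found in a User-Agent header, or None.

    Matching is a case-insensitive substring test, so spoofed headers also
    match: this identifies *claimed* crawlers, not verified ones.
    """
    ua = user_agent.lower()
    for token in AI_CRAWLER_TOKENS:
        if token.lower() in ua:
            return token
    return None
```

A header such as `Mozilla/5.0 (compatible; GPTBot/1.1; +https://openai.com/gptbot)` is classified as `GPTBot`. Because headers are trivially spoofed, treat the result as a claim to verify rather than proof of identity.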

Traditional SEO and AI Discovery Optimization represent fundamentally different approaches to content visibility, each with distinct priorities and success metrics. Traditional SEO focuses on optimizing pages to rank in search engine results pages (SERPs), emphasizing keyword matching, backlink authority, and page-level ranking signals. In contrast, AI Discovery Optimization prioritizes content inclusion in AI-generated answers, emphasizing content clarity, semantic structure, and chunk-level optimization. Traditional SEO operates on a one-query-one-result model where a single URL ranks for a specific keyword, while AI systems employ a synthesis model where multiple content chunks from different sources are combined into a single answer. The ranking factors differ significantly: traditional SEO values keyword density and backlinks, while AI systems prioritize content structure, factual accuracy, and citation-worthiness. Additionally, traditional SEO metrics focus on click-through rates and rankings, whereas AI Discovery Optimization metrics emphasize mention frequency, citation sentiment, and inclusion in AI-generated responses. This fundamental shift means that content optimized purely for traditional search may not perform well in AI discovery, requiring a complementary optimization strategy that addresses both channels.

For AI systems to discover and index your content effectively, your site must meet several technical requirements for crawlability and accessibility. Server-side rendering (SSR) is critical because most AI crawlers do not execute JavaScript, so content loaded dynamically via client-side JavaScript remains invisible to them. All essential content should be present in the initial HTML response so that AI crawlers, which often allow only a short processing window (commonly cited as 1-5 seconds), can access it immediately. The HTML should be semantic and well organized, with a proper heading hierarchy (H1, H2, H3 tags), clear meta tags, and self-referencing canonical tags that help AI systems understand content relationships. Page speed matters: slow-loading pages may time out before AI crawlers retrieve all of their content, resulting in incomplete indexing. Structured data using schema.org markup (Article, FAQPage, Product, etc.) helps AI systems understand content context and purpose. Your robots.txt file should explicitly allow the major AI crawlers rather than block them; llms.txt, a proposed convention for a Markdown file that points language models to your key content, can complement robots.txt but does not control crawler access. Your firewall or CDN should also allowlist AI bots' published IP ranges to prevent accidental blocking. AmICited.com can help monitor which AI crawlers access your site and how often, giving insight into how crawlable your site is.
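One way to confirm that robots.txt isn't accidentally blocking AI crawlers is Python's standard-library `urllib.robotparser`. This is a sketch under stated assumptions: `SAMPLE_ROBOTS_TXT` is a made-up file, `audit_robots` is a hypothetical helper, and `robotparser` implements the classic robots.txt matching rules rather than every extension an individual crawler may honor, so confirm edge cases against each vendor's documentation.

```python
from typing import Dict, List
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for illustration: everything is open except
# /admin/, and Common Crawl's CCBot is blocked entirely.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/

User-agent: CCBot
Disallow: /
"""

# robots.txt tokens for the crawlers discussed in this article.
AI_AGENTS: List[str] = ["GPTBot", "ClaudeBot", "PerplexityBot", "Googlebot", "Bingbot", "CCBot"]


def audit_robots(robots_txt: str, url: str) -> Dict[str, bool]:
    """Map each AI crawler token to whether robots.txt lets it fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse text directly, no network fetch
    return {agent: parser.can_fetch(agent, url) for agent in AI_AGENTS}


if __name__ == "__main__":
    report = audit_robots(SAMPLE_ROBOTS_TXT, "https://example.com/blog/post")
    for agent, allowed in report.items():
        print(f"{agent:14} {'allowed' if allowed else 'BLOCKED'}")
```

Against the sample file, every crawler is allowed to fetch `/blog/post` except CCBot, which the file blocks outright; checking `/admin/settings` instead reports every crawler as blocked. To audit a live site, pass the text of your real robots.txt.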

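Technical Requirements Checklist:

- Render critical content on the server so it is present in the initial HTML response.
- Use semantic HTML with a clear heading hierarchy (H1, H2, H3).
- Add clear meta tags and self-referencing canonical tags.
- Keep pages fast enough to load within AI crawlers’ short processing windows.
- Add schema.org structured data (Article, FAQPage, Product, etc.).
- Allow major AI crawlers in robots.txt.
- Allowlist AI crawler IP ranges in your firewall or CDN.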

AI systems don’t retrieve entire pages; instead, they break content into semantic “chunks”, self-contained passages that can be extracted and understood without surrounding context. This requires a different approach to content structure and formatting. Design each chunk as a standalone unit that makes sense when extracted from the page: avoid references to surrounding content, and don’t rely on context from other sections. A clear heading hierarchy is essential because AI systems use headings to identify chunk boundaries and understand how ideas relate. The principle of “one idea per section” keeps each chunk focused on a single concept, making it easier for AI systems to extract and synthesize. Q&A pairs, bulleted lists, and HTML tables are particularly effective because they create natural chunk boundaries and are easy to extract. Semantic clarity is paramount: use precise, specific language rather than vague terms, and avoid long walls of text that blur multiple concepts together. For example, a well-structured chunk might read: “What is crawl budget? Crawl budget refers to the limited number of URLs that search engines and AI systems will crawl on your site within a given timeframe. Optimizing crawl budget ensures that AI crawlers spend their resources on high-value content rather than low-priority pages.” AmICited.com tracks which content chunks from your site get cited in AI-generated answers, helping you understand which content structures perform best.
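To check whether each section of a page stands on its own, it can help to split the page the way a heading-based retriever might. This Python sketch is hypothetical: `HeadingChunker` and `chunk_html` are illustrative names, and AI platforms do not publish their chunking rules, so treat the output as an approximation for reviewing your own content, not a model of any specific system.

```python
from html.parser import HTMLParser
from typing import List, Tuple

HEADING_TAGS = {"h1", "h2", "h3"}
SKIP_TAGS = {"script", "style"}  # code, not readable content


class HeadingChunker(HTMLParser):
    """Split an HTML page into (heading, text) chunks at h1-h3 boundaries."""

    def __init__(self) -> None:
        super().__init__()
        self.chunks: List[Tuple[str, str]] = []
        self._heading_parts: List[str] = []
        self._body_parts: List[str] = []
        self._in_heading = False
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in SKIP_TAGS:
            self._skip_depth += 1
        elif tag in HEADING_TAGS:
            self._flush()  # a new heading closes the previous chunk
            self._in_heading = True

    def handle_endtag(self, tag):
        if tag in SKIP_TAGS and self._skip_depth:
            self._skip_depth -= 1
        elif tag in HEADING_TAGS:
            self._in_heading = False

    def handle_data(self, data):
        text = " ".join(data.split())  # collapse whitespace
        if not text or self._skip_depth:
            return
        target = self._heading_parts if self._in_heading else self._body_parts
        target.append(text)

    def _flush(self):
        heading = " ".join(self._heading_parts)
        body = " ".join(self._body_parts)
        if heading or body:
            self.chunks.append((heading, body))
        self._heading_parts = []
        self._body_parts = []

    def close(self):
        super().close()
        self._flush()  # emit the final chunk


def chunk_html(html: str) -> List[Tuple[str, str]]:
    """Return the page's (heading, text) chunks in document order."""
    parser = HeadingChunker()
    parser.feed(html)
    parser.close()
    return parser.chunks
```

Calling `chunk_html` on a page with an `<h1>Crawl budget</h1>` section followed by an `<h2>Why it matters</h2>` section returns two `(heading, text)` pairs. Reading each pair in isolation is a quick test of whether that chunk is self-contained.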

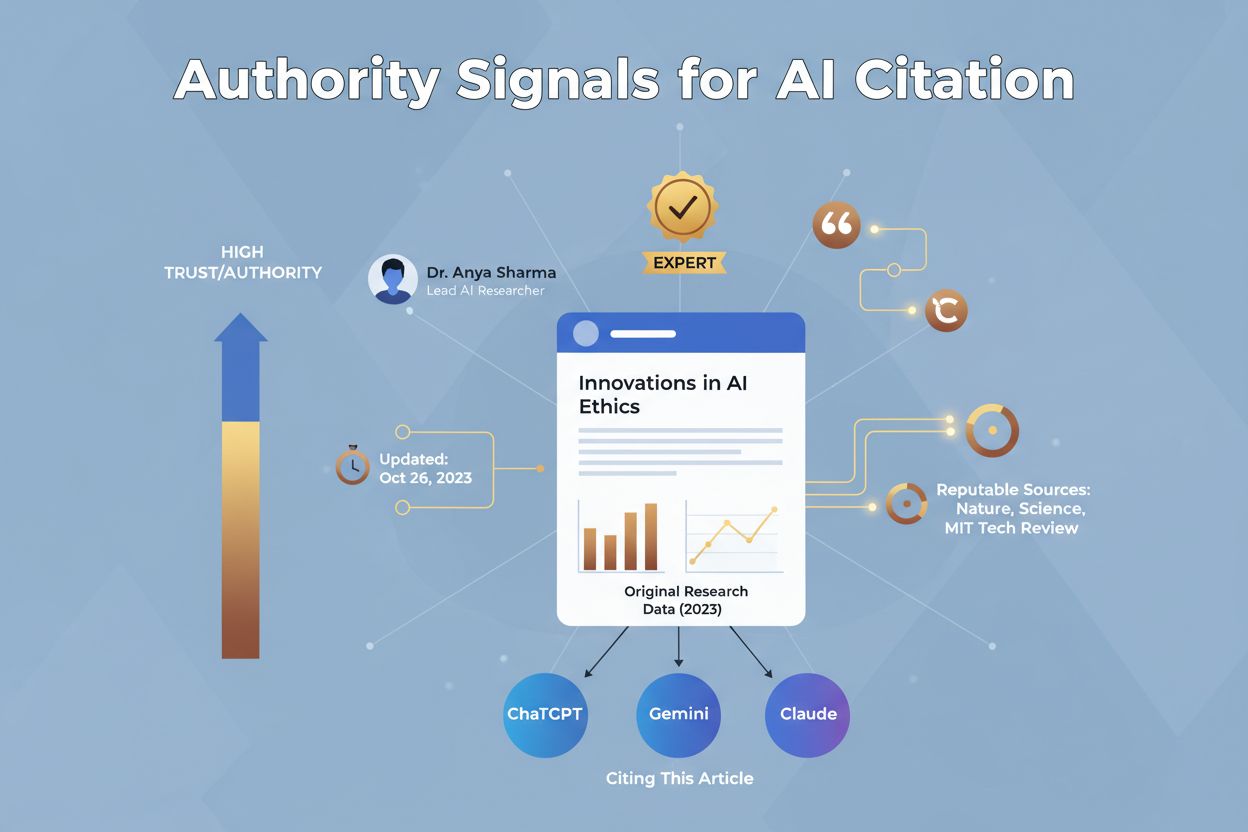

AI systems favor authoritative, trustworthy sources when synthesizing answers, which makes authority signals central to AI Discovery Optimization. Google's E-E-A-T framework (Experience, Expertise, Authoritativeness, Trustworthiness), from its Search Quality Rater Guidelines, is a useful lens for the signals that make content more likely to be cited. Experience comes from real-world application and case studies; Expertise is demonstrated through deep, accurate knowledge of your subject matter; Authoritativeness is built through recognition by other experts and reputable sources; and Trustworthiness is established through transparent, fact-based claims with proper attribution. Author bylines with credentials and structured author schema markup help AI systems understand who created the content and assess their expertise. Original research, unique datasets, and proprietary insights are significantly more likely to be cited than repurposed or generic content, because AI systems value primary sources. External citations from reputable sources and mentions on authoritative websites strengthen your content’s perceived authority. Fresh, regularly updated content with visible timestamps signals that information is current and reliable, which matters most for topics where accuracy and timeliness are critical. Fact-based claims supported by sources, studies, or data are more likely to be cited than unsupported assertions. Building authority requires a long-term commitment to creating original, well-researched content that earns recognition from other experts and publications. AmICited.com helps you monitor how often your content is cited in AI-generated answers and the sentiment of those citations, providing feedback on your authority-building efforts.
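Author credentials and timestamps can be expressed as schema.org JSON-LD. This Python sketch builds an `Article` with a `Person` author plus `datePublished`, `dateModified`, and `citation`; these are real schema.org properties, but the helper names, the author, and the URLs in the usage example are hypothetical, and how much weight any AI system gives this markup is not publicly documented.

```python
import json
from datetime import date
from typing import Any, Dict, List


def build_article_jsonld(
    headline: str,
    author_name: str,
    author_job_title: str,
    published: date,
    modified: date,
    sources: List[str],
) -> Dict[str, Any]:
    """Build schema.org Article data with a Person author and explicit dates."""
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {
            "@type": "Person",
            "name": author_name,
            "jobTitle": author_job_title,  # the author's credentials
        },
        "datePublished": published.isoformat(),
        "dateModified": modified.isoformat(),  # freshness signal
        "citation": sources,  # sources backing the article's claims
    }


def to_script_tag(data: Dict[str, Any]) -> str:
    """Serialize as a JSON-LD <script> tag; escaping "</" keeps the tag intact."""
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'
```

For example, `to_script_tag(build_article_jsonld("What is crawl budget?", "Jane Doe", "Head of SEO", date(2025, 1, 10), date(2025, 6, 1), ["https://example.com/crawl-study"]))` produces a tag to place in the page's HTML. Escaping `</` prevents a stray `</script>` inside a string value from ending the tag early.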

Measuring the success of your AI Discovery Optimization efforts requires tracking metrics distinct from traditional SEO analytics. Begin by tracking AI referral traffic separately in your analytics platform, creating a dedicated channel for traffic from AI platforms like ChatGPT, Gemini, Perplexity, and Claude to understand its growth trajectory and user behavior. Monitor brand mentions across major AI platforms by regularly checking how often your brand, products, or content appear in AI-generated answers for relevant queries. Assess sentiment of these mentions—whether they’re positive, neutral, or negative—to understand how AI systems are positioning your brand relative to competitors. Track citation frequency to identify which content pieces are most valuable to AI systems and which topics deserve more investment. Monitor AI crawler behavior in your server logs by analyzing crawl frequency, which URLs are being accessed, HTTP response codes, and changes in crawling patterns over time to ensure your optimization efforts are effective. Tools like Goodie, Profound, and Similarweb provide AI visibility tracking, but AmICited.com serves as the primary platform for monitoring how AI systems reference and cite your brand across ChatGPT, Gemini, Perplexity, Claude, and other AI search platforms. Establish baseline metrics for your current AI visibility, then track progress quarterly to measure the impact of your optimization efforts.
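Crawler behavior can be summarized directly from server logs. This minimal Python sketch assumes access logs in the Apache/Nginx “combined” format and uses simple user-agent substring matching; `COMBINED_LOG`, `CRAWLER_TOKENS`, and `summarize_ai_crawls` are illustrative names, and other log formats would need a different pattern.

```python
import re
from collections import Counter
from typing import Iterable

# "Combined" log format: ip ident user [time] "request" status size "referer" "user-agent"
COMBINED_LOG = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<ua>[^"]*)"'
)

# User-agent substrings for the AI crawlers discussed above (verify per vendor).
CRAWLER_TOKENS = ("GPTBot", "ClaudeBot", "PerplexityBot", "Googlebot", "bingbot", "CCBot")


def summarize_ai_crawls(lines: Iterable[str]) -> Counter:
    """Count AI crawler hits keyed by (crawler, path, HTTP status)."""
    hits: Counter = Counter()
    for line in lines:
        match = COMBINED_LOG.match(line)
        if match is None:
            continue  # skip lines in other formats
        ua = match.group("ua").lower()
        crawler = next((t for t in CRAWLER_TOKENS if t.lower() in ua), None)
        if crawler is not None:
            hits[(crawler, match.group("path"), match.group("status"))] += 1
    return hits
```

Feeding it the lines of an access log (for example, an open file object) yields counts keyed by `(crawler, path, status)`, which makes it easy to spot crawled URLs that return errors or crawl activity that shifts over time.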

Implementing AI Discovery Optimization requires a systematic approach that combines technical, content, and monitoring strategies:

- Audit your current crawlability using tools that analyze your robots.txt, llms.txt, server logs, and content structure to identify barriers to AI discovery.
- Implement server-side rendering for all critical content pages so AI crawlers can access information in the initial HTML response.
- Optimize your content structure with clear heading hierarchies, semantic HTML, and schema.org markup that help AI systems understand your content.
- Create original, authoritative content with proper author credentials, citations, and timestamps that signal expertise and trustworthiness to AI systems.
- Build topical authority through hub-and-spoke content clusters, where pillar pages provide overviews and cluster pages dive deep into specific subtopics, helping AI systems recognize your expertise.
- Monitor and adjust your robots.txt configuration to give AI crawlers access to valuable content while blocking sensitive or low-value sections.
- Track AI referral traffic and brand mentions using AmICited.com to monitor how AI systems reference your content and identify optimization opportunities.
- Continuously update and refresh key content to maintain freshness signals and accuracy.
- Benchmark against competitors to understand where you’re winning and losing in AI visibility.
- Implement structured data across your site to help AI systems understand content context and relationships.
- Optimize page speed so AI crawlers can retrieve content within their processing timeouts.
- Test and iterate based on performance data, adjusting your strategy as AI systems evolve.

By combining these practices with tools like AmICited.com for monitoring and FlowHunt.io for content optimization and automation, you can build a comprehensive AI Discovery Optimization strategy that helps your content reach, and be cited by, AI systems.
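The hub-and-spoke structure can be checked mechanically once you have a map of internal links (exported from a site crawler, for example). This Python sketch is hypothetical: `missing_cluster_links` and the link map are illustrative, and it only verifies the basic pattern of the pillar page linking to every cluster page and each cluster page linking back.

```python
from typing import Dict, List, Set


def missing_cluster_links(
    pillar: str, clusters: List[str], links: Dict[str, Set[str]]
) -> List[str]:
    """Report hub-and-spoke links absent from an internal-link map.

    `links` maps each page path to the set of paths it links to.
    """
    missing: List[str] = []
    for page in clusters:
        if page not in links.get(pillar, set()):
            missing.append(f"{pillar} -> {page}")  # hub should link out to spoke
        if pillar not in links.get(page, set()):
            missing.append(f"{page} -> {pillar}")  # spoke should link back to hub
    return missing
```

An empty list means every pillar-cluster pair links both ways; otherwise each entry names one missing link, such as `/ai-discovery/schema -> /ai-discovery`.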

Traditional SEO focuses on ranking pages in search engine results, while AI Discovery Optimization ensures content is included in AI-generated answers. AI systems break content into chunks and synthesize answers from multiple sources, requiring different optimization strategies focused on content clarity, structure, and authority rather than keyword matching and backlinks.

You should allow the major AI crawlers, including GPTBot (OpenAI), ClaudeBot (Anthropic), PerplexityBot, Googlebot, and Bingbot; Google’s use of your content for Gemini is governed separately by the Google-Extended robots.txt token. You can, however, selectively block crawlers if your content is sensitive or proprietary. Use tools like AmICited.com to monitor which crawlers are accessing your site and their crawl patterns.
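Because any client can send a GPTBot or ClaudeBot user-agent string, firewall allowlists are more reliable when they check the requesting IP address. Several crawler operators publish their IP ranges, and Google documents a reverse-DNS procedure for verifying Googlebot. This Python sketch uses the standard `ipaddress` module; `PUBLISHED_RANGES` holds placeholder documentation ranges (RFC 5737), not any vendor’s real addresses, and `is_verified_crawler` is a hypothetical helper.

```python
import ipaddress
from typing import Dict, List

# Placeholder ranges from the RFC 5737 documentation blocks, NOT real vendor
# addresses. Replace them with the ranges each operator actually publishes.
PUBLISHED_RANGES: Dict[str, List[str]] = {
    "GPTBot": ["192.0.2.0/24"],
    "ClaudeBot": ["198.51.100.0/25"],
}


def is_verified_crawler(claimed_bot: str, client_ip: str) -> bool:
    """True only if client_ip falls inside a range published for claimed_bot."""
    networks = PUBLISHED_RANGES.get(claimed_bot)
    if not networks:
        return False  # no published ranges: cannot verify
    try:
        addr = ipaddress.ip_address(client_ip)
    except ValueError:
        return False  # malformed address: treat as unverified
    return any(addr in ipaddress.ip_network(cidr) for cidr in networks)
```

With these placeholders, `is_verified_crawler("GPTBot", "192.0.2.17")` returns `True`, while the same claim from `203.0.113.9` returns `False` and should be treated as a possible impostor.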

Most AI crawlers cannot execute JavaScript, so content loaded dynamically via JavaScript is invisible to them. Server-side rendering puts all critical content in the initial HTML response, making it immediately accessible to AI systems that have limited processing time (commonly cited as 1-5 seconds) to retrieve and index your content.
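A quick way to test this is to fetch a page without a browser (for example with `urllib.request.urlopen`) and check whether your key phrases appear in the raw HTML. This Python sketch performs the check on an HTML string; `missing_from_initial_html` is a hypothetical helper, and it ignores `<script>` and `<style>` bodies because text that a script would inject is not part of the initial HTML a non-rendering crawler reads.

```python
from html.parser import HTMLParser
from typing import List


class _InitialHtmlText(HTMLParser):
    """Collect the text a non-JavaScript crawler could read from raw HTML."""

    def __init__(self) -> None:
        super().__init__()
        self.parts: List[str] = []
        self._in_script_or_style = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._in_script_or_style += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._in_script_or_style:
            self._in_script_or_style -= 1

    def handle_data(self, data):
        # Script/style bodies are code; any text they would render only
        # exists after JavaScript runs, so it is excluded here.
        if not self._in_script_or_style:
            self.parts.append(data)


def _normalize(text: str) -> str:
    return " ".join(text.split()).lower()


def missing_from_initial_html(html: str, required_phrases: List[str]) -> List[str]:
    """Return the phrases that do not appear in the page's server-rendered text."""
    parser = _InitialHtmlText()
    parser.feed(html)
    parser.close()
    visible = _normalize(" ".join(parser.parts))
    return [p for p in required_phrases if _normalize(p) not in visible]
```

For a client-rendered shell whose heading is injected by a script, the function reports the heading as missing; for a server-rendered version of the same page, it returns an empty list.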

Track AI referral traffic separately in your analytics, monitor brand mentions and sentiment in AI-generated answers using tools like AmICited.com or Goodie, and benchmark your visibility against competitors. Also monitor AI crawler behavior in server logs to ensure your content is being accessed and indexed by AI systems.
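To track AI referral traffic as its own channel, referrer URLs can be mapped to AI platforms. This Python sketch assumes the referrer hostnames in `AI_REFERRER_HOSTS`; they are real platform domains, but whether and how each platform passes a referrer varies, so check the hostnames that actually appear in your analytics. `ai_referral_source` is a hypothetical helper name.

```python
from typing import Optional
from urllib.parse import urlparse

# Assumed referrer hostnames per platform; confirm against your own analytics.
AI_REFERRER_HOSTS = {
    "chatgpt.com": "ChatGPT",
    "chat.openai.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "gemini.google.com": "Gemini",
    "claude.ai": "Claude",
    "copilot.microsoft.com": "Copilot",
}


def ai_referral_source(referrer: str) -> Optional[str]:
    """Return the AI platform for a referrer URL, or None for other traffic."""
    host = (urlparse(referrer).hostname or "").lower()
    for domain, label in AI_REFERRER_HOSTS.items():
        # Match the domain itself or any subdomain (e.g. www.perplexity.ai).
        if host == domain or host.endswith("." + domain):
            return label
    return None
```

The subdomain check matches `www.perplexity.ai` but not look-alike hosts such as `notchatgpt.com`, because it requires a dot before the registered domain.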

AI systems break content into semantic chunks (self-contained passages) for retrieval and synthesis. Each chunk should be independently understandable and optimized for extraction. Clear heading hierarchy, concise answers, and structured formatting help AI systems identify and extract valuable chunks that can be used in AI-generated answers.

Original research is very important. AI systems prioritize original, authoritative content with unique data and insights: original research, surveys, and unique datasets are significantly more likely to be cited in AI-generated answers than repurposed or generic content, which makes original research a key component of your AI Discovery Optimization strategy.

You can block specific AI crawlers with robots.txt directives; llms.txt is a guide to your content for language models, not an access-control mechanism. However, blocking a crawler means your content is likely to be left out of the AI-generated answers that depend on it, reducing your visibility in AI search platforms. Consider the trade-offs carefully before blocking, as AI referrals have grown 357% year-over-year.

Regularly update key content to maintain freshness signals. Add timestamps to show when content was last updated. AI systems prioritize current, accurate information, so refreshing content quarterly or when new information becomes available is recommended for maintaining strong AI discovery performance.
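A simple staleness report can flag pages that are due for a refresh. This Python sketch treats 90 days as an approximation of “quarterly”; `stale_pages` and the `last_updated` mapping (which you might build from your CMS or from sitemap `lastmod` values) are hypothetical.

```python
from datetime import date, timedelta
from typing import Dict, List


def stale_pages(
    last_updated: Dict[str, date], today: date, max_age_days: int = 90
) -> List[str]:
    """Return URLs (sorted) whose last update is older than max_age_days."""
    cutoff = today - timedelta(days=max_age_days)
    return sorted(url for url, updated in last_updated.items() if updated < cutoff)
```

With `today=date(2025, 7, 1)`, a page last updated on 2025-03-15 is reported as stale, while one updated on 2025-06-20 is not.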
