How to Dispute and Correct Inaccurate Information in AI Responses

Learn how to dispute inaccurate AI information, report errors to ChatGPT and Perplexity, and implement strategies to ensure your brand is accurately represented...

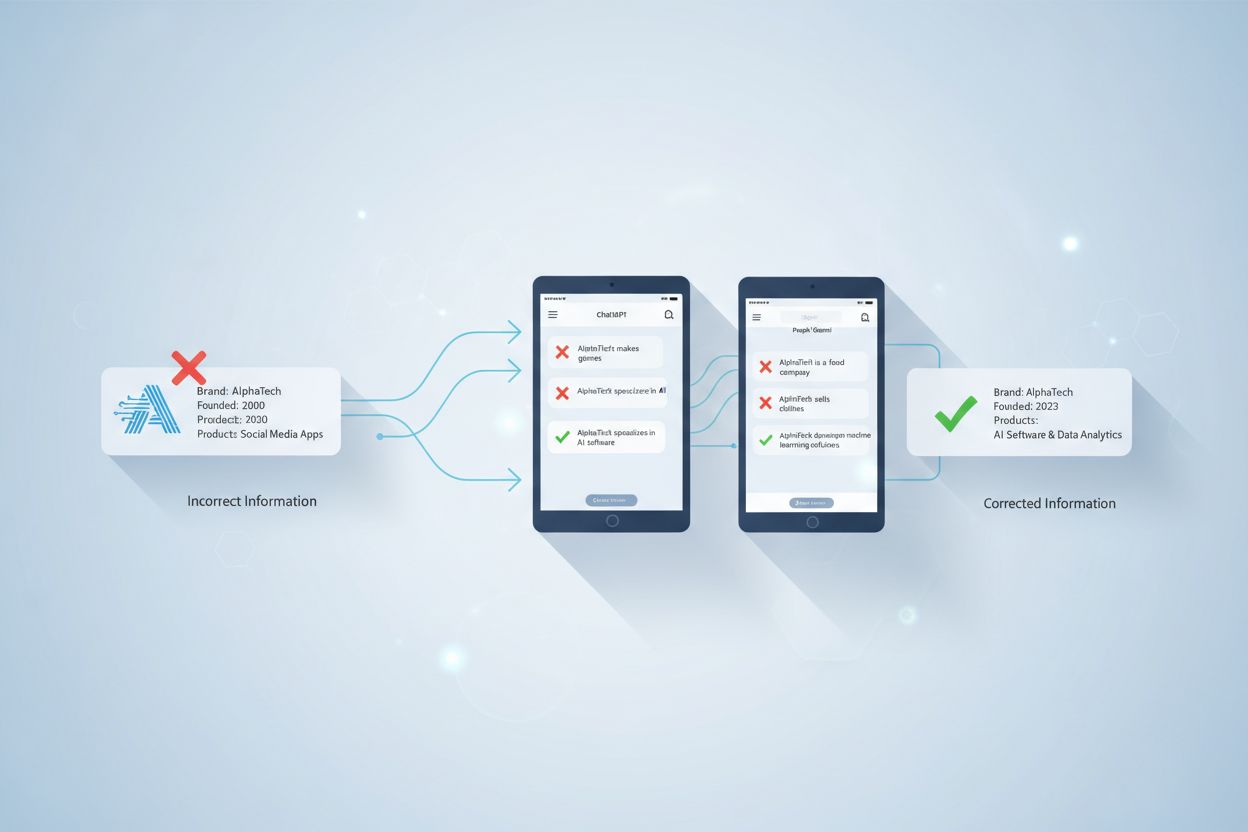

AI Misinformation Correction refers to strategies and tools for identifying and addressing incorrect brand information that appears in AI-generated answers from systems like ChatGPT, Gemini, and Perplexity. It involves monitoring how AI systems represent brands and implementing source-level fixes to ensure accurate information is distributed across trusted platforms. Unlike traditional fact-checking, it focuses on correcting the sources that AI systems trust rather than the AI outputs themselves. This is essential for maintaining brand reputation and accuracy in an AI-driven search environment.

AI Misinformation Correction refers to the strategies, processes, and tools used to identify and address incorrect, outdated, or misleading information about brands that appears in AI-generated answers from systems like ChatGPT, Gemini, and Perplexity. Recent research shows that approximately 45% of AI queries produce erroneous answers, making brand accuracy in AI systems a critical concern for businesses. Unlike traditional search results where brands can control their own listings, AI systems synthesize information from multiple sources across the web, creating a complex landscape where misinformation can persist silently. The challenge isn’t just about correcting individual AI responses—it’s about understanding why AI systems get brand information wrong in the first place and implementing systematic fixes at the source level.

AI systems don’t invent brand information from scratch; they assemble it from what already exists across the internet. However, this process creates several predictable failure points that lead to brand misrepresentation:

| Root Cause | How It Happens | Business Impact |
|---|---|---|
| Source Inconsistency | Brand described differently across websites, directories, and articles | AI infers wrong consensus from conflicting information |
| Outdated Authoritative Sources | Old Wikipedia entries, directory listings, or comparison pages contain incorrect data | Newer corrections are ignored because older sources have higher authority signals |
| Entity Confusion | Similar brand names or overlapping categories confuse AI systems | Competitors get credited for your capabilities or brand is omitted entirely |
| Missing Primary Signals | Lack of structured data, clear About pages, or consistent terminology | AI forced to infer information, leading to vague or incorrect descriptions |

When a brand is described differently across platforms, AI systems struggle to determine which version is authoritative. Rather than asking for clarification, they infer consensus based on frequency and perceived authority—even when that consensus is wrong. Small differences in brand names, descriptions, or positioning often get duplicated across platforms, and once repeated, these fragments become signals that AI models treat as reliable. The problem intensifies when outdated but high-authority pages contain incorrect information; AI systems often favor these older sources over newer corrections, especially if those corrections haven’t spread widely across trusted platforms.
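One way to see how a wrong consensus forms is to audit a single brand field across the places it is published. The following is a minimal sketch, not a description of how any AI system actually weighs sources: the source names, the brand "Acme Analytics", and the `audit_field` helper are all hypothetical, and the majority vote is only a rough stand-in for frequency-based inference.

```python
from collections import Counter


def normalize(value: str) -> str:
    """Lowercase and collapse whitespace so trivial differences are ignored."""
    return " ".join(value.lower().split())


def audit_field(listings: dict[str, str], canonical: str) -> dict:
    """Compare one brand field across sources against the canonical value.

    `listings` maps a (hypothetical) source name to the value it publishes.
    """
    target = normalize(canonical)
    counts = Counter(normalize(v) for v in listings.values())
    majority_value, _ = counts.most_common(1)[0]
    sources_to_fix = sorted(
        source for source, value in listings.items()
        if normalize(value) != target
    )
    return {
        "majority_value": majority_value,
        "majority_matches_canonical": majority_value == target,
        "sources_to_fix": sources_to_fix,
    }


# Illustrative data only: every name and value here is made up.
listings = {
    "own_website": "Acme Analytics",
    "directory_a": "Acme Analytic",
    "directory_b": "Acme Analytic",
    "directory_c": "Acme Analytic",
    "review_site": "ACME  Analytics",
}
report = audit_field(listings, canonical="Acme Analytics")
print(report)
```

In this made-up data the misspelled name outnumbers the correct one, so a frequency-based reader would settle on the wrong value. The `sources_to_fix` list is where correction effort would go first.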

Fixing incorrect brand information in AI systems requires a fundamentally different approach than traditional SEO cleanup. In traditional SEO, brands update their own listings, correct NAP (Name, Address, Phone) data, and optimize on-page content. AI brand correction focuses on changing what trusted sources say about your brand, not on controlling your own visibility. You don’t correct AI directly—you correct what AI trusts. Trying to “fix” AI answers by repeatedly stating incorrect claims (even to deny them) can backfire by reinforcing the association you’re trying to remove. AI systems recognize patterns, not intent. This means every correction must start at the source level, working backward from where AI systems actually learn information.

Before you can fix incorrect brand information, you need visibility into how AI systems currently describe your brand. Effective monitoring focuses on:

Manual checks alone are unreliable because AI answers vary by prompt, context, and update cycle. Structured monitoring tools provide the visibility needed to detect errors early, before they become entrenched in AI systems. Many brands don’t realize they’re being misrepresented in AI until a customer mentions it or a crisis emerges. Proactive monitoring prevents this by catching inconsistencies before they spread.
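Because answers vary by prompt, a structured check usually compares many collected answers against the same set of canonical facts. Below is a minimal sketch under stated assumptions: the answers are gathered separately (by hand or by a monitoring tool) and passed in as plain strings, the brand and facts are invented, and substring matching is a deliberately crude stand-in for whatever comparison a real tool performs.

```python
def check_answer(answer: str, required: list[str], forbidden: list[str]) -> dict:
    """Flag canonical facts that are absent and known-wrong claims that are present."""
    text = answer.lower()
    return {
        "missing_facts": [fact for fact in required if fact.lower() not in text],
        "wrong_claims": [claim for claim in forbidden if claim.lower() in text],
    }


def summarize(answers: dict[str, str], required: list[str], forbidden: list[str]) -> dict:
    """Check every prompt's answer and list the prompts that need attention."""
    results = {
        prompt: check_answer(answer, required, forbidden)
        for prompt, answer in answers.items()
    }
    flagged = [
        prompt for prompt, result in results.items()
        if result["missing_facts"] or result["wrong_claims"]
    ]
    return {"results": results, "flagged_prompts": flagged}


# Hypothetical prompts and answers, for illustration only.
answers = {
    "What is Acme Analytics?":
        "Acme Analytics is a reporting platform founded in 2015 and based in Austin.",
    "Who owns Acme Analytics?":
        "Acme Analytics, founded in 2009, was acquired by Globex.",
}
required = ["founded in 2015", "Austin"]
forbidden = ["founded in 2009", "acquired by"]

summary = summarize(answers, required, forbidden)
print(summary["flagged_prompts"])
```

Running the same prompt set on a schedule and storing each summary gives a simple history of when an error first appeared and whether it is fading.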

Once you’ve identified incorrect brand information, the fix must happen where AI systems actually learn from—not where the error merely appears. Effective source-level corrections include:

The key principle is this: corrections work only when applied at the source level. Changing what appears in AI outputs without fixing the underlying sources is temporary at best. AI systems continuously re-evaluate signals as new content appears and older pages resurface. A correction that doesn’t address the root source will eventually be overwritten by the original misinformation.

When correcting inaccurate brand information across directories, marketplaces, or AI-fed platforms, most systems require verification that links the brand to legitimate ownership and use. Commonly requested documentation includes:

The goal isn’t volume—it’s consistency. Platforms evaluate whether documentation, listings, and public-facing brand data align. Having these materials organized in advance reduces rejection cycles and accelerates approval when fixing incorrect brand information at scale. Consistency across sources signals to AI systems that your brand information is reliable and authoritative.

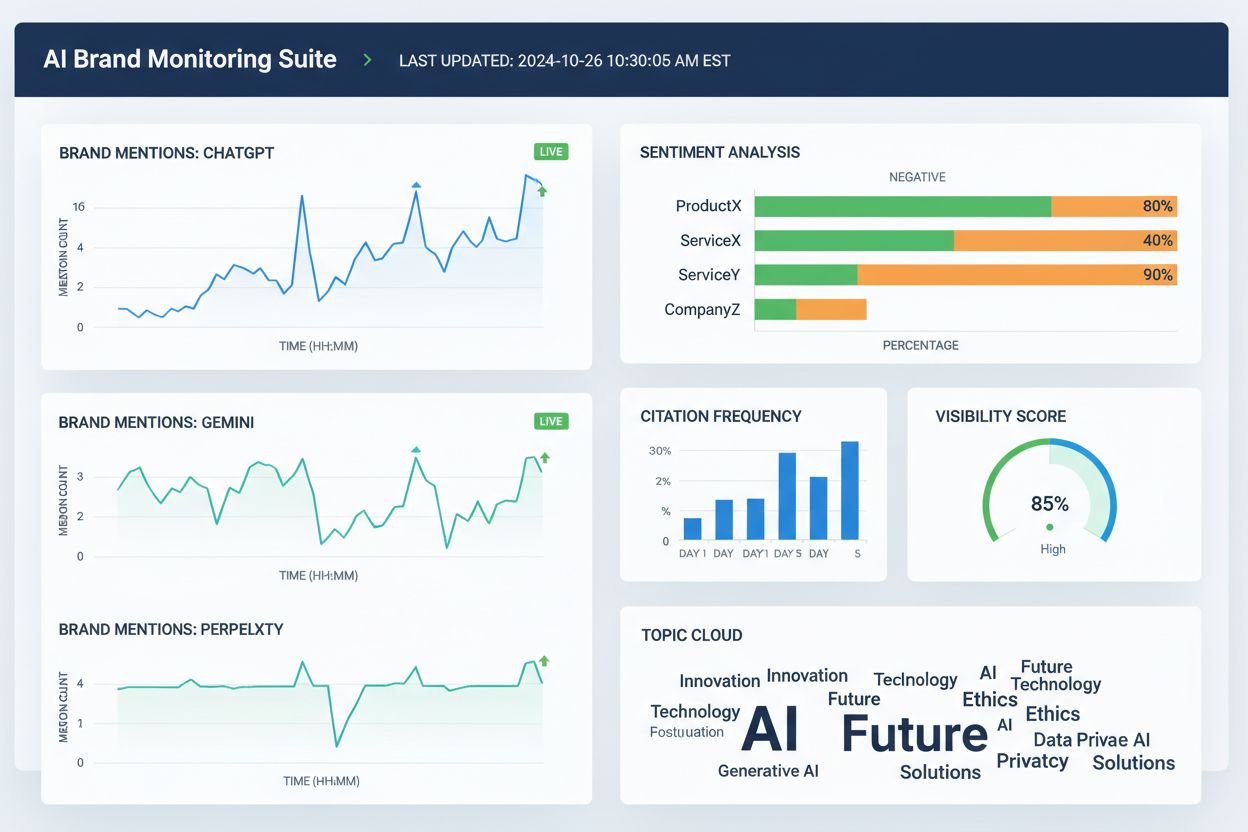

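Several tools now help teams track brand representation across AI search platforms and the broader web. While capabilities overlap, they generally focus on visibility, attribution, and consistency: Wellows monitors mentions and sentiment across ChatGPT, Gemini, and Perplexity; Profound compares visibility across LLMs; Otterly.ai analyzes brand sentiment in AI responses; BrandBeacon provides positioning analytics; Ahrefs Brand Radar tracks web mentions; and AmICited.com monitors how brands are cited and represented across AI systems.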

These tools don’t correct incorrect brand information directly. Instead, they help teams detect errors early, identify brand data discrepancies before they spread, validate whether source-level fixes improve AI accuracy, and monitor long-term trends in AI attribution and visibility. Used together with source corrections and documentation, monitoring tools provide the feedback loop required to fix incorrect brand information sustainably.

AI search accuracy improves when brands are clearly defined entities, not vague participants in a category. To reduce brand misrepresentation in AI systems, focus on:

The objective isn’t to say more—it’s to say the same thing everywhere. When AI systems encounter consistent brand definitions across authoritative sources, they stop guessing and start repeating the correct information. This step is especially important for brands experiencing incorrect mentions, competitor attribution, or omission from relevant AI answers. Even after you fix incorrect brand info, accuracy isn’t permanent. AI systems continuously re-evaluate signals, which makes ongoing clarity essential.
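One practical way to "say the same thing everywhere" is to keep a single canonical brand record and generate structured data from it, so the About page, directory submissions, and markup never drift apart. The sketch below emits schema.org `Organization` markup as JSON-LD. The brand record, its field names, and the URLs are hypothetical placeholders; only the schema.org property names (`name`, `url`, `description`, `sameAs`) come from the public vocabulary.

```python
import json


def organization_jsonld(brand: dict) -> str:
    """Build schema.org Organization JSON-LD from one canonical brand record."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": brand["name"],
        "url": brand["url"],
        "description": brand["description"],
        # sameAs lists official profiles that refer to the same entity.
        "sameAs": brand["profiles"],
    }
    return json.dumps(data, indent=2)


# Hypothetical canonical record; replace with the brand's real, verified details.
brand = {
    "name": "Acme Analytics",
    "url": "https://www.example.com",
    "description": "Acme Analytics is a reporting platform for retailers.",
    "profiles": [
        "https://www.example.com/profile-one",
        "https://www.example.com/profile-two",
    ],
}

markup = organization_jsonld(brand)
print(markup)
```

The output would typically be embedded in a page inside a `<script type="application/ld+json">` element. Reusing the same record for every listing keeps the description identical wherever it appears.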

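There is no fixed timeline for correcting brand misrepresentation in AI systems. AI models update based on signal strength and consensus, not submission dates. Typical patterns: minor factual corrections usually appear within 2-4 weeks, entity-level clarifications take 1-3 months, and competitive displacement can take 3-6 months or longer.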

Early progress rarely shows up as a sudden “fixed” answer. Instead, look for indirect signals: reduced variability in AI responses, fewer conflicting descriptions, more consistent citations across sources, and gradual inclusion of your brand where it was previously omitted. Stagnation looks different—if the same incorrect phrasing persists despite multiple corrections, it usually indicates that the original source hasn’t been fixed or stronger reinforcement is needed elsewhere.
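"Reduced variability" can be tracked with a rough number rather than by impression. The sketch below averages pairwise text similarity across a batch of AI responses to the same question, using the standard library's `difflib.SequenceMatcher`. The sample responses are invented, and character-level similarity is only an approximation of agreement in meaning, so the score is best read as a trend over time rather than an absolute measure.

```python
from difflib import SequenceMatcher
from itertools import combinations


def mean_pairwise_similarity(responses: list[str]) -> float:
    """Average similarity (0.0 to 1.0) over every pair of responses."""
    pairs = list(combinations(responses, 2))
    if not pairs:
        return 1.0  # Zero or one response: nothing to disagree with.
    total = sum(
        SequenceMatcher(None, a.lower(), b.lower()).ratio()
        for a, b in pairs
    )
    return total / len(pairs)


# Hypothetical responses collected before and after source-level fixes.
before = [
    "Acme is a CRM vendor.",
    "Acme makes accounting software.",
    "Acme Analytics is a reporting platform.",
]
after = [
    "Acme Analytics is a reporting platform.",
    "Acme Analytics is a reporting platform for retailers.",
    "Acme Analytics is a reporting platform.",
]

score_before = mean_pairwise_similarity(before)
score_after = mean_pairwise_similarity(after)
print(f"before: {score_before:.2f}, after: {score_after:.2f}")
```

A score that rises across monitoring cycles suggests the descriptions are converging. A flat score alongside the same incorrect phrasing matches the stagnation pattern described above.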

The most reliable way to fix incorrect brand information is to reduce the conditions that allow it to emerge in the first place. Effective prevention includes:

Brands that treat AI visibility as a living system—not a one-time cleanup project—recover faster from errors and experience fewer instances of repeated brand misrepresentation. Prevention isn’t about controlling AI outputs. It’s about maintaining clean, consistent inputs that AI systems can confidently repeat. As AI search continues to evolve, the brands that succeed are those that recognize misinformation correction as an ongoing process requiring continuous monitoring, source management, and strategic reinforcement of accurate information across trusted platforms.

AI Misinformation Correction is the process of identifying and fixing incorrect, outdated, or misleading information about brands that appears in AI-generated answers. Unlike traditional fact-checking, it focuses on correcting the sources that AI systems trust (directories, articles, listings) rather than trying to directly edit AI outputs. The goal is to ensure that when users ask AI systems about your brand, they receive accurate information.

AI systems like ChatGPT, Gemini, and Perplexity now influence how millions of people learn about brands. Research shows 45% of AI queries produce errors, and incorrect brand information can damage reputation, confuse customers, and lead to lost business. Unlike traditional search where brands control their own listings, AI systems synthesize information from multiple sources, making brand accuracy harder to control but more critical to manage.

Asking an AI system to correct itself directly is not effective. AI systems don't store brand facts in editable locations; they synthesize answers from external sources. Repeatedly asking AI to 'fix' information can actually reinforce hallucinations by strengthening the association you're trying to remove. Instead, apply corrections at the source level: update directories, fix outdated listings, and publish accurate information on trusted platforms.

There's no fixed timeline because AI systems update based on signal strength and consensus, not submission dates. Minor factual corrections typically appear within 2-4 weeks, entity-level clarifications take 1-3 months, and competitive displacement can take 3-6 months or longer. Progress rarely shows as a sudden 'fixed' answer—instead, look for reduced variability in responses and more consistent citations across sources.

Several tools now track brand representation across AI platforms: Wellows monitors mentions and sentiment across ChatGPT, Gemini, and Perplexity; Profound compares visibility across LLMs; Otterly.ai analyzes brand sentiment in AI responses; BrandBeacon provides positioning analytics; Ahrefs Brand Radar tracks web mentions; and AmICited.com specializes in monitoring how brands are cited and represented across AI systems. These tools help detect errors early and validate whether corrections are working.

AI hallucinations occur when an AI system generates information that isn't grounded in its training data or that results from faulty decoding. AI misinformation is broader: it covers any false or misleading information in AI outputs, whether it stems from hallucinations, outdated sources, entity confusion, or inconsistent data across platforms. Misinformation correction addresses both hallucinations and the source-level inaccuracies that lead to incorrect brand representation.

Monitor how AI systems describe your brand by asking them questions about your company, products, and positioning. Look for outdated information, incorrect descriptions, missing details, or competitor attribution. Use monitoring tools to track mentions across ChatGPT, Gemini, and Perplexity. Check if your brand is omitted from relevant AI answers. Compare AI descriptions to your official brand information to identify discrepancies.

Correcting AI misinformation is an ongoing process, not a one-time fix. AI systems continuously re-evaluate signals as new content appears and older pages resurface. A one-time correction without ongoing monitoring will eventually be overwritten by the original misinformation. Brands that succeed treat AI visibility as a living system: they maintain consistent brand definitions across sources, audit directories regularly, and monitor AI mentions continuously to catch new errors before they spread.
