Fine-Tuning

Fine-tuning definition: adapting pre-trained AI models for specific tasks through domain-specific training. Learn how fine-tuning improves model performance and...

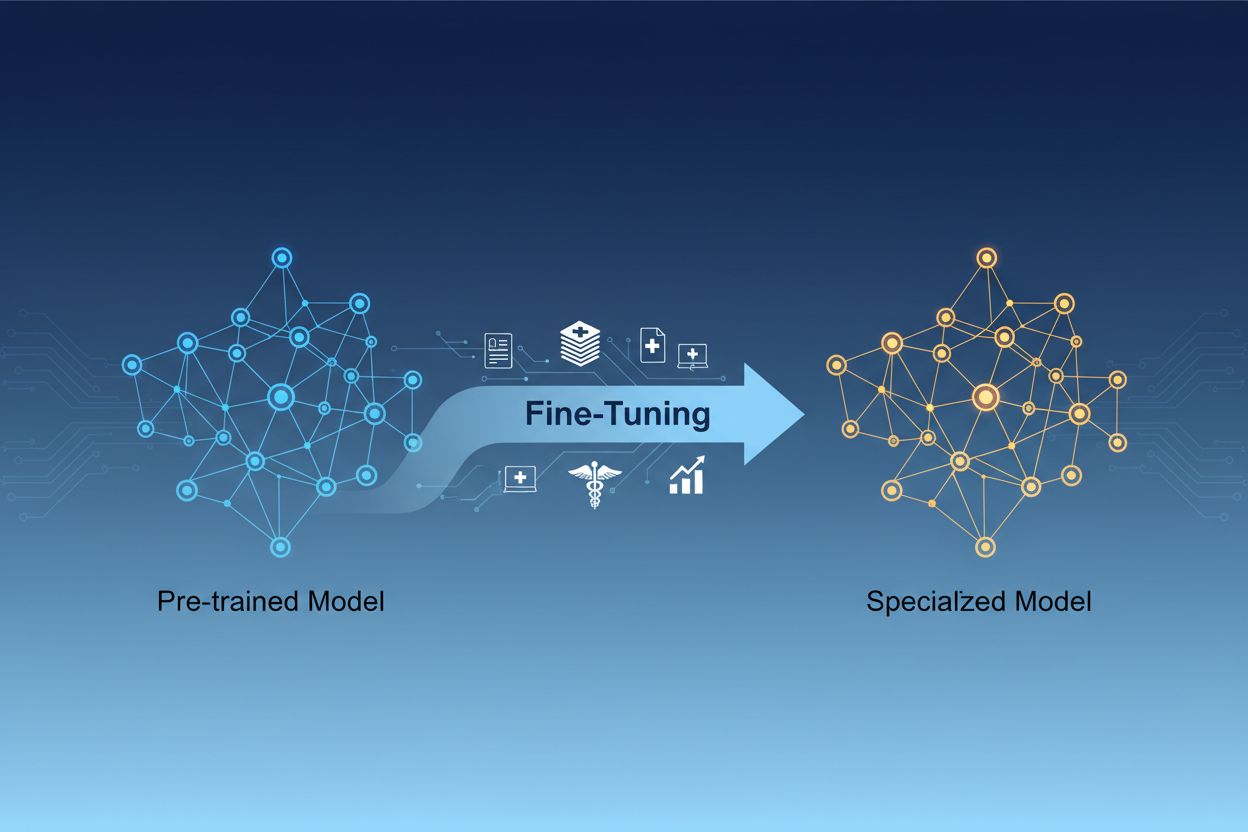

AI model fine-tuning is the process of adapting pre-trained artificial intelligence models to perform specific tasks or work with specialized data by adjusting their parameters through additional training on domain-specific datasets. This approach leverages existing foundational knowledge while customizing models for particular business applications, enabling organizations to create highly specialized AI systems without the computational expense of training from scratch.


Rather than training a model from scratch, fine-tuning starts from the knowledge already embedded in a pre-trained model and adjusts its parameters through additional training on domain-specific or task-specific datasets. It combines the efficiency of transfer learning with the customization that particular business applications need, which is why it has become an essential technique in modern machine learning development.

The distinction between fine-tuning and training from scratch represents one of the most important decisions in machine learning development. When you train a model from scratch, you begin with randomly initialized weights and must teach the model everything about language patterns, visual features, or domain-specific knowledge using massive datasets and substantial computational resources. This approach can require weeks or months of training time and access to specialized hardware like GPUs or TPUs. Fine-tuning, by contrast, starts with a model that already understands fundamental patterns and concepts, requiring only a fraction of the data and computational power to adapt it to your specific needs. The pre-trained model has already learned general features during its initial training phase, so fine-tuning focuses on adjusting these features to match your particular use case. This efficiency gain makes fine-tuning the preferred approach for most organizations, as it reduces both time-to-market and infrastructure costs while often achieving superior performance compared to training smaller models from scratch.

| Aspect | Fine-Tuning | Training from Scratch |
|---|---|---|
| Training Time | Hours to weeks | Weeks to months |
| Data Requirements | Hundreds to millions of examples | Millions to billions of examples |
| Computational Cost | Moderate (single GPU often sufficient) | Extremely high (multiple GPUs/TPUs required) |
| Initial Knowledge | Leverages pre-trained weights | Starts with random initialization |
| Performance | Often superior with limited data | Can be better with massive datasets |
| Expertise Required | Intermediate | Advanced |
| Customization Level | High for specific tasks | Maximum flexibility |
| Infrastructure | Standard cloud resources | Specialized hardware clusters |

Fine-tuning has become a critical capability for organizations seeking to deploy AI solutions that deliver competitive advantages. By adapting pre-trained models to your specific business context, you can create AI systems that understand your industry terminology, customer preferences, and operational requirements. This customization lets businesses reach performance levels that generic, off-the-shelf models often cannot match, particularly in specialized domains like healthcare, legal services, or technical support. Because fine-tuning is cost-effective, even smaller organizations can now access enterprise-grade AI capabilities without massive infrastructure investments. Fine-tuned models can also be deployed faster, allowing companies to respond quickly to market opportunities and competitive pressures, and fine-tuning them periodically on new data keeps AI systems relevant and effective as business conditions evolve.

Key business benefits of fine-tuning include:

Several established techniques have emerged as best practices in the fine-tuning landscape, each with distinct advantages depending on your specific requirements. Full fine-tuning updates all parameters of the pre-trained model, providing maximum flexibility and often the best performance, but it requires significant computational resources and larger datasets to avoid overfitting. Parameter-efficient fine-tuning methods such as LoRA (Low-Rank Adaptation) and QLoRA have transformed the field by allowing effective model adaptation while updating only a small fraction of parameters, dramatically reducing memory requirements and training time. LoRA learns a low-rank update, the product of two small trainable matrices, that is added to selected weight matrices, capturing task-specific adaptations while the original pre-trained weights stay frozen; QLoRA applies the same idea to a base model quantized to 4-bit precision, cutting memory use further. Adapter modules represent another approach, inserting small trainable networks between layers of the frozen pre-trained model, enabling efficient fine-tuning with minimal additional parameters. Prompt-based fine-tuning optimizes input prompts (often learned "soft prompt" embeddings) rather than model weights, which is useful when the model's weights cannot or should not be modified. Instruction fine-tuning trains models to follow specific instructions and commands, which is particularly important for large language models that need to respond appropriately to diverse user requests. The choice among these techniques depends on your computational constraints, dataset size, performance requirements, and the specific model architecture you’re working with.
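To make the LoRA mechanism concrete, here is a minimal, dependency-free sketch for a single linear layer, assuming weights stored as plain Python lists. The class `LoRALinear`, its helper functions, and the `alpha / rank` scaling are illustrative assumptions, not the API of any fine-tuning library. The frozen weight `W` is never modified. Only the small matrices `A` and `B` would be trained, and because `B` starts at zero the adapted layer initially reproduces the pre-trained layer exactly.

```python
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a):
    return [list(col) for col in zip(*a)]


def add(a, b):
    return [[x + y for x, y in zip(row_a, row_b)] for row_a, row_b in zip(a, b)]


def scale(a, s):
    return [[x * s for x in row] for row in a]


class LoRALinear:
    """Hypothetical LoRA layer: frozen W (d_out x d_in) plus a trainable update B @ A."""

    def __init__(self, weight, rank, alpha=1.0, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(weight), len(weight[0])
        self.weight = weight  # pre-trained weights, kept frozen
        # A: small random values; B: zeros, so B @ A starts as the zero matrix.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]
        self.scaling = alpha / rank  # assumed alpha / r scaling convention

    def forward(self, x):
        """x is a batch of row vectors (n x d_in); returns n x d_out."""
        base = matmul(x, transpose(self.weight))
        low_rank = matmul(matmul(x, transpose(self.A)), transpose(self.B))
        return add(base, scale(low_rank, self.scaling))

    def merged_weight(self):
        """Fold the update into W for inference: W + (alpha / r) * B @ A."""
        return add(self.weight, scale(matmul(self.B, self.A), self.scaling))

    def trainable_parameters(self):
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])


W = [[1.0, 0.0, 2.0, 0.0],
     [0.0, 1.0, 0.0, 3.0],
     [1.0, 1.0, 1.0, 1.0],
     [0.5, 0.0, 0.0, 0.5]]
layer = LoRALinear(W, rank=1)
x = [[1.0, 2.0, 3.0, 4.0]]
print(layer.forward(x))  # → [[7.0, 14.0, 10.0, 2.5]], same as the frozen layer
print(layer.trainable_parameters(), "trainable vs", 4 * 4, "in W")  # → 8 trainable vs 16 in W
```

Because the learned update is just a matrix added to `W`, it can be merged into the weights after training (`merged_weight`), so the adapted model does not need extra computation at inference time.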

Fine-tuning large language models (LLMs) presents unique opportunities and challenges compared to fine-tuning smaller models or other types of neural networks. Modern LLMs like GPT-style models contain billions of parameters, making full fine-tuning computationally prohibitive for most organizations. This reality has driven the adoption of parameter-efficient techniques that allow effective adaptation of LLMs without the need for enterprise-scale infrastructure. Instruction fine-tuning has become particularly important for LLMs, where models are trained on examples of instructions paired with high-quality responses, enabling them to follow user directives more effectively. Reinforcement Learning from Human Feedback (RLHF) represents an advanced fine-tuning approach where models are further refined based on human preferences and evaluations, improving alignment with human values and expectations. The relatively small amount of task-specific data needed to fine-tune LLMs—often just hundreds or thousands of examples—makes this approach accessible to organizations without massive labeled datasets. However, fine-tuning LLMs requires careful attention to hyperparameter selection, learning rate scheduling, and preventing catastrophic forgetting, where the model loses previously learned capabilities while adapting to new tasks.
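Instruction fine-tuning data is commonly prepared by wrapping each instruction/response pair in a fixed prompt template and excluding the prompt tokens from the loss. The sketch below assumes a hypothetical `### Instruction: ... ### Response:` template and a toy whitespace tokenizer in place of a real subword tokenizer. The label value -100 mirrors PyTorch's default `ignore_index` for cross-entropy, but other frameworks may use a different convention.

```python
IGNORE_INDEX = -100  # assumption: label value the loss skips (PyTorch's default ignore_index)


def tokenize(text):
    """Toy whitespace tokenizer standing in for a real subword tokenizer."""
    return text.split()


def encode(text, vocab):
    """Map tokens to integer ids, adding unseen tokens to the vocabulary."""
    return [vocab.setdefault(tok, len(vocab)) for tok in tokenize(text)]


def build_example(instruction, response, vocab):
    """Format one instruction/response pair and mask the prompt out of the loss."""
    prompt = f"### Instruction: {instruction} ### Response:"  # hypothetical template
    prompt_ids = encode(prompt, vocab)
    response_ids = encode(response, vocab)
    # Labels line up with input_ids position by position; prompt positions are
    # masked so the model is trained only to produce the response.
    labels = [IGNORE_INDEX] * len(prompt_ids) + response_ids
    return {"input_ids": prompt_ids + response_ids, "labels": labels}


vocab = {}
example = build_example("Summarize the ticket", "Customer cannot log in", vocab)
print(example["input_ids"])  # → [0, 1, 2, 3, 4, 0, 5, 6, 7, 8, 9]
print(example["labels"])     # → [-100, -100, -100, -100, -100, -100, -100, 6, 7, 8, 9]
```

Whether the labels are then shifted by one position for next-token prediction depends on the training framework being used.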

Organizations across industries have discovered powerful applications for fine-tuned AI models that deliver measurable business value. Customer service automation represents one of the most prevalent use cases, where models are fine-tuned on company-specific support tickets, product information, and communication styles to create chatbots that handle customer inquiries with domain expertise and brand consistency. Medical and legal document analysis leverages fine-tuning to adapt general language models to understand specialized terminology, regulatory requirements, and industry-specific formats, enabling accurate information extraction and classification. Sentiment analysis and content moderation can be significantly improved by fine-tuning models on examples from your specific industry or community, capturing nuanced language patterns and context that generic models miss. Code generation and software development assistance benefits from fine-tuning on your organization’s codebase, programming conventions, and architectural patterns, enabling AI tools that generate code aligned with your standards. Recommendation systems are frequently fine-tuned on user behavior data and product catalogs to deliver personalized suggestions that drive engagement and revenue. Named entity recognition and information extraction from domain-specific documents—such as financial reports, scientific papers, or technical specifications—can be dramatically improved through fine-tuning on relevant examples. These diverse applications demonstrate that fine-tuning is not limited to any single industry or use case, but rather represents a fundamental capability for creating AI systems that deliver competitive advantages across virtually every business domain.

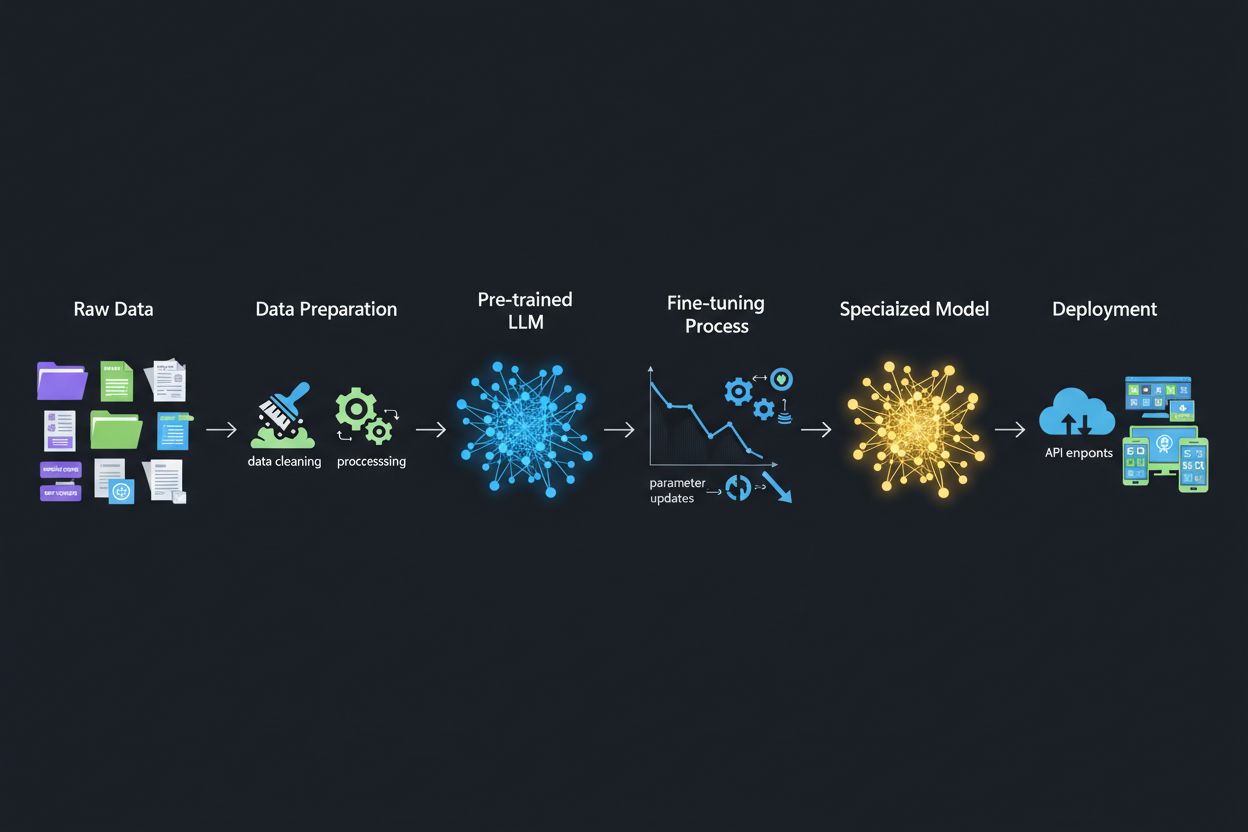

The fine-tuning process follows a structured workflow that begins with careful preparation and extends through deployment and monitoring:

1. Data preparation is the critical first step, requiring you to collect, clean, and format your domain-specific dataset in a way that matches the input-output structure expected by the pre-trained model. This dataset should be representative of the tasks your fine-tuned model will perform in production, and quality matters more than quantity: a smaller dataset of high-quality examples typically outperforms a larger dataset with inconsistent or noisy labels.
2. Train-validation-test split ensures you can properly evaluate model performance, with typical allocations of 70-80% for training, 10-15% for validation, and 10-15% for testing.
3. Hyperparameter selection involves choosing learning rates, batch sizes, number of training epochs, and other parameters that control the fine-tuning process; these choices significantly impact both performance and training efficiency.
4. Model initialization uses the pre-trained weights as a starting point, preserving the foundational knowledge while allowing parameters to adjust to your specific task.
5. Training execution involves iteratively updating model parameters on your training data while monitoring validation performance to detect overfitting.
6. Evaluation and iteration uses your test set to assess final performance and determine whether additional fine-tuning, different hyperparameters, or more training data would improve results.
7. Deployment preparation includes optimizing the model for inference speed and resource efficiency, potentially through quantization or distillation techniques.
8. Monitoring and maintenance in production ensures the model continues performing well as data distributions shift over time, with periodic retraining on new data to maintain accuracy.
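The train-validation-test split step can be sketched as follows. The sketch assumes an 80/10/10 allocation, which falls within the ranges given above, and an in-memory list of examples; `split_dataset` is a hypothetical helper. Shuffling with a fixed seed before slicing keeps the split reproducible across runs.

```python
import random


def split_dataset(examples, train_frac=0.8, val_frac=0.1, seed=42):
    """Shuffle reproducibly, then slice into train / validation / test lists."""
    if not (0 < train_frac < 1 and 0 <= val_frac < 1 and train_frac + val_frac < 1):
        raise ValueError("fractions must leave room for a test split")
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)  # fixed seed -> same split every run
    n_train = int(len(shuffled) * train_frac)
    n_val = int(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # remainder goes to the test set
    return train, val, test


data = list(range(1000))  # stand-in for 1,000 labeled examples
train, val, test = split_dataset(data)
print(len(train), len(val), len(test))  # → 800 100 100
```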

Fine-tuning, while more efficient than training from scratch, presents its own set of challenges that practitioners must navigate carefully. Overfitting occurs when models memorize training data rather than learning generalizable patterns, a particular risk when fine-tuning on small datasets; this can be mitigated through techniques like early stopping, regularization, and data augmentation. Catastrophic forgetting happens when fine-tuning causes models to lose previously learned capabilities, particularly problematic when adapting general-purpose models to specialized tasks; careful learning rate selection and techniques like knowledge distillation help preserve foundational knowledge. Data quality and labeling represent significant practical challenges, as fine-tuning requires high-quality labeled examples that accurately represent your target domain and use cases. Computational resource management requires balancing performance improvements against training time and infrastructure costs, particularly important when working with large models. Hyperparameter sensitivity means that fine-tuning performance can vary dramatically based on learning rate, batch size, and other settings, requiring systematic experimentation and validation.
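One way to apply knowledge distillation against catastrophic forgetting is to keep a frozen copy of the original pre-trained model as a "teacher." The fine-tuned model is then penalized when its output distribution drifts away from the teacher's on the same input. The sketch below assumes classification-style logits for a single example. The weighting `lam`, the temperature, and all function names are illustrative assumptions rather than a specific published recipe. Multiplying the KL term by the squared temperature is a common knowledge-distillation convention that keeps its scale comparable across temperatures.

```python
import math


def softmax(logits, temperature=1.0):
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, target):
    """Task loss: negative log-probability of the correct class."""
    return -math.log(softmax(logits)[target])


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions over the same outcomes."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def fine_tune_loss(student_logits, teacher_logits, target, lam=0.5, temperature=2.0):
    """Task loss plus a penalty for drifting from the frozen original model (assumed weighting)."""
    task = cross_entropy(student_logits, target)
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    distill = kl_divergence(p_teacher, p_student) * temperature ** 2
    return task + lam * distill


teacher = [2.0, 0.5, -1.0]  # frozen original model's logits (hypothetical values)
student = [2.2, 0.1, -0.8]  # fine-tuned model's logits on the same input (hypothetical)
print(fine_tune_loss(student, teacher, target=0))
print(fine_tune_loss(teacher, teacher, target=0) == cross_entropy(teacher, 0))  # → True
```

When the fine-tuned model still agrees with the original, the penalty vanishes and only the task loss remains. Raising `lam` trades task accuracy for closer retention of the original behavior.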

Best practices for successful fine-tuning include:

Organizations often face a choice between three complementary approaches for adapting AI models to specific tasks: fine-tuning, Retrieval-Augmented Generation (RAG), and prompt engineering. Prompt engineering involves crafting carefully worded instructions and examples to guide model behavior without modifying the model itself; this approach is fast and requires no training, but has limited effectiveness for complex tasks and cannot teach models genuinely new information. RAG augments model responses by retrieving relevant documents or data from external sources before generating answers, enabling models to access current information and domain-specific knowledge without parameter updates; this approach works well for knowledge-intensive tasks but adds latency and complexity to inference. Fine-tuning modifies model parameters to deeply embed task-specific knowledge and patterns, providing the best performance for well-defined tasks with sufficient training data, but requiring more time and computational resources than the other approaches. The optimal solution often involves combining these techniques: using prompt engineering for quick prototyping, RAG for knowledge-intensive applications, and fine-tuning for performance-critical systems where the investment in training is justified. Fine-tuning excels when you need consistent, high-performance behavior on specific tasks, when you have sufficient training data, and when the performance gains justify the development effort. RAG works best for applications requiring access to current or proprietary information that changes frequently. Prompt engineering serves as an excellent starting point for exploration and prototyping before committing to more resource-intensive approaches. Understanding the strengths and limitations of each approach enables organizations to make informed decisions about which techniques to employ for different components of their AI systems.
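To illustrate how RAG differs from fine-tuning, the sketch below retrieves the most relevant documents for a query and places them in the prompt, leaving the model itself untouched. It assumes a toy bag-of-words cosine similarity in place of the embedding search a production system would typically use. The prompt template is hypothetical, and the call to an actual language model is omitted.

```python
import math
import re
from collections import Counter


def bag_of_words(text):
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, documents, k=2):
    """Rank documents by similarity to the query and keep the top k."""
    q = bag_of_words(query)
    ranked = sorted(documents, key=lambda d: cosine(q, bag_of_words(d)), reverse=True)
    return ranked[:k]


def build_rag_prompt(query, documents, k=2):
    """Hypothetical template: retrieved context is prepended; model weights never change."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"


docs = [
    "Refunds are processed within 5 business days.",
    "Our office is closed on public holidays.",
    "Password resets require a verified email address.",
]
print(build_rag_prompt("How do I reset my password?", docs, k=1))
```

Updating what the system "knows" here means editing `docs`, with no retraining, which is why RAG suits frequently changing information.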

Transfer learning is the broader concept of leveraging knowledge from one task to improve performance on another task, while fine-tuning is a specific implementation of transfer learning. Fine-tuning takes a pre-trained model and adjusts its parameters on new data, whereas transfer learning can also include feature extraction where you freeze the pre-trained weights and only train new layers. All fine-tuning involves transfer learning, but not all transfer learning requires fine-tuning.
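The difference between feature extraction and fine-tuning comes down to which parameters receive gradient updates. This toy sketch assumes a two-parameter model in which `w_backbone` stands in for the pre-trained weights and `w_head` for a new task-specific layer, trained with plain gradient descent on squared error. The `train` function and its defaults are hypothetical.

```python
def train(strategy, data, w_backbone=0.5, w_head=0.1, lr=0.01, steps=1000):
    """Toy model: feature = w_backbone * x, prediction = w_head * feature.

    w_backbone plays the role of pre-trained weights; w_head is the new task layer.
    """
    if strategy not in ("feature_extraction", "fine_tuning"):
        raise ValueError(f"unknown strategy: {strategy}")
    n = len(data)
    for _ in range(steps):
        grad_b = grad_h = 0.0
        for x, y in data:
            feature = w_backbone * x
            error = w_head * feature - y  # gradient of 0.5 * error**2 w.r.t. the prediction
            grad_h += error * feature
            grad_b += error * w_head * x
        w_head -= lr * grad_h / n
        if strategy == "fine_tuning":
            w_backbone -= lr * grad_b / n  # skipped under feature extraction (frozen)
    return w_backbone, w_head


data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]  # toy target task: y = 2x
frozen_b, head_only = train("feature_extraction", data)
tuned_b, tuned_h = train("fine_tuning", data)
print(frozen_b)  # → 0.5 (pre-trained weight untouched)
print(tuned_b)   # pre-trained weight has moved toward the new task
```

Both strategies can fit this toy task. The difference is that feature extraction leaves the pre-trained weight exactly as it was, while fine-tuning adjusts it too.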

Fine-tuning time varies significantly with model size, dataset size, and available hardware. Using parameter-efficient techniques like LoRA, a 13-billion-parameter model can be fine-tuned in approximately 5 hours on a single A100 GPU. Smaller models finish faster still, while full fine-tuning of larger models can take days or weeks. The key advantage is that fine-tuning is dramatically faster than training from scratch, which can take months.

Fine-tuning is specifically designed to work effectively with limited data. Because pre-trained models have already learned general patterns, you typically need only hundreds to thousands of examples for effective fine-tuning, compared with the millions required for training from scratch. However, data quality matters more than quantity: a smaller dataset of high-quality, representative examples will usually outperform a larger dataset with inconsistent or noisy labels.

LoRA (Low-Rank Adaptation) is a parameter-efficient fine-tuning technique that freezes a model's original weights and trains small low-rank matrices whose product is added to selected weight matrices, instead of updating all parameters. Depending on the rank and on which layers are adapted, this can cut the number of trainable parameters by orders of magnitude (by thousands of times for very large models) while maintaining performance comparable to full fine-tuning. LoRA is important because it makes fine-tuning feasible on standard hardware, dramatically reduces memory requirements, and enables organizations to fine-tune large models without expensive infrastructure.
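As a worked check on the parameter savings, the count below uses the standard LoRA parameterization with an illustrative layer size. The values d = k = 4096 and rank r = 8 are assumptions chosen for the arithmetic, not figures from this article. Per adapted matrix the reduction is dk / r(d + k), here 256 times. Whole-model reductions can be larger because weights that receive no adapter (for example, embeddings, or layers left out of the adapter configuration) contribute nothing to the trainable count.

```latex
\begin{aligned}
W' &= W + \tfrac{\alpha}{r}\,BA, \qquad B \in \mathbb{R}^{d \times r},\; A \in \mathbb{R}^{r \times k},\; r \ll \min(d, k) \\
\text{trainable (full)} &= dk, \qquad \text{trainable (LoRA)} = r(d + k) \\
d = k = 4096,\ r = 8:\quad dk &= 16{,}777{,}216, \qquad r(d + k) = 8 \times 8192 = 65{,}536 \\
\frac{dk}{r(d + k)} &= \frac{16{,}777{,}216}{65{,}536} = 256
\end{aligned}
```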

Overfitting shows up when training loss keeps decreasing while validation loss increases, indicating that the model is memorizing training data rather than learning generalizable patterns. Monitor both metrics during training: if validation performance plateaus or degrades while training performance continues to improve, your model is likely overfitting. Implement early stopping to halt training when validation performance stops improving, and use techniques like regularization and data augmentation to reduce overfitting.
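The early-stopping rule described above can be sketched as a small helper. It tracks the best validation loss and stops after a fixed number of evaluations without improvement. The class name, the `patience` and `min_delta` parameters, and the validation losses in the usage lines are all hypothetical. In a real training loop you would also save a checkpoint whenever the best loss improves, so you can restore the best model after stopping.

```python
class EarlyStopping:
    """Stop when validation loss has not improved by min_delta for `patience` evaluations."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.best_epoch = None
        self.bad_epochs = 0

    def step(self, epoch, val_loss):
        """Record one validation result; return True if training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.best_epoch = epoch  # a real loop would checkpoint the model here
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


# Hypothetical validation losses: improving, then rising as the model overfits.
val_losses = [0.90, 0.72, 0.65, 0.63, 0.66, 0.70, 0.75, 0.81]
stopper = EarlyStopping(patience=3)
for epoch, loss in enumerate(val_losses):
    if stopper.step(epoch, loss):
        print(f"stopping at epoch {epoch}; best epoch {stopper.best_epoch} (loss {stopper.best_loss})")
        break
# → stopping at epoch 6; best epoch 3 (loss 0.63)
```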

Fine-tuning costs include computational resources (GPU/TPU time), data preparation and labeling, model storage and deployment infrastructure, and ongoing monitoring and maintenance. However, these costs are typically 10-100 times lower than training models from scratch. Using parameter-efficient techniques like LoRA can reduce computational costs by 80-90% compared to full fine-tuning, making fine-tuning an economical approach for most organizations.

Fine-tuning typically improves model accuracy significantly on specific tasks. By training on domain-specific data, models learn task-relevant patterns and terminology that generic models miss. Reported gains are often in the range of 10-30% or more, depending on the task and dataset quality. The improvement tends to be largest when the fine-tuning task differs substantially from the pre-training task, since the model must adapt its learned features to your specific requirements.

Fine-tuning enables organizations to keep sensitive data on their own infrastructure rather than sending it to third-party APIs. You can fine-tune models locally on proprietary or regulated data without exposing it to external services, ensuring compliance with data protection regulations like GDPR, HIPAA, or industry-specific requirements. This approach provides both security and compliance benefits while maintaining the performance advantages of using pre-trained models.
