Preventing AI Visibility Crises: Proactive Strategies

Learn how to prevent AI visibility crises with proactive monitoring, early warning systems, and strategic response protocols. Protect your brand in the AI era.

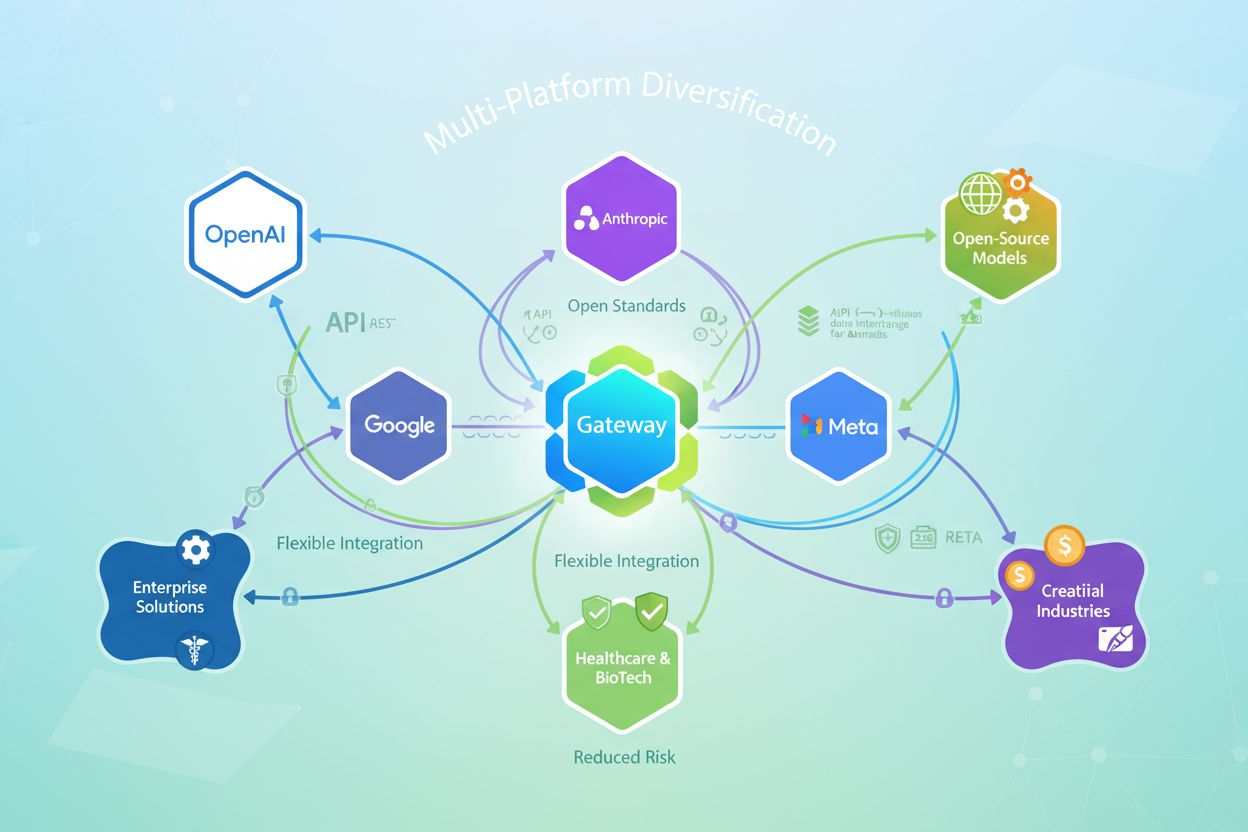

Platform diversification is the strategy of building visibility and access across multiple AI platforms to reduce dependency risk, optimize costs, and maintain strategic flexibility. Rather than relying on a single vendor, organizations distribute their AI workloads across different providers, which strengthens their negotiating position, improves resilience, and lets them adopt best-of-breed solutions as they emerge.


Vendor lock-in occurs when an organization becomes so dependent on a single AI provider that switching to alternatives becomes impractical or prohibitively expensive. This dependency develops gradually through tightly coupled integrations, proprietary APIs, and custom implementations that bind applications directly to one vendor’s ecosystem. The consequences are severe: organizations lose negotiating leverage, face escalating costs, and become unable to adopt superior models or technologies as they emerge. Once locked in, switching providers requires extensive re-engineering, retraining, and often substantial financial penalties.

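Relying on a single AI platform creates strategic vulnerabilities that extend far beyond initial convenience: weaker negotiating leverage, exposure to escalating costs, delayed access to superior models, and a single point of failure when that provider suffers an outage.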

Multi-model platforms solve these challenges by creating an abstraction layer between applications and AI providers. Rather than applications calling vendor APIs directly, they interact with a unified interface that the platform manages. This architecture enables intelligent routing that directs requests to optimal models based on cost, performance, compliance requirements, or availability. The platform translates requests into provider-specific formats, handles authentication and security, and maintains comprehensive audit trails. Enterprise-grade multi-model platforms add critical governance capabilities: centralized policy enforcement, sensitive data protection, role-based access control, and real-time observability into AI usage across the entire organization.
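To make the abstraction-layer idea concrete, here is a minimal Python sketch of a gateway that sits between applications and providers. Everything in it is hypothetical: the `Gateway`, `ProviderAdapter`, and `ChatRequest` names, the two vendor payload formats (they are not any real provider's API), and the `required_region` field used as a stand-in for a compliance requirement. Network calls and authentication are deliberately left out; the sketch only shows applications building one provider-neutral request while the gateway filters candidates, translates the request, and records an audit entry.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable, List, Optional, Tuple


@dataclass
class ChatRequest:
    """Provider-neutral request shape that applications send to the gateway."""
    prompt: str
    max_tokens: int = 256
    required_region: Optional[str] = None  # hypothetical compliance constraint


@dataclass
class ProviderAdapter:
    name: str
    region: str
    available: bool
    # Translates the neutral request into this provider's wire format.
    to_payload: Callable[[ChatRequest], dict]


# Two made-up wire formats standing in for real vendor APIs.
def vendor_a_payload(req: ChatRequest) -> dict:
    return {"input": req.prompt, "max_output_tokens": req.max_tokens}


def vendor_b_payload(req: ChatRequest) -> dict:
    return {"messages": [{"role": "user", "content": req.prompt}],
            "max_tokens": req.max_tokens}


class Gateway:
    """Single entry point: applications never call vendor APIs directly."""

    def __init__(self, adapters: List[ProviderAdapter]):
        self.adapters = adapters
        self.audit_log: List[dict] = []

    def route(self, app_id: str, req: ChatRequest) -> Tuple[str, dict]:
        # Keep providers that are up and satisfy the request's region constraint.
        candidates = [
            a for a in self.adapters
            if a.available
            and (req.required_region is None or a.region == req.required_region)
        ]
        if not candidates:
            raise RuntimeError("no provider satisfies the request constraints")
        # First match wins here; a real router would also weigh cost and latency.
        chosen = candidates[0]
        payload = chosen.to_payload(req)
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "app": app_id,
            "provider": chosen.name,
            "prompt_chars": len(req.prompt),
        })
        return chosen.name, payload


gateway = Gateway([
    ProviderAdapter("vendor-a", "us", True, vendor_a_payload),
    ProviderAdapter("vendor-b", "eu", True, vendor_b_payload),
])
provider, payload = gateway.route(
    "hr-assistant", ChatRequest("Summarize this policy", required_region="eu")
)
# provider == "vendor-b"; payload uses vendor-b's "messages" format
```

Because applications only ever construct `ChatRequest` objects, adding or swapping a provider means registering another adapter rather than editing application code, which is where the low switching cost of this architecture comes from.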

| Dimension | Single-Provider | Multi-Model Platform |
|---|---|---|
| Vendor Flexibility | Locked to one provider | Access to 100+ models from multiple providers |
| Cost | Enterprise agreements: $50K-$500K+ annually | 40-60% lower cost with same capabilities |
| Governance | Limited to vendor's controls | Centralized policies across all providers |
| Data Security | Direct exposure to provider | Sensitive data protection layer |
| Switching Cost | Extremely high (months, millions) | Minimal (configuration change) |
| Latency Overhead | None | 3-5 ms (negligible) |
| Compliance | Vendor-dependent | Customizable to requirements |

Multi-model platforms deliver substantial cost advantages through competitive provider pricing and intelligent model selection. Organizations using enterprise multi-model platforms report 40-60% cost savings compared to single-provider enterprise agreements while gaining access to superior models and comprehensive governance. The platform enables dynamic model selection—routing simple queries to cost-efficient models while reserving expensive, high-capability models for complex tasks. Real-time cost tracking and budget management prevent runaway expenses, while competitive pressure between providers keeps pricing favorable. Organizations can also negotiate better rates by demonstrating they can easily switch providers, fundamentally shifting the power dynamic in vendor relationships.
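Dynamic model selection with real-time budget tracking can be sketched in a few lines. This is illustrative only: the two tiers, their per-1K-token prices, and the keyword heuristic in the hypothetical `is_complex` function are assumptions chosen for readability, not real vendor pricing or a recommended classifier.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelTier:
    name: str
    usd_per_1k_tokens: float  # illustrative prices, not real vendor rates


CHEAP = ModelTier("small-model", 0.0005)
PREMIUM = ModelTier("large-model", 0.0150)

# Hypothetical complexity signals; a production router might use a classifier instead.
COMPLEX_HINTS = ("analyze", "compare", "step by step", "prove")


def is_complex(prompt: str) -> bool:
    """Crude heuristic: long prompts or analysis-style requests get the premium tier."""
    text = prompt.lower()
    return len(text.split()) > 200 or any(hint in text for hint in COMPLEX_HINTS)


class BudgetRouter:
    """Routes each request to a model tier while tracking spend against a budget."""

    def __init__(self, budget_usd: float):
        self.budget_usd = budget_usd
        self.spent_usd = 0.0

    @staticmethod
    def estimate_cost(model: ModelTier, tokens: int) -> float:
        return model.usd_per_1k_tokens * tokens / 1000

    def select(self, prompt: str, expected_tokens: int) -> ModelTier:
        model = PREMIUM if is_complex(prompt) else CHEAP
        cost = self.estimate_cost(model, expected_tokens)
        # Downgrade instead of overspending when a premium call would break the budget.
        if model is PREMIUM and self.spent_usd + cost > self.budget_usd:
            model = CHEAP
            cost = self.estimate_cost(model, expected_tokens)
        if self.spent_usd + cost > self.budget_usd:
            raise RuntimeError("AI budget exhausted; request blocked")
        self.spent_usd += cost
        return model


router = BudgetRouter(budget_usd=0.02)
picks = [
    router.select("What are your opening hours?", 500),                # small-model
    router.select("Compare these two vendor contracts", 1000),         # large-model
    router.select("Analyze last quarter's churn step by step", 1000),  # small-model (downgraded)
]
```

The third request would normally go to the premium tier, but the router downgrades it because the premium call would exceed the remaining budget; that is one simple way to keep spend from running away.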

Enterprise multi-model platforms implement protective layers that single-provider solutions cannot match. Sensitive data protection mechanisms detect and prevent confidential information from reaching external providers, keeping proprietary data within organizational boundaries. Comprehensive audit logging creates transparent records of every AI interaction, supporting compliance demonstrations for regulations like GDPR, HIPAA, and SOC 2. Organizations can enforce consistent policies across all providers—acceptable use rules, data handling requirements, and compliance constraints—without depending on each vendor’s governance capabilities. A 2025 Business Digital Index report found that 50% of AI providers fail basic data security standards, making intermediary governance layers essential for regulated industries. Multi-model platforms become the security boundary, providing better protection than direct provider access.
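The sensitive data protection and audit logging described above can be approximated with a redaction pass that runs before any prompt leaves the organization, plus an audit record that stores a SHA-256 hash of the original prompt rather than the prompt itself. The patterns below are simplified assumptions (email addresses, SSN-shaped numbers, 13 to 16 digit card-like numbers) and will miss many real-world formats; treat this as a sketch of where the control sits, not as a complete data loss prevention tool. The `redact` and `audit_record` names are hypothetical.

```python
import hashlib
import json
import re
from datetime import datetime, timezone

# Illustrative patterns only; applied in order, so SSNs are masked before card numbers.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def redact(text: str):
    """Replace sensitive matches with placeholders; return the text and match counts."""
    findings = {}
    for label, pattern in PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            findings[label] = count
    return text, findings


def audit_record(user: str, provider: str, original: str, sanitized: str, findings: dict) -> str:
    """Build a JSON audit entry that never contains the raw prompt."""
    return json.dumps({
        "ts": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "provider": provider,
        "prompt_sha256": hashlib.sha256(original.encode("utf-8")).hexdigest(),
        "redactions": findings,
        "sent_chars": len(sanitized),
    })


raw = "Contact jane.doe@example.com, SSN 123-45-6789, card 4111 1111 1111 1111 today"
clean, found = redact(raw)
record = audit_record("analyst-42", "vendor-a", raw, clean, found)
# clean == "Contact [EMAIL], SSN [US_SSN], card [CARD] today"
```

Only `clean` would be forwarded to the external provider, while the hash in the audit record still lets auditors match a log entry to a prompt held internally.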

Platform diversification creates operational resilience through redundancy and failover capabilities. If one AI provider experiences outages or performance degradation, the platform automatically routes workloads to alternative providers without service interruption. This redundancy is impossible with single-provider approaches, where outages directly impact all dependent applications. Multi-model platforms also enable performance optimization by monitoring real-time latency and quality metrics, automatically selecting the fastest or most reliable provider for each request. Organizations gain the ability to test new models in production with minimal risk, gradually shifting traffic to superior alternatives as confidence builds. The result is AI infrastructure that remains reliable and performant even when individual providers experience disruptions.
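Failover combined with latency-aware provider selection might look like the following sketch. The provider functions and the `ProviderUnavailable` exception are hypothetical stand-ins for real client calls. The router tries providers in order of consecutive failures and then exponentially weighted average latency, and moves on to the next provider when a call fails.

```python
import time
from typing import Callable, Dict, List, Tuple


class ProviderUnavailable(Exception):
    """Raised by a (hypothetical) provider client when a call fails."""


class FailoverRouter:
    def __init__(self, providers: Dict[str, Callable[[str], str]], alpha: float = 0.3):
        self.providers = providers
        self.alpha = alpha  # smoothing factor for the latency moving average
        self.latency = {name: 0.0 for name in providers}
        self.failures = {name: 0 for name in providers}  # consecutive failures

    def _ordered(self) -> List[str]:
        # Fewest consecutive failures first, then lowest smoothed latency.
        return sorted(self.providers, key=lambda n: (self.failures[n], self.latency[n]))

    def complete(self, prompt: str) -> Tuple[str, str]:
        errors = {}
        for name in self._ordered():
            start = time.perf_counter()
            try:
                result = self.providers[name](prompt)
            except ProviderUnavailable as exc:
                self.failures[name] += 1
                errors[name] = str(exc)
                continue  # fail over to the next provider
            elapsed = time.perf_counter() - start
            # Exponentially weighted moving average of observed latency.
            self.latency[name] = self.alpha * elapsed + (1 - self.alpha) * self.latency[name]
            self.failures[name] = 0
            return name, result
        raise RuntimeError(f"all providers failed: {errors}")


def flaky_primary(prompt: str) -> str:
    raise ProviderUnavailable("HTTP 503 from primary")


def healthy_backup(prompt: str) -> str:
    return f"backup answer to: {prompt}"


failover = FailoverRouter({"primary": flaky_primary, "backup": healthy_backup})
name, answer = failover.complete("Summarize today's incidents")
# name == "backup"; "primary" now sorts after "backup"
```

A production router would add request timeouts, health checks or circuit breakers so a recovered provider is promoted again, and weighted traffic splits for gradually shifting load to a new model; those are omitted to keep the sketch short.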

Sustainable platform diversification depends on open standards that prevent new forms of lock-in. Organizations should prioritize platforms using standard APIs (REST, GraphQL) rather than proprietary SDKs, ensuring applications remain vendor-agnostic. Model interchange formats like ONNX (Open Neural Network Exchange) enable trained models to move between frameworks and platforms without re-training. Data portability requires storing logs and metrics in open formats—Parquet, JSON, OpenTelemetry—under organizational control rather than vendor-locked databases. Open standards create genuine strategic freedom: organizations can migrate to new platforms, adopt emerging models, or self-host infrastructure without rewriting applications. This approach future-proofs AI strategies against vendor changes, pricing shifts, or market disruptions.
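As a small illustration of data portability, the sketch below writes AI usage logs as JSON Lines (one JSON object per line) using only Python's standard library, so the records can be read back by any tool without a vendor SDK. The `UsageRecord` schema, its field names, and the sample values are assumptions; the same records could instead be written as Parquet through a library such as pyarrow or exported through OpenTelemetry, which this sketch does not attempt.

```python
import io
import json
from dataclasses import asdict, dataclass
from typing import Iterable, List, TextIO


@dataclass
class UsageRecord:
    """Vendor-neutral usage record; the schema is an illustrative assumption."""
    timestamp: str
    provider: str
    model: str
    input_tokens: int
    output_tokens: int
    latency_ms: float


def write_jsonl(records: Iterable[UsageRecord], fp: TextIO) -> int:
    """Write one JSON object per line and return the number of records written."""
    count = 0
    for rec in records:
        fp.write(json.dumps(asdict(rec), sort_keys=True) + "\n")
        count += 1
    return count


def read_jsonl(fp: TextIO) -> List[UsageRecord]:
    """Parse JSON Lines back into records, skipping blank lines."""
    return [UsageRecord(**json.loads(line)) for line in fp if line.strip()]


records = [
    UsageRecord("2025-01-15T10:00:00Z", "vendor-a", "small-model", 120, 80, 340.0),
    UsageRecord("2025-01-15T10:00:05Z", "vendor-b", "large-model", 900, 400, 1210.5),
]
buffer = io.StringIO()  # stands in for a file or storage location you control
written = write_jsonl(records, buffer)
buffer.seek(0)
restored = read_jsonl(buffer)
# restored == records
```

Keeping the schema provider-neutral (a `provider` column rather than vendor-specific log formats) is what lets the same history follow you to a new platform.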

Successful platform diversification requires systematic evaluation and governance. Organizations should assess platforms based on multi-vendor support (do they integrate with major providers and allow custom models?), open APIs and data formats (can you export data and use standard libraries?), and deployment flexibility (can you run on-premises or across multiple clouds?). Implementation begins with selecting a multi-model platform that aligns with organizational requirements, then gradually migrating applications to use the platform’s unified interface. Establish governance frameworks defining acceptable AI use, data handling policies, and compliance requirements—the platform enforces these consistently across all providers. Team training ensures developers understand the new architecture and can leverage platform capabilities effectively. Continuous monitoring and optimization identify cost-saving opportunities, performance improvements, and emerging use cases.
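A governance framework becomes enforceable when its rules are expressed as data that a single checkpoint evaluates for every request, regardless of which provider the request targets. In this hypothetical sketch, the `Policy` and `AIRequest` fields, the four-level data classification scheme, and the provider and use-case names are all illustrative assumptions rather than any particular platform's configuration format.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List

# Ordered from least to most sensitive (an assumed four-level scheme).
LEVELS = ["public", "internal", "confidential", "restricted"]


@dataclass
class Policy:
    """Organization-wide AI rules, defined once and applied to every provider."""
    approved_providers: FrozenSet[str]
    allowed_use_cases: FrozenSet[str]
    # Highest classification each provider may receive; unlisted providers get "public".
    max_classification: Dict[str, str]


@dataclass
class AIRequest:
    provider: str
    use_case: str
    data_classification: str


def evaluate(policy: Policy, req: AIRequest) -> List[str]:
    """Return a list of violations; an empty list means the request may proceed."""
    violations = []
    if req.provider not in policy.approved_providers:
        violations.append(f"provider '{req.provider}' is not approved")
    if req.use_case not in policy.allowed_use_cases:
        violations.append(f"use case '{req.use_case}' is not permitted")
    if req.data_classification not in LEVELS:
        violations.append(f"unknown data classification '{req.data_classification}'")
    else:
        ceiling = policy.max_classification.get(req.provider, "public")
        if LEVELS.index(req.data_classification) > LEVELS.index(ceiling):
            violations.append(
                f"{req.data_classification} data may not be sent to '{req.provider}' "
                f"(limit: {ceiling})"
            )
    return violations


policy = Policy(
    approved_providers=frozenset({"vendor-a", "vendor-b"}),
    allowed_use_cases=frozenset({"support-drafting", "code-review"}),
    max_classification={"vendor-a": "internal", "vendor-b": "confidential"},
)
ok = evaluate(policy, AIRequest("vendor-a", "support-drafting", "internal"))
blocked = evaluate(policy, AIRequest("vendor-a", "support-drafting", "confidential"))
# ok == []; blocked holds one classification violation
```

Returning every violation, rather than stopping at the first, gives teams a complete picture of why a request was rejected.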

As organizations diversify across multiple AI platforms, maintaining visibility and control becomes critical. AmICited.com serves as an essential monitoring solution specifically designed for this challenge, tracking how AI systems reference your brand and content across multiple AI platforms including ChatGPT, Perplexity, Google AI Overviews, and others. This visibility is crucial for understanding your AI footprint, ensuring compliance, and identifying opportunities for optimization. FlowHunt.io complements this approach by providing AI content generation and automation capabilities across multiple platforms, enabling organizations to maintain consistent quality and governance as they scale AI usage. Together, these solutions help organizations maintain comprehensive visibility into their AI platform usage, control costs, ensure compliance, and optimize performance across their entire diversified AI infrastructure. By combining multi-platform monitoring with intelligent automation, organizations can confidently scale AI adoption while maintaining the control and visibility necessary for enterprise operations.

Vendor lock-in occurs when an organization becomes so dependent on a single AI provider that switching becomes impractical or prohibitively expensive. This dependency develops through tightly coupled integrations and proprietary APIs, resulting in lost negotiating power, inability to adopt superior models, and escalating costs. Organizations should care because lock-in limits strategic flexibility and creates long-term vulnerability to pricing changes and service disruptions.

Organizations using enterprise multi-model platforms report 40-60% cost savings compared to single-provider enterprise agreements while gaining access to superior models and comprehensive governance. These savings come from competitive provider pricing, intelligent model selection that routes simple queries to cost-efficient models, and improved negotiating leverage when vendors know you can easily switch.

Single-provider platforms lock organizations into one vendor's ecosystem with limited governance and high switching costs. Multi-model platforms create an abstraction layer enabling access to 100+ models from multiple providers, centralized governance across all providers, sensitive data protection, and minimal switching costs. Multi-model platforms add only 3-5ms latency overhead while providing enterprise-grade security and compliance capabilities.

Enterprise multi-model platforms implement sensitive data protection mechanisms that detect and prevent confidential information from reaching external providers, keeping proprietary data within organizational boundaries. They maintain comprehensive audit logs of every AI interaction, enforce consistent policies across all providers, and act as the security boundary rather than exposing data directly to vendors. This matters because a 2025 Business Digital Index report found that 50% of AI providers fail basic data security standards.

Open standards (REST APIs, GraphQL, ONNX, OpenTelemetry) prevent new forms of vendor lock-in by ensuring applications remain vendor-agnostic and data stays portable. Organizations should prioritize platforms using standard APIs rather than proprietary SDKs, store data in open formats under organizational control, and use model interchange formats that enable models to move between platforms without re-training. This approach future-proofs AI strategies against vendor changes and market disruptions.

Evaluate platforms based on multi-vendor support (integration with major providers and custom models), open APIs and data formats (ability to export data and use standard libraries), deployment flexibility (on-premises or multi-cloud options), and governance capabilities (policy enforcement, audit logging, compliance support). Prioritize platforms with sustainable organic distribution mechanisms rather than temporary promotional incentives, and assess their track record with enterprise customers in your industry.

Key challenges include selecting the right multi-model platform aligned with organizational requirements, migrating existing applications to use the platform's unified interface, establishing governance frameworks that define acceptable AI use and compliance requirements, and training teams on the new architecture. Organizations should also plan for ongoing monitoring and optimization to identify cost-saving opportunities and emerging use cases. Success requires commitment to the new approach rather than maintaining legacy single-provider integrations in parallel.

AmICited.com provides comprehensive monitoring of how your brand and content appear across multiple AI platforms including ChatGPT, Perplexity, Google AI Overviews, and others. This visibility is crucial for understanding your AI footprint, ensuring compliance, identifying optimization opportunities, and maintaining control over how your brand is referenced in AI-generated responses. AmICited helps organizations track their presence across the diversified AI landscape they've built.

