Paid AI Visibility vs Organic: Understanding Your Options

Compare paid AI advertising and organic optimization strategies. Learn costs, ROI, and best practices for visibility across ChatGPT, Perplexity, and Google AI O...

AI Search Behavior Analytics is the systematic study of how users interact with AI assistants and how brands appear within AI-generated answers. It measures visibility, sentiment, and influence across multiple AI platforms like ChatGPT, Perplexity, and Google AI Overviews. Unlike traditional SEO metrics focused on clicks and rankings, it tracks zero-click visibility and brand positioning in conversational AI contexts. This analytical framework reveals whether your content influences AI systems and shapes user perception before they visit your website.


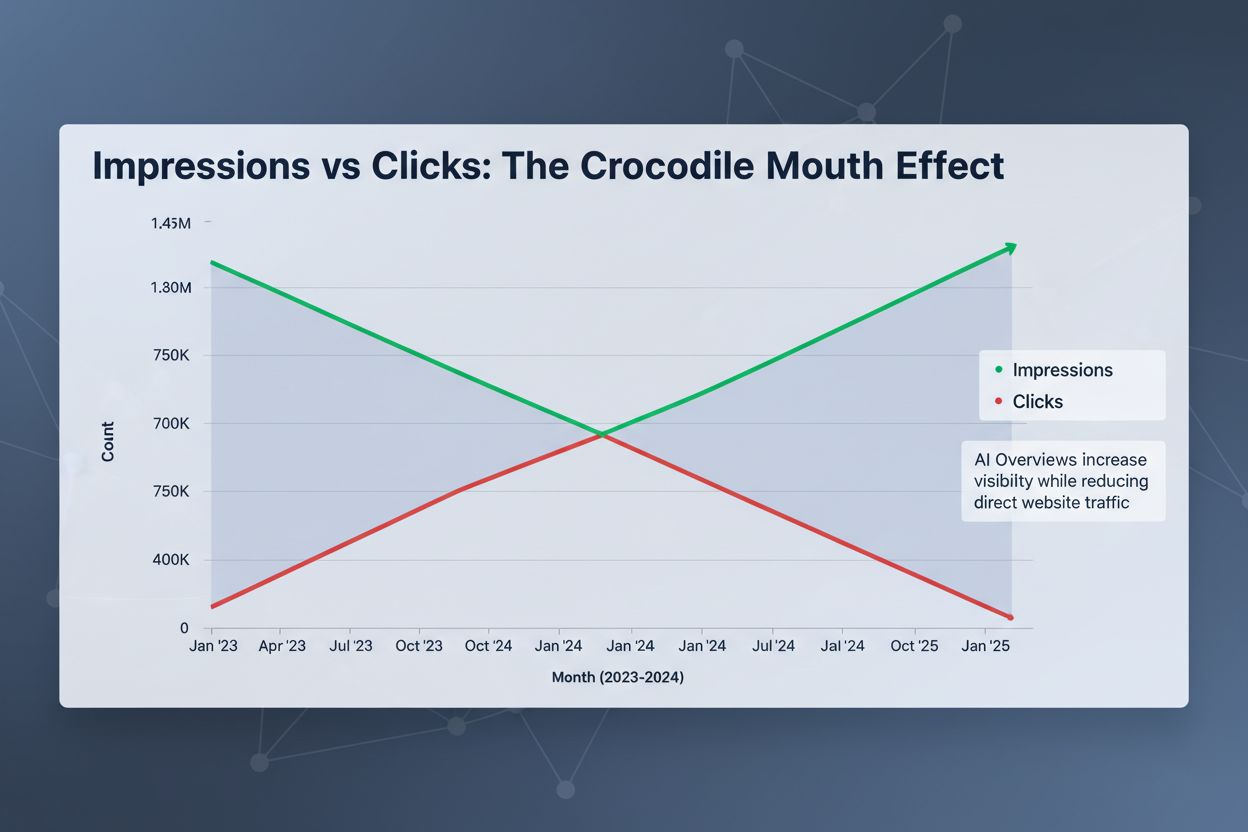

The digital search landscape is undergoing a fundamental transformation as AI-driven search replaces the traditional ten blue links model that dominated for decades. Users increasingly interact with conversational AI assistants like ChatGPT, Claude, and Google’s AI Overviews rather than clicking through to individual web pages. This shift introduces the Crocodile Mouth phenomenon—a paradoxical situation where search impressions and visibility metrics increase while actual click-through rates decline sharply. Zero-click searches have become increasingly common, with users receiving complete answers directly from AI systems without ever visiting source websites. Traditional metrics like organic click volume no longer accurately reflect brand visibility or influence in the AI-driven search ecosystem. Organizations must fundamentally rethink how they measure search performance, moving beyond legacy KPIs to embrace AI Search Behavior Analytics—the systematic study of how users interact with AI assistants and how brands appear within those interactions. This analytical framework reveals not just whether your content ranks, but whether it influences AI-generated answers and shapes user perception.

| Metric | Definition | What It Measures | Why It Matters |
|---|---|---|---|
| AI Overview Inclusion Rate | Percentage of tracked queries where your brand/content appears in AI-generated answers | Direct visibility within AI responses across multiple platforms | Indicates whether your content influences AI systems; higher rates correlate with brand authority |
| Citation Share-of-Voice | Your brand’s percentage of total citations within AI answers for competitive queries | Competitive positioning within AI-generated content | Shows whether you’re winning the narrative battle against competitors in AI contexts |
| Multi-Engine Entity Coverage | Number of different AI platforms (ChatGPT, Perplexity, Claude, Gemini, etc.) where your entity appears | Cross-platform visibility and consistency | Reveals whether your presence is platform-dependent or truly authoritative across the AI ecosystem |
| Answer Sentiment Score | Quantified measure of positive, neutral, or negative language used when AI systems describe your brand | Brand perception and safety within AI responses | Detects potential brand damage, hallucinations, or misrepresentations before they spread widely |
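
To make the first two metrics in the table concrete, here is a minimal Python sketch of how they could be computed from a set of tracked answers. The record layout, the brand names, and the function names are all hypothetical, and real tools may weight or deduplicate citations differently.

```python
from collections import Counter

# Hypothetical sample: one record per tracked query, listing the brands
# cited in the AI-generated answer. All names are illustrative.
answers = [
    {"query": "best crm for startups", "citations": ["acme", "globex"]},
    {"query": "crm pricing comparison", "citations": ["globex"]},
    {"query": "crm with email automation", "citations": ["acme", "acme", "initech"]},
    {"query": "open source crm", "citations": []},
]


def inclusion_rate(records, brand):
    """Share of tracked queries whose answer cites the brand at least once."""
    if not records:
        return 0.0
    hits = sum(1 for r in records if brand in r["citations"])
    return hits / len(records)


def citation_share_of_voice(records, brand):
    """Brand citations divided by all citations across the answer set."""
    counts = Counter(c for r in records for c in r["citations"])
    total = sum(counts.values())
    return counts[brand] / total if total else 0.0


print(f"inclusion rate: {inclusion_rate(answers, 'acme'):.2f}")
print(f"share of voice: {citation_share_of_voice(answers, 'acme'):.2f}")
```

Note that inclusion rate counts each query at most once, while share of voice counts every citation, so a brand cited twice in one answer moves the second metric but not the first.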

The modern AI search ecosystem comprises multiple distinct platforms, each with different crawling behaviors, ranking algorithms, and response generation mechanisms. ChatGPT, Perplexity, Google’s AI Overviews, Claude, Gemini, and emerging platforms like Grok each surface information differently and reach different user segments. Tracking visibility across these engines requires fundamentally different approaches than traditional SEO monitoring—each platform has unique data access patterns, citation formats, and answer structures. Competitive benchmarking in this context means understanding not just where competitors rank, but how their narratives are represented across multiple AI systems and whether they maintain consistent brand positioning. A brand might dominate Google’s AI Overviews while being underrepresented in Perplexity or Claude, creating strategic gaps in market perception. Organizations using platforms like AmICited.com gain unified visibility across these fragmented AI surfaces, enabling comprehensive competitive intelligence. The challenge intensifies because AI platforms update their training data and algorithms frequently, meaning yesterday’s visibility metrics may not predict tomorrow’s performance.

Effective AI Search Behavior Analytics requires sophisticated data collection infrastructure that captures, parses, and warehouses AI responses at scale. The implementation process follows five critical steps:

This infrastructure must handle the volume and velocity of AI responses—thousands of queries across multiple platforms daily—while maintaining data quality and compliance with each platform’s terms of service. Organizations building this capability internally often underestimate the engineering complexity; specialized platforms streamline this process significantly.
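
As a rough illustration of the capture, parse, and warehouse stages mentioned above, the following Python sketch stores one response in an in-memory SQLite database and extracts the domains it links to. The table layout, function names, and sample text are assumptions made for this example. The capture step is deliberately stubbed with a hard-coded string, because how responses may be collected depends on each platform's terms of service.

```python
import re
import sqlite3
from urllib.parse import urlparse

# Simple URL matcher; stops at whitespace and common closing punctuation.
URL_PATTERN = re.compile(r"https?://[^\s)\]>\"']+")


def parse_cited_domains(response_text):
    """Return the distinct domains linked in a response, in order of first appearance."""
    domains = []
    for url in URL_PATTERN.findall(response_text):
        domain = urlparse(url.rstrip(".,;")).netloc.lower()
        if domain and domain not in domains:
            domains.append(domain)
    return domains


def store_response(conn, platform, query, response_text):
    """Warehouse one captured response plus one row per cited domain."""
    cur = conn.execute(
        "INSERT INTO responses (platform, query, body) VALUES (?, ?, ?)",
        (platform, query, response_text),
    )
    response_id = cur.lastrowid
    conn.executemany(
        "INSERT INTO citations (response_id, domain) VALUES (?, ?)",
        [(response_id, d) for d in parse_cited_domains(response_text)],
    )
    return response_id


conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE responses (id INTEGER PRIMARY KEY, platform TEXT, query TEXT, body TEXT);
    CREATE TABLE citations (response_id INTEGER REFERENCES responses(id), domain TEXT);
    """
)

# Stand-in for a captured answer; a real pipeline would obtain this text
# through whatever access method the platform permits.
sample = "Top picks include Acme (https://www.acme.example/crm) and Globex (https://globex.example)."
store_response(conn, "example-engine", "best crm for startups", sample)
print(conn.execute("SELECT domain FROM citations ORDER BY rowid").fetchall())
```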

Sentiment analysis within AI responses reveals how artificial intelligence systems characterize your brand, products, and competitive positioning—information that traditional search analytics cannot capture. When an AI system describes your company as “innovative” versus “controversial,” or highlights customer complaints versus product benefits, it shapes user perception before they ever visit your website. Analyzing sentiment requires moving beyond simple positive/negative classification to understand key sentiment drivers—which specific claims, attributes, or associations appear most frequently in AI descriptions of your brand. Brand safety becomes critical in this context because AI systems can hallucinate facts, misattribute claims, or amplify outdated information that damages reputation. Sentiment dashboards track whether AI responses emphasize your competitive advantages, acknowledge your market position accurately, or inadvertently promote competitor narratives. Negative sentiment spikes often indicate emerging brand perception issues that require immediate content or PR response. The most sophisticated organizations monitor sentiment trends across platforms and geographies, identifying where brand perception diverges and why.
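
The idea of sentiment drivers can be sketched with a toy word-list approach in Python. The two lexicons, the sample responses, and the scoring formula below are illustrative assumptions only; a production system would more likely rely on a trained sentiment model than on keyword matching.

```python
import re
from collections import Counter

# Toy lexicons, purely illustrative and far too small for real use.
POSITIVE = {"innovative", "reliable", "trusted", "affordable"}
NEGATIVE = {"controversial", "outdated", "expensive", "complaints"}


def sentiment_drivers(responses):
    """Count how often each lexicon word appears across AI responses."""
    drivers = Counter()
    for text in responses:
        for token in re.findall(r"[a-z]+", text.lower()):
            if token in POSITIVE or token in NEGATIVE:
                drivers[token] += 1
    return drivers


def sentiment_score(drivers):
    """Score in [-1, 1]: (positive - negative) / all matched words."""
    pos = sum(n for word, n in drivers.items() if word in POSITIVE)
    neg = sum(n for word, n in drivers.items() if word in NEGATIVE)
    total = pos + neg
    return (pos - neg) / total if total else 0.0


# Hypothetical AI descriptions of a fictional brand.
responses = [
    "Acme is an innovative and reliable CRM vendor.",
    "Some reviews mention complaints about Acme's expensive add-ons.",
    "Acme is widely seen as innovative.",
]
drivers = sentiment_drivers(responses)
print(drivers.most_common())
print(f"score: {sentiment_score(drivers):.2f}")
```

Keeping the driver counts alongside the aggregate score shows which specific words push the score up or down, which is the part a single positive/negative label hides.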

The transition from traditional SEO dashboards to AI-focused monitoring dashboards requires rethinking both metrics and audience. Legacy dashboards emphasize rankings, impressions, and clicks—metrics that lose relevance when users receive answers without clicking. Modern AI dashboards must serve multiple personas with distinct information needs: the CMO needs brand sentiment trends and competitive narrative analysis; the SEO Lead requires AI Overview inclusion rates and citation share-of-voice benchmarks; the Content Lead wants to understand which content types and topics drive AI citations; the Product Marketing team needs entity coverage across platforms and sentiment drivers. Each persona requires different visualizations, drill-down capabilities, and alert thresholds. Integration with revenue data transforms these metrics from vanity numbers into business outcomes—connecting AI visibility to pipeline influence, customer acquisition cost, and lifetime value. Organizations that successfully implement AI dashboards report 40-60% improvements in content strategy effectiveness because decisions shift from “does this rank?” to “does this influence AI-driven customer decisions?”

Competitive intelligence in the AI era extends far beyond traditional rank tracking to encompass narrative analysis and share-of-voice calculations across multiple platforms. Monitoring how competitors appear in AI responses reveals their content strategy, authority positioning, and market narrative—information that informs your own content roadmap. Share of Voice calculations in AI contexts measure your brand’s percentage of citations within competitive answer sets, revealing whether you’re winning the visibility battle in AI-generated content. Identifying niche competitors becomes easier when analyzing AI responses because platforms often surface unexpected sources that rank poorly in traditional search but carry significant authority in AI systems. Analyzing competitor narratives—the specific claims, attributes, and associations highlighted in their AI descriptions—reveals gaps in your own positioning and opportunities to differentiate. Some organizations discover that smaller, more specialized competitors dominate AI responses for specific query types, requiring targeted content strategies to reclaim visibility. This competitive intelligence feeds directly into content planning, ensuring resources focus on queries and topics where AI visibility drives business outcomes.

Localization and compliance introduce complexity because AI responses vary significantly across countries, languages, and regulatory contexts. A brand’s AI-generated description in English may differ substantially from its German or Japanese equivalent, reflecting different training data, cultural contexts, and local competitor positioning. Privacy and data governance requirements vary by jurisdiction—GDPR compliance in Europe, CCPA in California, and emerging regulations elsewhere all affect how AI systems can be monitored and what data can be collected. Terms of service compliance matters because most AI platforms restrict automated querying, requiring careful monitoring infrastructure design to avoid violating platform policies. Brand safety monitoring becomes geographically complex when the same brand appears in different contexts across regions—a product description accurate in one market might be misleading in another. Organizations operating globally must implement monitoring that respects these regional variations while maintaining consistent brand positioning. The complexity multiplies when considering that different AI platforms have different geographic coverage and localization approaches, creating fragmented visibility across markets.

Zero-click mentions in AI responses—where users receive information without visiting your website—paradoxically influence customer decisions and business outcomes despite generating no direct traffic. Research demonstrates that AI-generated answers shape user perception, build brand awareness, and influence purchase consideration even when users never click through to source content. Attribution modeling for AI visibility requires new approaches because traditional last-click attribution fails when the customer journey includes AI touchpoints that generate no clicks. Organizations must map the customer journey to identify where AI interactions occur and how they influence downstream conversions, even when attribution appears indirect. Some companies discover that AI mentions correlate with increased branded search volume, suggesting that AI visibility drives awareness that converts through other channels. Modeled attribution approaches—using statistical techniques to estimate AI’s influence on pipeline and revenue—provide more accurate ROI calculations than click-based metrics alone. Forward-thinking organizations integrate AI visibility metrics into their marketing attribution models, revealing that AI search behavior analytics directly impacts revenue outcomes.
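
One simple starting point for the correlation check described above is a Pearson coefficient between weekly AI mention counts and branded search volume. The Python sketch below uses made-up weekly figures and a hand-written `pearson` helper; it is not a full attribution model, and a high correlation alone does not show that AI visibility caused the search lift.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of equal length with at least 2 points")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / sqrt(var_x * var_y)


# Hypothetical weekly figures, invented for illustration.
weekly_ai_mentions = [12, 15, 14, 20, 24, 23]
weekly_branded_searches = [310, 340, 335, 400, 455, 450]

r = pearson(weekly_ai_mentions, weekly_branded_searches)
print(f"correlation between AI mentions and branded search: {r:.3f}")
```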

Future-proofing your AI search behavior analytics infrastructure requires building flexibility into metrics, data structures, and monitoring approaches because the AI landscape evolves rapidly. New AI platforms emerge regularly—today’s dominant engines may be displaced by tomorrow’s innovations—requiring monitoring systems that adapt without complete redesign. Building reusable playbooks for onboarding new platforms, defining metrics, and implementing monitoring reduces the friction of staying current as the ecosystem evolves. Flexible data structures that capture platform-agnostic information (query, response, citations, sentiment) while accommodating platform-specific attributes enable rapid adaptation. Regular reviews of metrics and KPIs—quarterly or semi-annually—ensure your monitoring framework remains aligned with business priorities and reflects the current competitive landscape. Organizations that treat AI search behavior analytics as a static implementation often find their insights become stale as platforms evolve; those that embrace continuous improvement maintain competitive advantage. The most sophisticated teams build internal expertise in AI monitoring, reducing dependency on external platforms and enabling rapid response to ecosystem changes.
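
A flexible record of the kind described above might look like the following Python sketch. The core fields mirror the platform-agnostic attributes named in the text (query, response, citations, sentiment), and a free-form `extras` dictionary holds anything specific to one platform. The class name, field names, and sample values are hypothetical.

```python
from dataclasses import asdict, dataclass, field
from typing import Any, Dict, List, Optional


@dataclass
class AIResponseRecord:
    """One observed AI answer, with platform-agnostic core fields."""

    platform: str
    query: str
    response: str
    citations: List[str] = field(default_factory=list)
    sentiment: Optional[float] = None  # None until a score has been computed
    # Platform-specific attributes live here, so onboarding a new engine
    # does not require changing the core schema.
    extras: Dict[str, Any] = field(default_factory=dict)


record = AIResponseRecord(
    platform="example-engine",
    query="best crm for startups",
    response="Acme and Globex are frequently recommended.",
    citations=["acme.example", "globex.example"],
    extras={"answer_format": "bulleted", "locale": "en-US"},
)
print(asdict(record))
```

The trade-off is that anything placed in `extras` is unvalidated, so attributes that turn out to matter across platforms are better promoted to core fields during the periodic metric reviews.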

Traditional SEO analytics focus on rankings, clicks, and organic traffic from search engines. AI Search Behavior Analytics measures visibility within AI-generated answers, sentiment analysis, and influence on user decisions even when no click occurs. Traditional metrics become less relevant in zero-click search environments where AI provides complete answers without directing users to websites.

Continuous monitoring is ideal, but most organizations implement weekly or bi-weekly reviews of key metrics. Real-time alerting for significant changes (drops in inclusion rate, sentiment shifts, or competitive threats) ensures rapid response. The frequency depends on your industry volatility and how quickly AI platforms update their training data.
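
An alert on inclusion-rate drops can be as simple as comparing the latest value against a trailing average. The Python sketch below assumes a hypothetical 20% relative-drop threshold and invented sample rates; appropriate thresholds and window sizes will vary by industry and query set.

```python
def detect_drop(history, latest, max_relative_drop=0.2):
    """Return True when `latest` falls more than `max_relative_drop`
    below the average of `history` (both expressed as rates in [0, 1])."""
    if not history:
        return False  # no baseline yet, so nothing to compare against
    baseline = sum(history) / len(history)
    if baseline == 0:
        return False  # cannot drop below a zero baseline
    return (baseline - latest) / baseline > max_relative_drop


# Hypothetical weekly inclusion rates for one brand.
recent_rates = [0.50, 0.52, 0.48, 0.50]
this_week = 0.35

if detect_drop(recent_rates, this_week):
    print("ALERT: inclusion rate dropped sharply versus the trailing average")
```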

Start with the platforms your audience uses most: Google AI Overviews, ChatGPT, and Perplexity represent the largest user bases. Add Claude, Gemini, and other platforms based on your industry and customer research. B2B companies often find different platform priorities than B2C organizations, so tailor your monitoring to your specific market.

Create comprehensive, authoritative content that directly answers user questions. Implement structured data and schema markup to help AI systems understand your content. Build backlinks from authoritative sources that AI systems cite. Ensure your content is technically optimized for crawling by AI bots. Monitor sentiment and correct inaccurate AI descriptions through updated content and PR efforts.
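
For the structured data recommendation, a common format is schema.org markup expressed as JSON-LD. The Python sketch below builds a minimal `Organization` object for a fictional company; the helper name and all values are placeholders. It shows only the markup shape and makes no claim about how any particular AI system consumes it.

```python
import json


def organization_jsonld(name, url, description, same_as):
    """Build schema.org Organization markup as a JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "description": description,
        "sameAs": list(same_as),  # official profiles that identify the same entity
    }
    return json.dumps(data, indent=2)


# Placeholder values for a fictional brand.
markup = organization_jsonld(
    name="Acme CRM",
    url="https://www.acme.example",
    description="CRM software for early-stage startups.",
    same_as=["https://www.linkedin.com/company/acme-example"],
)

# JSON-LD is typically embedded in the page inside a script tag of this type.
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```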

AmICited.com specializes in monitoring how AI systems reference your brand across multiple platforms. Other options include Semrush's AI Visibility Toolkit, Gumshoe AI for persona-based tracking, ZipTie for simplified monitoring, and Trakkr for crawler analytics. Choose based on your specific needs: brand monitoring, competitive intelligence, or technical optimization.

Connect AI visibility metrics to business outcomes by tracking branded search volume, website traffic, and conversion rates alongside AI mention increases. Use attribution modeling to estimate AI's influence on pipeline and revenue. Monitor customer feedback to identify whether AI descriptions influence purchase decisions. Compare AI visibility trends with sales cycles to identify correlations.

Share of Voice measures your brand's percentage of citations within AI-generated answers for competitive queries. It matters because it reveals whether you're winning the narrative battle against competitors in AI contexts. A higher share of voice indicates stronger authority and influence over how AI systems describe your market category.

Monitor AI responses regularly for hallucinations, outdated information, or misrepresentations. Create authoritative content that corrects inaccurate descriptions. Implement structured data to provide AI systems with accurate information about your brand. Engage in digital PR to build citations from authoritative sources that AI systems trust. Flag significant inaccuracies to AI platform support teams when possible.

