How Do AI Engines Index Content? Complete Process Explained

Learn how AI engines like ChatGPT, Perplexity, and Gemini index and process web content using advanced crawlers, NLP, and machine learning to train language mod...

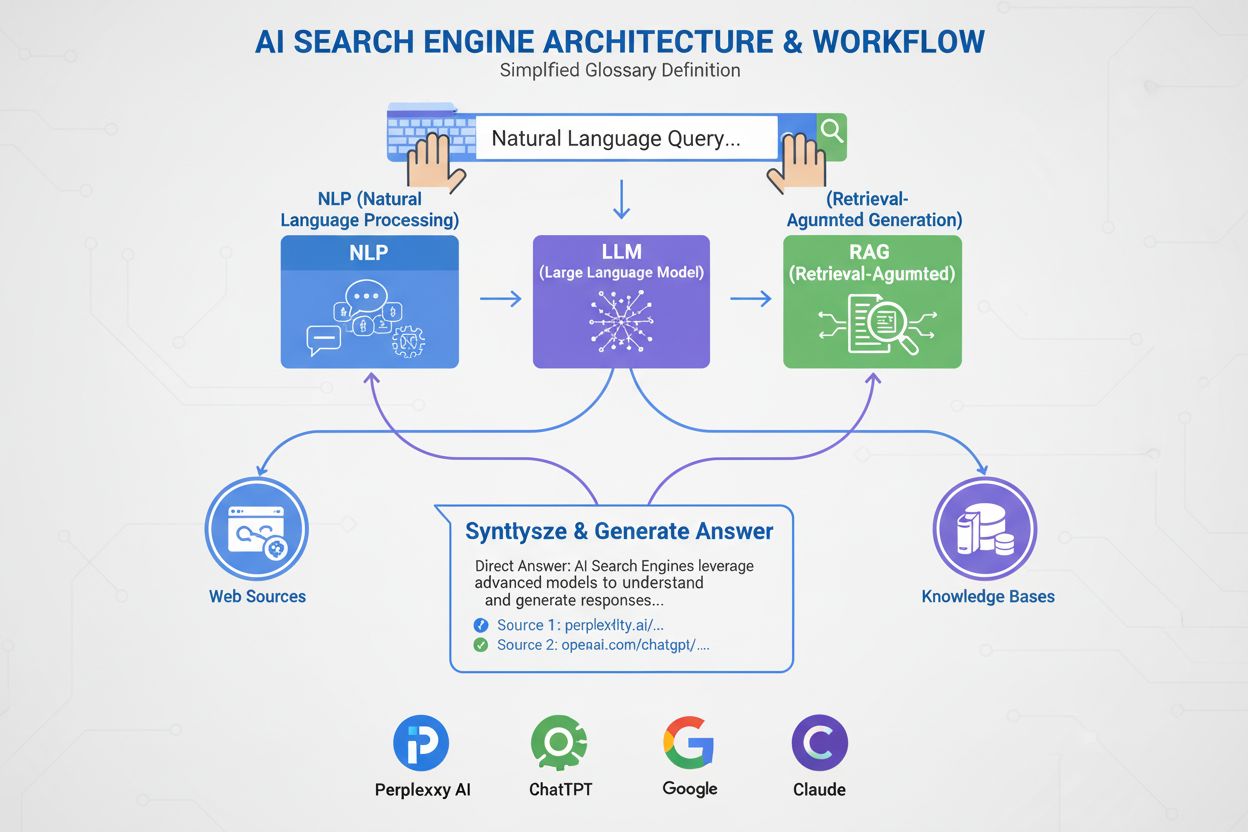

An AI search engine is a platform that uses artificial intelligence, natural language processing (NLP), and large language models (LLMs) to interpret user queries and generate direct, conversational answers synthesized from web sources. Unlike traditional search engines that display lists of links, AI search engines provide concise, plain-language summaries that directly address user intent. These platforms leverage retrieval-augmented generation (RAG) to combine real-time web retrieval with generative AI synthesis, enabling them to deliver current, cited information. AI search engines represent a fundamental shift in how people discover information online, moving from keyword-based link lists to semantic understanding and direct answers. The technology underpinning these systems integrates multiple AI disciplines—including semantic search, entity recognition, and conversational AI—to create a more intuitive and efficient search experience.

The emergence of AI search engines marks a significant evolution in information retrieval technology. For decades, search engines operated on a keyword-matching paradigm, where relevance was determined by the presence and frequency of search terms within indexed documents. The rise of large language models and advances in natural language understanding have changed what is possible. According to market research, the global AI search engine market reached USD 15.23 billion in 2024 and is expected to grow at a revenue compound annual growth rate (CAGR) of 16.8% through 2032. This growth reflects both enterprise adoption and consumer demand for more intelligent, conversational search experiences.
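The compound-growth arithmetic behind a CAGR figure is easy to check. The sketch below assumes the 16.8% rate compounds over eight full years from the 2024 base; its output illustrates the formula and is not a forecast taken from the cited research. `project_market_size` is a hypothetical helper name.

```python
def project_market_size(base_value: float, cagr: float, years: int) -> float:
    """Compound a starting value forward: base_value * (1 + cagr) ** years."""
    return base_value * (1 + cagr) ** years


# Figures from the text: USD 15.23 billion in 2024 and a 16.8% CAGR.
# Assumption: eight full compounding years, 2024 -> 2032.
size_2032 = project_market_size(15.23, 0.168, 2032 - 2024)
print(f"Implied 2032 market size: USD {size_2032:.1f} billion")
```

Under that assumption the formula gives roughly USD 52.8 billion for 2032, about 3.5 times the 2024 figure. Counting a different number of compounding years would change the result noticeably.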

Generative AI has reshaped the way people discover information online, pushing search beyond the familiar list of blue links toward more direct, conversational answers. Industry leaders like Google and Microsoft Bing have swiftly integrated AI across their platforms to keep pace with rising stars like Perplexity and You.com. According to McKinsey research, approximately 50% of Google searches already show AI summaries, a figure expected to rise to more than 75% by 2028. This shift is more than a user-interface change: it is redefining content strategy and pushing search engine optimization into a new discipline called “generative engine optimization” (GEO).

The transition reflects broader organizational adoption of AI technologies: according to Stanford’s AI Index Report, 78% of organizations reported using AI in 2024, up from 55% the year before. For search and marketing professionals, this means visibility now depends on understanding how AI systems parse, condense, and restate content as plain-language summaries. Because AI-generated answers can satisfy users without a click, they often divert traffic away from the original websites, creating both opportunities and challenges for content creators and brands seeking visibility in this new landscape.

AI search engines operate through a sophisticated multi-stage pipeline that combines retrieval, ranking, and synthesis. The process begins with query understanding, where the system parses the user’s input using natural language processing to extract meaning, intent, and context. Rather than treating the query as a simple string of keywords, the system creates multiple representations: a lexical form for exact-match retrieval, a dense embedding for semantic search, and an entity form for knowledge graph matching.
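A minimal sketch of the three query representations described above. The hashed bag-of-words vector is a toy stand-in for a learned embedding model (it captures word overlap, not meaning), and `KNOWN_ENTITIES` is a hypothetical dictionary standing in for knowledge-graph entity linking; `understand_query` and the other names are illustrative.

```python
import hashlib
import math
import re
from dataclasses import dataclass

# Hypothetical toy entity dictionary; real systems link to a knowledge graph.
KNOWN_ENTITIES = {"perplexity": "Perplexity AI", "bing": "Microsoft Bing", "google": "Google"}


@dataclass
class QueryRepresentations:
    lexical: list[str]    # tokens for exact-match (e.g. BM25) retrieval
    dense: list[float]    # fixed-size vector for semantic (vector) search
    entities: list[str]   # canonical entity names for knowledge-graph lookup


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def toy_embedding(tokens: list[str], dim: int = 16) -> list[float]:
    """Hashed bag-of-words vector, L2-normalized (stand-in for a learned model)."""
    vec = [0.0] * dim
    for tok in tokens:
        bucket = int(hashlib.md5(tok.encode()).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def understand_query(query: str) -> QueryRepresentations:
    tokens = tokenize(query)
    entities = [KNOWN_ENTITIES[t] for t in tokens if t in KNOWN_ENTITIES]
    return QueryRepresentations(tokens, toy_embedding(tokens), entities)


reps = understand_query("How does Perplexity cite sources?")
print(reps.lexical)   # ['how', 'does', 'perplexity', 'cite', 'sources']
print(reps.entities)  # ['Perplexity AI']
```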

Once the query is understood, most AI search engines employ retrieval-augmented generation (RAG), a core architectural pattern that addresses two fundamental weaknesses of large language models: hallucination and knowledge cutoffs. RAG performs a live retrieval step, searching across web indexes or APIs to pull relevant documents and passages. These candidates are then reranked using more sophisticated models that jointly evaluate the query and each candidate to produce refined relevance scores. The top-ranked results are fed into a large language model as grounding context, and the model synthesizes a conversational answer that stays faithful to the retrieved sources.
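The retrieve, rerank, and ground steps can be sketched as plain functions. This is an illustrative outline under simplifying assumptions: word overlap replaces a real web index, a length-normalized overlap score replaces a cross-encoder, and the final call to a language model is omitted because it depends on the model API in use. `Passage`, `retrieve`, `rerank`, and `build_grounded_prompt` are hypothetical names.

```python
import re
from dataclasses import dataclass


@dataclass
class Passage:
    url: str
    text: str


def terms(text: str) -> set:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, corpus: list, k: int = 10) -> list:
    """Stage 1: cheap candidate retrieval (stand-in for a web index or search API)."""
    q = terms(query)
    scored = [(len(q & terms(p.text)), p) for p in corpus]
    scored = [item for item in scored if item[0] > 0]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [p for _, p in scored[:k]]


def rerank(query: str, candidates: list, k: int = 3) -> list:
    """Stage 2: finer (query, passage) scoring.

    Stand-in for a cross-encoder: rewards passages that cover many query
    terms relative to their length (information density).
    """
    q = terms(query)

    def density(p: Passage) -> float:
        words = terms(p.text)
        return len(q & words) / max(len(words), 1)

    return sorted(candidates, key=density, reverse=True)[:k]


def build_grounded_prompt(query: str, passages: list) -> str:
    """Stage 3 input: number the sources so the model can cite them as [1], [2], ..."""
    sources = "\n".join(f"[{i}] {p.text} ({p.url})" for i, p in enumerate(passages, start=1))
    return (
        "Answer using only the numbered sources and cite them.\n"
        f"{sources}\n\nQuestion: {query}"
    )


corpus = [
    Passage("https://example.com/rag", "RAG grounds model answers in retrieved documents"),
    Passage("https://example.com/bm25", "BM25 is a lexical ranking function"),
    Passage("https://example.com/cats", "Cats sleep most of the day"),
]
query = "How does RAG ground answers?"
prompt = build_grounded_prompt(query, rerank(query, retrieve(query, corpus)))
print(prompt)
```

Numbering the sources in the prompt is what lets the generated answer carry citations that map back to specific URLs.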

Hybrid retrieval pipelines are standard across leading platforms. These combine lexical search (keyword-based, using algorithms like BM25) with semantic search (embedding-based, using vector similarity). Lexical search excels at precision for exact matches, rare terms, and named entities, while semantic search excels at recall for conceptually related content. By merging both approaches and applying cross-encoder reranking, AI search engines achieve higher accuracy than either method alone. The final synthesis stage uses a large language model to compose a coherent, human-like response that integrates information from multiple sources while maintaining accuracy and providing citations.
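A compact sketch of a hybrid pipeline: Okapi BM25 for the lexical lane, cosine similarity over embedding vectors for the semantic lane, and reciprocal rank fusion (RRF) to merge the two rankings. RRF is one common merging method, assumed here for illustration; the source does not say which fusion method any platform uses, and the cross-encoder reranking step is not shown. The document vectors are hypothetical values standing in for an embedding model's output, and `k1=1.2`, `b=0.75`, and `k=60` are conventional defaults, not platform settings.

```python
import math
import re
from collections import Counter


def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())


def bm25_scores(query, docs, k1=1.2, b=0.75):
    """Okapi BM25 score of every document for the query (lexical lane)."""
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(tokenized)
    avgdl = sum(len(t) for t in tokenized) / n_docs
    df = Counter(term for toks in tokenized for term in set(toks))
    query_terms = set(tokenize(query))
    scores = []
    for toks in tokenized:
        tf = Counter(toks)
        score = 0.0
        for term in query_terms:
            if term not in tf:
                continue
            idf = math.log((n_docs - df[term] + 0.5) / (df[term] + 0.5) + 1)
            norm = tf[term] + k1 * (1 - b + b * len(toks) / avgdl)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


def cosine(u, v):
    """Cosine similarity between two vectors (semantic lane)."""
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def rrf_fuse(score_lists, k=60):
    """Reciprocal rank fusion: each doc scores sum(1 / (k + rank)) across rankings."""
    fused = [0.0] * len(score_lists[0])
    for scores in score_lists:
        ranking = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
        for rank, doc_id in enumerate(ranking, start=1):
            fused[doc_id] += 1.0 / (k + rank)
    return sorted(range(len(fused)), key=lambda i: fused[i], reverse=True)


docs = [
    "BM25 ranks documents by term frequency",
    "Vector search finds semantically similar passages",
    "How to cook pasta at home",
]
doc_vectors = [[0.9, 0.1], [0.8, 0.6], [0.0, 1.0]]  # hypothetical embeddings
query, query_vector = "BM25 term frequency", [1.0, 0.2]

lexical = bm25_scores(query, docs)
semantic = [cosine(query_vector, v) for v in doc_vectors]
print(rrf_fuse([lexical, semantic]))  # [0, 1, 2]
```

RRF uses only rank positions, so it can merge BM25 scores and cosine similarities without normalizing their very different scales.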

| Aspect | Traditional Search Engines | AI Search Engines |
|---|---|---|
| Result Format | Lists of links with short snippets | Conversational summaries with direct answers |
| Query Processing | Keyword matching and ranking | Semantic understanding and intent analysis |
| Learning Mechanism | Works from scratch with each query | Continuously learns from user interactions and feedback |
| Information Retrieval | Lexical/keyword-based matching | Hybrid (lexical + semantic + entity-based) |
| Input Formats | Text only | Text, images, voice, and video (multimodal) |
| Real-Time Updates | Index-based, periodic crawling | Real-time web access via RAG |
| Citation Behavior | No citations; users find sources themselves | Integrated citations and source attribution |
| User Interaction | Single query, static results | Multi-turn conversations with follow-ups |
| Bias Handling | Curators organize information | AI synthesis may introduce developer bias |
| Hallucination Risk | Low (no generated text; results point to existing pages) | Higher (LLMs can generate false information) |

Different AI search engines implement distinct architectural approaches, each with unique optimization implications. Google’s AI Overviews and AI Mode employ a query fan-out strategy, where a single user query is exploded into multiple subqueries targeting different intent dimensions. These subqueries run in parallel against various data sources—the web index, Knowledge Graph, YouTube transcripts, Google Shopping feeds, and specialty indexes. Results are aggregated, deduplicated, and ranked before being synthesized into an overview. For GEO practitioners, this means content must address multiple facets of a query in extractable ways to survive the fan-out process.
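The fan-out pattern can be illustrated with a thread pool. This is a sketch under stated assumptions: `FACET_TEMPLATES` is a hypothetical, fixed way of generating subqueries (the source does not describe how Google generates them), the in-memory dictionaries stand in for the web index and other sources, and word overlap stands in for real ranking.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical facet templates; not Google's actual subquery generation.
FACET_TEMPLATES = ["{q}", "{q} definition", "{q} comparison", "{q} pricing"]


def fan_out(query):
    """Explode one query into subqueries aimed at different intent facets."""
    return [template.format(q=query) for template in FACET_TEMPLATES]


def search_source(index, subquery):
    """Search one in-memory source; return (url, term-overlap score) hits."""
    terms = set(subquery.lower().split())
    hits = []
    for url, text in index.items():
        overlap = len(terms & set(text.lower().split()))
        if overlap:
            hits.append((url, overlap))
    return hits


def fan_out_search(query, sources):
    """Run every subquery against every source in parallel, then merge."""
    jobs = [(index, sq) for index in sources.values() for sq in fan_out(query)]
    with ThreadPoolExecutor(max_workers=8) as pool:
        results = list(pool.map(lambda job: search_source(*job), jobs))
    totals = {}
    for hits in results:
        for url, score in hits:
            # Deduplicate by URL; pages matching more facets accumulate more score.
            totals[url] = totals.get(url, 0) + score
    return sorted(totals, key=totals.get, reverse=True)


sources = {
    "web": {"a.com": "vector database definition and pricing", "b.com": "vector database news"},
    "video": {"c.com": "vector database comparison video"},
}
print(fan_out_search("vector database", sources))  # ['a.com', 'c.com', 'b.com']
```

Because scores are summed across subqueries, a page that answers several facets (here `a.com`, which covers both the definition and pricing facets) outranks a page that only matches the core terms, which mirrors the optimization point above.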

Bing Copilot represents a more traditional search-native approach, leveraging Microsoft’s mature Bing ranking infrastructure and layering GPT-class synthesis on top. The platform uses dual-lane retrieval, combining BM25 lexical search with dense vector semantic search. Results pass through a contextual cross-encoder reranker that focuses on passage-level relevance rather than whole-page ranking. This architecture means that classic SEO signals—crawlability, canonical tags, clean HTML, page speed—still matter significantly because they determine which candidates reach the grounding set. Bing Copilot also emphasizes extractability: passages with clear scope, lists, tables, and definition-style phrasing are more likely to be cited.
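Passage-level scoring can be sketched by splitting each page into blocks and ranking the blocks individually. The word-overlap relevance and the `extractability_bonus` weights are hypothetical stand-ins for a learned cross-encoder; they only show why a clearly scoped, definition-style passage can win even on a page full of filler.

```python
import re


def words(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def split_passages(page_text):
    """Segment a page into passages at blank lines (stand-in for block-level parsing)."""
    return [p.strip() for p in re.split(r"\n\s*\n", page_text) if p.strip()]


def extractability_bonus(passage):
    """Hypothetical bonus for list items, table rows, and definition-style phrasing."""
    bonus = 0.0
    if re.search(r"^\s*(?:[-*]|\d+\.)\s", passage, re.MULTILINE):
        bonus += 0.5  # list item
    if re.search(r"^\s*\|.*\|\s*$", passage, re.MULTILINE):
        bonus += 0.5  # markdown-style table row
    if re.search(r"\b(?:is|are) (?:a|an|the)\b", passage):
        bonus += 0.5  # "X is a ..." definition
    return bonus


def rank_passages(query, pages, k=2):
    """Score passages (not whole pages); return top k as (score, url, passage)."""
    q = words(query)
    scored = []
    for url, text in pages.items():
        for passage in split_passages(text):
            relevance = len(q & words(passage)) / max(len(q), 1)
            scored.append((relevance + extractability_bonus(passage), url, passage))
    scored.sort(key=lambda item: item[0], reverse=True)
    return scored[:k]


pages = {
    "https://example.com/guide": (
        "Welcome to our site.\n\n"
        "A canonical tag is an HTML element that names the preferred URL.\n\n"
        "Contact us today."
    ),
    "https://example.com/blog": "We love SEO and canonical things.",
}
for score, url, passage in rank_passages("What is a canonical tag?", pages):
    print(f"{score:.2f} {url} {passage}")
```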

Perplexity AI operates with intentional transparency, displaying sources prominently before the generated answer itself. The platform conducts real-time searches, often pulling from both Google and Bing indexes, then evaluates candidates against a blend of lexical and semantic relevance, topical authority, and answer extractability. Research analyzing 59 distinct factors influencing Perplexity’s ranking behavior reveals that the platform prioritizes direct answer formatting—pages that explicitly restate the query in a heading, followed immediately by a concise, high-information-density answer, are disproportionately represented in citation sets. Entity prominence and linking also play an outsized role; Perplexity favors passages where key entities are clearly named and contextually linked to related concepts.
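Publishers can audit their own pages for the direct-answer pattern described above with a simple check. The function below is a hypothetical self-audit heuristic, not Perplexity's ranking logic; the 60-word limit and 0.6 overlap threshold are illustrative assumptions.

```python
import re


def words(text):
    return re.findall(r"[a-z0-9]+", text.lower())


def direct_answer_check(query, heading, first_paragraph, max_words=60, min_overlap=0.6):
    """Hypothetical audit: does the heading restate the query, and is the
    paragraph under it a concise answer? Thresholds are illustrative."""
    q = set(words(query))
    overlap = len(q & set(words(heading))) / len(q) if q else 0.0
    answer_words = len(words(first_paragraph))
    return {
        "heading_restates_query": overlap >= min_overlap,
        "answer_is_concise": 0 < answer_words <= max_words,
        "heading_overlap": round(overlap, 2),
        "answer_words": answer_words,
    }


report = direct_answer_check(
    "what is retrieval augmented generation",
    "What Is Retrieval-Augmented Generation?",
    "Retrieval-augmented generation (RAG) grounds a language model's answer "
    "in documents fetched at query time.",
)
print(report)
```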

ChatGPT Search takes an opportunistic approach, generating search queries dynamically and calling Bing’s API to retrieve specific URLs. Unlike platforms with persistent indexes, ChatGPT fetches content in real time, so inclusion depends entirely on whether a page can be retrieved at that moment. If a site is blocked by robots.txt, slow to load, hidden behind client-side rendering, or semantically opaque, it will not be used in synthesis. This architecture prioritizes accessibility and clarity: pages must be technically crawlable, lightweight, and semantically transparent so that on-the-fly fetches yield clean, parseable text.
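Robots.txt blocking, the first failure mode above, can be checked with Python's standard `urllib.robotparser`. The sketch assumes `OAI-SearchBot` is the user-agent token OpenAI documents for its search crawler and `GPTBot` the token for its training crawler; confirm both against OpenAI's current crawler documentation. The robots.txt content, URLs, and the `is_allowed` helper are made-up examples.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt: allow OpenAI's search crawler except /private/, block everyone else.
ROBOTS_TXT = """\
User-agent: OAI-SearchBot
Disallow: /private/

User-agent: *
Disallow: /
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if robots_txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # Record a load time: can_fetch() refuses every URL until one is set
    # (harmless if parse() has already done so).
    parser.modified()
    return parser.can_fetch(user_agent, url)


for agent in ("OAI-SearchBot", "GPTBot"):
    print(agent, is_allowed(ROBOTS_TXT, agent, "https://example.com/blog/post"))
# OAI-SearchBot True
# GPTBot False
```

Speed and client-side rendering are not covered here; those need an actual fetch and a check that the meaningful text is present in the HTML without executing JavaScript.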

Natural language processing (NLP) is the foundational technology enabling AI search engines to move beyond keyword matching. NLP allows systems to parse the structure, semantics, and intent behind search queries, understanding context and recognizing synonyms and related concepts. When a user asks “best places to eat near me with outdoor seating,” an NLP-powered system understands that the query seeks restaurants with patios, despite the absence of those exact words. This semantic understanding enables AI search engines to deliver helpful results even when intent is less obvious or phrased conversationally.
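The outdoor-seating example can be made concrete with a toy concept matcher. The hand-written `LEXICON` is a hypothetical stand-in: production NLP systems learn these associations from data, typically as embeddings, rather than listing them. The sketch only shows the effect, namely that a query and a listing with no content words in common can still map to the same concepts.

```python
import re

# Hypothetical hand-written lexicon mapping surface phrases to concepts.
# Real NLP systems learn such associations (e.g. as embeddings) instead.
LEXICON = {
    "places to eat": "restaurant",
    "restaurant": "restaurant",
    "bistro": "restaurant",
    "outdoor seating": "patio",
    "patio": "patio",
    "terrace": "patio",
}


def concepts(text):
    """Normalize text, then collect the concepts whose phrases occur in it."""
    normalized = " ".join(re.findall(r"[a-z0-9]+", text.lower()))
    return {
        concept
        for phrase, concept in LEXICON.items()
        if re.search(r"\b" + re.escape(phrase), normalized)
    }


query = "best places to eat near me with outdoor seating"
listing = "Bistro with a sunny terrace downtown"
print(sorted(concepts(query) & concepts(listing)))  # ['patio', 'restaurant']
```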

Large language models (LLMs) power the synthesis stage of AI search engines. Trained on vast quantities of text data, LLMs predict the most likely next word (more precisely, the next token) based on context, allowing them to produce coherent, grammatically correct prose that closely resembles human-written text. However, LLMs also introduce risks. They can hallucinate, presenting falsehoods as facts, because they generate text from statistical patterns learned in training rather than quoting live sources. This is why retrieval-augmented generation (RAG) is critical: by grounding LLM synthesis in freshly retrieved, authoritative sources, AI search engines reduce hallucination risk and improve factual accuracy. Some platforms have added line-by-line article citations to further offset hallucination, though the cited articles aren’t always accurate and sometimes don’t exist at all.
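Next-word prediction can be illustrated with a bigram counter, a drastically simplified stand-in for an LLM, which uses a neural network over tokens and far longer context. The point it shows holds for both: the continuation is chosen by what was most frequent in the training text, with no check on whether it is true, which is why grounding in retrieved sources matters. `train_bigrams` and `generate` are hypothetical names.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    model = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        model[prev][nxt] += 1
    return model


def generate(model, start, max_words=5):
    """Greedy decoding: repeatedly append the most frequent next word."""
    out = [start]
    for _ in range(max_words):
        followers = model.get(out[-1])
        if not followers:
            break  # no continuation seen in training
        out.append(followers.most_common(1)[0][0])
    return " ".join(out)


corpus = ("search engines rank pages . ai search engines write answers . "
          "ai search engines write summaries")
model = train_bigrams(corpus)
print(generate(model, "ai", max_words=3))  # ai search engines write
```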

AI search engines differ from traditional search engines in several fundamental ways. First, they provide summaries instead of links. Regular search engines display results as lists of links with short snippets, while AI search engines generate concise summaries that answer queries directly, sparing users the need to scroll and click through multiple websites. Second, AI search learns over time, while traditional search resets with each query. AI search engines are designed to continuously learn and adapt to user interactions and new data, improving their performance over time. Traditional search engines work from scratch with every new query, without accounting for previous queries or user interactions.

Third, AI search focuses on semantics, while traditional search focuses on keywords. Regular search engines primarily depend on keyword matching to interpret queries, while AI search engines concentrate on semantics—the broader meaning of words within context. This allows AI search engines to more thoroughly understand user intent and deliver results that more closely match what users are seeking. Fourth, AI search accepts multiple input formats, while traditional search accepts only text. Some AI search engines have multimodal capabilities, meaning they can process and understand information from formats beyond text, such as images, videos, and audio. This provides a more intuitive and flexible search experience than typing keywords.

The rise of AI search engines is reshaping how brands approach visibility and content strategy. Instead of competing solely for keyword rankings, publishers and businesses must now account for how AI systems parse, condense, and reiterate their content into plain-language summaries. This shift has ushered in a new era of “generative engine optimization” (GEO), where the goal is not just to rank but to be retrieved, synthesized, and cited by AI systems.

McKinsey analysis indicates that even industry leaders’ GEO performance may lag their SEO performance by 20 to 50 percent. This gap reflects the nascent nature of GEO strategies and the complexity of optimizing for multiple AI platforms simultaneously. For brands, the implications are significant: visibility in AI search depends on content being retrievable (appearing in the results an engine retrieves), extractable (structured in ways that AI systems can easily parse and cite), and trustworthy (demonstrating expertise, authority, and reliability). Monitoring tools like AmICited now track brand mentions across AI platforms, including Perplexity, ChatGPT, Google AI Overviews, and Claude, to measure this new form of visibility and identify optimization opportunities.

The AI search landscape is rapidly evolving, with significant implications for how information is discovered, distributed, and monetized. The global AI search engine market is projected to grow at a 16.8% CAGR through 2032, driven by increasing enterprise adoption and consumer demand for conversational, intelligent search experiences. As AI search engines mature, we can expect several key trends to emerge.

First, consolidation and specialization will likely accelerate. While generalist platforms like Google, Bing, and Perplexity will continue to dominate, specialized AI search engines targeting specific verticals—legal, medical, technical, e-commerce—will proliferate. These specialized engines will offer deeper domain expertise and more accurate synthesis for niche queries. Second, citation and attribution mechanisms will become more sophisticated and standardized. As regulatory pressure increases and publishers demand clearer attribution, AI search engines will likely implement more granular citation systems that make it easier for users to trace claims back to sources and for publishers to measure visibility.

Third, the definition and measurement of “visibility” will fundamentally shift. In the traditional SEO era, visibility meant ranking position and click-through rate. In the GEO era, visibility means being retrieved, synthesized, and cited—metrics that require new measurement frameworks and tools. AmICited and similar platforms are pioneering this measurement space, tracking brand mentions across multiple AI platforms and providing insights into how often and in what context brands appear in AI-generated answers.

Fourth, the tension between AI search and traditional search will intensify. As AI search engines capture more user attention and traffic, traditional search engines will face pressure to evolve or risk obsolescence. Google’s integration of AI into its core search product represents a strategic response to this threat, but the long-term winner remains uncertain. Publishers and brands must optimize for both traditional and AI search simultaneously, creating a more complex and resource-intensive content strategy.

Finally, trust and accuracy will become paramount. As AI search engines become primary information sources, the stakes for accuracy and bias mitigation will rise. Regulatory frameworks around AI transparency and accountability will likely emerge and may require AI search engines to disclose their training data, ranking factors, and citation methodologies. For brands and publishers, this means that E-E-A-T signals (experience, expertise, authoritativeness, and trustworthiness) will become even more critical for visibility in both traditional and AI search contexts.

Traditional search engines display results as lists of links with snippets, while AI search engines generate conversational summaries that directly answer queries. AI search engines use natural language processing and large language models to understand user intent semantically rather than relying solely on keyword matching. They continuously learn from user interactions and can process multiple input formats including text, images, and voice. Additionally, AI search engines often provide real-time web access through retrieval-augmented generation (RAG), allowing them to deliver current information with source citations.

Retrieval-augmented generation (RAG) is a technique that enables large language models to retrieve and incorporate fresh information from external sources before generating answers. RAG addresses the fundamental limitations of LLMs—hallucinations and knowledge cutoffs—by grounding responses in real-time retrieved data. In AI search engines, RAG works by first performing a live search or retrieval step, pulling relevant documents or snippets, then synthesizing a response grounded in those retrieved items. This approach ensures answers are both current and traceable to specific sources, making citations possible and improving factual accuracy.

Leading AI search engines include Perplexity (known for transparent citations), ChatGPT Search (powered by GPT-4o with real-time web access), Google Gemini and AI Overviews (integrated with Google's search infrastructure), Bing Copilot (built on Microsoft's search index), and Claude (Anthropic's model with selective web search). Perplexity prioritizes real-time retrieval and visible source attribution, while ChatGPT generates search queries opportunistically. Google's approach uses query fan-out to cover multiple intent dimensions, and Bing Copilot combines traditional SEO signals with generative synthesis. Each platform has distinct retrieval architectures, citation behaviors, and optimization requirements.

AI search engines follow a multi-stage process: first, they parse the user query using natural language processing to understand intent; second, they retrieve relevant documents or passages from web indexes or APIs using hybrid retrieval (combining lexical and semantic search); third, they rerank candidates based on relevance and extractability; and finally, they synthesize a conversational answer using a large language model, inserting citations to source documents. The citation mechanism varies by platform—some display inline citations, others show source lists, and some integrate citations into the answer text itself. The quality and accuracy of citations depend on how well the retrieved passages match the synthesized claims.
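The attribution step can be sketched as matching each generated sentence to the retrieved passage that best supports it. Word overlap is a crude, assumed stand-in for the entailment or attribution models a production system might use, and the 0.5 threshold is arbitrary; the point is that a sentence no passage supports should be flagged rather than given a citation. `attach_citations` is a hypothetical name.

```python
import re


def words(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def attach_citations(sentences, passages, min_support=0.5):
    """Cite, for each sentence, the passage sharing the largest share of its words.

    Sentences whose best support is below min_support are flagged instead.
    """
    cited = []
    for sentence in sentences:
        s = words(sentence)
        best_idx, best = None, 0.0
        for idx, passage in enumerate(passages, start=1):
            support = len(s & words(passage)) / len(s) if s else 0.0
            if support > best:
                best_idx, best = idx, support
        if best_idx is not None and best >= min_support:
            cited.append(f"{sentence} [{best_idx}]")
        else:
            cited.append(f"{sentence} [unsupported]")
    return cited


passages = [
    "Perplexity shows sources before the answer.",
    "Bing Copilot uses a cross-encoder reranker.",
]
answer = [
    "Perplexity shows sources first.",
    "Copilot uses a cross-encoder reranker.",
    "Sales rose 40% last year.",
]
for line in attach_citations(answer, passages):
    print(line)
```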

Generative engine optimization (GEO) is the practice of optimizing content and brand visibility specifically for AI search engines, as opposed to traditional SEO which targets keyword rankings in link-based search results. GEO focuses on making content retrievable, extractable, and citable by AI systems. Key GEO strategies include structuring content for clarity and direct answers, using natural language that matches user intent, implementing entity markup and schema, ensuring fast page load times, and building topical authority. As McKinsey research shows, about 50% of Google searches already have AI summaries, expected to rise to over 75% by 2028, making GEO increasingly critical for brand visibility.
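Entity markup and schema are usually added as JSON-LD. The sketch below builds a schema.org `FAQPage` object from question-and-answer pairs; `FAQPage`, `Question`, and `Answer` are real schema.org types, but the source does not establish how much weight any AI search engine gives this markup, so treat it as one reasonable option. `faq_jsonld` is a hypothetical helper.

```python
import json


def faq_jsonld(pairs):
    """Build a schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


markup = faq_jsonld([
    (
        "What is generative engine optimization?",
        "GEO is the practice of optimizing content so AI search engines can "
        "retrieve, extract, and cite it.",
    ),
])
# Embed the output in the page's HTML inside a JSON-LD script tag.
print('<script type="application/ld+json">')
print(json.dumps(markup, indent=2))
print("</script>")
```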

AI search engines can both increase and decrease website traffic depending on how content is optimized. When content is cited in AI-generated answers, it gains visibility and credibility, but users may get their answer directly without clicking through to the source. Research indicates that AI Overviews can divert traffic away from original websites, though they also provide attribution and source links. For brands, the shift means visibility now depends on being retrieved, synthesized, and cited by AI systems—not just ranking in traditional search results. Monitoring tools like AmICited track brand mentions across AI platforms (Perplexity, ChatGPT, Google AI Overviews, Claude) to measure this new form of visibility and optimize accordingly.

Natural language processing (NLP) is fundamental to how AI search engines understand and process user queries. NLP enables systems to parse the structure, semantics, and intent behind search queries rather than just matching keywords. It allows AI search engines to understand context, disambiguate meaning, and recognize synonyms and related concepts. NLP also powers the synthesis stage, where language models generate grammatically correct, coherent responses that sound natural to users. Additionally, NLP helps AI search engines extract and structure information from web pages, identifying key entities, relationships, and claims that can be incorporated into generated answers.

AI search engines handle real-time information through retrieval-augmented generation (RAG), which performs live searches or API calls to fetch current data at query time rather than relying solely on training data. Platforms like Perplexity and Google's AI Mode actively query the web in real time, ensuring answers reflect the latest information. ChatGPT uses Bing's search API to access current web content when browsing is enabled. Freshness signals are also incorporated into ranking algorithms: pages with recent publication dates and updated content are weighted more heavily for time-sensitive queries. However, some AI search engines still rely partially on training data, which can lag behind real-world events, making real-time retrieval a key differentiator between platforms.
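Freshness weighting can be sketched as an exponential decay blended into the relevance score for time-sensitive queries only. The 30-day half-life, the 0.5 blend weight `alpha`, and the `time_sensitive` flag are hypothetical parameters; the source does not say how any platform weights freshness.

```python
from datetime import date


def freshness_weight(published: date, today: date, half_life_days: float = 30.0) -> float:
    """Exponential decay: the weight halves every half_life_days."""
    age_days = max((today - published).days, 0)
    return 0.5 ** (age_days / half_life_days)


def rank_with_freshness(docs, today, time_sensitive, alpha=0.5):
    """docs: (url, relevance in [0, 1], published date) tuples.

    Freshness is blended in only for time-sensitive queries.
    """
    def score(doc):
        _, relevance, published = doc
        if not time_sensitive:
            return relevance
        return (1 - alpha) * relevance + alpha * freshness_weight(published, today)

    return [url for url, _, _ in sorted(docs, key=score, reverse=True)]


today = date(2025, 1, 31)
docs = [
    ("https://example.com/old-guide", 0.9, date(2024, 1, 31)),
    ("https://example.com/new-update", 0.8, date(2025, 1, 30)),
]
print(rank_with_freshness(docs, today, time_sensitive=True))   # new-update first
print(rank_with_freshness(docs, today, time_sensitive=False))  # old-guide first
```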
