How to Configure robots.txt for AI Crawlers: Complete Guide

Learn how to configure robots.txt to control AI crawler access including GPTBot, ClaudeBot, and Perplexity. Manage your brand visibility in AI-generated answers...

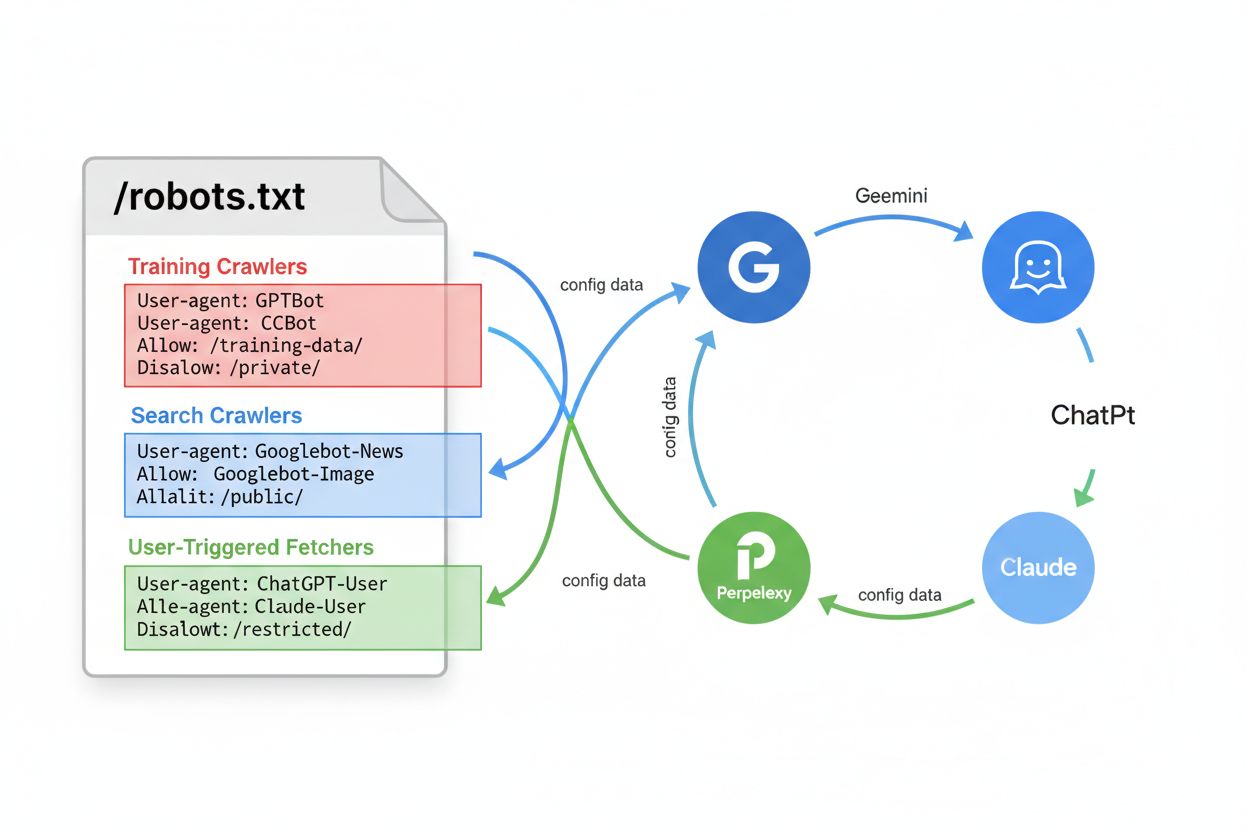

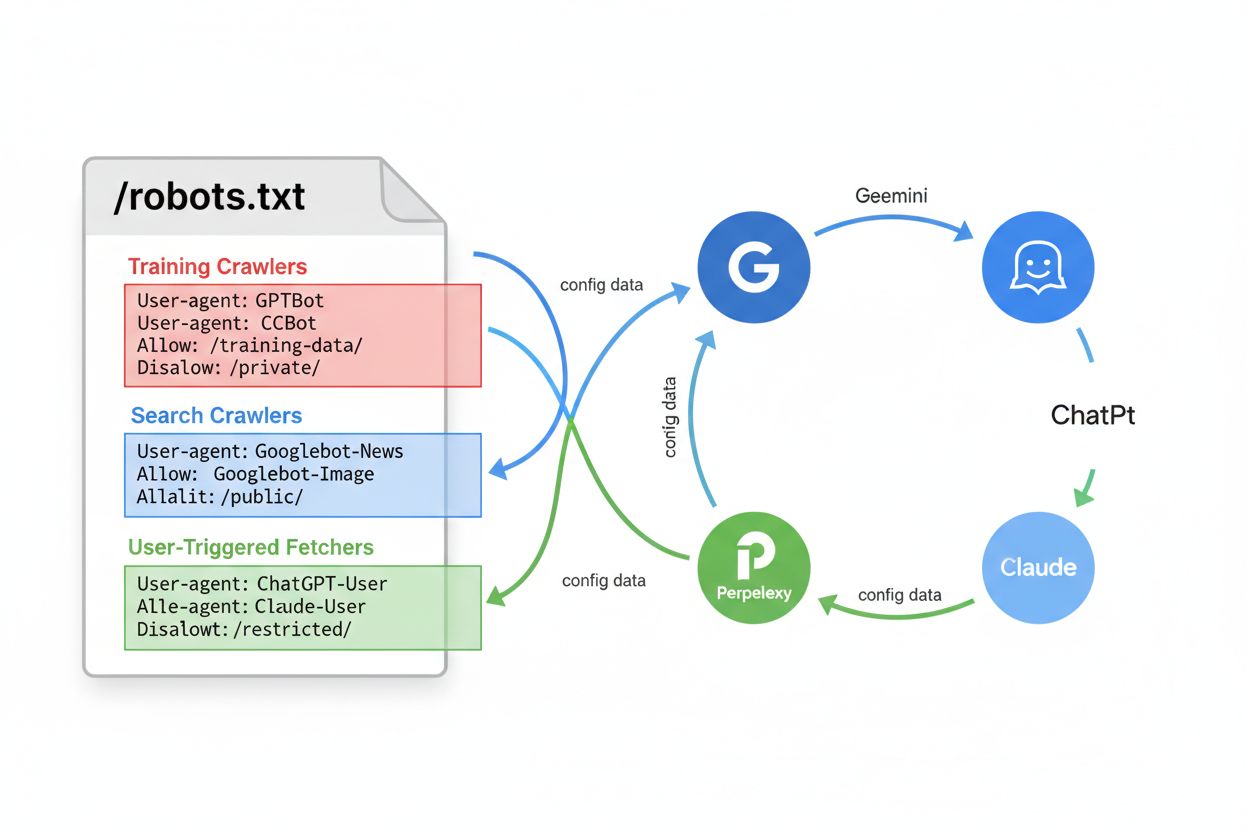

Configuration of robots.txt with user-agent rules specifically targeting AI crawlers. AI-specific robots.txt allows website owners to control how artificial intelligence systems, large language models, and AI training bots access and use their content. It distinguishes between different types of AI crawlers—training crawlers, search crawlers, and user-triggered fetchers—enabling granular control over content visibility to AI systems. This configuration has become critical as AI crawlers now account for approximately 80% of bot traffic to many websites.

AI-specific robots.txt configuration refers to the practice of creating targeted rules within your robots.txt file that specifically address artificial intelligence crawlers and training bots, distinct from traditional search engine crawlers like Googlebot. While conventional robots.txt has historically focused on managing Googlebot, Bingbot, and other search indexers, the emergence of large language models and AI training systems has created an entirely new category of bot traffic that requires separate management strategies. According to recent data from November 2025, AI crawlers now account for approximately 80% of all bot traffic to many publisher websites, fundamentally shifting the importance of robots.txt configuration from a nice-to-have SEO tool to a critical content protection mechanism. The distinction matters because AI training crawlers operate under different business models than search engines—they’re collecting data to train proprietary models rather than to drive referral traffic—making the traditional trade-off of allowing crawlers in exchange for search visibility no longer applicable. For publishers, this means that robots.txt decisions now directly impact content visibility to AI systems, potential unauthorized use of proprietary content in training datasets, and the overall traffic and revenue implications of AI discovery.

AI crawlers fall into three distinct operational categories, each with different characteristics, traffic implications, and strategic considerations for publishers. Training crawlers collect large volumes of text for machine learning model development; they typically consume significant bandwidth, generate heavy server load, and provide no referral traffic in return. Examples include OpenAI’s GPTBot and Anthropic’s ClaudeBot. Search and citation crawlers function much like traditional search engines, indexing content for retrieval and providing attribution; they generate moderate traffic volumes and can drive referral traffic through citations and links. This category includes OpenAI’s OAI-SearchBot and Perplexity’s PerplexityBot, while Google’s AI Overviews draw on pages crawled by the standard Googlebot. User-triggered crawlers operate on demand when an end user explicitly asks an AI assistant to read a webpage, as when ChatGPT browses the web through ChatGPT-User; these generate lower traffic volumes but represent direct user engagement with your content. The categorization matters strategically because training crawlers present the highest content protection concerns with minimal business benefit, search crawlers offer a middle ground with some referral potential, and user-triggered crawlers typically align with user intent and can enhance content visibility.

| Crawler Category | Purpose | Traffic Volume | Referral Potential | Content Risk | Examples |
|---|---|---|---|---|---|
| Training | Model development | Very High | None | Very High | GPTBot, ClaudeBot |
| Search/Citation | Content indexing & attribution | Moderate | Moderate | Moderate | OAI-SearchBot, Google AI |
| User-Triggered | On-demand analysis | Low | Low | Low | ChatGPT Web Browse, Claude |

The major AI companies operating crawlers include OpenAI, Anthropic, Google, Meta, Apple, and Amazon, each with distinct user-agent tokens that allow identification in server logs and robots.txt configuration. OpenAI operates multiple crawlers: GPTBot for training data collection, OAI-SearchBot for search and citation indexing, and ChatGPT-User for user-triggered web browsing. Anthropic’s primary crawler is ClaudeBot, used for training data collection; the older tokens Claude-Web and anthropic-ai still appear in many blocklists. Google-Extended is a robots.txt control token for Gemini and other Google AI products rather than a separate crawler: Google fetches pages with its existing user agents, so Google-Extended does not appear in the User-Agent header of any request. Meta uses facebookexternalhit for link previews and meta-externalagent for AI-related crawling, Apple operates Applebot for Siri and search features (with Applebot-Extended as the robots.txt token for opting out of AI training), and Amazon uses Amazonbot for Alexa and search capabilities. To identify these crawlers in your server logs, examine the User-Agent header in HTTP requests; most legitimate AI crawlers include their product token and a version number in this field (for example GPTBot/1.0), while robots.txt rules match on the product token alone. For enhanced security, you can verify crawler legitimacy by checking the requesting IP address against published IP ranges provided by each company; OpenAI publishes its crawler IP ranges, as do Google and other major providers, allowing you to distinguish between legitimate crawlers and spoofed user-agents.
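As a rough illustration of identifying crawlers by their User-Agent header, the sketch below maps product tokens to the three categories described earlier. The function name, the mapping, and the sample header strings are assumptions made for this example; real headers are longer and the token list will need regular updates.

```python
from typing import Optional

# Product tokens taken from the crawlers named in this guide. The category
# assigned to each token is an illustrative assumption, not an official registry.
AI_CRAWLER_TOKENS = {
    "GPTBot": "training",
    "ClaudeBot": "training",
    "OAI-SearchBot": "search",
    "PerplexityBot": "search",
    "ChatGPT-User": "user-triggered",
}


def classify_user_agent(user_agent: str) -> Optional[str]:
    """Return the crawler category for a User-Agent header, or None if unknown."""
    lowered = user_agent.lower()
    for token, category in AI_CRAWLER_TOKENS.items():
        # Case-insensitive substring match on the product token.
        if token.lower() in lowered:
            return category
    return None


# Simplified, hypothetical header values for demonstration only.
print(classify_user_agent("Mozilla/5.0 (compatible; GPTBot/1.0)"))
print(classify_user_agent("Mozilla/5.0 (Windows NT 10.0) Chrome/120.0"))
```

A substring match like this only reports what a request claims to be; it cannot detect a spoofed header, which is why IP verification is treated as a separate step.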

The basic syntax for AI-specific robots.txt rules follows the standard robots.txt format with user-agent matching and allow/disallow directives targeted at specific crawlers. To block OpenAI’s GPTBot from training data collection while allowing their search crawler, you would structure your robots.txt as follows:

    User-agent: GPTBot
    Disallow: /

    User-agent: OAI-SearchBot
    Allow: /

For more granular control, you can apply path-specific rules that block certain sections while allowing others—for example, blocking AI crawlers from accessing your paywall content or user-generated content sections:

    User-agent: GPTBot
    Disallow: /premium/
    Disallow: /user-content/
    Allow: /public-articles/

    User-agent: ClaudeBot
    Disallow: /

You can group multiple user-agents under a single rule set by listing several User-agent lines before a shared set of directives, which applies identical restrictions across several crawlers and reduces configuration complexity. Testing and validation of your robots.txt configuration is critical; tools like the robots.txt report in Google Search Console and third-party validators can verify that your rules are syntactically correct and will be interpreted as intended by crawlers. Remember that robots.txt is advisory rather than enforceable: compliant crawlers will respect these rules, but malicious actors or non-compliant bots may ignore them entirely, so sensitive content needs additional server-level enforcement.
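One way to sanity-check a grouped rule set before deploying it is Python's standard-library urllib.robotparser. The sketch below is a minimal local check, assuming a placeholder example.com URL and a two-group file; it shows how one parser reads the rules and is not a guarantee of how every crawler will interpret them (this parser applies the first matching rule in a group, whereas some crawlers use the most specific match).

```python
from urllib.robotparser import RobotFileParser

# Two User-agent lines share one Disallow rule; a second group allows
# the search crawler. The file content here is an example, not a recommendation.
ROBOTS_TXT = """\
User-agent: GPTBot
User-agent: ClaudeBot
Disallow: /

User-agent: OAI-SearchBot
Allow: /
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)
for agent in ("GPTBot", "ClaudeBot", "OAI-SearchBot"):
    # example.com is a placeholder host for this check.
    allowed = parser.can_fetch(agent, "https://example.com/public-articles/post")
    print(f"{agent}: {'allowed' if allowed else 'blocked'}")
```

Running the same check against your real robots.txt text after each edit is a cheap way to catch a misplaced blank line or a rule attached to the wrong group.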

The decision to block or allow AI crawlers involves fundamental trade-offs between content protection and visibility that vary significantly based on your business model and content strategy. Blocking training crawlers like GPTBot entirely eliminates the risk of your content being used to train proprietary AI models without compensation, but it also means your content won’t appear in AI-generated responses, potentially reducing discovery and traffic from users who interact with AI systems. Conversely, allowing training crawlers increases the likelihood that your content will be incorporated into AI training datasets, potentially without attribution or compensation, but it may enhance visibility if those AI systems eventually cite or reference your content. The strategic decision should consider your content’s competitive advantage—proprietary research, original analysis, and unique data warrant stricter blocking, while evergreen educational content or commodity information may benefit from broader AI visibility. Different publisher types face different calculus: news organizations might allow search crawlers to gain citation traffic while blocking training crawlers, while educational publishers might allow broader access to increase reach, and SaaS companies might block all AI crawlers to protect proprietary documentation. Monitoring the impact of your blocking decisions through server logs and traffic analytics is essential to validate whether your configuration is achieving your intended business outcomes.

While robots.txt provides a clear mechanism for communicating crawler policies, it is advisory and not technically enforceable: compliant crawlers will respect your rules, but non-compliant actors may ignore them entirely, so sensitive content needs additional technical enforcement layers. IP verification and allowlisting are the most reliable way to confirm a crawler’s identity; by maintaining a list of legitimate IP addresses published by OpenAI, Google, Anthropic, and other major AI companies, you can verify that requests claiming to be from these crawlers actually originate from their infrastructure. Firewall rules and server-level blocking provide the strongest enforcement, allowing you to reject requests from specific user-agents or IP ranges at the network level before they consume server resources. For Apache servers, .htaccess configuration can enforce crawler restrictions:

    <IfModule mod_rewrite.c>
        RewriteEngine On
        # Return 403 Forbidden when the User-Agent contains "GPTBot" (case-insensitive)
        RewriteCond %{HTTP_USER_AGENT} GPTBot [NC]
        RewriteRule ^.*$ - [F,L]
    </IfModule>

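Meta tags in your HTML head section provide page-level signals without modifying robots.txt. A crawler must fetch the page to read the tag, so this approach expresses usage preferences rather than restricting access, and the noai and noimageai values shown here are non-standard directives that only some crawlers honor:

    <meta name="robots" content="noai, noimageai">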

Regularly checking server logs for crawler activity allows you to identify new crawlers, verify that your rules are being respected, and detect spoofed user-agents attempting to circumvent your restrictions. Tools like Knowatoa and Merkle provide automated validation and monitoring of your robots.txt configuration and crawler behavior, offering visibility into which crawlers are accessing your site and whether they’re respecting your directives.
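The IP verification step mentioned earlier can be sketched with Python's standard ipaddress module. The ranges below are reserved documentation blocks used purely as placeholders, and the function and dictionary names are hypothetical; substitute the ranges each crawler operator actually publishes.

```python
import ipaddress

# Placeholder CIDR ranges from reserved documentation blocks, NOT real
# crawler ranges. Replace with the lists published by each operator.
PUBLISHED_RANGES = {
    "GPTBot": ["192.0.2.0/24"],
    "ClaudeBot": ["198.51.100.0/24"],
}


def is_verified_crawler(crawler: str, remote_ip: str) -> bool:
    """Return True if remote_ip falls inside a range listed for the claimed crawler."""
    address = ipaddress.ip_address(remote_ip)
    networks = (
        ipaddress.ip_network(cidr) for cidr in PUBLISHED_RANGES.get(crawler, [])
    )
    return any(address in network for network in networks)


# A request claiming to be GPTBot from an address outside the listed range
# would be treated as a spoofed user-agent.
print(is_verified_crawler("GPTBot", "192.0.2.15"))
print(is_verified_crawler("GPTBot", "203.0.113.9"))
```

Keeping the ranges in a data structure separate from the check makes the monthly refresh a data update rather than a code change.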

Ongoing maintenance of your AI-specific robots.txt configuration is critical because the landscape of AI crawlers is rapidly evolving, with new crawlers emerging regularly and existing crawlers modifying their user-agent strings and behavior patterns. Your monitoring strategy should include weekly log reviews, monthly verification of crawler IP addresses, and quarterly comprehensive audits.

The rapid evolution of AI crawler technology means that a robots.txt configuration that was appropriate six months ago may no longer reflect your current needs or the current threat landscape, making regular review and adaptation essential to maintaining effective content protection.
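A log review of this kind can be partly automated. The following sketch assumes access logs in the common "combined" format and a hypothetical list of crawlers you have disallowed; it counts successful responses served to those crawlers for anything other than robots.txt itself, which would suggest a rule is being ignored or is misconfigured.

```python
import re
from collections import Counter

# Extracts path, status, and User-Agent from a combined-format log line.
LOG_PATTERN = re.compile(
    r'"\S+ (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"$'
)

# Assumed policy: these tokens are disallowed in robots.txt.
BLOCKED_TOKENS = ("GPTBot", "ClaudeBot")


def count_blocked_crawler_hits(log_lines):
    """Count 2xx responses served to disallowed crawlers, ignoring robots.txt fetches."""
    hits = Counter()
    for line in log_lines:
        match = LOG_PATTERN.search(line.strip())
        if not match:
            continue  # skip lines that are not in the expected format
        if match.group("path") == "/robots.txt":
            continue  # crawlers are expected to fetch robots.txt
        if not match.group("status").startswith("2"):
            continue  # only successful responses indicate served content
        agent = match.group("agent").lower()
        for token in BLOCKED_TOKENS:
            if token.lower() in agent:
                hits[token] += 1
    return hits


# Hypothetical log lines for demonstration.
sample = [
    '203.0.113.5 - - [10/Nov/2025:12:00:00 +0000] "GET /premium/report HTTP/1.1" 200 5123 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '203.0.113.5 - - [10/Nov/2025:12:00:01 +0000] "GET /robots.txt HTTP/1.1" 200 310 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '203.0.113.7 - - [10/Nov/2025:12:00:02 +0000] "GET /premium/report HTTP/1.1" 403 199 "-" "Mozilla/5.0 (compatible; ClaudeBot/1.0)"',
]
print(dict(count_blocked_crawler_hits(sample)))
```

Any nonzero count is a prompt to investigate, either by fixing the robots.txt rule or by escalating to server-level blocking.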

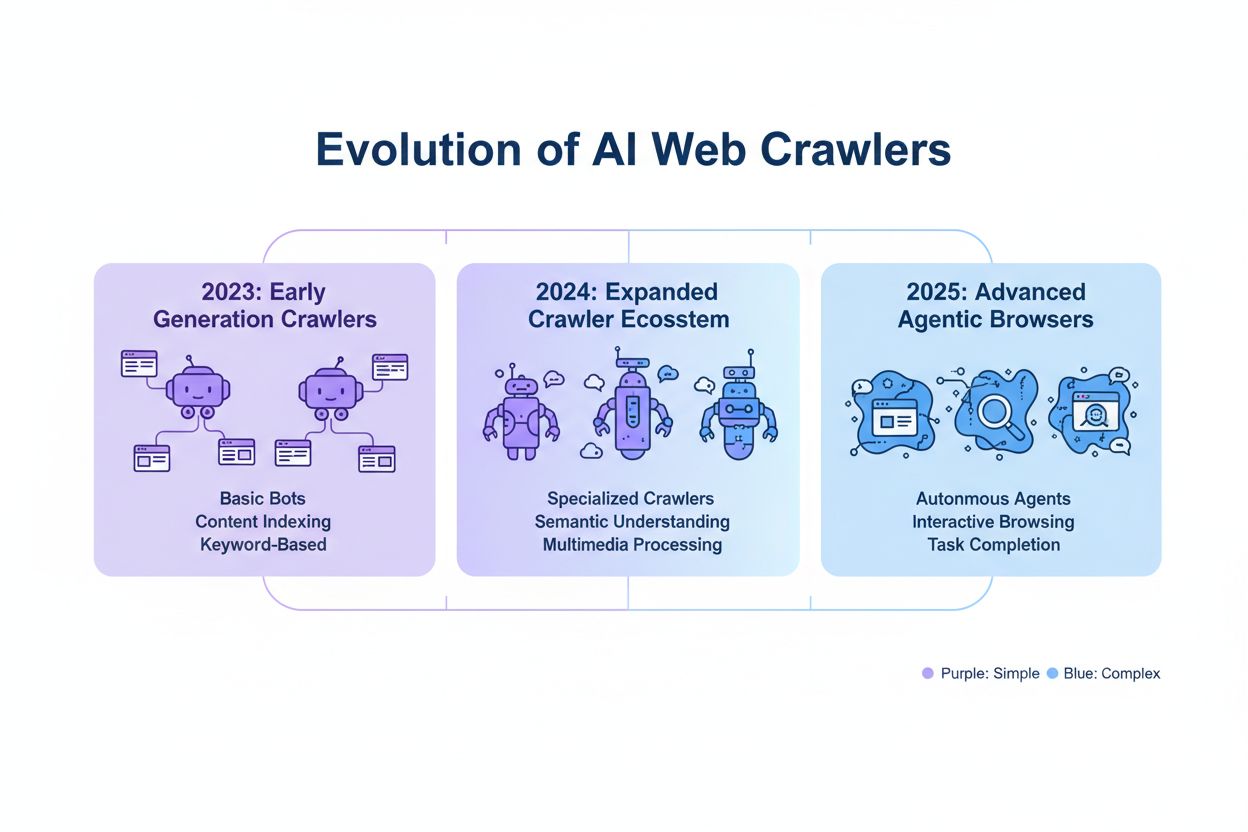

The next generation of AI crawlers presents novel challenges that traditional robots.txt configuration may be unable to address effectively. Agentic browser crawlers like ChatGPT Atlas and Google Project Mariner operate as full-featured web browsers rather than simple HTTP clients, rendering JavaScript, executing user interactions, and behaving indistinguishably from human users—these crawlers may not identify themselves with distinctive user-agent strings, making robots.txt-based blocking ineffective. Many emerging crawlers are adopting standard Chrome user-agent strings to avoid detection and blocking, deliberately obscuring their identity to circumvent robots.txt rules and other access controls. This trend is driving a shift toward IP-based blocking as an emerging necessity, where publishers must maintain allowlists of legitimate crawler IP addresses and block all other traffic from suspicious sources, fundamentally changing the enforcement model from user-agent matching to network-level access control. Spoofed user-agents and circumvention techniques are becoming increasingly common, with bad actors impersonating legitimate crawlers or using generic user-agent strings to evade detection. The future of AI crawler management will likely require a multi-layered approach combining robots.txt configuration, IP verification, firewall rules, and potentially behavioral analysis to distinguish legitimate crawlers from malicious actors. Staying informed about emerging crawler technologies and participating in industry discussions about crawler ethics and standards is essential for publishers seeking to maintain effective content protection strategies.

Implementing effective AI-specific robots.txt configuration requires a comprehensive approach that balances content protection with strategic visibility goals. Start with a clear content protection policy that defines which content categories require blocking (proprietary research, premium content, user-generated content) versus which can be safely exposed to AI crawlers (public articles, educational content, commodity information). Implement a tiered blocking strategy that distinguishes between training crawlers (typically block), search crawlers (typically allow with monitoring), and user-triggered crawlers (typically allow), rather than applying a blanket allow-or-block approach to all AI crawlers. Combine robots.txt with server-level enforcement by implementing firewall rules and IP verification for your most sensitive content, recognizing that robots.txt alone is insufficient for strong content protection. Integrate AI crawler management into your broader SEO and content strategy by considering how blocking decisions affect your visibility in AI-generated responses, citations, and AI-powered search features—this integration ensures that your robots.txt configuration supports rather than undermines your overall business objectives. Establish a monitoring and maintenance cadence with weekly log reviews, monthly IP verification, and quarterly comprehensive audits to ensure your configuration remains effective as the crawler landscape evolves. Use tools like AmICited.com to monitor your content’s visibility across AI systems and understand the impact of your blocking decisions on AI discovery and citation. For different publisher types: news organizations should typically allow search crawlers while blocking training crawlers to maximize citation traffic; educational publishers should consider allowing broader access to increase reach; and SaaS companies should implement strict blocking for proprietary documentation. 
When robots.txt blocking proves insufficient due to spoofed user-agents or non-compliant crawlers, escalate to firewall rules and IP-based blocking to enforce your content protection policies at the network level.
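The tiered strategy described above can be kept as data and rendered into robots.txt, so that policy changes stay in one place. This is a minimal sketch: the tier names, the crawler assignments, and the render_robots_txt helper are assumptions for illustration, and the default rules simply mirror the "typically block" and "typically allow" guidance in this section.

```python
# Tier assignments follow the categories discussed in this guide; adjust the
# crawler tokens and rules to match your own content protection policy.
POLICY = {
    "training": {"agents": ["GPTBot", "ClaudeBot"], "rule": "Disallow: /"},
    "search": {"agents": ["OAI-SearchBot", "PerplexityBot"], "rule": "Allow: /"},
    "user-triggered": {"agents": ["ChatGPT-User"], "rule": "Allow: /"},
}


def render_robots_txt(policy):
    """Render one robots.txt group per tier, with grouped User-agent lines."""
    groups = []
    for tier, settings in policy.items():
        lines = [f"# {tier} crawlers"]
        lines += [f"User-agent: {agent}" for agent in settings["agents"]]
        lines.append(settings["rule"])
        groups.append("\n".join(lines))
    # Blank lines separate groups; the file ends with a trailing newline.
    return "\n\n".join(groups) + "\n"


print(render_robots_txt(POLICY))
```

Generating the file from a policy table also makes quarterly audits easier, since the reviewer reads the table rather than the rendered rules.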

Training crawlers like GPTBot and ClaudeBot collect data for model development and provide zero referral traffic, making them high-risk for content protection. Search crawlers like OAI-SearchBot and PerplexityBot index content for AI-powered search and may send referral traffic through citations. Most publishers block training crawlers while allowing search crawlers to balance content protection with visibility.

Google officially states that blocking Google-Extended does not impact search rankings or inclusion in AI Overviews. However, some webmasters have reported concerns, so monitor your search performance after implementing blocks. AI Overviews in Google Search follow standard Googlebot rules, not Google-Extended.

Crawlers can ignore robots.txt, because it is advisory rather than enforceable. Well-behaved crawlers from major companies generally respect robots.txt directives, but some crawlers ignore them. For stronger protection, implement server-level blocking via .htaccess or firewall rules, and verify legitimate crawlers using published IP address ranges.

Review and update your blocklist quarterly at minimum. New AI crawlers emerge regularly, so check server logs monthly to identify new crawlers hitting your site. Track community resources like the ai.robots.txt GitHub project for updates on emerging crawlers and user-agent strings.

Whether to block all AI crawlers depends on your business priorities. Blocking training crawlers protects your content from being incorporated into AI models without compensation. Blocking search crawlers may reduce your visibility in AI-powered discovery platforms like ChatGPT search or Perplexity. Many publishers opt for selective blocking that targets training crawlers while allowing search and citation crawlers.

Check your server logs for crawler user-agent strings and verify that blocked crawlers aren't accessing your content pages. Use analytics tools to monitor bot traffic patterns. Test your configuration with Knowatoa AI Search Console or Merkle robots.txt Tester to validate that your rules are working as intended.

Agentic browser crawlers like ChatGPT Atlas and Google Project Mariner operate as full-featured web browsers rather than simple HTTP clients. They often use standard Chrome user-agent strings, making them indistinguishable from regular browser traffic. IP-based blocking becomes necessary for controlling access to these advanced crawlers.

AI-specific robots.txt controls access to your content, while tools like AmICited monitor how AI platforms reference and cite your content. Together, they provide complete visibility and control: robots.txt manages crawler access, and monitoring tools track your content's impact across AI systems.
