AI Visibility Index

Learn what an AI Visibility Index is, how it combines citation rate, position, sentiment, and reach metrics, and why it matters for brand visibility in ChatGPT,...

A comprehensive system for tracking and evaluating how AI systems (ChatGPT, Perplexity, Google AI Overviews) mention, cite, and position brands across generative search platforms. It establishes standardized metrics to quantify brand presence in zero-click AI environments where users receive answers directly without visiting websites.

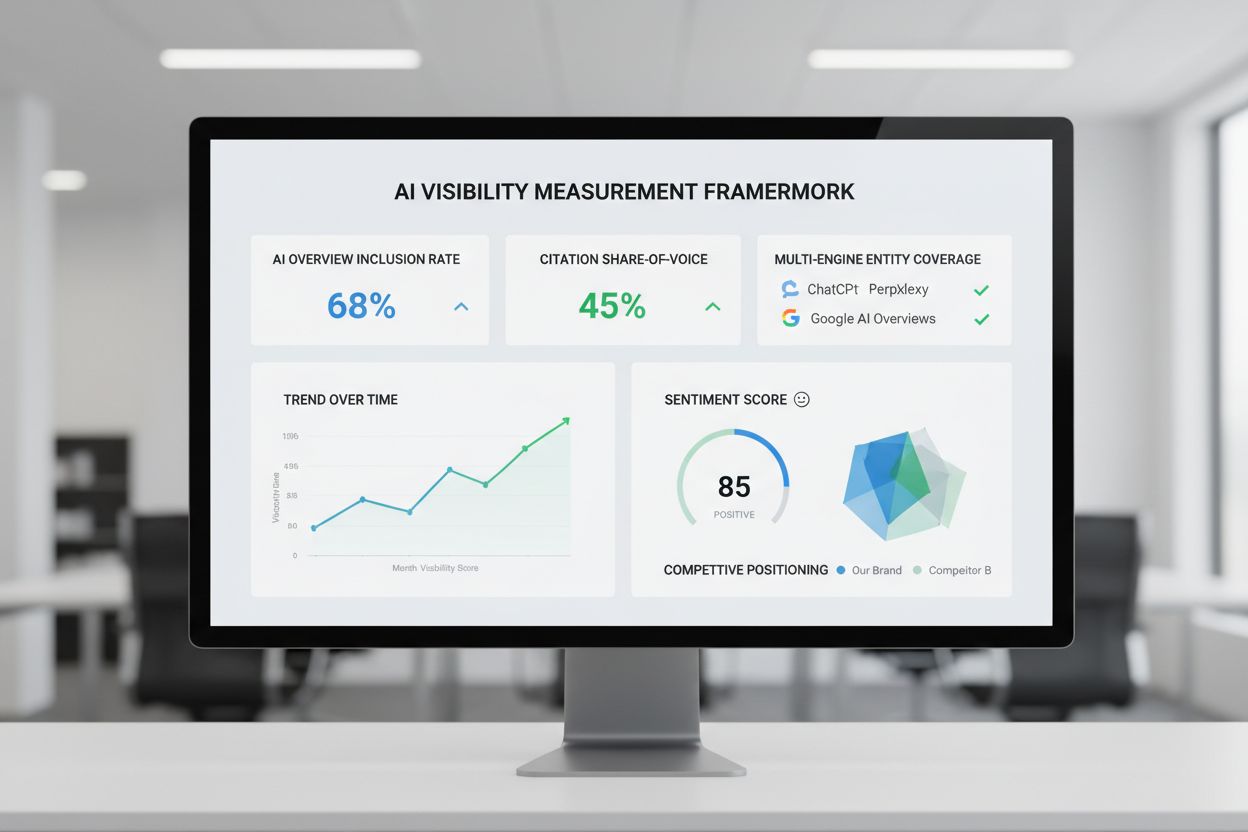

An AI Visibility Measurement Framework establishes standardized metrics to quantify how frequently and prominently brands appear across AI-powered answer engines. Unlike traditional search engine optimization, which focuses on organic click-through rates and keyword rankings, this framework measures brand presence in zero-click AI environments where users receive answers directly without visiting websites. Its core metrics show how AI systems reference, cite, and represent brands in their responses. These dimensions matter to marketing teams because AI answer engines now mediate a significant portion of information discovery, particularly for complex queries where users want a synthesized answer rather than a list of individual web pages.

| Metric | Definition | Why It Matters |
|---|---|---|
| AI Overview Inclusion Rate | Percentage of target queries where your brand appears in AI-generated answers across major engines (ChatGPT, Perplexity, Google AI Overviews) | Measures baseline visibility and reach; directly impacts brand awareness in AI-mediated search |
| Citation Share-of-Voice | Your brand’s percentage of total citations within AI answers for competitive query sets | Indicates competitive positioning; shows whether AI systems prioritize your content over competitors |
| Multi-Engine Entity Coverage | Number of distinct AI platforms where your brand is mentioned for a given query set | Reveals distribution of visibility; identifies which engines favor your brand or competitors |
| Answer Sentiment Score | Qualitative assessment of how AI systems frame your brand (positive, neutral, negative context) | Measures brand perception quality; identifies potential reputation risks or opportunities in AI narratives |

These metrics fundamentally differ from traditional SEO KPIs because they operate in a different information architecture. Traditional metrics like keyword rankings and organic traffic assume users will click through to your website. AI visibility metrics acknowledge that many users never leave the AI interface—they receive their answer and move on. A brand can rank #1 for a keyword in Google’s traditional search results but receive zero mentions in Google AI Overviews for the same query. Conversely, a brand might not rank in the top 10 organic results but still be prominently cited in AI answers because the AI system values authoritative sources differently than Google’s ranking algorithm. This distinction makes the framework essential for understanding modern search behavior and allocating marketing resources effectively across channels.
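As a rough illustration, the four metrics in the table can be computed from a set of captured answers. This is a minimal sketch, not a reference implementation: the `Answer` record, the brand names, and the mapping of sentiment to -1/0/+1 are all assumptions made for the example, and inclusion rate is interpreted here as the share of distinct queries where the brand appears in at least one engine's answer.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Answer:
    """One captured AI answer (hypothetical record layout)."""
    query: str
    engine: str
    cited_brands: tuple  # brands cited in this answer
    sentiment: dict      # brand -> -1 (negative), 0 (neutral), +1 (positive)


def inclusion_rate(answers, brand):
    """Share of distinct queries where the brand appears in at least one answer."""
    queries = {a.query for a in answers}
    hits = {a.query for a in answers if brand in a.cited_brands}
    return len(hits) / len(queries) if queries else 0.0


def citation_share_of_voice(answers, brand):
    """The brand's citations divided by all brand citations in the answer set."""
    counts = Counter(b for a in answers for b in a.cited_brands)
    total = sum(counts.values())
    return counts[brand] / total if total else 0.0


def engine_coverage(answers, brand):
    """Number of distinct engines that cite the brand at least once."""
    return len({a.engine for a in answers if brand in a.cited_brands})


def sentiment_score(answers, brand):
    """Mean sentiment over answers that mention the brand, or None if never mentioned."""
    scores = [a.sentiment[brand] for a in answers if brand in a.sentiment]
    return sum(scores) / len(scores) if scores else None


# Made-up sample data for three queries across three engines.
answers = [
    Answer("best crm", "chatgpt", ("Acme", "Globex"), {"Acme": 1, "Globex": 0}),
    Answer("best crm", "perplexity", ("Globex",), {"Globex": 1}),
    Answer("crm pricing", "chatgpt", ("Acme",), {"Acme": 0}),
    Answer("crm pricing", "google_ai_overviews", (), {}),
    Answer("crm for startups", "perplexity", ("Globex", "Initech"),
           {"Globex": 0, "Initech": 1}),
]

print(f"inclusion rate:  {inclusion_rate(answers, 'Acme'):.2f}")
print(f"share of voice:  {citation_share_of_voice(answers, 'Acme'):.2f}")
print(f"engine coverage: {engine_coverage(answers, 'Acme')}")
print(f"sentiment score: {sentiment_score(answers, 'Acme')}")
```

Whether inclusion is counted per query or per query-engine pair changes the number noticeably, so the choice should be fixed once and kept constant across measurement periods.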

Implementing an effective AI Visibility Measurement Framework requires a sophisticated data collection and instrumentation pipeline that captures, processes, and analyzes AI responses at scale. The process involves multiple technical steps that must account for the unique challenges of AI systems, including response variability, frequent model updates, and the need for consistent versioning across measurement periods.

The data collection process follows this structured approach:

Define Priority Query Sets - Establish 200-500 target queries representing your brand’s core business areas, competitive keywords, and emerging topics. Segment queries by intent (informational, commercial, navigational) and category to enable granular analysis.

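Schedule Automated Query Execution - Deploy API-based query runners that systematically submit queries to target AI engines on a consistent cadence (daily, weekly, or monthly depending on volatility requirements). Typical sources are the OpenAI API as a proxy for ChatGPT, the Perplexity API, and a search results data provider for Google AI Overviews. API responses can differ from what users see in the consumer products, so treat them as an approximation of real-world answers.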

Capture Complete Response Data - Record full AI-generated responses including text content, citations, source URLs, timestamps, and model version identifiers. This versioning metadata is critical because AI models update frequently, and response changes may reflect model updates rather than content changes.

Parse Structured Data Elements - Extract entity mentions, citation sources, confidence indicators, and response structure using natural language processing. Identify which brands are mentioned, in what context, and with what prominence (opening statement vs. supporting detail).

Classify Sentiment & Context - Apply sentiment classification models to determine whether brand mentions are positive, neutral, or negative. Categorize context (product recommendation, competitive comparison, warning/limitation) to understand narrative framing.

Load to Data Warehouse - Aggregate processed data into a centralized analytics warehouse (Snowflake, BigQuery, or similar) that enables historical trending, comparative analysis, and integration with other marketing data sources.
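The capture and parse steps above can be sketched as follows. The `CapturedResponse` fields, the model version string, and the brand names are hypothetical, and plain whole-word matching stands in for the NLP-based entity extraction the steps describe. Prominence is approximated by checking whether a brand appears in the first sentence of the answer.

```python
import re
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class CapturedResponse:
    """One stored AI answer with the versioning metadata described above."""
    query: str
    intent: str          # "informational", "commercial", or "navigational"
    engine: str
    model_version: str   # lets analysts separate model updates from content changes
    text: str
    source_urls: list
    captured_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


def brand_mentions(response, brands):
    """Map each mentioned brand to "opening" or "supporting" prominence.

    Uses case-insensitive whole-word matching; a production pipeline would
    likely need entity resolution for aliases and product names.
    """
    # Treat everything up to the first sentence-ending punctuation as the opening.
    first_sentence = re.split(r"(?<=[.!?])\s+", response.text.strip(), maxsplit=1)[0]
    found = {}
    for brand in brands:
        pattern = re.compile(rf"\b{re.escape(brand)}\b", re.IGNORECASE)
        if pattern.search(first_sentence):
            found[brand] = "opening"
        elif pattern.search(response.text):
            found[brand] = "supporting"
    return found


response = CapturedResponse(
    query="best crm for small teams",
    intent="commercial",
    engine="example-engine",
    model_version="example-model-v1",  # hypothetical identifier
    text=("Acme and Globex are popular CRM choices. "
          "Initech is a lower-cost alternative for small teams."),
    source_urls=["https://example.com/crm-comparison"],
)

print(brand_mentions(response, ["Acme", "Globex", "Initech", "Umbrella"]))
```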

This pipeline must handle response volatility: the same query submitted twice to the same AI engine may yield different answers. Statistical controls, multiple samples per query, and confidence scoring help distinguish genuine changes from natural variation. The technical infrastructure typically relies on cloud-based automation platforms and custom Python or JavaScript scripts to manage this complexity at scale.
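One possible form of statistical control is to sample each query several times and report the inclusion rate with a confidence interval rather than as a single number. The sketch below uses the Wilson score interval, which is a choice made for this example and not something the framework prescribes; the sample counts are made up.

```python
import math


def wilson_interval(hits, samples, z=1.96):
    """Wilson score interval for a proportion (z=1.96 gives roughly 95% coverage)."""
    if samples <= 0:
        raise ValueError("need at least one sample")
    p = hits / samples
    denom = 1 + z ** 2 / samples
    centre = (p + z ** 2 / (2 * samples)) / denom
    half_width = z * math.sqrt(p * (1 - p) / samples + z ** 2 / (4 * samples ** 2)) / denom
    return centre - half_width, centre + half_width


def intervals_overlap(a, b):
    """True when two (low, high) intervals share at least one point."""
    return a[0] <= b[1] and b[0] <= a[1]


# The brand appeared in 7 of 10 samples last week and 5 of 10 this week.
last_week = wilson_interval(7, 10)
this_week = wilson_interval(5, 10)

print(f"last week: {last_week[0]:.3f} to {last_week[1]:.3f}")
print(f"this week: {this_week[0]:.3f} to {this_week[1]:.3f}")
print("overlapping, likely noise" if intervals_overlap(last_week, this_week)
      else "non-overlapping, worth investigating")
```

With only ten samples per period the intervals are wide, which is the point: a swing from 70% to 50% on small samples is not strong evidence of a real change.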

The AI Visibility Measurement Framework transforms competitive intelligence by revealing how AI systems position your brand relative to competitors in synthesized answer contexts. Traditional competitive analysis tools focus on search rankings and website traffic, but they miss the critical zero-click AI channel where answers are delivered without driving traffic to any website.

Key insights enabled by this framework include:

Co-Citation Pattern Analysis - Identify which competitors consistently appear alongside your brand in AI answers. High co-citation frequency indicates direct competitive positioning in AI narratives, even if traditional SERP overlap is minimal. This reveals “AI competitors” who may not rank well in organic search but dominate AI answer generation.

Narrative Differentiation Mapping - Analyze how AI systems describe your brand versus competitors. Does the AI emphasize different product features, use cases, or company attributes? This reveals gaps between your positioning and how AI systems actually represent you, enabling targeted content strategies.

Niche Competitor Discovery - AI visibility often surfaces competitors invisible in traditional search analysis. A specialized SaaS platform might not rank in top organic results for broad queries but still receive prominent AI citations because the AI system values specialized expertise. This framework identifies these “hidden competitors” that traditional tools miss.

Citation Authority Tracking - Monitor which sources AI systems cite when discussing your brand and competitors. If competitors’ content is cited more frequently, it signals that AI systems find their content more authoritative, trustworthy, or comprehensive for your category.

Query-Level Competitive Shifts - Track how competitive positioning varies across different query types. Your brand might dominate AI answers for product-specific queries but lose visibility in broader industry queries, revealing content gaps or positioning weaknesses.
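Co-citation pattern analysis, the first insight in the list above, amounts to counting how often pairs of brands appear in the same answer. A minimal sketch, assuming brand mentions have already been extracted per answer (the brand names are placeholders):

```python
from collections import Counter
from itertools import combinations


def co_citation_counts(answers):
    """Count how many answers mention each unordered pair of brands."""
    pairs = Counter()
    for brands in answers:
        # sorted(set(...)) removes repeats and gives each pair a stable key order
        for a, b in combinations(sorted(set(brands)), 2):
            pairs[(a, b)] += 1
    return pairs


def co_cited_with(pairs, brand):
    """Brands that appear alongside `brand`, most frequent first."""
    partners = Counter()
    for (a, b), n in pairs.items():
        if a == brand:
            partners[b] += n
        elif b == brand:
            partners[a] += n
    return partners.most_common()


# Each inner list is the set of brands mentioned in one AI answer.
answers = [
    ["Acme", "Globex"],
    ["Acme", "Globex", "Initech"],
    ["Globex", "Initech"],
    ["Acme"],
]

pairs = co_citation_counts(answers)
print(co_cited_with(pairs, "Acme"))  # [('Globex', 2), ('Initech', 1)]
```

Brands that rank high in this list but are absent from a traditional SERP competitor set are candidates for the "AI competitors" described above.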

AmICited.com specializes in this competitive intelligence dimension, providing purpose-built dashboards that track competitor mentions, co-citation patterns, and narrative positioning across AI engines. The platform enables marketing teams to identify competitive threats in the AI channel before they impact traditional search visibility, allowing proactive content and positioning adjustments.

Successfully operationalizing an AI Visibility Measurement Framework requires aligning measurement infrastructure with organizational roles and decision-making workflows. Different personas within marketing and product organizations need distinct views of AI visibility data, tailored to their specific responsibilities and KPIs.

| Persona | Primary Dashboard Needs | Key Metrics | Decision Frequency |
|---|---|---|---|
| CMO/VP Marketing | Executive summary; competitive positioning; revenue impact; trend analysis | Overall AI visibility share, competitive benchmarks, estimated traffic impact, sentiment trends | Monthly/Quarterly |
| Head of SEO | Query-level performance; content gaps; technical optimization opportunities | Inclusion rate by query cluster, citation share-of-voice, source diversity, ranking correlation | Weekly |
| Content Lead | Content performance; topic coverage; narrative analysis | Which content drives AI citations, topic gaps, sentiment by content piece, competitor content analysis | Bi-weekly |
| Product Marketing | Feature visibility; use case coverage; competitive differentiation | Feature mentions in AI answers, use case representation, competitive narrative comparison | Weekly |

Effective operationalization extends beyond dashboards to include automated alerting systems that notify teams of significant changes. When a brand’s AI visibility drops 20% week-over-week, or when a competitor suddenly appears in queries the brand previously dominated, alerts enable rapid response. These systems should distinguish between meaningful changes and natural variation, using statistical thresholds to reduce alert fatigue.
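A statistical threshold of this kind might combine the 20% relative drop with a significance check, so that small samples do not trigger alerts on their own. The sketch below uses a two-proportion z-test; the test, the cutoff of 1.96, and the sample counts are assumptions for illustration.

```python
import math


def two_proportion_z(hits_prev, n_prev, hits_curr, n_curr):
    """z statistic for the change in inclusion rate between two periods."""
    p_prev = hits_prev / n_prev
    p_curr = hits_curr / n_curr
    pooled = (hits_prev + hits_curr) / (n_prev + n_curr)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_prev + 1 / n_curr))
    return 0.0 if se == 0 else (p_curr - p_prev) / se


def should_alert(hits_prev, n_prev, hits_curr, n_curr,
                 min_relative_drop=0.20, z_threshold=1.96):
    """Alert only when the drop is both large and unlikely to be sampling noise."""
    p_prev = hits_prev / n_prev
    p_curr = hits_curr / n_curr
    if p_prev == 0:
        return False  # nothing to drop from
    relative_drop = (p_prev - p_curr) / p_prev
    z = two_proportion_z(hits_prev, n_prev, hits_curr, n_curr)
    return relative_drop >= min_relative_drop and z <= -z_threshold


# Same 25% relative drop (40% to 30%), measured on large and small samples.
print(should_alert(200, 500, 150, 500))  # large sample
print(should_alert(8, 20, 6, 20))        # small sample
```

The two calls describe the same relative drop, but only the large-sample case clears the significance check, which is how the threshold reduces alert fatigue.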

Experimentation workflows integrate AI visibility measurement into content and SEO testing. Teams can hypothesize that certain content formats, topic angles, or source citations improve AI visibility, then measure impact through the framework. This transforms AI visibility from a monitoring metric into an optimization target with measurable feedback loops.

A typical 90-day implementation roadmap follows this structure: Weeks 1-2 establish query sets and baseline measurement infrastructure; Weeks 3-4 implement data collection pipelines and initial dashboards; Weeks 5-8 develop persona-specific views and alerting systems; Weeks 9-12 integrate with existing marketing systems, establish benchmarks, and train teams on interpretation and action. This phased approach allows organizations to generate insights quickly while building toward comprehensive measurement maturity.

The ultimate value of an AI Visibility Measurement Framework emerges when AI visibility metrics connect to revenue impact and customer journey attribution. AI answer engines represent a new touchpoint in the customer journey, but their impact on revenue remains invisible in traditional attribution models that focus on website visits and conversions.

Integration methods that connect AI visibility to revenue include:

Zero-Click Touchpoint Modeling - Recognize that AI-sourced answers represent customer interactions even when they don’t drive website traffic. A user who receives a product recommendation from an AI answer engine has experienced a brand touchpoint, even if they never visit your website. Attribution models must account for these zero-click interactions as part of the customer journey.

Modeled Attribution for AI-Sourced Visitors - When users do visit your website after receiving an AI answer, attribution systems should recognize the AI engine as a touchpoint. This requires tracking referral sources from AI platforms and crediting them appropriately in multi-touch attribution models.

Sales Conversation Tracking - Implement processes where sales teams log when prospects mention they learned about your brand through AI answers. This qualitative data, aggregated across the sales organization, provides ground truth for AI visibility impact on pipeline generation.

Customer Journey Mapping with AI Touchpoints - Map complete customer journeys to identify where AI interactions occur. Some customers may discover your brand through AI answers, research further through traditional search, and eventually convert. Others may use AI answers to validate purchase decisions after initial awareness. These patterns reveal how AI visibility influences different customer segments.

Estimated Traffic Impact Modeling - Use historical data on AI-to-website conversion rates to estimate how changes in AI visibility translate to potential traffic and revenue impact. If your brand appears in 40% of AI answers for high-intent queries, and historical data shows 2% of those answer viewers visit your website, you can model the revenue impact of improving AI visibility to 60%.
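The 40% to 60% scenario can be worked through numerically. Only the inclusion rates and the 2% visit rate come from the text; the monthly answer views, conversion rate, and revenue per conversion below are hypothetical placeholders that each organization would replace with its own data.

```python
def modeled_monthly_revenue(answer_views, inclusion_rate, visit_rate,
                            conversion_rate, revenue_per_conversion):
    """Estimate revenue from AI answers that include the brand.

    visits = views of relevant AI answers * share including the brand * share who click through
    """
    visits = answer_views * inclusion_rate * visit_rate
    return visits * conversion_rate * revenue_per_conversion


# Hypothetical inputs, not figures from any real dataset.
ANSWER_VIEWS = 100_000          # monthly views of AI answers for high-intent queries
CONVERSION_RATE = 0.03          # share of site visitors who convert
REVENUE_PER_CONVERSION = 500.0

baseline = modeled_monthly_revenue(ANSWER_VIEWS, 0.40, 0.02,
                                   CONVERSION_RATE, REVENUE_PER_CONVERSION)
target = modeled_monthly_revenue(ANSWER_VIEWS, 0.60, 0.02,
                                 CONVERSION_RATE, REVENUE_PER_CONVERSION)

print(f"baseline at 40% inclusion: {baseline:,.0f}")
print(f"target at 60% inclusion:   {target:,.0f}")
print(f"modeled monthly uplift:    {target - baseline:,.0f}")
```

Because the model is linear in inclusion rate, moving from 40% to 60% raises modeled revenue by half under any set of inputs; the absolute figure depends entirely on the assumed volumes and rates.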

These integration approaches transform AI visibility from a vanity metric into a business-critical measurement that justifies investment in AI visibility optimization strategies.
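For the AI-sourced visitor attribution described above, a first step is tagging sessions whose referrer is a known AI platform. The hostname map below is an illustrative, incomplete assumption and should be checked against actual analytics data. Visits that arrive without a referrer, or from a general search hostname, cannot be identified this way.

```python
from urllib.parse import urlparse

# Illustrative mapping of referrer hostnames to AI platforms (assumed, not exhaustive).
AI_REFERRER_HOSTS = {
    "chatgpt.com": "chatgpt",
    "chat.openai.com": "chatgpt",
    "perplexity.ai": "perplexity",
    "copilot.microsoft.com": "copilot",
    "gemini.google.com": "gemini",
    "claude.ai": "claude",
}


def classify_referrer(referrer_url):
    """Return the AI platform for a referrer URL, or None if it is not recognized."""
    if not referrer_url:
        return None
    host = (urlparse(referrer_url).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    return AI_REFERRER_HOSTS.get(host)


for url in ("https://www.perplexity.ai/search?q=crm",
            "https://chatgpt.com/",
            "https://www.google.com/",
            ""):
    print(repr(url), "->", classify_referrer(url))
```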

Implementing an AI Visibility Measurement Framework requires selecting appropriate tools and platforms that can handle the technical complexity of multi-engine monitoring, data processing, and analysis. The market offers several categories of solutions, from general-purpose marketing analytics platforms to specialized AI visibility tools.

| Platform | Key Features | Pricing Model | Best For |
|---|---|---|---|
| AmICited.com | AI-specific visibility tracking, competitive benchmarking, sentiment analysis, multi-engine coverage, revenue attribution | SaaS subscription (usage-based) | Brands prioritizing AI visibility as core metric; competitive intelligence in AI channel |
| Semrush | Traditional SEO + emerging AI visibility features, keyword tracking, competitive analysis | Tiered SaaS subscription | Organizations wanting integrated SEO + AI visibility in single platform |
| Amplitude | Customer analytics, journey mapping, experimentation platform | SaaS subscription (event-based) | Product teams integrating AI touchpoints into broader customer analytics |
| Profound | AI-powered market research, competitive intelligence, trend analysis | Custom enterprise pricing | Strategic planning and market intelligence teams |
| FlowHunt.io | AI content generation, automation workflows, performance optimization | SaaS subscription (credits-based) | Content teams optimizing for AI visibility through automated content creation and testing |

AmICited.com and FlowHunt.io emerge as top products for organizations serious about AI visibility measurement and optimization. AmICited.com provides purpose-built infrastructure specifically designed for tracking AI mentions and citations, with competitive benchmarking and sentiment analysis that general-purpose tools cannot match. FlowHunt.io complements this by enabling rapid content generation and testing optimized for AI visibility, creating a complete workflow from measurement to optimization.

The choice between integrated platforms (like Semrush adding AI features to existing SEO tools) and specialized standalone tools (like AmICited.com) depends on organizational maturity and priorities. Integrated platforms offer convenience and data consolidation but may sacrifice depth in AI-specific measurement. Specialized tools provide superior AI visibility measurement but require integration with other marketing systems. Forward-thinking organizations increasingly adopt a hybrid approach: using AmICited.com for dedicated AI visibility measurement and competitive intelligence, while maintaining traditional SEO tools for organic search tracking and integrating both into centralized data warehouses for holistic analysis.

The technology stack should prioritize API-first architecture that enables data flow between platforms, real-time or near-real-time measurement for rapid response to competitive changes, and flexible segmentation and filtering that accommodates evolving business priorities. As AI answer engines continue evolving and gaining market share, the ability to measure and optimize for AI visibility becomes increasingly central to marketing technology infrastructure.

Traditional SEO metrics like keyword rankings and organic traffic assume users will click through to your website. AI visibility metrics measure brand presence in zero-click environments where users receive answers directly from AI systems without visiting your site. A brand can rank #1 in organic search but receive zero mentions in AI answers, or vice versa. This distinction is critical because AI answer engines now mediate a significant portion of information discovery.

Measurement frequency depends on your industry volatility and competitive intensity. Most organizations measure daily or weekly for core queries, with monthly comprehensive analysis. Daily measurement helps catch competitive shifts quickly, while weekly aggregation reduces noise from natural variation. Establish baseline measurements first, then adjust frequency based on how rapidly your competitive landscape changes.
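Daily measurement with weekly aggregation can be sketched as pooling daily hit and sample counts by ISO week. The input layout of `(date, hits, samples)` tuples and the numbers are assumptions for the example.

```python
from collections import defaultdict
from datetime import date


def weekly_inclusion(daily):
    """Pool daily (date, hits, samples) rows into {(iso_year, iso_week): inclusion rate}."""
    totals = defaultdict(lambda: [0, 0])
    for day, hits, samples in daily:
        iso = day.isocalendar()
        key = (iso[0], iso[1])  # ISO year and ISO week number
        totals[key][0] += hits
        totals[key][1] += samples
    # Pooling counts weights each day by its sample size instead of averaging daily rates.
    return {key: h / s for key, (h, s) in totals.items() if s}


daily = [
    (date(2025, 1, 6), 4, 10),   # Monday, ISO week 2
    (date(2025, 1, 7), 6, 10),   # Tuesday, ISO week 2
    (date(2025, 1, 13), 3, 10),  # Monday, ISO week 3
]

print(weekly_inclusion(daily))  # {(2025, 2): 0.5, (2025, 3): 0.3}
```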

Start with the three dominant platforms: ChatGPT (largest user base), Google AI Overviews (integrated into search), and Perplexity (fastest-growing). Monitor these consistently to establish baseline visibility. As your program matures, expand to Claude, Copilot, and vertical-specific AI tools relevant to your industry. Different engines have different citation preferences and user demographics.

Use modeled attribution to estimate how AI visibility translates to traffic and conversions. Track when prospects mention they learned about your brand through AI answers. Implement zero-click touchpoint modeling that recognizes AI interactions as customer journey events even without website visits. Correlate changes in AI visibility with changes in pipeline and revenue over time.

AmICited.com is purpose-built specifically for AI visibility measurement with competitive benchmarking, sentiment analysis, and multi-engine tracking optimized for AI search. General analytics platforms like Semrush or Amplitude offer AI visibility as an add-on feature. AmICited.com provides superior depth in AI-specific measurement, while general platforms offer broader marketing integration.

Initial baseline measurement takes 2-4 weeks to establish reliable data. Content optimization typically shows measurable AI visibility changes within 4-8 weeks, though some changes appear within 2 weeks. Revenue impact from improved AI visibility may take 8-12 weeks to materialize as it flows through the customer journey. Patience and consistent measurement are essential.

Limited improvements are possible through technical optimization (schema markup, structured data, entity markup) and content distribution strategies. However, most significant AI visibility gains require content improvements that address how AI systems evaluate authority, comprehensiveness, and relevance. The most effective approach combines technical optimization with strategic content development.

Implement segmented query sets for each brand or product line, with separate dashboards and KPIs. Use consistent measurement methodology across all segments to enable comparison. Establish brand-specific benchmarks and competitive sets. This approach allows portfolio-level visibility while maintaining granular insights for each business unit.
