What black hat tactics can get you penalized in AI search? Seeing some sketchy stuff out there

Community discussion on black hat tactics that hurt AI visibility. Real insights on AI poisoning, content manipulation, and how to protect your brand.

Competitive AI Sabotage refers to deliberate attempts by competitors to negatively influence a brand’s visibility, sentiment, or performance in AI-powered search results and chatbot responses. This includes data poisoning, fake reviews, misinformation campaigns, and manipulation of AI training data to damage brand reputation and reduce AI citations.

Competitive AI sabotage refers to deliberate attempts to manipulate, poison, or corrupt the data that AI search engines and language models use to generate results and citations. Unlike traditional negative SEO, which focuses on damaging a competitor’s website rankings through link schemes or technical exploits, AI sabotage targets the training data and sources that AI systems rely upon to provide answers. This distinction is critical because AI systems like Google AI Overviews, Perplexity, and GPTs build their answers from training data and from the web sources they retrieve and cite, making them vulnerable to coordinated misinformation campaigns. The real-world impact is substantial: with 60% of searches ending without a click through to a website, competitors can now poison AI results to redirect traffic, damage brand reputation, or promote false information without ever touching traditional search rankings.

Competitive AI sabotage operates through several interconnected attack vectors that exploit how AI systems ingest and process information. Data poisoning is the primary technique, involving the injection of false, misleading, or malicious information into sources that AI systems use for training, such as public databases, forums, review sites, and news aggregators. Attackers employ label flipping (altering the labels or metadata attached to training examples so that content is misclassified), data injection (adding fake entries to datasets), and backdoor attacks (embedding hidden triggers in training data). Competitors also launch coordinated fake review campaigns to flood platforms with fabricated testimonials, while spreading misinformation and deepfakes through social media, blogs, and AI-indexed content. The vulnerability lies in the fact that AI systems often trust the volume and consistency of information rather than verifying its authenticity. Here’s how different sabotage methods compare:

| Method | How It Works | AI Impact | Detection Difficulty |
|---|---|---|---|
| Data Poisoning | Injecting false information into training datasets | AI cites poisoned sources directly in results | High - requires data analysis |
| Fake Reviews | Coordinated review bombing on platforms | Skews AI sentiment analysis and recommendations | Medium - pattern analysis can help |
| Misinformation Campaigns | Spreading false claims across indexed websites | AI amplifies false narratives in search results | High - requires fact-checking |
| Deepfakes | Creating synthetic media of executives or products | Damages brand credibility when cited by AI | Very High - requires verification |
| Directory Hijacking | Claiming competitor’s business listings | AI pulls false information from hijacked directories | Medium - verification checks help |
| Fake News Articles | Publishing fabricated stories on news-like sites | AI treats as authoritative sources | High - requires source verification |
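
To make the point about volume-based trust concrete, here is a toy sketch of a naive aggregator that simply repeats the most common claim it has seen. The function name `majority_claim` and the sample claims are hypothetical, and real AI systems are far more complex than a frequency count; the sketch only illustrates why injecting many consistent fake entries can outweigh a smaller set of genuine ones.

```python
from collections import Counter


def majority_claim(claims):
    """Return the most frequent claim, mimicking a naive volume-based aggregator."""
    counts = Counter(claims)
    claim, _ = counts.most_common(1)[0]
    return claim


# Hypothetical corpus: five independent sources make the same accurate claim.
genuine = ["product is safe"] * 5

# Data injection: six near-identical false entries are added to the corpus.
injected = ["product was recalled"] * 6

before = majority_claim(genuine)
after = majority_claim(genuine + injected)

print("before injection:", before)
print("after injection:", after)
```

In this toy setup the answer flips as soon as the injected entries outnumber the genuine ones, which is why publishing consistent, accurate information across many indexed sources is part of the defense described later.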

AI systems are fundamentally more vulnerable to sabotage than traditional search engines because they absorb whatever their training data contains without independently verifying it. While Google’s algorithm evaluates link authority and domain reputation, AI models simply learn patterns from whatever data they’re trained on, which leaves them susceptible to coordinated attacks. Research shows that as few as 250 malicious documents can poison an AI model, a dramatically lower barrier to entry than traditional SEO attacks face. The problem is compounded by the fact that AI systems cite sources directly, meaning poisoned data doesn’t just influence results; it becomes the authoritative answer users see. Detecting poisoned data is far harder than identifying a bad backlink because the manipulation happens at the training level, often invisible to external audits. With AI search traffic increasing 527% in just five months (January-May 2025) and 79% of Americans trusting AI search engines, the incentive for competitors to sabotage is higher than ever, while the security infrastructure to prevent it remains underdeveloped.

The most prominent example of AI sabotage occurred when Target fell victim to a coordinated misinformation campaign that falsely claimed the retailer was selling “Satanic-themed” children’s clothing. AI systems, trained on this fabricated narrative, began surfacing these false claims in search results and AI overviews, causing significant reputational damage before the company could respond. Email systems have experienced similar attacks through spam filter poisoning, where competitors inject malicious patterns into training data to cause legitimate emails to be flagged as spam. Competitor review bombing has become increasingly common, with businesses flooding rival companies’ listings with fake negative reviews that AI systems then aggregate into sentiment scores. Directory listing hijacking represents another vector, where attackers claim competitor business profiles on platforms like Google Business Profile (formerly Google My Business) or industry directories, then feed false information that AI systems index and cite. These cases demonstrate that AI sabotage isn’t theoretical. It is actively damaging businesses today, and the damage is amplified because AI search results appear authoritative and are often the first information users encounter.

Detecting competitive AI sabotage requires a multi-layered monitoring approach that tracks how your brand appears across AI search results and citation sources. The first step is continuous monitoring of AI search results for your brand, products, and key executives across platforms like Google AI Overviews, Perplexity, ChatGPT, and Claude. Sentiment tracking across indexed sources helps identify sudden shifts in how your brand is discussed, which is a red flag for coordinated misinformation campaigns. Fake review identification involves analyzing review patterns for statistical anomalies, such as sudden spikes in one-star ratings or reviews using identical language. Early detection is critical; research shows that the first 48 hours after a sabotage attack begins are crucial for containment and response. Monitoring should also track which sources AI systems are citing about your brand, allowing you to identify poisoned data sources before they cause widespread damage.
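
The review-pattern checks mentioned above (one-star spikes and identical wording) can be sketched in a few lines. This is a minimal illustration, not a production detector: the record layout (`day`, `stars`, `text`), the function names, and the thresholds `factor=3.0` and `min_count=3` are all assumptions chosen for the example, and real review data would need tuning and a longer baseline.

```python
import re
from collections import Counter
from datetime import date
from statistics import median


def one_star_spike_days(reviews, factor=3.0, min_count=3):
    """Flag days whose one-star count is well above the median daily count.

    The baseline only covers days that have at least one one-star review.
    """
    daily = Counter(r["day"] for r in reviews if r["stars"] == 1)
    if not daily:
        return []
    baseline = median(daily.values())
    return sorted(
        day for day, n in daily.items()
        if n >= min_count and n > factor * baseline
    )


def normalize(text):
    """Lowercase, drop punctuation, and collapse whitespace."""
    return " ".join(re.sub(r"[^a-z0-9\s]", "", text.lower()).split())


def repeated_texts(reviews, min_repeats=2):
    """Return normalized review texts that occur at least min_repeats times."""
    counts = Counter(normalize(r["text"]) for r in reviews)
    return {text for text, n in counts.items() if n >= min_repeats}


# Hypothetical sample: three quiet days, then a burst of near-identical reviews.
reviews = [
    {"day": date(2025, 5, 1), "stars": 1, "text": "Slow shipping."},
    {"day": date(2025, 5, 2), "stars": 5, "text": "Great service"},
    {"day": date(2025, 5, 2), "stars": 1, "text": "Box arrived dented"},
    {"day": date(2025, 5, 3), "stars": 1, "text": "Wrong size sent"},
]
reviews += [{"day": date(2025, 5, 4), "stars": 1, "text": "Terrible, avoid!"} for _ in range(4)]
reviews += [{"day": date(2025, 5, 4), "stars": 1, "text": "terrible  avoid"} for _ in range(2)]

spikes = one_star_spike_days(reviews)
repeated = repeated_texts(reviews)
print(spikes, repeated)
```

A flagged day or a repeated text is only a signal to investigate; a genuine product problem can also cause a burst of negative reviews.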

Building resilience against competitive AI sabotage requires a proactive, multi-faceted defense strategy that goes beyond traditional reputation management. Creating high-quality, authoritative content is your strongest defense: AI systems are trained to recognize and prioritize content from established, credible sources, making it harder for poisoned data to compete with legitimate information. Amplifying accurate information across multiple indexed platforms ensures that when AI systems search for information about your brand, they encounter consistent, truthful narratives from authoritative sources. Securing your brand presence means claiming and verifying all business listings, social media profiles, and industry directories to prevent hijacking attacks. Implementing robust monitoring systems that track your brand across AI search results in real time enables rapid response to emerging threats. Developing response protocols means outlining how your team will react to detected sabotage, including documentation, platform reporting, and legal action when appropriate. Building relationships with journalists, industry influencers, and trusted publications creates additional layers of credibility that AI systems recognize and prioritize.

Dedicated AI monitoring platforms have become essential infrastructure for brand protection in the age of AI search. These tools provide real-time alerts when your brand appears in AI search results, allowing you to track not just rankings but actual citations and the sources AI systems are using. AmICited.com stands out as a comprehensive solution specifically designed for monitoring how your brand, products, and executives are cited across AI search engines including Google AI Overviews, Perplexity, and GPTs. The platform tracks which sources AI systems are citing about your brand, enabling you to identify poisoned data sources and respond before they cause widespread damage. Integration with your broader brand safety strategy means you can correlate AI citation patterns with traditional search metrics, social media sentiment, and review platforms to get a complete picture of your competitive landscape. By combining AI monitoring with your existing reputation management tools, you create a unified defense system that catches sabotage attempts early and provides the data needed for rapid response.
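
As a rough illustration of tracking which sources AI systems cite, the sketch below compares the domains cited in a current set of AI answers against a previously recorded baseline and lists any newcomers. It does not use or represent any monitoring platform's API; the function names and the `example.*` URLs are hypothetical, and it assumes you have already collected the cited URLs by whatever means you use.

```python
from urllib.parse import urlparse


def cited_domains(urls):
    """Extract the set of hostnames from cited URLs, ignoring a leading 'www.'."""
    domains = set()
    for url in urls:
        host = urlparse(url).hostname or ""
        if host.startswith("www."):
            host = host[4:]
        if host:
            domains.add(host)
    return domains


def new_sources(baseline_urls, current_urls):
    """Return domains cited now that were absent from the baseline snapshot."""
    return sorted(cited_domains(current_urls) - cited_domains(baseline_urls))


# Hypothetical snapshots of URLs cited in AI answers about a brand.
baseline = [
    "https://www.example.com/about",
    "https://news.example.org/review",
]
current = [
    "https://example.com/pricing",
    "https://news.example.org/review",
    "https://unknown-blog.example.net/expose",
]

unfamiliar = new_sources(baseline, current)
print(unfamiliar)
```

A newly cited domain is not proof of poisoning, but it gives you a short list of sources to review by hand.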

As competitive AI sabotage becomes more prevalent, legal frameworks are evolving to address these new threats. DMCA takedown notices can be used to remove poisoned content from indexed sources, though the process is often slow and requires clear evidence of copyright infringement or impersonation. Defamation law provides recourse when sabotage involves false statements that damage your reputation, though proving damages in the AI context remains complex. Most major platforms now have reporting mechanisms specifically for AI-related abuse, including data poisoning, fake reviews, and misinformation campaigns, though enforcement varies significantly. Emerging regulations like the EU AI Act and proposed U.S. legislation are beginning to establish liability frameworks for AI systems that amplify false information, potentially creating new avenues for legal action. Companies should document all sabotage attempts thoroughly, as this evidence becomes critical for both legal proceedings and platform appeals. Consulting with legal counsel experienced in AI and digital reputation is increasingly important for developing response strategies that comply with evolving regulations.

The landscape of competitive AI sabotage will continue to evolve as both attackers and defenders develop more sophisticated techniques. AI-generated fake reviews using large language models will become increasingly difficult to distinguish from authentic user feedback, requiring more advanced detection systems. Blockchain verification and decentralized credibility systems are emerging as potential solutions to verify source authenticity before AI systems incorporate data into training sets. Platform providers are investing heavily in improved defenses, including better data validation, source verification, and poisoning detection algorithms, though these measures will always lag behind new attack vectors. Community-based verification systems where users and experts flag suspicious content may become standard practice, creating crowdsourced defense against sabotage. The competitive advantage will increasingly belong to companies that stay ahead of emerging threats through continuous monitoring, rapid response protocols, and investment in AI literacy across their teams. Organizations that treat AI monitoring as a core component of their brand protection strategy today will be best positioned to defend against tomorrow’s more sophisticated attacks.

Traditional negative SEO targets search engine rankings through link manipulation and content scraping. Competitive AI sabotage targets AI training data and model behavior, poisoning the sources AI systems cite in responses. AI systems are more vulnerable because they directly cite sources and trust training data more than traditional search algorithms.

Monitor how AI platforms describe your brand weekly using tools like AmICited.com. Look for sudden sentiment changes, false claims appearing in AI responses, coordinated negative reviews, or misinformation spreading across platforms. Early detection within the first 48 hours is critical for containment.

Document everything immediately with screenshots and timestamps. Report to affected platforms (OpenAI, Google, Anthropic). Amplify accurate information through your own channels. Contact legal counsel if defamation or financial harm occurred. Implement monitoring systems to catch future attacks early.
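
One way to keep the documentation step consistent is to store each captured AI response with a UTC timestamp and a content hash, so you can later show what was said and when. The sketch below is an assumption about how such a record might look; the field names and the `evidence_record` helper are hypothetical, and it complements screenshots rather than replacing them.

```python
import hashlib
import json
from datetime import datetime, timezone


def evidence_record(platform, prompt, response_text, captured_at=None):
    """Build a record of an AI response with a UTC timestamp and SHA-256 digest."""
    if captured_at is None:
        captured_at = datetime.now(timezone.utc)
    return {
        "platform": platform,
        "prompt": prompt,
        "response_text": response_text,
        "captured_at": captured_at.isoformat(),
        "sha256": hashlib.sha256(response_text.encode("utf-8")).hexdigest(),
    }


# Hypothetical capture with a fixed timestamp so the output is reproducible.
record = evidence_record(
    platform="ExampleAI",
    prompt="What is Example Corp known for?",
    response_text="Example Corp recalled all of its products.",
    captured_at=datetime(2025, 5, 1, 12, 0, tzinfo=timezone.utc),
)
print(json.dumps(record, indent=2))
```

Appending these records to a write-once log gives legal counsel and platform support a consistent trail to work from.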

Research shows attackers need only 250 malicious documents or 7-8% of a dataset to meaningfully poison AI models. This low barrier to entry makes AI systems particularly vulnerable compared to traditional search engines, which have more sophisticated spam detection mechanisms.
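
The two figures quoted above are on very different scales, which a quick calculation makes visible. The corpus sizes below are hypothetical and chosen only for illustration; the point is that a fixed count of 250 documents is a tiny share of any large dataset, far below a 7-8% share.

```python
def poisoned_fraction(poisoned_docs, corpus_docs):
    """Return the share of a corpus made up of poisoned documents."""
    if corpus_docs <= 0:
        raise ValueError("corpus_docs must be positive")
    return poisoned_docs / corpus_docs


# Hypothetical corpus sizes, for illustration only.
for corpus in (10_000, 1_000_000, 100_000_000):
    share = poisoned_fraction(250, corpus)
    print(f"{corpus:>11,} documents: 250 poisoned = {share:.6%} of the corpus")
```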

Current AI platforms have limited detection capabilities and mostly rely on user reports and periodic retraining with clean data. However, platforms are implementing better verification systems, anomaly detection, and access controls. The responsibility for early detection often falls on brands themselves.

Build a strong authentic reputation with high-quality content, monitor AI search results regularly, secure your brand presence across directories, implement real-time monitoring systems, and respond quickly to attacks. Prevention through reputation building is more effective than remediation after sabotage occurs.

AmICited.com monitors how AI platforms (ChatGPT, Perplexity, Google AI Overviews) reference your brand, tracking sentiment changes and citation patterns. Real-time alerts notify you of sudden shifts in how AI describes your brand, enabling rapid response to potential sabotage attempts.

You can pursue defamation claims over false statements, file DMCA takedown notices where the content infringes your copyright, report to platforms for terms of service violations, and potentially seek damages for financial harm. Documentation of the sabotage is critical. Consult with legal counsel specializing in digital rights and intellectual property.


Community discussion on black hat tactics that hurt AI visibility. Real insights on AI poisoning, content manipulation, and how to protect your brand.

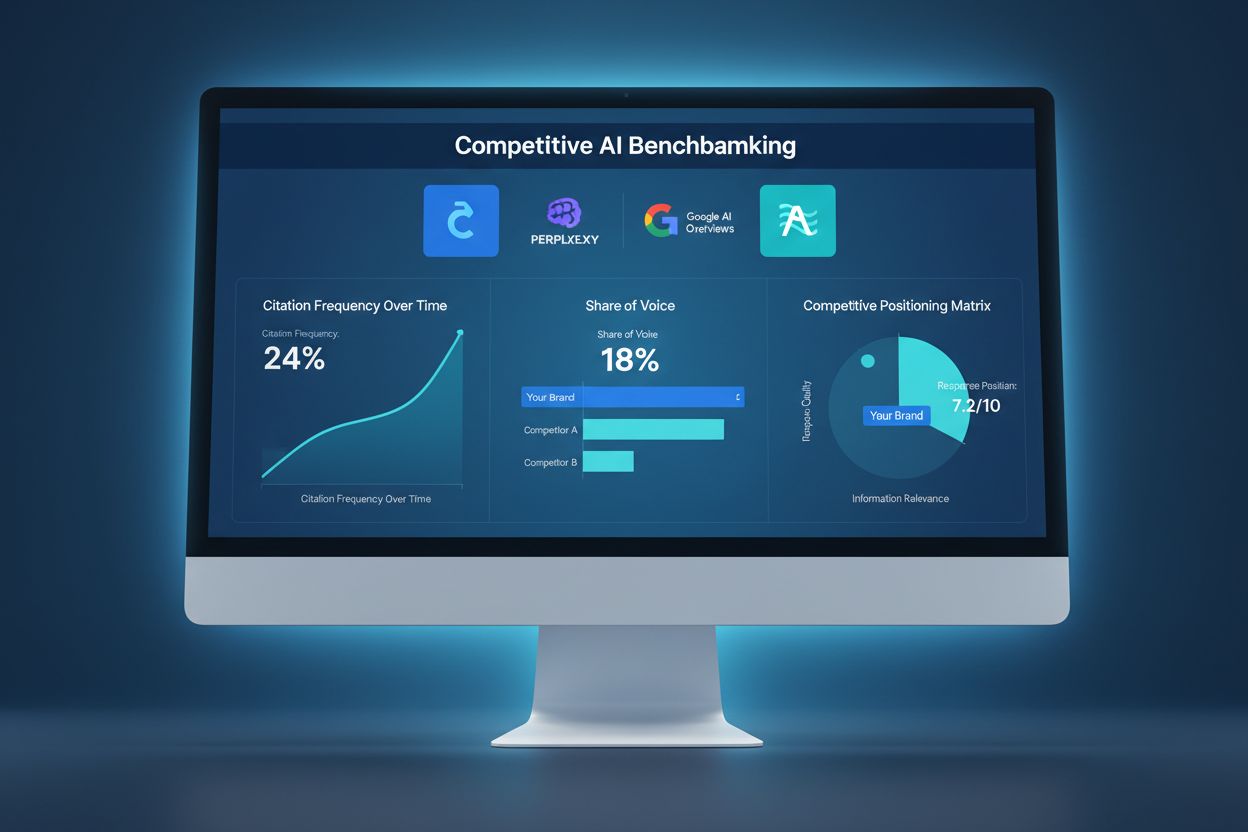

Learn how to benchmark your AI visibility against competitors. Track citations, share of voice, and competitive positioning across ChatGPT, Perplexity, and Goog...

Learn how to analyze competitor AI visibility across ChatGPT, Perplexity, and Google AI Overviews. Discover methodology, metrics, and tools for competitive inte...

Cookie Consent

We use cookies to enhance your browsing experience and analyze our traffic. See our privacy policy.