Content Authenticity for AI Search: Verification and Trust

Learn what content authenticity means for AI search engines, how AI systems verify sources, and why it matters for accurate AI-generated answers from ChatGPT, P...

Content authenticity is the ability to verify the origin, integrity, and history of digital content such as images, videos, audio, and documents to confirm it is genuine, unaltered, and traceable to a trustworthy source. It employs secure metadata, digital signatures, and open standards like C2PA to establish verifiable provenance records that document who created content, how it was modified, and whether AI tools were involved in its production.


In an era where generative AI can create hyper-realistic synthetic media and deepfakes proliferate across social platforms, content authenticity has become essential for maintaining trust in digital information. The concept encompasses verifying who created content, what tools were used in its production, how it has been modified, and whether artificial intelligence was involved at any stage of creation or editing. It relies on secure metadata, digital signatures, and open standards such as the one published by the Coalition for Content Provenance and Authenticity (C2PA) to establish verifiable provenance records that document the lifecycle of digital content from creation through distribution.

The challenge of verifying content authenticity is not new: forged documents, altered photographs, and fabricated evidence have existed for centuries. However, the digital age has fundamentally transformed the scale and speed at which inauthentic content can be created and distributed. According to research from AIMultiple, 75% of adults in the United Kingdom believe that digitally altered content contributes to the spread of misinformation, highlighting widespread public concern about content integrity. Social media platforms let information spread globally within minutes, while advances in generative AI have made it far easier to create convincing synthetic content that mimics reality. Research from Graphite.io found that in November 2024 the number of AI-generated articles published on the web surpassed the number of human-written articles for the first time, underscoring the urgency of distinguishing authentic human-created content from synthetic alternatives. This shift has prompted major technology companies, media organizations, and civil society groups to collaborate on standardized frameworks for embedding and verifying content provenance.

The emergence of generative AI tools like DALL-E, Midjourney, Sora, and Adobe Firefly has created an unprecedented challenge for content verification. These systems can generate photorealistic images, convincing video deepfakes, and synthesized audio that are nearly indistinguishable from authentic human-created content. Studies have found that humans identify deepfake images with only 62% accuracy, only modestly better than chance, and that for deepfake videos accuracy can drop as low as 23%. The consequences are severe: deepfake fraud incidents increased tenfold between 2022 and 2023, with 88% of identified deepfake cases occurring in the cryptocurrency sector and 8% in fintech. Beyond financial fraud, deepfakes have been weaponized for political disinformation, celebrity impersonation scams, and nonconsensual pornography. In response, content authenticity frameworks offer a technical countermeasure by embedding verifiable information directly into digital files, allowing users to inspect the provenance of content and make informed decisions about its trustworthiness. This is particularly critical for AI monitoring platforms like AmICited, which track how brands and content appear in AI-generated responses across systems like ChatGPT, Perplexity, and Google AI Overviews.

Content authenticity relies on several interconnected technologies to establish verifiable provenance. The primary mechanism is secure metadata combined with cryptographic digital signatures that create tamper-evident records of content history. Unlike traditional metadata, which can be easily modified or stripped from files, C2PA-compliant content credentials bind assertions about content creation and editing to a cryptographic hash of the content itself, and the resulting record is digitally signed. Any alteration to the content breaks the hash binding, and any alteration to the signed metadata invalidates the signature, so either kind of change is detected when the credentials are validated. The Content Authenticity Initiative (CAI), founded by Adobe in 2019, develops open-source tools that implement these standards across the content lifecycle. When a creator uses a C2PA-aware tool such as Adobe Photoshop or Lightroom with content credentials enabled, the software can record details such as the creator’s identity, the time of creation, the device or software used, and any subsequent edits or AI involvement. This information is cryptographically signed and embedded into the file, creating what the CAI calls content credentials: essentially a “nutrition label” for digital content that is designed to persist across editing iterations and sharing, as long as the tools and platforms involved preserve it.
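To make the hash-binding idea concrete, here is a minimal Python sketch, assuming a simplified manifest format rather than the actual C2PA data structures. It records a SHA-256 hash of the content, signs the claim, and shows that changing either the content or the claim is detected. The key, the field names (`content_sha256`, `ai_used`), and the use of HMAC in place of a certificate-backed public-key signature are all assumptions made for illustration.

```python
import hashlib
import hmac
import json

# Hypothetical demo key. Real C2PA signing uses a private key backed by an
# X.509 certificate; an HMAC stands in here so the sketch needs only the stdlib.
SIGNING_KEY = b"demo-signing-key"


def make_manifest(content: bytes, assertions: dict) -> dict:
    """Bind assertions to the content's SHA-256 hash, then sign the claim."""
    claim = {
        "assertions": assertions,
        "content_sha256": hashlib.sha256(content).hexdigest(),
    }
    payload = json.dumps(claim, sort_keys=True).encode()
    signature = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify(content: bytes, manifest: dict) -> str:
    """Return 'valid', 'metadata_tampered', or 'content_tampered'."""
    payload = json.dumps(manifest["claim"], sort_keys=True).encode()
    expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    # Any change to the signed claim (assertions or recorded hash) breaks the signature.
    if not hmac.compare_digest(expected, manifest["signature"]):
        return "metadata_tampered"
    # A valid signature over a hash that no longer matches the bytes
    # means the content itself changed after signing.
    if hashlib.sha256(content).hexdigest() != manifest["claim"]["content_sha256"]:
        return "content_tampered"
    return "valid"


image = b"\x89PNG...original pixel data"
manifest = make_manifest(image, {"creator": "Example Studio", "ai_used": False})

print(verify(image, manifest))            # valid
print(verify(image + b"edit", manifest))  # content_tampered

forged = json.loads(json.dumps(manifest))  # independent copy
forged["claim"]["assertions"]["ai_used"] = True
print(verify(image, forged))              # metadata_tampered
```

Using HMAC keeps the sketch self-contained, but it means the verifier must hold the same secret as the signer. Public-key signatures remove that requirement, which is why real provenance systems rely on them.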

| Method/Standard | Technology Type | Tamper-Evidence | Persistence | Adoption Level | Primary Use Case |
|---|---|---|---|---|---|
| C2PA Content Credentials | Cryptographic signatures + metadata | Yes, cryptographically signed | High across C2PA-aware platforms | Growing (major tech companies) | Comprehensive provenance tracking |
| Digital Watermarking | Visible or invisible identifiers | Partial (watermark persists but can be removed) | Moderate (survives compression/resizing) | Widespread | Copyright protection and ownership marking |
| Blockchain-Based Provenance | Distributed ledger recording | Yes, immutable ledger | Very high (permanent record) | Emerging (specialized use) | Long-term archival and legal evidence |
| Traditional Metadata (EXIF/XMP) | Embedded file information | No (easily modified) | Low (stripped by platforms) | Universal but unreliable | Basic file information only |
| Digital Signatures (PKI) | Public key infrastructure | Yes, cryptographically verified | Depends on implementation | Moderate (enterprise use) | Document authentication and verification |
| In-Sensor Cryptography | Hardware-level encryption | Yes, hardware-bound | Very high (hardware-embedded) | Emerging (Leica M11-P, Nikon Z6III) | Point-of-capture authenticity |

The C2PA represents the most comprehensive open standard for establishing content authenticity across digital media. Formed as a mutually governed standards development organization under the Linux Foundation’s Joint Development Foundation, the C2PA unifies efforts from the Adobe-led Content Authenticity Initiative and Project Origin (an alliance that includes Microsoft and the BBC), with contributions from major technology companies including Intel, NVIDIA, Arm, and Truepic. The C2PA specification defines how provenance data is cryptographically signed and embedded into media files in a standardized format that works across platforms and applications. The standard supports multiple file formats, including PNG, JPEG, MP4, WAV, and PDF, ensuring broad applicability across content types. When a creator applies C2PA content credentials to their work, the system generates a manifest that records assertions about the content’s origin, creation process, editing history, and any AI involvement. The manifest is then signed with the signer’s certificate, and validators check that certificate against a trust list: anyone can sign a manifest, but only credentials from signers on the list are treated as trusted, while others are flagged. The C2PA Conformance Program verifies that software, hardware, and services adhere to the specification, with certified implementations added to a public trust list. This governance structure supports interoperability and security across the ecosystem.
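The trust-list step can be sketched the same way. The following Python example is a simplified model, not the C2PA validation algorithm: signers are hypothetical IDs with demo HMAC keys, the trust list is a plain set, and the manifest only borrows C2PA-style labels such as `c2pa.actions`. It illustrates the key distinction that a signature can be cryptographically valid while the signer is still not trusted.

```python
import hashlib
import hmac
import json

# Hypothetical signer registry: each signer ID maps to a demo HMAC key.
# In C2PA, signers hold X.509 certificates and validators check the
# certificate chain; HMAC keeps this sketch stdlib-only.
SIGNER_KEYS = {
    "camera-vendor-A": b"key-A",
    "unknown-app": b"key-B",
}

# Hypothetical trust list: the signer IDs this validator recognizes.
TRUST_LIST = {"camera-vendor-A"}


def sign(signer_id: str, manifest: dict) -> dict:
    """Sign a manifest on behalf of a registered signer."""
    payload = json.dumps(manifest, sort_keys=True).encode()
    sig = hmac.new(SIGNER_KEYS[signer_id], payload, hashlib.sha256).hexdigest()
    return {"manifest": manifest, "signer": signer_id, "signature": sig}


def validate(signed: dict) -> str:
    """Return 'invalid_signature', 'valid_untrusted_signer', or 'valid_trusted'."""
    key = SIGNER_KEYS.get(signed["signer"])
    if key is None:
        return "invalid_signature"
    payload = json.dumps(signed["manifest"], sort_keys=True).encode()
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, signed["signature"]):
        return "invalid_signature"
    # A cryptographically valid signature is not enough on its own:
    # the signer must also appear on the trust list.
    if signed["signer"] not in TRUST_LIST:
        return "valid_untrusted_signer"
    return "valid_trusted"


manifest = {
    "assertions": [
        {"label": "c2pa.actions", "data": {"actions": [{"action": "c2pa.created"}]}},
    ],
}
print(validate(sign("camera-vendor-A", manifest)))  # valid_trusted
print(validate(sign("unknown-app", manifest)))      # valid_untrusted_signer
```

Keeping "untrusted signer" separate from "invalid signature" mirrors the point above: the trust list does not stop anyone from signing, it tells validators whose signatures to treat as trusted.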

Content authenticity implementation begins at the point of content creation and extends through the entire content lifecycle. Leading camera manufacturers have integrated C2PA support directly into hardware: the Leica M11-P became the world’s first camera with built-in content credentials, while Nikon’s Z6III embeds credentials for photojournalism applications. The Qualcomm Snapdragon 8 Gen 3 platform brings content credentials support to smartphones at the chip level, allowing devices built on it to embed authenticity data automatically when capturing photos and videos. For post-production workflows, Adobe Creative Cloud applications, including Photoshop, Lightroom, and Firefly, support C2PA-compliant content credentials, allowing creators to document their editing process and AI involvement. The ProofMode mobile app enables users to capture photos and videos with content credentials embedded at the source using digital signatures and secure metadata. However, implementation faces significant challenges: many social media platforms currently strip metadata during uploads, removing content credentials unless the platform is C2PA-aware. This creates a critical gap in which authentic content loses its provenance information as it moves through digital ecosystems. Organizations like Reuters, BBC, and AFP have begun implementing C2PA standards in their editorial workflows to verify photo and video provenance before publication, demonstrating the practical value of content authenticity in professional journalism.

Verifiable Attribution: Creators can attach verified identity information, social media accounts, and usage preferences directly to their content, ensuring proper attribution and preventing unauthorized use or misattribution.

Tamper Detection: Cryptographic signatures make any unauthorized modifications immediately apparent, allowing viewers to identify when content has been altered after initial creation or publication.

AI Transparency: Content credentials can explicitly indicate whether content was created, edited, or enhanced using AI tools, providing transparency about synthetic media involvement without labeling such content as inherently deceptive.

Misinformation Mitigation: By establishing verifiable provenance chains, content authenticity helps combat deepfakes, manipulated media, and false attribution that fuel misinformation campaigns and erode public trust.

Operational Efficiency: Organizations integrating content authenticity into digital asset management systems can automate provenance documentation, reducing manual data entry and minimizing errors in content tracking and archival.

Legal and Compliance Support: Verifiable content credentials provide evidence of content origin and integrity that can support legal proceedings, human rights documentation, and regulatory compliance requirements.

Brand Protection: Companies can embed content credentials into marketing materials and digital assets to protect intellectual property, detect unauthorized use, and maintain brand integrity across distribution channels.

Media Literacy Support: When users can inspect content credentials through browser extensions or verification tools, they gain insight into content provenance, encouraging critical evaluation and supporting informed decision-making about media consumption.
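The AI Transparency benefit depends on how AI involvement is recorded. In C2PA manifests it can be expressed through an actions assertion whose entries carry an IPTC `digitalSourceType` URI. The Python sketch below scans a simplified, hand-written manifest for source types that indicate trained-model output; the manifest layout is an assumption, and the set of URIs is illustrative rather than complete.

```python
# IPTC digital source type vocabulary (base URI plus term names).
IPTC = "http://cv.iptc.org/newscodes/digitalsourcetype/"

# Terms treated here as signalling generative-AI involvement (illustrative subset).
AI_SOURCE_TYPES = {
    IPTC + "trainedAlgorithmicMedia",
    IPTC + "compositeWithTrainedAlgorithmicMedia",
}


def ai_involvement(manifest: dict) -> list:
    """List the actions in a simplified manifest whose source type indicates AI."""
    flagged = []
    for assertion in manifest.get("assertions", []):
        if assertion.get("label") != "c2pa.actions":
            continue  # only the actions assertion records how content was made
        for action in assertion.get("data", {}).get("actions", []):
            if action.get("digitalSourceType") in AI_SOURCE_TYPES:
                flagged.append(action.get("action", "unknown"))
    return flagged


# Hypothetical manifest: a camera capture later edited with a generative tool.
manifest = {
    "assertions": [
        {
            "label": "c2pa.actions",
            "data": {
                "actions": [
                    {"action": "c2pa.created",
                     "digitalSourceType": IPTC + "digitalCapture"},
                    {"action": "c2pa.edited",
                     "digitalSourceType": IPTC + "compositeWithTrainedAlgorithmicMedia"},
                ]
            },
        }
    ]
}

print(ai_involvement(manifest))  # ['c2pa.edited']
```

A result like this reports AI involvement without judging it, in line with the idea that disclosure is not the same as labeling content deceptive.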

Despite the promise of content authenticity standards, significant obstacles impede widespread implementation. Metadata stripping by social media platforms remains a critical challenge: when users upload content to Facebook, Instagram, X (formerly Twitter), or TikTok, these platforms often re-encode files and remove embedded metadata, including C2PA content credentials, to optimize for their systems. Even if a creator embeds authentic provenance data, the information may be lost before the content reaches audiences. The adoption gap presents another barrier: not all software, hardware, and websites currently support C2PA standards, so credentials created with C2PA-aware tools lose their value once content passes through non-compliant platforms. Scalability concerns also loom large: processing and verifying content credentials at global scale requires coordinated technical infrastructure, standardized certificate management, and interoperable systems across thousands of organizations. Additionally, content authenticity provides data about provenance but cannot determine trustworthiness in isolation, so human interpretation remains essential. A content credential might show that content was created by a verified entity, but viewers must still evaluate context, source reputation, and intent. Finally, because content credentials are optional in many systems, bad actors can simply opt out of the ecosystem, creating a two-tier system in which authentic content is verifiable but inauthentic content avoids scrutiny by never passing through C2PA-aware tools. For the same reason, missing credentials cannot by themselves be treated as evidence that content is fake.
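Stripping and opt-out together mean a verifier needs at least three outcomes, not two. This hypothetical Python helper assumes some upstream validator has already reported whether a manifest was found and whether its signature and hash checks passed (`signature_ok` and `hash_ok` are illustrative parameters, not part of any real library); it only shows how to keep "no credentials" distinct from "invalid credentials".

```python
from enum import Enum
from typing import Optional


class Provenance(Enum):
    VERIFIED = "credentials present and valid"
    INVALID = "credentials present but failed validation"
    UNKNOWN = "no credentials found"


def classify(manifest: Optional[dict], signature_ok: bool = False,
             hash_ok: bool = False) -> Provenance:
    """Map a validator's findings to a three-way outcome.

    Missing credentials are UNKNOWN, never INVALID: a platform may have
    stripped them on upload, or the creator may never have added them.
    """
    if manifest is None:
        return Provenance.UNKNOWN
    if signature_ok and hash_ok:
        return Provenance.VERIFIED
    return Provenance.INVALID


print(classify(None).name)                             # UNKNOWN
print(classify({"assertions": []}, True, True).name)   # VERIFIED
print(classify({"assertions": []}, True, False).name)  # INVALID
```

Collapsing UNKNOWN into INVALID would penalize legitimate content that merely passed through a platform that strips metadata.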

Content authenticity has become particularly critical in journalism and human rights documentation, where verifying the provenance of visual evidence directly impacts public trust and legal accountability. Major news organizations including Reuters, BBC, The New York Times, and Agence France-Presse have begun implementing C2PA standards and content authenticity verification in their editorial workflows. Reuters conducted a proof-of-concept project using secure metadata and signed attribution to establish the authenticity of images in its reporting, demonstrating how content authenticity can strengthen journalistic credibility. During the 2022 Ukraine conflict, journalists relied on content authenticity analysis to verify user-generated videos from Telegram showing nuclear facility attacks, using file format forensics and metadata analysis to confirm that footage originated from mobile devices rather than being synthetically generated. Human rights organizations like WITNESS have collaborated with the Content Authenticity Initiative to develop camera-focused systems that allow activists and journalists to embed authenticity signals at the point of capture while protecting contributor privacy. These systems enable secure documentation of atrocities and human rights violations while maintaining the anonymity of those recording evidence, addressing the critical need to verify authenticity without exposing vulnerable sources. The Content Authenticity Initiative has also developed educational materials and media literacy resources to help journalists and the public interpret content credentials and understand provenance data, recognizing that technology alone cannot address misinformation without accompanying education.

The trajectory of content authenticity standards points toward increasing integration into digital infrastructure, similar to how HTTPS became the default protocol for website security. As generative AI continues to advance and synthetic media becomes more sophisticated, the need for verifiable content authenticity will intensify. Some industry observers predict that C2PA standards will become as foundational for content verification as XMP metadata has been for digital asset management. Government interest has also supported adoption: U.S. Executive Order 14110 on AI (October 2023) explicitly addressed watermarking and content provenance, signaling policy-level recognition of content authenticity, although that order was revoked in January 2025. Major technology companies including Intel, NVIDIA, Microsoft, and Adobe have significantly increased their involvement in the Content Authenticity Initiative over the past two years, suggesting that ecosystem-wide adoption may be approaching critical mass. The transition from optional to mandatory content credentials across platforms represents the next frontier: when social media platforms, search engines, and content distribution networks require C2PA compliance for content publication, the incentive structure shifts dramatically in favor of authenticity verification. This shift will likely occur gradually, beginning with high-stakes domains like news publishing and financial services before expanding to consumer-generated content. Additionally, integrating content authenticity with AI detection systems will create layered verification approaches in which content credentials indicate AI involvement while forensic analysis checks whether those claims hold up. For organizations like AmICited that monitor brand and content appearance in AI systems, content authenticity verification becomes increasingly important for distinguishing between authentic human-created content being cited by AI and synthetic or misattributed content being propagated through AI responses.

For AI monitoring platforms like AmICited, content authenticity verification represents a critical capability for tracking how human-created content appears in AI-generated responses. As AI systems like ChatGPT, Perplexity, Google AI Overviews, and Claude increasingly cite and reference digital content, the ability to verify whether cited content is authentic human-created material or synthetically generated becomes essential for brand protection and content integrity. Content authenticity standards enable these platforms to distinguish between legitimate citations of verified human-created content and potential misattribution or fabrication of sources. When a brand’s content appears in an AI response, content credentials can confirm the original creator, publication date, and any modifications, helping organizations understand how their authentic content is being represented in AI systems. Conversely, content authenticity verification can flag when AI systems cite content that lacks provenance documentation; because credentials are often missing or stripped even from legitimate content, this is a signal worth investigating rather than proof that a source is synthetic or unreliable. This capability becomes increasingly valuable as organizations seek to understand their digital footprint across AI systems and protect their brand reputation in an environment where AI-generated content and human-created content coexist. The integration of content authenticity verification into AI monitoring workflows represents the next evolution in tracking brand presence and ensuring that authentic human-created content maintains its integrity and proper attribution across AI platforms.


Content authenticity focuses on verifying the origin, history, and integrity of digital content through transparent provenance data, while digital rights management (DRM) controls access and usage permissions. Content authenticity does not enforce permissions or ownership but serves as a transparency mechanism that helps users understand content provenance. Both serve different purposes: authenticity builds trust through verification, while DRM protects intellectual property through access control.

C2PA (Coalition for Content Provenance and Authenticity) creates cryptographically signed, tamper-evident metadata that cannot be altered without detection, whereas traditional metadata can be easily modified or stripped from files. C2PA manifests bind assertions about content creation and editing to a cryptographic hash of the content itself, making any unauthorized changes immediately apparent. This cryptographic binding makes C2PA significantly stronger than conventional metadata for establishing authentic provenance chains.

Content authenticity is one component of addressing misinformation but cannot solve the problem entirely. It provides data points about content origin and history, but human interpretation remains essential for determining trustworthiness. Content authenticity works best when combined with media literacy education, critical evaluation skills, and platform-level adoption of verification standards. The technology establishes verifiable facts about content, but context and intent assessment still require human judgment.

Content credentials are designed to persist across the content lifecycle, from creation through editing to publishing. However, many social media platforms currently strip metadata during uploads, which can remove credential data unless the platform is C2PA-aware. As more platforms adopt C2PA standards, credentials will remain accessible and verifiable across different services. This ecosystem-wide adoption is critical for content credentials to function effectively at scale.

In-sensor cryptography embeds cryptographic authentication directly within camera hardware at the point of capture, creating hardware-level security for digital content. Keys held in secure hardware are used to sign the captured image or signal, so any tampering after capture is detectable at verification. When combined with software-level content credentials, in-sensor cryptography creates a comprehensive chain of authenticity from hardware capture through software processing and distribution.
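A chain of authenticity like this can be modeled as a sequence of signed steps, each recording the content hash and the hash of the step before it. The Python sketch below assumes hypothetical signer keys (`"camera"` stands in for the camera’s hardware key, `"editor"` for an editing application), uses HMAC in place of public-key signatures, and only loosely mirrors how C2PA manifests reference earlier "ingredients".

```python
import hashlib
import hmac
import json

# Hypothetical signer keys: "camera" stands in for a key held in the
# camera's secure hardware, "editor" for an editing application's key.
# Real systems use public-key signatures; HMAC keeps this stdlib-only.
KEYS = {"camera": b"sensor-secret", "editor": b"editor-secret"}


def _canonical(obj) -> bytes:
    return json.dumps(obj, sort_keys=True).encode()


def _sign(signer: str, claim: dict) -> str:
    return hmac.new(KEYS[signer], _canonical(claim), hashlib.sha256).hexdigest()


def add_step(chain: list, signer: str, content: bytes, action: str) -> list:
    """Append a signed step that records the content hash and links to its parent."""
    claim = {
        "action": action,
        "content_sha256": hashlib.sha256(content).hexdigest(),
        # Hash of the previous step, loosely analogous to a C2PA ingredient.
        "parent": hashlib.sha256(_canonical(chain[-1])).hexdigest() if chain else None,
        "signer": signer,
    }
    return chain + [{"claim": claim, "signature": _sign(signer, claim)}]


def verify_chain(chain: list, final_content: bytes) -> bool:
    """Check every signature and link, a hardware capture root, and the final bytes."""
    if not chain or chain[0]["claim"]["signer"] != "camera":
        return False  # require the chain to start at the (hypothetical) sensor key
    for i, step in enumerate(chain):
        claim = step["claim"]
        if claim["signer"] not in KEYS:
            return False
        if not hmac.compare_digest(_sign(claim["signer"], claim), step["signature"]):
            return False
        expected_parent = (
            hashlib.sha256(_canonical(chain[i - 1])).hexdigest() if i > 0 else None
        )
        if claim["parent"] != expected_parent:
            return False
    final_hash = hashlib.sha256(final_content).hexdigest()
    return chain[-1]["claim"]["content_sha256"] == final_hash


raw = b"raw sensor readout"
edited = b"cropped, color-corrected image"
chain = add_step([], "camera", raw, "c2pa.created")
chain = add_step(chain, "editor", edited, "c2pa.edited")

print(verify_chain(chain, edited))      # True
print(verify_chain(chain, raw))         # False: final bytes don't match last step
print(verify_chain(chain[1:], edited))  # False: no hardware capture step
```

Linking each step to the hash of its parent means an edit cannot be silently detached from the capture it claims to derive from.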

Organizations can implement content authenticity by adopting C2PA-aware tools in their content creation and editing software, integrating content credentials into their digital asset management systems, and training staff on provenance documentation. Starting with creation tools like Adobe Photoshop or Lightroom that support content credentials, organizations can embed authenticity data as part of existing workflows once the feature is enabled. Digital asset management systems that recognize and validate C2PA data can streamline workflows and reduce manual data entry.

Key challenges include metadata stripping by social platforms, limited adoption across software and hardware ecosystems, scalability concerns for global verification infrastructure, and the need for user education on interpreting provenance data. Additionally, content credentials are optional in many systems, allowing bad actors to opt out entirely. Widespread adoption requires coordinated effort from technology companies, platforms, and content creators to make authenticity verification mandatory rather than optional.

Start tracking how AI chatbots mention your brand across ChatGPT, Perplexity, and other platforms. Get actionable insights to improve your AI presence.

Learn what content authenticity means for AI search engines, how AI systems verify sources, and why it matters for accurate AI-generated answers from ChatGPT, P...

Learn proven methods to demonstrate content originality including digital timestamps, plagiarism detection tools, content credentials, and blockchain verificati...

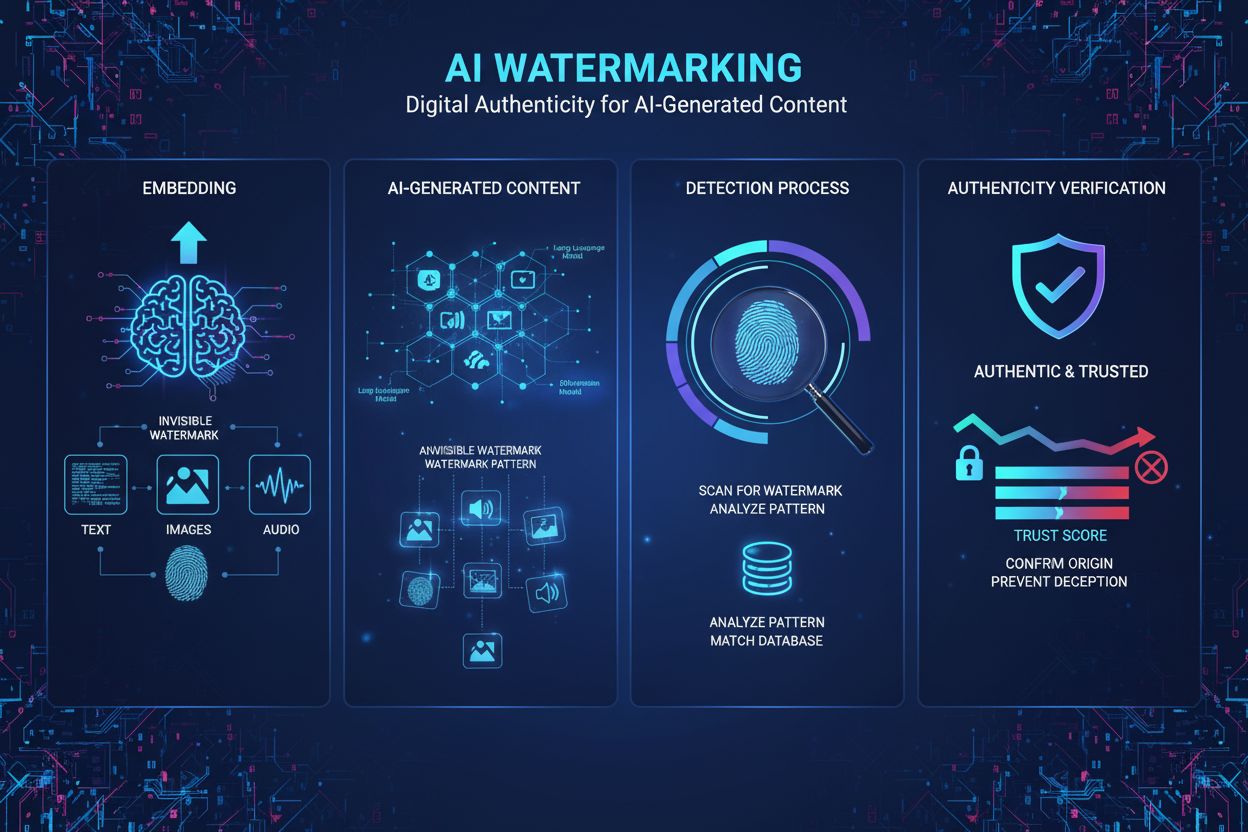

Watermarking AI content embeds digital markers in AI-generated material for authenticity verification. Learn about techniques, regulations, and detection method...

Cookie Consent

We use cookies to enhance your browsing experience and analyze our traffic. See our privacy policy.