Conversational Context Window

Learn what a conversational context window is, how it affects AI responses, and why it matters for effective AI interactions. Understand tokens, limitations, an...

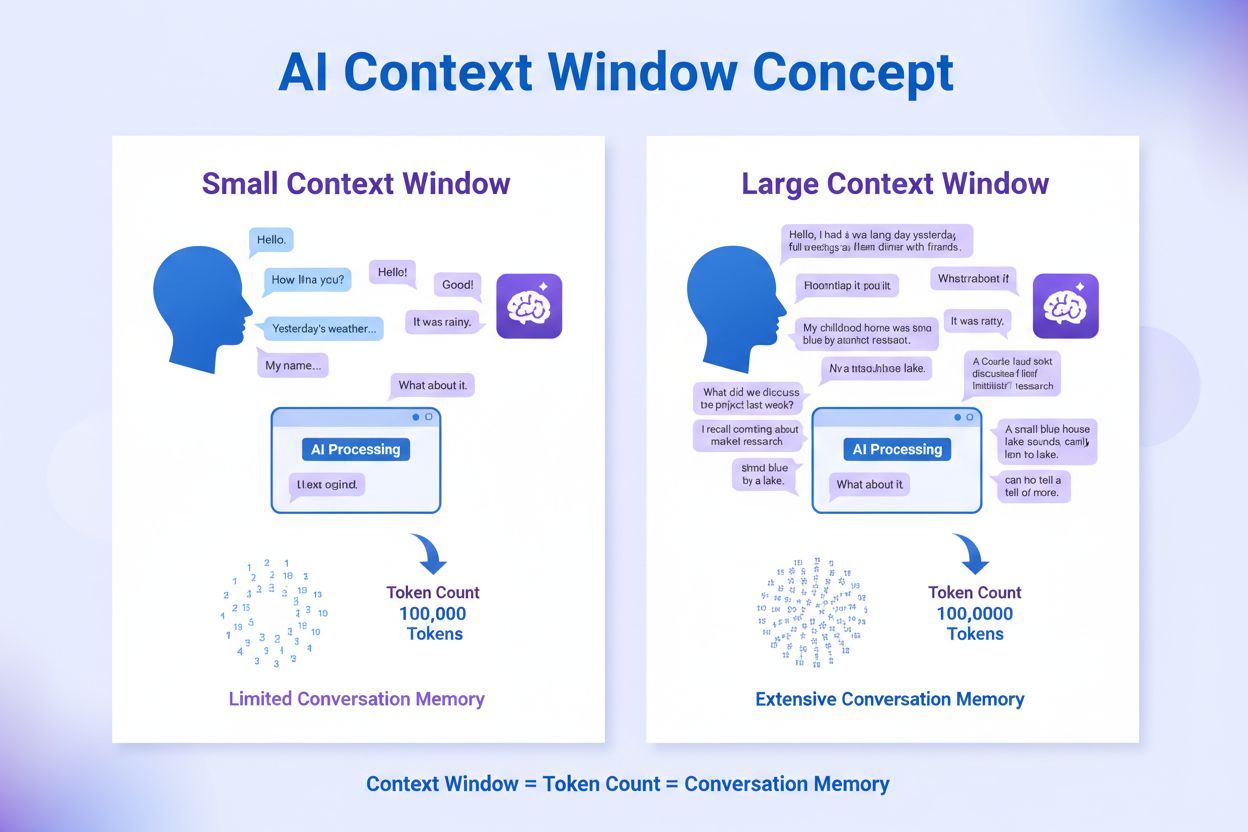

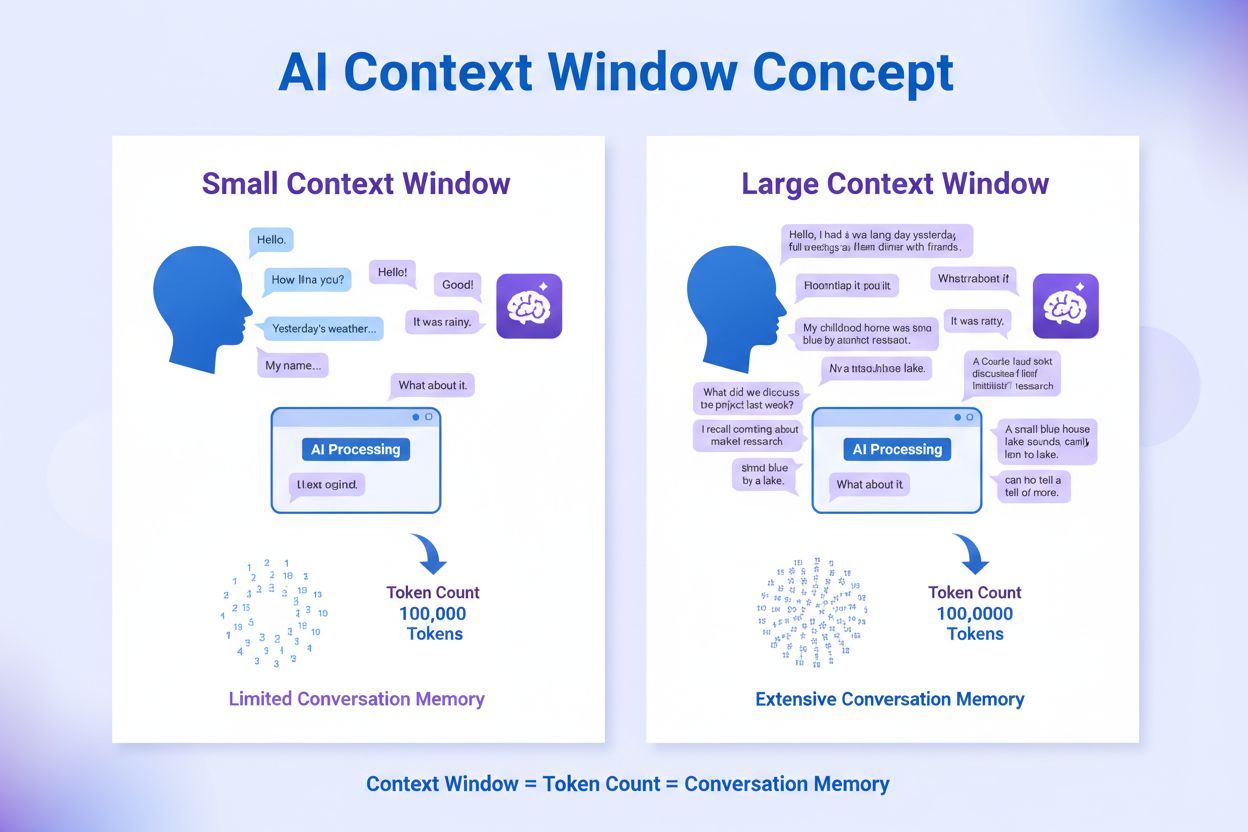

A context window is the maximum amount of text, measured in tokens, that a large language model can process and consider at one time when generating responses. It determines how much information an LLM can retain and reference within a single interaction, directly affecting the model’s ability to maintain coherence, accuracy, and relevance across longer inputs and conversations.

Think of the context window as the working memory of an AI system: it determines how much information from a conversation, document, or input the model can “remember” and reference at any single moment. The context window directly constrains the size of documents, code samples, and conversation histories that an LLM can process without truncation or summarization. For example, if a model has a 128,000-token context window and you provide a 150,000-token document, the model cannot process the entire document at once; the excess 22,000 tokens must be rejected, truncated, or handled with specialized techniques such as chunking or retrieval. Understanding context windows is fundamental to working with modern AI systems, as it affects everything from accuracy and coherence to computational costs and the practical applications for which a model is suitable.
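The 128,000 versus 150,000 token example can be checked with a few lines of Python. This is a minimal sketch: the function names are hypothetical, and the `reserved_output_tokens` parameter assumes the model counts its generated output against the same window, which is not true of every provider.

```python
def fits_in_window(input_tokens: int, window_tokens: int,
                   reserved_output_tokens: int = 0) -> bool:
    """Return True if the input (plus any reserved output budget) fits."""
    return input_tokens + reserved_output_tokens <= window_tokens


def overflow_tokens(input_tokens: int, window_tokens: int,
                    reserved_output_tokens: int = 0) -> int:
    """Number of tokens that exceed the window (0 if everything fits)."""
    return max(0, input_tokens + reserved_output_tokens - window_tokens)


# The example from the text: a 150,000-token document, a 128,000-token window.
print(fits_in_window(150_000, 128_000))   # False
print(overflow_tokens(150_000, 128_000))  # 22000
```

Reserving part of the window for the response makes the check stricter, which is usually the safer default when planning prompts.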

To fully understand context windows, one must first grasp how tokenization works. Tokens are the smallest units of text that language models process—they can represent individual characters, parts of words, whole words, or even short phrases. The relationship between words and tokens is not fixed; on average, one token represents approximately 0.75 words or 4 characters in English text. However, this ratio varies significantly depending on the language, the specific tokenizer used, and the content being processed. For instance, code and technical documentation often tokenize less efficiently than natural language prose, meaning they consume more tokens within the same context window. The tokenization process breaks down raw text into these manageable units, allowing models to learn patterns and relationships between linguistic elements. Different models and tokenizers may tokenize the same passage differently, which is why context window capacity can vary in practical terms even when two models claim the same token limit. This variability underscores why monitoring tools like AmICited must account for how different AI platforms tokenize content when tracking brand mentions and citations.
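The rule of thumb above (about 4 characters or 0.75 words per token in English) can be turned into a rough estimator. This is only a heuristic sketch with hypothetical function names; it does not call any real tokenizer, and actual counts will differ by model, language, and content type.

```python
import math

# Heuristic ratios quoted in the text for English prose (assumptions, not exact).
CHARS_PER_TOKEN = 4.0
WORDS_PER_TOKEN = 0.75


def estimate_tokens_from_chars(text: str) -> int:
    """Estimate the token count from the character count."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def estimate_tokens_from_words(text: str) -> int:
    """Estimate the token count from the whitespace-separated word count."""
    return math.ceil(len(text.split()) / WORDS_PER_TOKEN)


sample = "Context windows are measured in tokens, not words."
print(estimate_tokens_from_chars(sample))
print(estimate_tokens_from_words(sample))
```

The two estimates rarely agree exactly, which is a useful reminder that they are approximations. For budgeting against a specific model, the tokenizer published for that model is the reliable source.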

Context windows operate through the transformer architecture’s self-attention mechanism, which is the core computational engine of modern large language models. When a model processes text, it computes mathematical relationships between every token in the input sequence, calculating how relevant each token is to every other token. This self-attention mechanism enables the model to understand context, maintain coherence, and generate relevant responses. However, this process has a critical limitation: the computational complexity grows quadratically with the number of tokens. If you double the number of tokens in a context window, the model requires approximately 4 times more processing power to compute all the token relationships. This quadratic scaling is why context window expansion comes with significant computational costs. The model must store attention weights for every token pair, which demands substantial memory resources. Additionally, as the context window grows, inference (the process of generating responses) becomes progressively slower because the model must compute relationships between the new token being generated and every preceding token in the sequence. This is why real-time applications often face trade-offs between context window size and response latency.
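The quadratic relationship described above can be illustrated by counting token pairs. This sketch assumes plain full self-attention, where every token attends to every other token; real systems use optimizations that change the constants, so treat the numbers as relative, not as actual hardware cost.

```python
def attention_pairs(n_tokens: int) -> int:
    """Token pairs scored by full self-attention: n * n."""
    return n_tokens * n_tokens


def relative_cost(n_tokens: int, baseline_tokens: int) -> float:
    """How many times more pairs n_tokens needs than baseline_tokens."""
    return attention_pairs(n_tokens) / attention_pairs(baseline_tokens)


# Doubling the context from 4,000 to 8,000 tokens quadruples the pair count.
print(relative_cost(8_000, 4_000))    # 4.0
# Going from 4,000 to 128,000 tokens is 32x the length but 1024x the pairs.
print(relative_cost(128_000, 4_000))  # 1024.0
```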

| AI Model | Context Window Size | Max Output Tokens | Primary Use Case | Cost Efficiency |
|---|---|---|---|---|
| Google Gemini 1.5 Pro | 2,000,000 tokens | Varies | Enterprise document analysis, multimodal processing | High computational cost |
| Claude Sonnet 4 | 1,000,000 tokens | Up to 4,096 | Complex reasoning, codebase analysis | Moderate-to-high cost |
| Meta Llama 4 Maverick | 1,000,000 tokens | Up to 4,096 | Enterprise multimodal applications | Moderate cost |
| OpenAI GPT-5 | 400,000 tokens | 128,000 | Advanced reasoning, agentic workflows | High cost |
| Claude Opus 4.1 | 200,000 tokens | Up to 4,096 | High-precision coding, research | Moderate cost |
| OpenAI GPT-4o | 128,000 tokens | 16,384 | Vision-language tasks, code generation | Moderate cost |
| Mistral Large 2 | 128,000 tokens | Up to 32,000 | Professional coding, enterprise deployment | Lower cost |
| DeepSeek R1 & V3 | 128,000 tokens | Up to 32,000 | Mathematical reasoning, code generation | Lower cost |
| Original GPT-3.5 | 4,096 tokens | Up to 2,048 | Basic conversational tasks | Lowest cost |

The practical implications of context window size extend far beyond technical specifications: they directly affect business outcomes, operational efficiency, and cost structures. Organizations using AI for document analysis, legal review, or codebase comprehension benefit significantly from larger context windows because they can process entire documents without splitting them into smaller chunks. This reduces the need for complex preprocessing pipelines and improves accuracy by maintaining full document context. For example, a legal firm analyzing a 200-page contract can use Claude Sonnet 4’s 1-million-token window to review the entire document at once, whereas older models with 4,000-token windows would require splitting the contract into 50+ separate chunks and then synthesizing the results, a process prone to missing relationships between clauses that land in different chunks. However, this capability comes at a cost: larger context windows demand more computational resources, which translates to higher API costs for cloud-based services. OpenAI, Anthropic, and other providers typically charge based on token consumption, so processing a 100,000-token document costs roughly ten times as much in input tokens as processing a 10,000-token document at the same per-token rate. Organizations must therefore balance the benefits of comprehensive context against budget constraints and performance requirements.
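The chunking and cost trade-off can be made concrete with simple arithmetic. In this sketch the document size (200,000 tokens) and the price (3.00 per million input tokens) are placeholder assumptions chosen for illustration, not figures from any provider's price list; the function names are likewise hypothetical.

```python
import math


def chunks_needed(document_tokens: int, window_tokens: int,
                  reserved_tokens: int = 0) -> int:
    """Chunks required when each request can carry (window - reserved) tokens."""
    usable = window_tokens - reserved_tokens
    if usable <= 0:
        raise ValueError("reserved_tokens leaves no room for document text")
    return math.ceil(document_tokens / usable)


def input_cost(tokens: int, price_per_million_tokens: float) -> float:
    """Input cost under simple per-token pricing."""
    return tokens * price_per_million_tokens / 1_000_000


# Hypothetical 200,000-token contract:
print(chunks_needed(200_000, 4_000))      # 50 chunks with a 4,000-token window
print(chunks_needed(200_000, 1_000_000))  # 1 request with a 1M-token window

# Hypothetical price of 3.00 per million input tokens:
print(input_cost(100_000, 3.00))
print(input_cost(10_000, 3.00))
```

Reserving tokens in each chunk for instructions and the response increases the chunk count further, which is one reason small windows become impractical for long documents.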

Despite the apparent advantages of large context windows, research has revealed a significant limitation: models don’t robustly utilize information distributed throughout long contexts. A 2023 study published on arXiv found that LLMs perform best when relevant information appears at the beginning or end of the input sequence, but performance degrades substantially when the model must carefully consider information buried in the middle of long contexts. This phenomenon, known as the “lost in the middle” problem, suggests that simply expanding context window size doesn’t guarantee proportional improvements in model performance. The model may become “lazy” and rely on cognitive shortcuts, failing to thoroughly process all available information. This has profound implications for applications like AI brand monitoring and citation tracking. When AmICited monitors how AI systems like Perplexity, ChatGPT, and Claude reference brands across their responses, the position of brand mentions within the model’s context window affects whether those mentions are accurately captured and cited. If a brand mention appears in the middle of a long document, the model may overlook it or deprioritize it, leading to incomplete citation tracking. Researchers have developed benchmarks like Needle-in-a-Haystack (NIAH), RULER, and LongBench to measure how effectively models find and utilize relevant information within large passages, helping organizations understand real-world performance beyond theoretical context window limits.
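Needle-in-a-haystack style tests work by planting a known fact at a chosen depth in filler text and then asking the model to retrieve it. The sketch below only builds the test input; the function name, the filler sentences, and the needle are hypothetical, and sending the prompt to a model and scoring the answer are left out.

```python
def build_haystack(filler_sentences: list[str], needle: str, depth: float) -> str:
    """Insert `needle` into the filler at a relative depth.

    depth = 0.0 places it at the start, 1.0 at the end, 0.5 in the middle.
    """
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0.0 and 1.0")
    position = int(depth * len(filler_sentences))
    sentences = filler_sentences[:position] + [needle] + filler_sentences[position:]
    return " ".join(sentences)


filler = [f"Filler sentence number {i}." for i in range(10)]
needle = "The access code is 4721."

# Build one test input per depth; a real benchmark would sweep many depths
# and context lengths, then check whether the model recalls the needle.
for depth in (0.0, 0.5, 1.0):
    prompt = build_haystack(filler, needle, depth)
    print(depth, prompt.index(needle))
```

Comparing recall across depths is what exposes the "lost in the middle" pattern: accuracy at depth 0.5 is typically the value to watch.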

One of the most significant benefits of larger context windows is their potential to reduce AI hallucinations—instances where models generate false or fabricated information. When a model has access to more relevant context, it can ground its responses in actual information rather than relying on statistical patterns that may lead to false outputs. Research from IBM and other institutions shows that increasing context window size generally translates to increased accuracy, fewer hallucinations, and more coherent model responses. However, this relationship is not linear, and context window expansion alone is insufficient to eliminate hallucinations entirely. The quality and relevance of information within the context window matter as much as the window’s size. Additionally, larger context windows introduce new security vulnerabilities: research from Anthropic demonstrated that increasing a model’s context length also increases its vulnerability to “jailbreaking” attacks and adversarial prompts. Attackers can embed malicious instructions deeper within long contexts, exploiting the model’s tendency to deprioritize middle-positioned information. For organizations monitoring AI citations and brand mentions, this means that larger context windows can improve accuracy in capturing brand references but may also introduce new risks if competitors or bad actors embed misleading information about your brand within long documents that AI systems process.

Different AI platforms implement context windows with varying strategies and trade-offs. ChatGPT’s GPT-4o model offers 128,000 tokens, balancing performance and cost for general-purpose tasks. Anthropic’s Claude Sonnet 4 recently expanded from 200,000 to 1,000,000 tokens, positioning it as a leader for enterprise document analysis. Google’s Gemini 1.5 Pro pushes the boundaries with 2 million tokens, enabling processing of entire codebases and extensive document collections. Perplexity, which specializes in search and information retrieval, leverages context windows to synthesize information from multiple sources when generating responses. Understanding these platform-specific implementations is crucial for AI monitoring and brand tracking because each platform’s context window size and attention mechanisms affect how thoroughly they can reference your brand across their responses. A brand mention that appears in a document processed by Gemini’s 2-million-token window may be captured and cited, whereas the same mention might be missed by a model with a smaller context window. Additionally, different platforms use different tokenizers, meaning the same document consumes different numbers of tokens on different platforms. This variability means that AmICited must account for platform-specific context window behaviors when tracking brand citations and monitoring AI responses across multiple systems.

The AI research community has developed several techniques to optimize context window efficiency and extend effective context length beyond theoretical limits. Rotary Position Embedding (RoPE) and similar position encoding methods improve how models handle tokens at large distances from one another, enhancing performance on long-context tasks. Retrieval Augmented Generation (RAG) systems extend functional context by dynamically retrieving relevant information from external databases, allowing models to effectively work with vastly larger information sets than their context windows would normally permit. Sparse attention mechanisms reduce computational complexity by limiting attention to the most relevant tokens rather than computing relationships between all token pairs. Adaptive context windows adjust the processing window size based on input length, reducing costs when smaller contexts suffice. Looking forward, the trajectory of context window development suggests continued expansion, though with diminishing returns. Magic.dev’s LTM-2-Mini already offers 100 million tokens, and Meta’s Llama 4 Scout supports 10 million tokens on a single GPU. However, industry experts debate whether such massive context windows represent practical necessity or technological excess. The real frontier may lie not in raw context window size but in improving how models utilize available context and in developing more efficient architectures that reduce the computational overhead of long-context processing.
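The idea behind Retrieval Augmented Generation can be sketched in a few lines: score stored passages against the question, keep the best few, and place only those in the prompt. This example uses naive keyword overlap as a stand-in for the embedding-based similarity search that production systems typically use; all names and the sample passages are hypothetical.

```python
def overlap_score(query: str, passage: str) -> int:
    """Count distinct lowercase words shared by the query and the passage."""
    return len(set(query.lower().split()) & set(passage.lower().split()))


def retrieve(query: str, passages: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k passages by keyword overlap (ties keep input order)."""
    ranked = sorted(passages, key=lambda p: overlap_score(query, p), reverse=True)
    return ranked[:top_k]


def build_prompt(query: str, passages: list[str], top_k: int = 2) -> str:
    """Assemble a prompt holding only the retrieved passages, not the corpus."""
    context = "\n".join(retrieve(query, passages, top_k))
    return f"Context:\n{context}\n\nQuestion: {query}"


corpus = [
    "The context window limits how many tokens a model reads.",
    "Bananas are rich in potassium.",
    "Sparse attention reduces the cost of long context processing.",
]
print(build_prompt("context window tokens", corpus, top_k=1))
```

Because only the selected passages enter the prompt, the corpus can be far larger than the context window; the trade-off is that answer quality now depends on the retrieval step finding the right passages.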

The evolution of context windows has profound implications for AI citation monitoring and brand tracking strategies. As context windows expand, AI systems can process more comprehensive information about your brand, competitors, and industry landscape in single interactions. This means that brand mentions, product descriptions, and competitive positioning information can be considered simultaneously by AI models, potentially leading to more accurate and contextually appropriate citations. However, it also means that outdated or incorrect information about your brand can be processed alongside current information, potentially leading to confused or inaccurate AI responses. Organizations using platforms like AmICited must adapt their monitoring strategies to account for these evolving context window capabilities. Tracking how different AI platforms with different context window sizes reference your brand reveals important patterns: some platforms may cite your brand more frequently because their larger context windows allow them to process more of your content, while others may miss mentions because their smaller windows exclude relevant information. Additionally, as context windows expand, the importance of content positioning and information architecture increases. Brands should consider how their content is structured and positioned within documents that AI systems process, recognizing that information buried in the middle of long documents may be deprioritized by models exhibiting the “lost in the middle” phenomenon. This strategic awareness transforms context windows from a purely technical specification into a business-critical factor affecting brand visibility and citation accuracy across AI-powered search and response systems.

Tokens are the smallest units of text that an LLM processes, where one token typically represents about 0.75 words or 4 characters in English. A context window, by contrast, is the total number of tokens a model can process at once—essentially the container that holds all those tokens. If tokens are individual building blocks, the context window is the maximum size of the structure you can build with them at any given moment.

Larger context windows generally reduce hallucinations and improve accuracy because the model has more information to reference when generating responses. However, research shows that LLMs perform worse when relevant information is buried in the middle of long contexts—a phenomenon called the 'lost in the middle' problem. This means that while bigger windows help, the placement and organization of information within that window significantly impacts output quality.

The computational cost of a context window scales quadratically with token count due to the transformer architecture's self-attention mechanism. When you double the number of tokens, the model needs approximately 4 times more processing power to compute relationships between all token pairs. This quadratic growth in computational demand directly translates to higher memory requirements, slower inference speeds, and increased costs for cloud-based AI services.

As of 2025, Google's Gemini 1.5 Pro offers the largest commercial context window at 2 million tokens, followed by Claude Sonnet 4 at 1 million tokens and GPT-4o at 128,000 tokens. However, experimental models like Magic.dev's LTM-2-Mini push boundaries with 100 million tokens. Despite these massive windows, real-world usage shows that most practical applications effectively utilize only a fraction of available context.

Context window size directly impacts how much source material an AI model can reference when generating responses. For brand monitoring platforms like AmICited, understanding context windows is crucial because it determines whether an AI system can process entire documents, websites, or knowledge bases when deciding whether to cite or mention a brand. Larger context windows mean AI systems can consider more competitive information and brand references simultaneously.

Some models support context window extension through techniques like LongRoPE, which builds on rotary position embedding (RoPE), and other position encoding methods, though this often comes with performance trade-offs. Additionally, Retrieval Augmented Generation (RAG) systems can effectively extend functional context by dynamically pulling relevant information from external sources. However, these workarounds typically involve additional computational overhead and complexity.

Different languages tokenize with varying efficiency due to linguistic structure differences. For example, a 2024 study found that Telugu translations required over 7 times more tokens than their English equivalents despite having fewer characters. This happens because tokenizers are typically optimized for English and Latin-based languages, making non-Latin scripts less efficient and reducing the effective context window for multilingual applications.

The 'lost in the middle' problem refers to research findings showing that LLMs perform worse when relevant information is positioned in the middle of long contexts. Models perform best when important information appears at the beginning or end of the input. This suggests that despite having large context windows, models don't robustly utilize all available information equally, which has implications for document analysis and information retrieval tasks.
