AI-Friendly Formatting

Learn how AI-friendly formatting with tables, lists, and clear sections improves AI parsing accuracy and increases your content's visibility in AI Overviews, Ch...

Contextual bracketing is a content optimization technique that establishes clear boundaries around information to prevent AI misinterpretation and hallucination. It uses explicit delimiters and context markers to ensure AI models understand exactly where relevant information begins and ends, preventing generation of responses based on assumptions or fabricated details.

Contextual bracketing is a content optimization technique that establishes clear boundaries around information to prevent AI misinterpretation and hallucination. It uses explicit delimiters and context markers to ensure AI models understand exactly where relevant information begins and ends, preventing generation of responses based on assumptions or fabricated details.

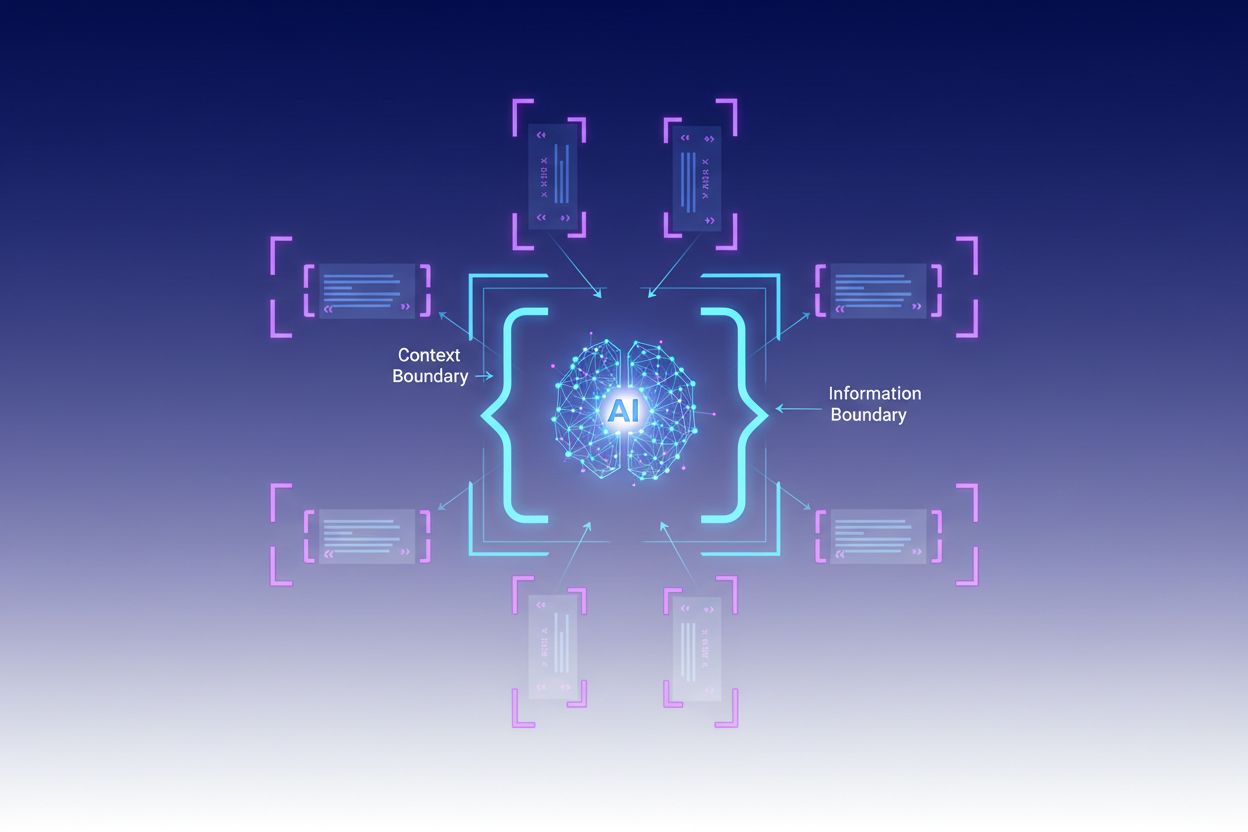

Contextual bracketing is a content optimization technique that establishes clear boundaries around information to prevent AI misinterpretation and hallucination. This method involves using explicit delimiters—such as XML tags, markdown headers, or special characters—to mark the beginning and end of specific information blocks, creating what experts call a “context boundary.” By structuring prompts and data with these clear markers, developers ensure that AI models understand exactly where relevant information begins and ends, preventing the model from generating responses based on assumptions or fabricated details. Contextual bracketing represents an evolution of traditional prompt engineering, extending into the broader discipline of context engineering, which focuses on optimizing all information provided to an LLM to achieve desired outcomes. The technique is particularly valuable in production environments where accuracy and consistency are critical, as it provides mathematical and structural guardrails that guide AI behavior without requiring complex conditional logic.

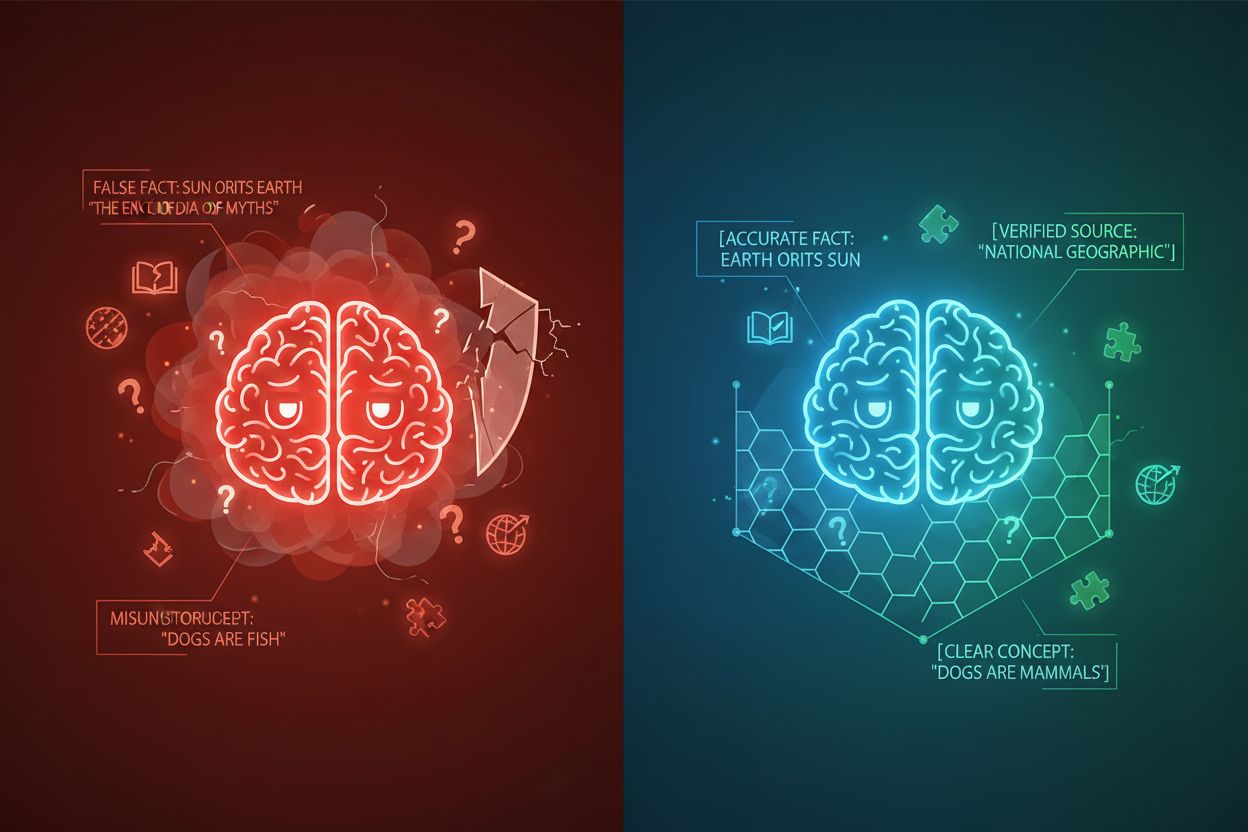

AI hallucination occurs when language models generate responses that are not rooted in factual information or the specific context provided, resulting in false facts, misleading statements, or references to non-existent sources. Research shows that chatbots make up facts approximately 27% of the time, with 46% of their texts containing factual errors, while ChatGPT’s journalism quotes were wrong 76% of the time. These hallucinations stem from multiple sources: models may learn patterns from biased or incomplete training data, misunderstand the relationship between tokens, or lack sufficient constraints that limit possible outputs. The consequences are severe across industries—in healthcare, hallucinations can lead to incorrect diagnoses and unnecessary medical interventions; in legal contexts, they can result in fabricated case citations (as seen in the Mata v. Avianca case where a lawyer faced sanctions for using ChatGPT’s fake legal citations); in business, they waste resources through flawed analysis and forecasting. The fundamental issue is that without clear context boundaries, AI models operate in an information vacuum where they’re more likely to “fill in the gaps” with plausible-sounding but inaccurate information, treating hallucination as a feature rather than a bug.

| Hallucination Type | Frequency | Impact | Example |

|---|---|---|---|

| Factual Inaccuracies | 27-46% | Misinformation spread | False product features |

| Source Fabrication | 76% (quotes) | Loss of credibility | Non-existent citations |

| Misunderstood Concepts | Variable | Incorrect analysis | Wrong legal precedents |

| Biased Patterns | Ongoing | Discriminatory outputs | Stereotyped responses |

The effectiveness of contextual bracketing rests on five fundamental principles:

Delimiter Usage: Employ consistent, unambiguous markers (XML tags like <context>, markdown headers, or special characters) to clearly delineate information blocks and prevent the model from confusing boundaries between different data sources or instruction types.

Context Window Management: Strategically allocate tokens between system instructions, user inputs, and retrieved knowledge, ensuring that the most relevant information occupies the model’s limited attention budget while less critical details are filtered or retrieved just-in-time.

Information Hierarchy: Establish clear priority levels for different types of information, signaling to the model which data should be treated as authoritative sources versus supplementary context, preventing equal weighting of primary and secondary information.

Boundary Definition: Explicitly state what information the model should consider and what it should ignore, creating hard stops that prevent the model from extrapolating beyond provided data or making assumptions about unstated information.

Scope Markers: Use structural elements to indicate the scope of instructions, examples, and data, making it clear whether guidance applies globally, to specific sections, or only to particular types of queries.

Implementing contextual bracketing requires careful attention to how information is structured and presented to AI models. Structured input formatting using JSON or XML schemas provides explicit field definitions that guide model behavior—for example, wrapping user queries in <user_query> tags and expected outputs in <expected_output> tags creates unambiguous boundaries. System prompts should be organized into distinct sections using markdown headers or XML tags: <background_information>, <instructions>, <tool_guidance>, and <output_description> each serve specific purposes and help the model understand the hierarchy of information. Few-shot examples should include bracketed context showing exactly how the model should structure its responses, with clear delimiters around inputs and outputs. Tool definitions benefit from explicit parameter descriptions and usage constraints, preventing the model from misusing tools or applying them outside their intended scope. Retrieval-Augmented Generation (RAG) systems can implement contextual bracketing by wrapping retrieved documents in source markers (<source>document_name</source>) and using grounding scores to verify that generated responses stay within the boundaries of retrieved information. For instance, CustomGPT’s context boundary feature works by training models exclusively on uploaded datasets, ensuring responses never venture beyond the provided knowledge base—a practical implementation of contextual bracketing at the architectural level.

While contextual bracketing shares similarities with related techniques, it occupies a distinct position in the AI engineering landscape. Basic prompt engineering focuses primarily on crafting effective instructions and examples, but lacks the systematic approach to managing all context elements that contextual bracketing provides. Context engineering, the broader discipline, encompasses contextual bracketing as one component among many—it includes prompt optimization, tool design, memory management, and dynamic context retrieval, making it a superset of contextual bracketing’s more focused approach. Simple instruction-following relies on the model’s ability to understand natural language directives without explicit structural boundaries, which often fails when instructions are complex or when the model encounters ambiguous situations. Guardrails and validation systems operate at the output level, checking responses after generation, whereas contextual bracketing works at the input level to prevent hallucinations before they occur. The key distinction is that contextual bracketing is preventative and structural—it shapes the information landscape the model operates within—rather than corrective or reactive, making it more efficient and reliable for maintaining accuracy in production systems.

Contextual bracketing delivers measurable value across diverse applications. Customer service chatbots use context boundaries to restrict responses to company-approved knowledge bases, preventing agents from inventing product features or making unauthorized commitments. Legal document analysis systems bracket relevant case law, statutes, and precedents, ensuring the AI only references verified sources and doesn’t fabricate legal citations. Medical AI systems implement strict context boundaries around clinical guidelines, patient data, and approved treatment protocols, preventing dangerous hallucinations that could harm patients. Content generation platforms use contextual bracketing to enforce brand guidelines, tone requirements, and factual constraints, ensuring generated content aligns with organizational standards. Research and analysis tools bracket primary sources, datasets, and verified information, enabling AI to synthesize insights while maintaining clear attribution and preventing the invention of false statistics or studies. AmICited.com exemplifies this principle by monitoring how AI systems cite and reference brands across GPTs, Perplexity, and Google AI Overviews—essentially tracking whether AI models stay within appropriate context boundaries when discussing specific companies or products, helping organizations understand if AI systems are hallucinating about their brand or accurately representing their information.

Successfully implementing contextual bracketing requires adherence to proven best practices:

Start with Minimal Context: Begin with the smallest possible set of information needed for accurate responses, then expand only when testing reveals gaps, preventing context pollution and maintaining model focus.

Use Consistent Delimiter Patterns: Establish and maintain uniform delimiter conventions across your entire system, making it easier for the model to recognize boundaries and reducing confusion from inconsistent formatting.

Test and Validate Boundaries: Systematically test whether the model respects defined boundaries by attempting to prompt it to venture beyond them, identifying and closing gaps before deployment.

Monitor for Context Drift: Continuously track whether the model’s responses remain within intended boundaries over time, as model behavior can shift with different input patterns or as knowledge bases evolve.

Implement Feedback Loops: Create mechanisms for users or human reviewers to flag instances where the model exceeded its boundaries, using this feedback to refine context definitions and improve future performance.

Version Your Context Definitions: Treat context boundaries as code, maintaining version history and documentation of changes, enabling rollback if new boundary definitions produce worse results.

Several platforms have built contextual bracketing capabilities into their core offerings. CustomGPT.ai implements context boundaries through its “context boundary” feature, which acts as a protective wall ensuring the AI only uses data provided by the user, never venturing into general knowledge or fabricating information—this approach has proven effective for organizations like MIT that require absolute accuracy in knowledge delivery. Anthropic’s Claude emphasizes context engineering principles, providing detailed documentation on how to structure prompts, manage context windows, and implement guardrails that keep responses within defined boundaries. AWS Bedrock Guardrails offers automated reasoning checks that verify generated content against mathematical, logic-based rules, with grounding scores indicating whether responses stay within source material (scores above 0.85 are required for finance applications). Shelf.io provides RAG solutions with context management capabilities, enabling organizations to implement retrieval-augmented generation while maintaining strict boundaries around what information the model can access and reference. AmICited.com serves a complementary role by monitoring how AI systems cite and reference your brand across multiple AI platforms, helping you understand whether AI models are respecting appropriate context boundaries when discussing your organization or staying within accurate, verified information about your brand—essentially providing visibility into whether contextual bracketing is working effectively in the wild.

Prompt engineering focuses primarily on crafting effective instructions and examples, while contextual bracketing is a systematic approach to managing all context elements through explicit delimiters and boundaries. Contextual bracketing is more structured and preventative, working at the input level to prevent hallucinations before they occur, whereas prompt engineering is broader and includes various optimization techniques.

Contextual bracketing prevents hallucinations by establishing clear information boundaries using delimiters like XML tags or markdown headers. This tells the AI model exactly what information it should consider and what it should ignore, preventing it from fabricating details or making assumptions about unstated information. By restricting the model's attention to defined boundaries, it reduces the likelihood of generating false facts or non-existent sources.

Common delimiters include XML tags (like

Contextual bracketing principles can be applied to most modern language models, though effectiveness varies. Models trained with better instruction-following capabilities (like Claude, GPT-4, and Gemini) tend to respect boundaries more reliably. The technique works best when combined with models that support structured outputs and have been trained on diverse, well-formatted data.

Start by organizing your system prompts into distinct sections using clear delimiters. Structure inputs and outputs using JSON or XML schemas. Use consistent delimiter patterns throughout. Implement few-shot examples that show the model exactly how to respect boundaries. Test extensively to ensure the model respects defined boundaries, and monitor performance over time to catch context drift.

Contextual bracketing can slightly increase token usage due to additional delimiters and structural markers, but this is typically offset by improved accuracy and reduced hallucinations. The technique actually improves efficiency by preventing the model from wasting tokens on fabricated information. In production systems, the accuracy gains far outweigh the minimal token overhead.

Contextual bracketing and RAG are complementary techniques. RAG retrieves relevant information from external sources, while contextual bracketing ensures the model stays within the boundaries of that retrieved information. Together, they create a powerful system where the model can access external knowledge while being constrained to only reference verified, retrieved sources.

Several platforms have built-in support: CustomGPT.ai offers context boundary features, Anthropic's Claude provides context engineering documentation and structured output support, AWS Bedrock Guardrails includes automated reasoning checks, and Shelf.io provides RAG with context management. AmICited.com monitors how AI systems cite your brand, helping verify that contextual bracketing is working effectively.

Contextual bracketing ensures AI systems provide accurate information about your brand. Use AmICited to track how AI models cite and reference your content across GPTs, Perplexity, and Google AI Overviews.

Learn how AI-friendly formatting with tables, lists, and clear sections improves AI parsing accuracy and increases your content's visibility in AI Overviews, Ch...

Context window explained: the maximum tokens an LLM can process at once. Learn how context windows affect AI accuracy, hallucinations, and brand monitoring acro...

Learn essential strategies to optimize your support content for AI systems like ChatGPT, Perplexity, and Google AI Overviews. Discover best practices for clarit...