Context Window

Context window explained: the maximum tokens an LLM can process at once. Learn how context windows affect AI accuracy, hallucinations, and brand monitoring acro...

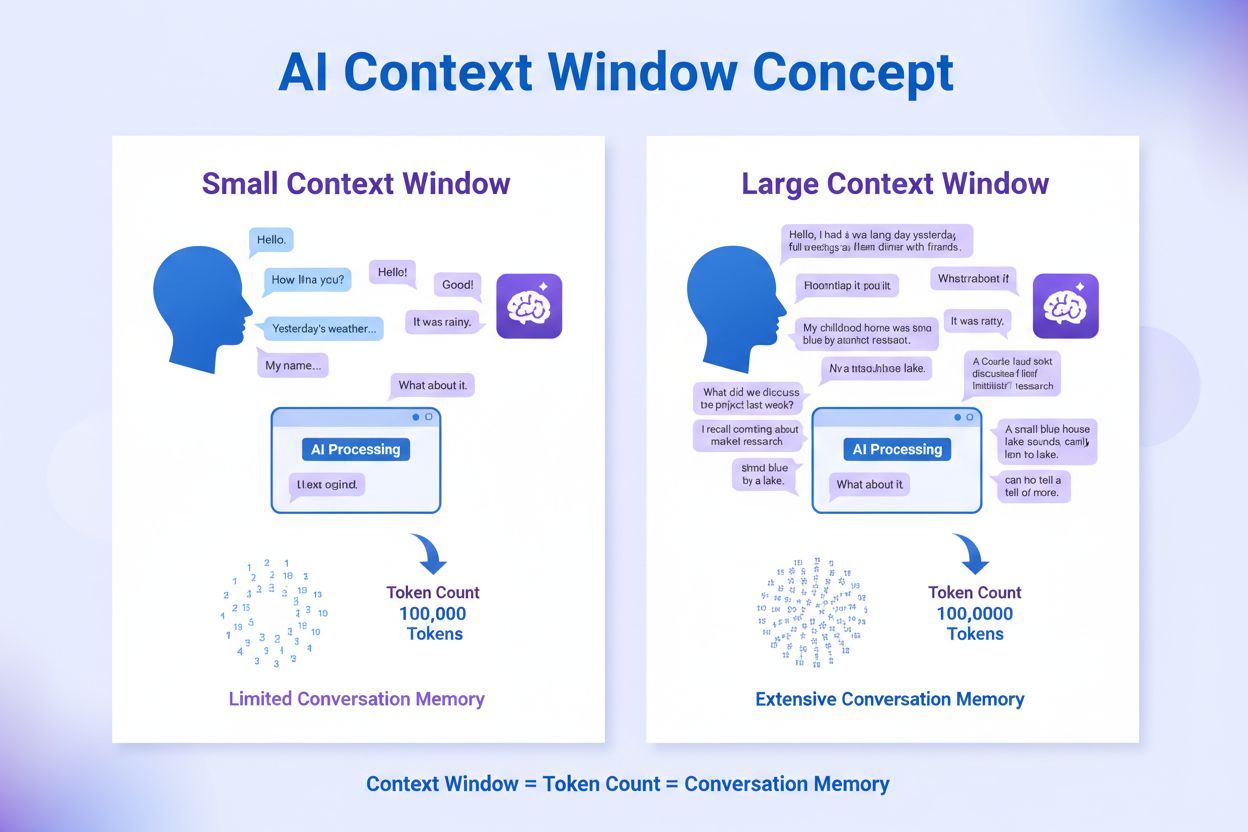

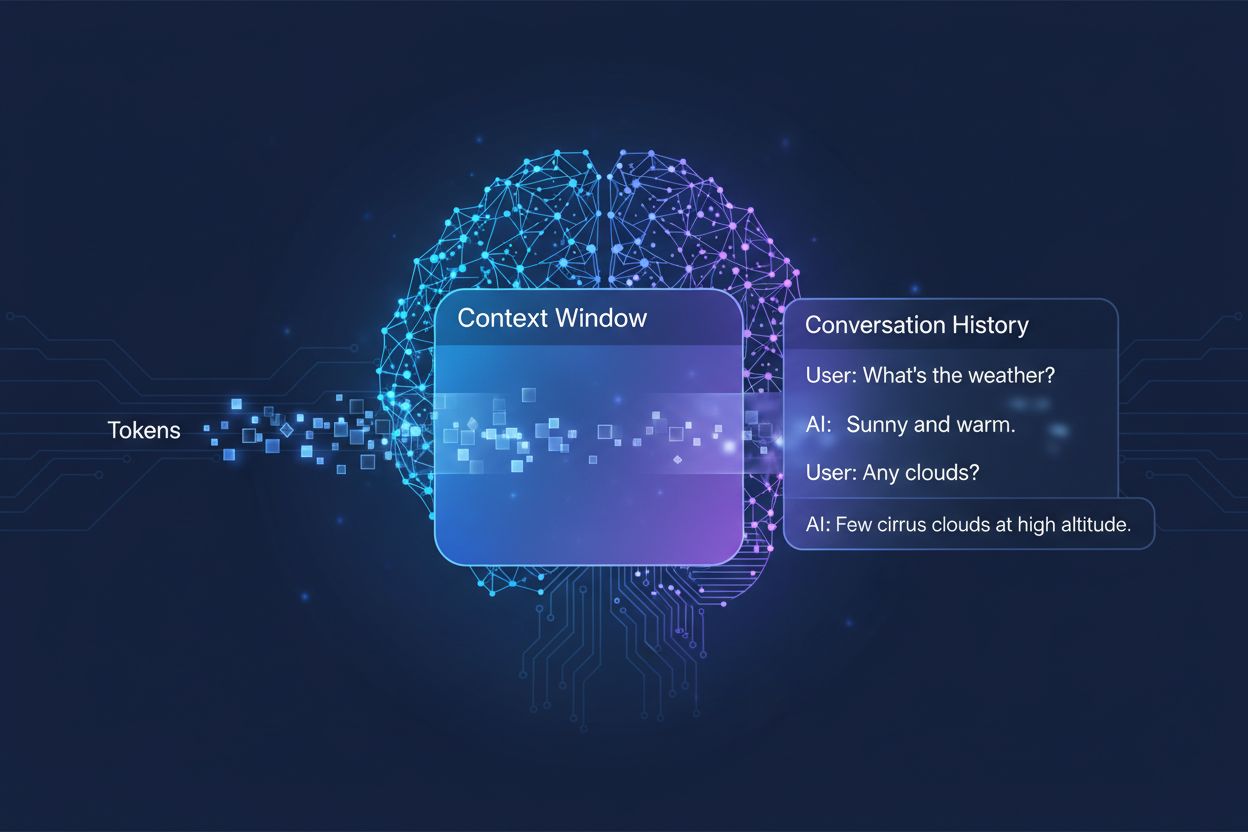

The amount of previous conversation an AI system considers when generating responses, measured in tokens. It determines how much text an AI can process simultaneously and directly impacts the quality and coherence of its outputs in multi-turn conversations.

The amount of previous conversation an AI system considers when generating responses, measured in tokens. It determines how much text an AI can process simultaneously and directly impacts the quality and coherence of its outputs in multi-turn conversations.

A context window is the maximum amount of text that an AI language model can process and reference at one time during a conversation or task. Think of it as the model’s working memory—just as humans can only hold a limited amount of information in their immediate awareness, AI models can only “see” a certain amount of text before and after their current position. This capacity is measured in tokens, which are small units of text that typically represent words or word fragments (on average, one English word equals approximately 1.5 tokens). Understanding your model’s context window is crucial because it directly determines how much information the AI can consider when generating responses, making it a fundamental constraint in how effectively the model can handle complex, multi-turn conversations or lengthy documents.

Modern language models, particularly transformer-based architectures, process text by converting it into tokens and then analyzing the relationships between all tokens within the context window simultaneously. The transformer architecture, introduced in the seminal 2017 paper “Attention is All You Need,” uses a mechanism called self-attention to determine which parts of the input are most relevant to each other. This attention mechanism allows the model to weigh the importance of different tokens relative to one another, enabling it to understand context and meaning across the entire window. However, this process becomes computationally expensive as the context window grows, since the attention mechanism must calculate relationships between every token and every other token—a quadratic scaling problem. The following table illustrates how different leading AI models compare in their context window capabilities:

| Model | Context Window (Tokens) | Release Date |

|---|---|---|

| GPT-4 | 128,000 | March 2023 |

| Claude 3 Opus | 200,000 | March 2024 |

| Gemini 1.5 Pro | 1,000,000 | May 2024 |

| GPT-4 Turbo | 128,000 | November 2023 |

| Llama 2 | 4,096 | July 2023 |

These varying capacities reflect different design choices and computational trade-offs made by each organization, with larger windows enabling more sophisticated applications but requiring more processing power.

The journey toward larger context windows represents one of the most significant advances in AI capability over the past decade. Early recurrent neural networks (RNNs) and long short-term memory (LSTM) models struggled with context, as they processed text sequentially and had difficulty retaining information from distant parts of the input. The breakthrough came in 2017 with the introduction of the Transformer architecture, which enabled parallel processing of entire sequences and dramatically improved the model’s ability to maintain context across longer texts. This foundation led to 2019’s GPT-2, which demonstrated impressive language generation with a 1,024-token context window, followed by 2020’s GPT-3 with 2,048 tokens, and ultimately 2023’s GPT-4 with 128,000 tokens. Each advancement mattered because it expanded what was possible: larger windows meant models could handle longer documents, maintain coherence across multi-turn conversations, and understand nuanced relationships between distant concepts in the text. The exponential growth in context window sizes reflects both improved architectural innovations and increased computational resources available to leading AI labs.

Larger context windows fundamentally expand what AI models can accomplish, enabling applications that were previously impossible or severely limited. Here are the key benefits:

Enhanced conversation continuity: Models can maintain awareness of entire conversation histories, reducing the need to re-explain context and enabling more natural, coherent multi-turn dialogues that feel genuinely continuous rather than fragmented.

Document processing at scale: Larger windows allow AI to analyze entire documents, research papers, or codebases in a single pass, identifying patterns and relationships across the full content without losing information from earlier sections.

Improved reasoning and analysis: With more context available, models can perform more sophisticated reasoning tasks that require understanding relationships between multiple concepts, making them more effective for research, analysis, and complex problem-solving.

Reduced context switching overhead: Users no longer need to manually summarize or re-introduce information repeatedly; the model can reference the full conversation history, reducing friction and improving efficiency in collaborative workflows.

Better handling of nuanced tasks: Applications like legal document review, medical record analysis, and code auditing benefit significantly from the ability to consider comprehensive context, leading to more accurate and thorough results.

Seamless multi-document workflows: Professionals can work with multiple related documents simultaneously, allowing the model to cross-reference information and identify connections that would be impossible with smaller context windows.

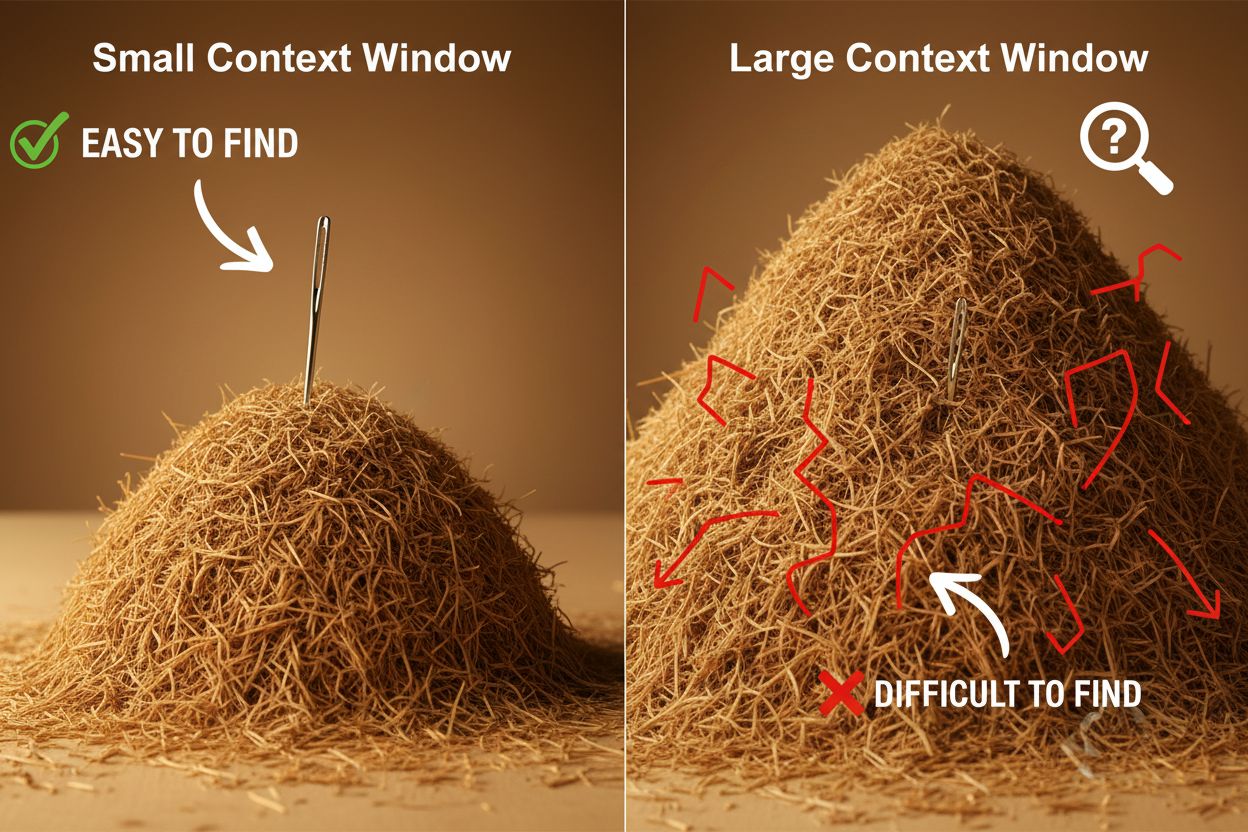

Despite their advantages, large context windows introduce significant technical and practical challenges that developers and users must navigate carefully. The most obvious challenge is computational cost: processing longer sequences requires exponentially more memory and processing power due to the quadratic scaling of the attention mechanism, making larger context windows substantially more expensive to run. This increased computational demand also creates latency issues, as longer context windows mean slower response times—a critical concern for real-time applications where users expect quick answers. Another subtle but important problem is the “needle in haystack” phenomenon, where models struggle to locate and utilize relevant information when it’s buried within a very large context window, sometimes performing worse than with smaller windows. Additionally, context rot occurs when information from the beginning of a long context window becomes less influential on the model’s output, as the attention mechanism may deprioritize distant tokens in favor of more recent ones. These challenges mean that simply maximizing context window size isn’t always the optimal solution for every use case.

Understanding context rot is essential for working effectively with large context windows: as sequences grow longer, tokens at the beginning of the context tend to have diminishing influence on the model’s output, meaning critical information can be effectively “forgotten” even though it’s technically within the window. This happens because the attention budget—the model’s capacity to meaningfully attend to all tokens—becomes stretched thin across a larger span of text. Fortunately, several sophisticated techniques have emerged to address these limitations. Retrieval-Augmented Generation (RAG) solves this by storing information in external databases and retrieving only the most relevant pieces when needed, effectively giving the model a larger effective knowledge base without requiring a massive context window. Context compaction techniques summarize or compress less relevant information, preserving the most important details while reducing token usage. Structured note-taking approaches encourage users to organize information hierarchically, making it easier for the model to prioritize and locate key concepts. These solutions work by being strategic about what information enters the context window and how it’s organized, rather than simply trying to fit everything into memory at once.

The expanded context windows of modern AI models have unlocked numerous real-world applications that were previously impractical or impossible. Customer support systems can now review an entire ticket history and related documentation in a single request, enabling more accurate and contextually appropriate responses without requiring customers to re-explain their situation. Document analysis and research has been transformed by models that can ingest entire research papers, legal contracts, or technical specifications, identifying key information and answering detailed questions about content that would take humans hours to review. Code review and software development benefits from context windows large enough to hold entire files or even multiple related files, allowing AI to understand architectural patterns and provide more intelligent suggestions. Long-form content creation and iterative writing workflows become more efficient when the model can maintain awareness of an entire document’s tone, style, and narrative arc throughout the editing process. Meeting transcription analysis and research synthesis leverage large context windows to extract insights from hours of conversation or dozens of source documents, identifying themes and connections that would be difficult to spot manually. These applications demonstrate that context window size directly translates to practical value for professionals across industries.

The trajectory of context window development suggests we’re moving toward even more dramatic expansions in the near term, with Gemini 1.5 Pro already demonstrating a 1,000,000-token context window and research labs exploring even larger capacities. Beyond raw size, the future likely involves dynamic context windows that intelligently adjust their size based on the task at hand, allocating more capacity when needed and reducing it for simpler queries to improve efficiency and reduce costs. Researchers are also making progress on more efficient attention mechanisms that reduce the computational penalty of larger windows, potentially breaking the quadratic scaling barrier that currently limits context size. As these technologies mature, we can expect context windows to become less of a constraint and more of a solved problem, allowing developers to focus on other aspects of AI capability and reliability. The convergence of larger windows, improved efficiency, and smarter context management will likely define the next generation of AI applications, enabling use cases we haven’t yet imagined.

A context window is the total amount of text (measured in tokens) that an AI model can process at once, while a token limit refers to the maximum number of tokens the model can handle. These terms are often used interchangeably, but context window specifically refers to the working memory available during a single inference, whereas token limit can also refer to output constraints or API usage limits.

Larger context windows generally improve response quality by allowing the model to consider more relevant information and maintain better conversation continuity. However, extremely large windows can sometimes hurt quality due to context rot, where the model struggles to prioritize important information among vast amounts of text. The optimal context window size depends on the specific task and how well the information is organized.

Larger context windows require more computational power because of the quadratic scaling of the attention mechanism in transformer models. The attention mechanism must calculate relationships between every token and every other token, so doubling the context window roughly quadruples the computational requirements. This is why larger context windows are more expensive to run and produce slower response times.

The 'needle in haystack' problem occurs when an AI model struggles to locate and utilize relevant information (the 'needle') when it's buried within a very large context window (the 'haystack'). Models sometimes perform worse with extremely large context windows because the attention mechanism becomes diluted across so much information, making it harder to identify what's actually important.

To maximize context window effectiveness, organize information clearly and hierarchically, place the most important information near the beginning or end of the context, use structured formats like JSON or markdown, and consider using Retrieval-Augmented Generation (RAG) to dynamically load only the most relevant information. Avoid overwhelming the model with irrelevant details that consume tokens without adding value.

Context window is the technical capacity of the model to process text at one time, while conversation history is the actual record of previous messages in a conversation. The conversation history must fit within the context window, but the context window also includes space for system prompts, instructions, and other metadata. A conversation history can be longer than the context window, requiring summarization or truncation.

No AI models currently have truly unlimited context windows, as all models have architectural and computational constraints. However, some models like Gemini 1.5 Pro offer extremely large windows (1,000,000 tokens), and techniques like Retrieval-Augmented Generation (RAG) can effectively extend the model's knowledge base beyond its context window by dynamically retrieving information as needed.

Context window size directly affects API costs because larger windows require more computational resources to process. Most AI API providers charge based on token usage, so using a larger context window means more tokens processed and higher costs. Some providers also charge premium rates for models with larger context windows, making it important to choose the right model size for your specific needs.

AmICited tracks how AI systems like ChatGPT, Perplexity, and Google AI Overviews cite and reference your content. Understand your AI visibility and monitor your brand mentions across AI platforms.

Context window explained: the maximum tokens an LLM can process at once. Learn how context windows affect AI accuracy, hallucinations, and brand monitoring acro...

Learn what context windows are in AI language models, how they work, their impact on model performance, and why they matter for AI-powered applications and moni...

Community discussion on AI context windows and their implications for content marketing. Understanding how context limits affect AI processing of your content.