Conversational Queries vs Keywords: Key Differences for AI Search

Understand how conversational queries differ from traditional keywords. Learn why AI search engines prefer natural language questions and how this impacts brand...

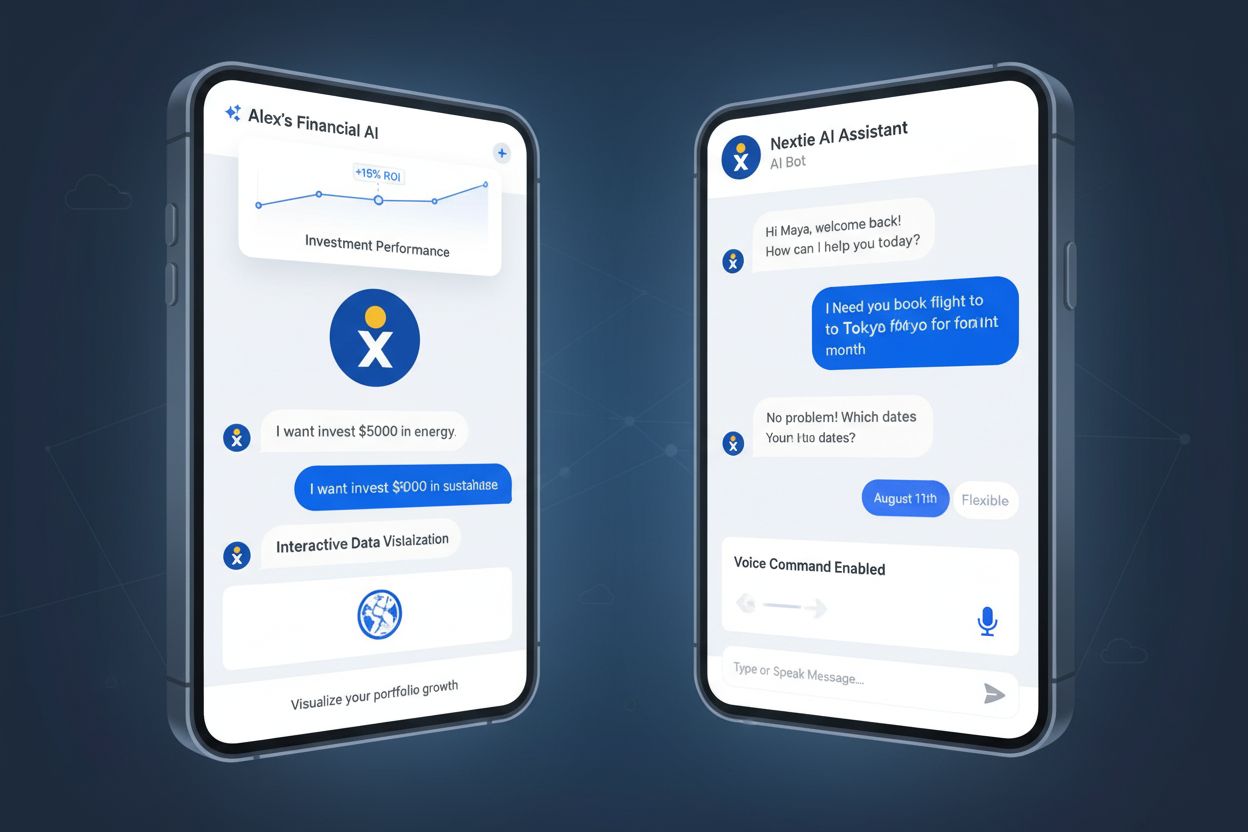

A conversational query is a natural language search question posed to AI systems in everyday language, mimicking human conversation rather than traditional keyword-based searches. These queries enable users to ask complex, multi-turn questions to AI chatbots, search engines, and voice assistants, which then interpret intent and context to provide synthesized answers.

A conversational query is a natural language search question posed to artificial intelligence systems in everyday language, designed to mimic human conversation rather than traditional keyword-based searches. Unlike conventional search queries that rely on short, structured keywords like “best restaurants NYC,” conversational queries use complete sentences and natural phrasing such as “What are the best restaurants near me in New York City?” These queries enable users to ask complex, multi-turn questions to AI chatbots, search engines, and voice assistants, which then interpret intent, context, and nuance to provide synthesized answers. Conversational queries represent a fundamental shift in how people interact with AI systems, moving from transactional information retrieval to dialogue-based problem-solving. The technology powering conversational queries relies on natural language processing (NLP) and machine learning algorithms that can understand context, disambiguate meaning, and recognize user intent from complex sentence structures. This evolution has profound implications for brand visibility, content strategy, and how organizations must optimize their digital presence in an increasingly AI-driven search landscape.

The journey toward conversational queries began decades ago with early attempts at machine translation. The Georgetown-IBM experiment in 1954 marked one of the first milestones, automatically translating 60 Russian sentences into English. However, conversational search as we know it today emerged much later. In the 1990s and early 2000s, NLP technologies gained popularity through applications like spam filtering, document classification, and basic rule-based chatbots that offered scripted responses. The real turning point came in the 2010s with the rise of deep learning models and neural network architectures that could analyze data sequences and process larger blocks of text. These advances enabled organizations to unlock insights buried in emails, customer feedback, support tickets, and social media posts. The breakthrough moment arrived with generative AI technology, which marked a major advancement in natural language processing. Software could now respond creatively and contextually, moving beyond simple processing to natural language generation. By 2024-2025, conversational queries have become mainstream, with 78% of enterprises having integrated conversational AI into at least one key operational area, according to McKinsey research. This rapid adoption reflects the technology’s maturity and business readiness, as companies recognize the value of conversational interfaces for customer engagement, operational efficiency, and competitive differentiation.

| Aspect | Traditional Keyword Search | Conversational Query |
|---|---|---|
| Query Format | Short, structured keywords (e.g., “best restaurants NYC”) | Long, natural language sentences (e.g., “What are the best restaurants near me?”) |
| User Intent | Navigational, one-off lookups with high specificity | Task-oriented, multi-turn dialogue with contextual depth |
| Processing Method | Direct keyword matching against indexed content | Natural language processing with semantic understanding and context analysis |
| Result Presentation | Ranked list of multiple linked pages | Single synthesized answer with source citations and secondary links |
| Optimization Target | Page-level relevance and keyword density | Passage/chunk-level relevance and semantic accuracy |
| Authority Signals | Links and engagement-based popularity at domain level | Mentions, citations, and entity-based authority at passage level |
| Context Handling | Limited; each query treated independently | Rich; maintains conversation history and user context across turns |
| Answer Generation | User must scan and synthesize information from multiple sources | AI generates direct, synthesized answer based on retrieved content |
| Typical Platforms | Google Search, Bing, traditional search engines | ChatGPT, Perplexity, Google AI Overviews, Claude, Gemini |
| Citation Frequency | Implicit through ranking; no direct attribution | Explicit; sources are cited or mentioned in generated responses |

Conversational queries are processed by several NLP components working in sequence. The process begins with tokenization, where the system breaks the user’s natural language input into individual words or subword units. Next, stemming and lemmatization reduce words to their root forms, so that variations like “restaurants” and “restaurant” are treated as the same term; semantically related words such as “dining” are connected through learned word representations rather than through stemming. The system then applies part-of-speech tagging, identifying whether words function as nouns, verbs, adjectives, or adverbs within the sentence. This grammatical understanding is crucial for comprehending sentence structure and meaning. Named-entity recognition identifies specific entities like locations (“New York City”), organizations, people, and events within the query. For example, in the query “What are the best Italian restaurants in Brooklyn?”, the system recognizes “Italian” as a cuisine type and “Brooklyn” as a geographic location. Word-sense disambiguation resolves words with multiple meanings by analyzing context. The word “bat” means something entirely different in “baseball bat” than in a sentence about nocturnal animals, and conversational AI systems must distinguish between these meanings based on the surrounding words.

The core of conversational query processing relies on deep learning models built on transformer architectures with self-attention mechanisms. Self-attention lets the model weigh every part of the input sequence against every other part at once and determine which parts matter most for understanding the user’s intent. Unlike recurrent neural networks, which process tokens one at a time, transformers process the whole sequence in parallel, which makes training on larger datasets practical and lets context from far back in a long text influence the meaning of what comes next. This capability is essential for handling multi-turn conversations where earlier exchanges inform later responses.
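To make the first few steps concrete, here is a minimal sketch of tokenization, stemming, and entity recognition applied to the Brooklyn query. It is a toy under stated assumptions, not how any production system works: the `GAZETTEER` lookup table, the `naive_stem` rule, and all function names are hypothetical stand-ins for trained models.

```python
import re

# Hypothetical gazetteer: a tiny lookup table standing in for a trained NER model.
GAZETTEER = {
    "brooklyn": "LOCATION",
    "new york city": "LOCATION",
    "italian": "CUISINE",
}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def naive_stem(token: str) -> str:
    """Strip a trailing plural 's'; a crude stand-in for real stemming."""
    if len(token) > 3 and token.endswith("s") and not token.endswith("ss"):
        return token[:-1]
    return token


def find_entities(tokens: list[str]) -> list[tuple[str, str]]:
    """Match spans of up to three tokens against the gazetteer, longest first."""
    entities = []
    i = 0
    while i < len(tokens):
        for size in (3, 2, 1):
            window = tokens[i:i + size]
            span = " ".join(window)
            if len(window) == size and span in GAZETTEER:
                entities.append((span, GAZETTEER[span]))
                i += size
                break
        else:
            # No gazetteer entry starts at this token; move on.
            i += 1
    return entities


query = "What are the best Italian restaurants in Brooklyn?"
tokens = tokenize(query)
stems = [naive_stem(t) for t in tokens]
entities = find_entities(tokens)
```

Here `stems` maps “restaurants” to “restaurant”, and `entities` tags “italian” as a cuisine and “brooklyn” as a location. Real systems replace each of these hand-written rules with statistical or neural components.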

The rise of conversational queries has fundamentally changed how brands must approach visibility and reputation management in AI systems. When users ask conversational questions to platforms like ChatGPT, Perplexity, Google AI Overviews, and Claude, these systems generate synthesized answers that cite or mention specific sources. Unlike traditional search results where ranking determines visibility, conversational AI responses often feature only a handful of sources, making citation frequency and accuracy critical. Over 73% of consumers now anticipate increased AI interactions, and 74% believe AI will significantly boost service efficiency, according to Zendesk research. This shift means that brands not appearing in conversational AI responses risk losing significant visibility and authority. Organizations must now implement AI brand monitoring systems that track how their brand appears across conversational platforms, assess sentiment in AI-generated mentions, and identify gaps where they should be cited but aren’t. The challenge is more complex than traditional search monitoring because conversational queries generate dynamic, context-dependent answers. A brand might be cited for one conversational query but omitted from a similar query depending on how the AI system interprets intent and retrieves relevant sources. This variability requires continuous monitoring and rapid response to inaccuracies. Brands must also ensure their content is structured for AI discoverability through schema markup, clear entity definitions, and authoritative positioning. The stakes are high: 97% of executives acknowledge that conversational AI positively influences user satisfaction, and 94% report boosted agent productivity, making accurate brand representation in these systems a competitive necessity.

One of the defining characteristics of conversational queries is their ability to support multi-turn conversations where context from previous exchanges informs subsequent responses. Unlike traditional search where each query is independent, conversational AI systems maintain conversation history and use it to refine understanding and provide more relevant answers. For example, a user might ask “What are the best restaurants in Barcelona?” and then follow up with “Which ones have vegetarian options?” The system must understand that “ones” refers to the previously mentioned restaurants and that the user is filtering results based on dietary preferences. This contextual understanding requires sophisticated context management systems that track conversation state, user preferences, and evolving intent throughout the dialogue. The system must distinguish between new information and clarifications, recognize when users change topics, and maintain coherence across multiple exchanges. This capability is particularly important for multi-turn query fan-out, where AI systems like Google’s AI Mode break down a single conversational query into multiple sub-queries to provide comprehensive answers. For instance, a query like “Plan a weekend trip to Barcelona” might fan out into sub-queries about attractions, restaurants, transportation, and accommodations. The system must then synthesize answers from these sub-queries while maintaining consistency and relevance to the original intent. This approach significantly improves answer quality and user satisfaction because it addresses multiple dimensions of the user’s need simultaneously. For brands and content creators, understanding multi-turn conversation dynamics is essential. Content must be structured to address not just initial questions but also likely follow-up queries and related topics. 
This requires creating comprehensive, interconnected content hubs that anticipate user needs and provide clear pathways for exploring related information.
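The Barcelona follow-up can be sketched as a small piece of conversation state. This is an illustrative assumption about one simple way to carry context forward, not a description of how any particular assistant does it; the `Conversation` class and the `REFERRING_WORDS` list are hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical set of words that signal a reference to an earlier turn.
REFERRING_WORDS = {"ones", "them", "those", "it", "they"}


@dataclass
class Conversation:
    """Keeps the turn history and resolves follow-ups against it."""

    history: list[str] = field(default_factory=list)

    def resolve(self, query: str) -> str:
        """Return the query, with the previous turn attached if it refers back."""
        words = {w.strip("?.,!").lower() for w in query.split()}
        needs_context = bool(words & REFERRING_WORDS) and bool(self.history)
        resolved = (
            f"{query} (context: {self.history[-1]})" if needs_context else query
        )
        # Store the raw query so the next turn can refer back to it.
        self.history.append(query)
        return resolved


chat = Conversation()
first = chat.resolve("What are the best restaurants in Barcelona?")
follow_up = chat.resolve("Which ones have vegetarian options?")
```

The first question passes through unchanged, while the follow-up is expanded with the earlier turn so that “ones” can be interpreted as the Barcelona restaurants. This sketch only looks one turn back; a real context manager would also track topic changes and user preferences across the whole dialogue.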

Optimizing for conversational queries requires a fundamental shift from traditional search engine optimization (SEO) to what experts call Generative Engine Optimization (GEO) or Answer Engine Optimization (AEO). The optimization target changes from page-level relevance to passage-level and chunk-level relevance. Rather than optimizing entire pages for specific keywords, content creators must ensure that individual sections, paragraphs, or passages directly answer specific questions that users might ask conversationally. This means structuring content with clear question-and-answer formats, using descriptive headings that match natural language queries, and providing concise, authoritative answers to common questions. Authority signals also shift fundamentally. Traditional SEO relies heavily on backlinks and domain authority, but conversational AI systems prioritize mentions and citations at the passage level. A brand might earn more visibility from being mentioned as an expert source in a relevant passage than from having a high-authority homepage. This requires creating original, research-backed content that establishes clear expertise and earns citations from other authoritative sources. Schema markup becomes increasingly important for helping AI systems understand and extract information from content. Structured data using formats like Schema.org helps AI systems recognize entities, relationships, and facts within content, making it easier for conversational AI to cite and reference specific information. Brands should implement schema markup for key entities, products, services, and expertise areas. Content must also address search intent more explicitly. Conversational queries often reveal intent more clearly than keyword searches because users phrase questions naturally. 
A conversational query like “How do I fix a leaky faucet?” reveals clear intent to solve a specific problem, whereas a keyword search for “leaky faucet” might indicate browsing, research, or purchase intent. Understanding and addressing this intent explicitly in content improves the likelihood of being cited in conversational AI responses. Additionally, content should be comprehensive and authoritative. Conversational AI systems tend to cite sources that provide complete, well-researched answers rather than thin or promotional content. Investing in original research, expert interviews, and data-driven insights increases the likelihood of being cited in conversational responses.
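As one possible illustration of schema markup for question-and-answer content, the sketch below builds a Schema.org `FAQPage` document as JSON-LD. The `FAQPage`, `Question`, `Answer`, `mainEntity`, and `acceptedAnswer` names come from the Schema.org vocabulary; the `faq_jsonld` helper and the answer text are placeholders invented for this example.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build a Schema.org FAQPage JSON-LD document from question/answer pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2)


# Placeholder content; the answer text is illustrative only.
markup = faq_jsonld([
    (
        "How do I fix a leaky faucet?",
        "Shut off the water supply, then replace the worn washer or cartridge.",
    ),
])
```

The resulting string would typically be embedded in a page inside a `<script type="application/ld+json">` element. Whether a given AI system actually reads this markup is not something the sketch can guarantee.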

Different AI platforms handle conversational queries with varying approaches, and understanding these differences is crucial for brand monitoring and optimization. ChatGPT, developed by OpenAI, processes conversational queries through a large language model trained on diverse internet data. It maintains conversation history within a session and can engage in extended multi-turn dialogues. ChatGPT often synthesizes information without explicitly citing sources in the same way search engines do, though it can be prompted to provide source attribution. Perplexity AI positions itself as an “answer engine” specifically designed for conversational search. It explicitly cites sources for its answers, displaying them alongside the synthesized response. This makes Perplexity particularly important for brand monitoring because citations are visible and trackable. Perplexity’s focus on generating accurate answers to search-like questions makes it a direct competitor to traditional search engines. Google AI Overviews (formerly Search Generative Experience, or SGE) appear at the top of Google search results for many queries. These AI-generated summaries synthesize information from multiple sources and often include citations. The integration with traditional Google Search means that AI Overviews reach a massive audience and significantly impact click-through rates to cited sources. Research from Pew Research Center found that Google searchers who encountered an AI overview were substantially less likely to click on results links, highlighting the importance of being cited in these overviews. Claude, developed by Anthropic, is known for its nuanced understanding of context and ability to engage in sophisticated conversations. It emphasizes safety and accuracy, making it valuable for professional and technical queries. Gemini (Google’s conversational AI) integrates with Google’s ecosystem and benefits from Google’s vast data resources. 
Its association with traditional Google Search gives it significant competitive advantages in the conversational AI market. Each platform has different citation practices, answer generation approaches, and user bases, requiring tailored monitoring and optimization strategies for each.

The trajectory of conversational queries is moving toward increasingly sophisticated, context-aware, and personalized interactions. By 2030, conversational AI is expected to shift from reactive to proactive, with virtual assistants initiating helpful actions based on user behavior, context, and real-time data rather than waiting for explicit prompts. These systems won’t simply respond to questions; they’ll anticipate needs, suggest relevant information, and offer solutions before users ask. The rise of autonomous agents and agentic AI represents another significant evolution. Organizations are piloting autonomous AI agents in workflows like claims processing, customer onboarding, and order management. Deloitte research indicates that 25% of companies using generative AI will run agentic pilots in 2025, growing to 50% by 2027. These systems make decisions across tools, schedule actions, and learn from outcomes, reducing manual handoffs and enabling self-driving service. Multimodal conversational AI is becoming standard, combining text, voice, images, and video for richer interactions. Rather than text-only queries, users will be able to ask questions while showing images, videos, or documents, and AI systems will integrate information from multiple modalities to provide comprehensive answers. This evolution will require brands to optimize content across multiple formats and ensure that visual and multimedia content is discoverable and citable by AI systems. Governance and ethics are becoming increasingly important as conversational AI becomes more prevalent. Over 50% of organizations now involve privacy, legal, IT, and security teams in AI oversight, marking a shift from siloed compliance to multi-disciplinary governance. Brands must ensure that their content and data practices align with emerging AI ethics standards and regulatory requirements. 
The convergence of conversational AI with other technologies like augmented reality (AR), virtual reality (VR), and the Internet of Things (IoT) will create new opportunities and challenges. Imagine conversational AI integrated with AR that allows users to ask questions about products they see in the real world, or IoT devices that proactively offer assistance based on user behavior patterns. These integrations will require new approaches to content optimization and brand visibility. For organizations, the strategic imperative is clear: conversational queries are no longer an emerging trend but a fundamental shift in how people interact with information and make decisions. Brands that invest in understanding conversational query patterns, optimizing content for AI citation, and monitoring their presence across conversational platforms will gain significant competitive advantages. Those that ignore this shift risk losing visibility, authority, and customer trust in an increasingly AI-driven digital landscape.

Traditional keyword searches rely on short, structured terms like 'best restaurants NYC,' while conversational queries use natural language like 'What are the best restaurants near me in New York City?' Conversational queries are longer, context-aware, and designed to mimic human conversation. They leverage natural language processing (NLP) to understand intent, context, and nuance, whereas keyword searches match terms directly against indexed content. According to research from Aleyda Solis, AI search handles long, conversational-based, multi-turn queries with high task-oriented intent, compared to traditional search's short, keyword-based, one-off queries with navigational intent.

Natural language processing (NLP) is the core technology enabling conversational queries. NLP allows AI systems to interpret, manipulate, and comprehend human language by breaking down sentences into components, understanding context, and extracting meaning. Machine learning algorithms within NLP systems recognize patterns, disambiguate word meanings, and identify user intent from complex sentence structures. AWS defines NLP as technology that enables computers to interpret, manipulate, and comprehend human language, which is essential for conversational AI systems to process and respond to natural language questions accurately.

Brand monitoring for conversational queries involves tracking how brands appear in AI-generated answers across platforms like ChatGPT, Perplexity, Google AI Overviews, and Claude. Organizations use automated alerts, keyword tracing, and periodic audits to identify brand mentions, assess sentiment, and measure citation frequency. Monitoring systems flag inaccuracies, track share of voice against competitors, and identify gaps where brands should appear but don't. This is critical because conversational AI systems increasingly shape consumer perceptions, and brands must ensure accurate representation in these dynamic, synthesized responses.
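Citation frequency and share of voice can be computed in a few lines once AI answers have been collected. The sketch below assumes the answers are already available as plain strings; the brand names and sample answers are made up, and `count_mentions` and `share_of_voice` are hypothetical helper names rather than part of any monitoring product.

```python
import re
from collections import Counter


def count_mentions(answers: list[str], brands: list[str]) -> Counter:
    """Count how many answers mention each brand (case-insensitive, whole word)."""
    counts = Counter({brand: 0 for brand in brands})
    for answer in answers:
        for brand in brands:
            pattern = rf"\b{re.escape(brand)}\b"
            if re.search(pattern, answer, flags=re.IGNORECASE):
                counts[brand] += 1
    return counts


def share_of_voice(counts: Counter) -> dict[str, float]:
    """Convert mention counts into each brand's fraction of all mentions."""
    total = sum(counts.values())
    return {brand: (n / total if total else 0.0) for brand, n in counts.items()}


# Made-up sample answers and brand names, for illustration only.
answers = [
    "Acme and Globex both offer this feature.",
    "Many reviewers recommend Acme for small teams.",
    "Initech is a common choice for enterprises.",
    "There is no clear leader in this category.",
]
brands = ["Acme", "Globex", "Initech"]

counts = count_mentions(answers, brands)
shares = share_of_voice(counts)
```

With this sample, Acme appears in two of the four answers and holds half of all brand mentions. Because AI answers vary from run to run, counts like these are more meaningful when the same prompts are sampled repeatedly over time.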

Query fan-out is a technique used by AI search engines like Google's AI Mode to break down a single conversational query into multiple sub-queries for more comprehensive results. Instead of matching one query directly, the system expands the user's question into related queries to retrieve diverse, relevant information. For example, a conversational query like 'What should I do for a weekend trip to Barcelona?' might fan out into sub-queries about attractions, restaurants, transportation, and accommodations. This approach improves answer quality and relevance by addressing multiple aspects of the user's intent simultaneously.
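A rough sketch of the fan-out idea follows. It assumes a fixed list of facets and a toy keyword index; real systems such as Google's AI Mode are not documented at this level here, so `FACETS`, `CORPUS`, and the three functions are all hypothetical illustrations.

```python
# Hypothetical facets; a real system would likely generate sub-queries with a model.
FACETS = ["attractions", "restaurants", "transportation", "accommodations"]

# Toy corpus standing in for a search index (all entries are made up).
CORPUS = {
    "doc1": "Top attractions in Barcelona include museums and parks.",
    "doc2": "Barcelona restaurants range from tapas bars to fine dining.",
    "doc3": "Public transportation in Barcelona covers metro, bus, and tram.",
    "doc4": "Accommodations in Barcelona include hotels and hostels.",
    "doc5": "Restaurants in Lisbon are known for seafood.",
}


def fan_out(destination: str) -> list[str]:
    """Expand one trip-planning query into one sub-query per facet."""
    return [f"{facet} {destination}" for facet in FACETS]


def retrieve(sub_query: str) -> list[str]:
    """Return ids of documents that contain every term of the sub-query."""
    terms = sub_query.lower().split()
    return [
        doc_id
        for doc_id, text in CORPUS.items()
        if all(term in text.lower() for term in terms)
    ]


def answer_sources(destination: str) -> dict[str, list[str]]:
    """Run every sub-query and collect the sources found for each."""
    return {sub_query: retrieve(sub_query) for sub_query in fan_out(destination)}


sources = answer_sources("Barcelona")
```

Each sub-query pulls in a different document, which is why content that covers only one facet of a topic can still be cited for a broad question. The Lisbon document is never retrieved because it fails the destination term.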

Conversational queries are critical for AI monitoring because they represent how modern users interact with AI systems. Unlike traditional search, conversational queries generate synthesized answers that cite multiple sources, making brand visibility and citation tracking essential. Platforms like AmICited monitor how brands appear in conversational AI responses across Perplexity, ChatGPT, Google AI Overviews, and Claude. Understanding conversational query patterns helps brands optimize their content for AI citation, track competitive positioning, and ensure accurate representation in AI-generated answers that increasingly influence consumer decisions.

Adoption of conversational AI and queries is accelerating rapidly. According to Master of Code Global, 78% of companies have integrated conversational AI into at least one key operational area by 2025, with 85% of decision-makers forecasting widespread adoption within five years. Nielsen Norman Group research shows that generative AI is reshaping search behaviors, with users increasingly incorporating AI chatbots alongside traditional search. Additionally, 73% of consumers anticipate increased AI interactions, and 74% believe AI will significantly boost service efficiency, demonstrating strong market momentum toward conversational query adoption.

Conversational queries require a shift in content strategy from keyword-focused to intent-focused and passage-level optimization. Rather than targeting single keywords, content must address comprehensive topics, answer specific questions, and provide context. Aleyda Solis's research shows that AI search optimization targets passage and chunk-level relevance rather than page-level relevance. Brands must create authoritative, well-structured content with clear answers to natural language questions, use schema markup for better AI discoverability, and focus on establishing entity-based authority through mentions and citations rather than traditional link-based popularity signals.
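The difference between page-level and passage-level relevance can be illustrated by scoring each passage of a page separately against a question. The sketch below uses word overlap (Jaccard similarity) as a deliberately crude stand-in for the semantic matching that AI search systems perform; the sample page and the function names are invented for the example.

```python
import re


def tokens(text: str) -> set[str]:
    """Return the set of lowercase alphanumeric words in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def split_passages(page: str) -> list[str]:
    """Split a page into passages on blank lines."""
    return [p.strip() for p in page.split("\n\n") if p.strip()]


def overlap_score(question: str, passage: str) -> float:
    """Jaccard overlap of word sets; a crude proxy for semantic relevance."""
    q, p = tokens(question), tokens(passage)
    union = q | p
    return len(q & p) / len(union) if union else 0.0


def best_passage(question: str, page: str) -> str:
    """Return the passage with the highest overlap score for the question."""
    return max(split_passages(page), key=lambda p: overlap_score(question, p))


# Made-up page content, for illustration only.
page = """Our company has offices in three cities.

To fix a leaky faucet, shut off the water and replace the worn washer.

Contact our sales team for pricing on plumbing services."""

best = best_passage("How do I fix a leaky faucet?", page)
```

Only the middle passage answers the question, so it is the one selected, regardless of what the rest of the page says. This is the practical reason for writing sections that can stand alone as direct answers.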
