Crawl Budget Optimization for AI

Learn how to optimize crawl budget for AI bots like GPTBot and Perplexity. Discover strategies to manage server resources, improve AI visibility, and control ho...

Crawl budget is the number of pages search engines allocate resources to crawl on a website within a specific timeframe, determined by crawl capacity limit and crawl demand. It represents the finite resources search engines distribute across billions of websites to discover, crawl, and index content efficiently.

Crawl budget is the number of pages that search engines allocate resources to crawl on a website within a specific timeframe, typically measured daily or monthly. It represents a finite allocation of computational resources that search engines like Google, Bing, and emerging AI crawlers distribute across billions of websites on the internet. The concept emerged from the fundamental reality that search engines cannot crawl every page on every website simultaneously—they must prioritize and allocate their limited infrastructure strategically. Crawl budget directly impacts whether your website’s pages get discovered, indexed, and ultimately ranked in search results. For large websites with thousands or millions of pages, managing crawl budget efficiently can mean the difference between comprehensive indexing and having important pages remain undiscovered for weeks or months.

The concept of crawl budget became formalized in search engine optimization around 2009 when Google began publishing guidance on how their crawling systems work. Initially, most SEO professionals focused on traditional ranking factors like keywords and backlinks, largely ignoring the technical infrastructure that made indexing possible. However, as websites grew exponentially in size and complexity, particularly with the rise of ecommerce platforms and content-heavy sites, search engines faced unprecedented challenges in efficiently crawling and indexing all available content. Google acknowledged this limitation and introduced the concept of crawl budget to help webmasters understand why not all their pages were being indexed despite being technically accessible. According to Google Search Central, the web exceeds Google’s ability to explore and index every available URL, making crawl budget management essential for large-scale websites. Today, with AI crawler traffic surging 96% between May 2024 and May 2025, and GPTBot’s share jumping from 5% to 30%, crawl budget has become even more critical as multiple crawling systems compete for server resources. This evolution reflects the broader shift toward generative engine optimization (GEO) and the need for brands to ensure visibility across both traditional search and AI-powered platforms.

Crawl budget is determined by two primary components: crawl capacity limit and crawl demand. The crawl capacity limit represents the maximum number of simultaneous connections and the time delay between fetches that a search engine can use without overwhelming a website’s servers. This limit is dynamic and adjusts based on several factors. If a website responds quickly to crawler requests and returns minimal server errors, the capacity limit increases, allowing search engines to use more parallel connections and crawl more pages. Conversely, if a site experiences slow response times, timeouts, or frequent 5xx server errors, the capacity limit decreases as a protective measure to prevent overloading the server. Crawl demand, the second component, reflects how often search engines want to revisit and recrawl content based on its perceived value and update frequency. Popular pages with numerous backlinks and high search traffic receive higher crawl demand and are recrawled more frequently. News articles and frequently updated content receive higher crawl demand than static pages like terms of service. The combination of these two factors—what the server can handle and what search engines want to crawl—determines your effective crawl budget. This balanced approach ensures search engines can discover fresh content while respecting server capacity constraints.

| Concept | Definition | Measurement | Impact on Indexing | Primary Control |
|---|---|---|---|---|
| Crawl Budget | Total pages search engines allocate to crawl within a timeframe | Pages per day/month | Direct: determines which pages get discovered | Indirect (authority, speed, structure) |
| Crawl Rate | Actual number of pages crawled per day | Pages per day | Informational: shows current crawling activity | Server response time, page speed |
| Crawl Capacity Limit | Maximum simultaneous connections server can handle | Connections per second | Constrains crawl budget ceiling | Server infrastructure, hosting quality |
| Crawl Demand | How often search engines want to recrawl content | Recrawl frequency | Determines priority within budget | Content freshness, popularity, authority |
| Index Coverage | Percentage of crawled pages actually indexed | Indexed pages / crawled pages | Outcome metric: shows indexing success | Content quality, canonicalization, noindex tags |
| Robots.txt | File controlling which URLs search engines can crawl | URL patterns blocked | Protective: prevents budget waste on unwanted pages | Direct: you control via robots.txt rules |

Crawl budget operates through a system of algorithms and resource allocation that search engines continuously adjust. When Googlebot (Google’s primary crawler) visits your website, it evaluates multiple signals to determine how aggressively to crawl. The crawler first assesses your server’s health by monitoring response times and error rates. If your server consistently responds within 200-500 milliseconds and returns minimal errors, Google interprets this as a healthy, well-maintained server capable of handling increased crawl traffic. The crawler then raises the crawl capacity limit, potentially using more parallel connections to fetch pages simultaneously. This is why page speed optimization is so critical: faster pages allow search engines to crawl more URLs in the same timeframe. Conversely, if pages take 3-5 seconds to load or frequently time out, Google reduces the capacity limit to protect your server from being overwhelmed. Beyond server health, search engines analyze your site’s URL inventory to determine crawl demand. They examine which pages have internal links pointing to them, how many external backlinks each page receives, and how frequently content is updated. Pages linked from your homepage receive higher priority than pages buried deep in your site hierarchy. Pages with recent updates and high traffic are recrawled more often. Search engines also use sitemaps as guidance to understand your site’s structure and content priorities, though sitemaps are suggestions rather than absolute requirements. The algorithm continuously balances these factors, dynamically adjusting your crawl budget based on real-time performance metrics and content value assessments.
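The two server-health signals described above, response time and server error rate, can be estimated from your own access logs. The following is a minimal sketch, assuming the log has already been parsed into `(status_code, response_ms)` pairs for one crawler; the `crawl_health` function and the sample values are hypothetical, and it does not reproduce how any search engine actually scores a server.

```python
from statistics import median


def crawl_health(records):
    """Summarize server health for a set of crawler requests.

    records: iterable of (status_code, response_ms) tuples, an assumed
    pre-parsed form of access-log entries for a single crawler.
    """
    records = list(records)
    if not records:
        return {"requests": 0, "median_ms": None, "error_rate": 0.0}

    times = [ms for _, ms in records]
    # Count 5xx responses, the server errors that lower crawl capacity.
    errors = sum(1 for status, _ in records if 500 <= status <= 599)
    return {
        "requests": len(records),
        "median_ms": median(times),
        "error_rate": errors / len(records),
    }


# Hypothetical sample: three fast successes and one slow 503.
sample = [(200, 180), (200, 240), (503, 4100), (200, 310)]
print(crawl_health(sample))
```

Tracking the median rather than the mean keeps a single timeout from hiding an otherwise healthy response profile, while the error rate surfaces the 5xx responses separately.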

Crawl budget has a direct, practical impact on SEO performance, particularly for large websites and rapidly growing platforms. When a website’s crawl budget is exhausted before all important pages are discovered, those undiscovered pages cannot be indexed and therefore cannot rank in search results. The revenue impact is direct: pages that aren’t indexed generate zero organic traffic. For ecommerce sites with hundreds of thousands of product pages, inefficient crawl budget management means some products never appear in search results, directly reducing sales. For news publishers, slow crawling means breaking news stories take days to appear in search results instead of hours, reducing their competitive advantage. Research from Backlinko and Conductor indicates that sites with optimized crawl budgets see significantly faster indexing of new and updated content. In one documented case, a site that improved page load speed by 50% saw a 4x increase in daily crawl volume, from 150,000 to 600,000 URLs per day. As a result, new content was discovered and indexed within hours instead of weeks. For AI search visibility, crawl budget becomes even more critical. As AI crawlers like GPTBot, ClaudeBot, and PerplexityBot compete for server resources alongside traditional search engine crawlers, websites with poor crawl budget optimization may find their content isn’t being accessed frequently enough by AI systems to be cited in AI-generated responses. This directly impacts your visibility in AI Overviews, ChatGPT responses, and other generative search platforms that AmICited monitors. Organizations that fail to optimize crawl budget often experience cascading SEO problems: new pages take weeks to index, content updates aren’t reflected in search results quickly, and competitors with better-optimized sites capture search traffic that should belong to them.

Understanding what wastes crawl budget is essential for optimization. Duplicate content represents one of the largest sources of wasted crawl budget. When search engines encounter multiple versions of the same content—whether through URL parameters, session identifiers, or multiple domain variants—they must process each version separately, consuming crawl budget without adding value to their index. A single product page on an ecommerce site might generate dozens of duplicate URLs through different filter combinations (color, size, price range), each consuming crawl budget. Redirect chains waste crawl budget by forcing search engines to follow multiple hops before reaching the final destination page. A redirect chain of five or more hops can consume significant crawl resources, and search engines may abandon following the chain entirely. Broken links and soft 404 errors (pages that return a 200 status code but contain no actual content) force search engines to crawl pages that provide no value. Low-quality content pages—such as thin pages with minimal text, auto-generated content, or pages that don’t add unique value—consume crawl budget that could be spent on high-quality, unique content. Faceted navigation and session identifiers in URLs create virtually infinite URL spaces that can trap crawlers in loops. Non-indexable pages included in XML sitemaps mislead search engines about which pages deserve crawling priority. High page load times and server timeouts reduce crawl capacity by signaling to search engines that your server cannot handle aggressive crawling. Poor internal link structure buries important pages deep in your site hierarchy, making them harder for crawlers to discover and prioritize. Each of these issues individually reduces crawl efficiency; combined, they can result in search engines crawling only a fraction of your important content.
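To see how parameter and domain variants multiply into duplicate URLs, it can help to normalize a crawl export and group the URLs that collapse to the same page. The sketch below is illustrative only: the `IGNORED_PARAMS` set is a hypothetical, site-specific choice (on some sites a `color` or `size` parameter does change the content), and treating `www` and non-`www` hosts as the same page is likewise an assumption.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters assumed not to change the page content.
IGNORED_PARAMS = {"sessionid", "utm_source", "sort", "color", "size"}


def normalize(url):
    """Collapse scheme, www prefix, trailing slash, and ignored parameters."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    )
    host = parts.netloc.lower()
    if host.startswith("www."):
        host = host[4:]
    path = parts.path.rstrip("/") or "/"
    return urlunsplit(("https", host, path, urlencode(kept), ""))


def group_duplicates(urls):
    """Return {normalized_url: [original urls]} for groups larger than one."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalize(url)].append(url)
    return {key: members for key, members in groups.items() if len(members) > 1}


crawled = [
    "http://www.example.com/shoes?color=red",
    "https://example.com/shoes/?size=9&sessionid=abc",
    "https://example.com/shoes",
    "https://example.com/hats?page=2",
]
for canonical, variants in group_duplicates(crawled).items():
    print(canonical, len(variants))
```

Each group with more than one member is a candidate for a canonical tag, a redirect, or a robots.txt rule, depending on whether the variants need to stay reachable for users.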

Optimizing crawl budget requires a multi-faceted approach addressing both technical infrastructure and content strategy. Improve page speed by optimizing images, minifying CSS and JavaScript, leveraging browser caching, and using content delivery networks (CDNs). Faster pages allow search engines to crawl more URLs in the same timeframe. Consolidate duplicate content by implementing proper redirects for domain variants (HTTP/HTTPS, www/non-www), using canonical tags to indicate preferred versions, and blocking internal search result pages from crawling via robots.txt. Manage URL parameters by using robots.txt to block parameter-based URLs that create duplicate content, or by configuring parameter handling where a search engine’s webmaster tools still offer it (Google retired the URL Parameters tool in Search Console in 2022, so for Google rely on robots.txt rules and canonical tags). Fix broken links and redirect chains by auditing your site for broken links and ensuring redirects point directly to final destinations rather than creating chains. Clean up XML sitemaps by removing non-indexable pages, expired content, and pages that return error status codes. Include only pages you want indexed and that provide unique value. Improve internal link structure by ensuring important pages have multiple internal links pointing to them, creating a flat hierarchy that distributes link authority throughout your site. Block low-value pages using robots.txt to prevent crawlers from wasting budget on admin pages, duplicate search results, shopping cart pages, and other non-indexable content. Monitor crawl stats regularly using Google Search Console’s Crawl Stats report to track daily crawl volume, identify server errors, and spot trends in crawling behavior. Increase server capacity if you consistently see crawl rates hitting your server’s capacity limit, since this signals that search engines want to crawl more than your infrastructure can handle. Use structured data to help search engines understand your content better, potentially increasing crawl demand for high-quality pages. Maintain updated sitemaps with the <lastmod> tag to signal when content has been updated, helping search engines prioritize recrawling fresh content.
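Before deploying robots.txt rules that block low-value pages, it is worth checking that they block what you intend and nothing more. Here is a minimal sketch using Python's standard `urllib.robotparser`; the rules, paths, and the `is_crawlable` helper are hypothetical examples, and this parser does simple prefix matching, so it will not evaluate wildcard patterns the way some crawlers do.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules blocking admin, cart, and internal search pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
Disallow: /search
"""


def is_crawlable(robots_txt, user_agent, url):
    """Return True if the given rules allow user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


for path in ("/products/shoes", "/cart/checkout", "/admin/login"):
    url = "https://example.com" + path
    print(path, is_crawlable(ROBOTS_TXT, "GPTBot", url))
```

Running the same check for each user agent you care about (for example `Googlebot` and `GPTBot`) makes it easier to catch a rule that unintentionally hides important pages from one crawler.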

Different search engines and AI crawlers have distinct crawl budgets and behaviors. Google remains the most transparent about crawl budget, providing detailed Crawl Stats reports in Google Search Console showing daily crawl volume, server response times, and error rates. Bing provides similar data through Bing Webmaster Tools, though typically with less granular detail. AI crawlers like GPTBot (OpenAI), ClaudeBot (Anthropic), and PerplexityBot (Perplexity) operate with their own crawl budgets and priorities, often focusing on high-authority, high-quality content. These AI crawlers have grown rapidly: GPTBot’s share of crawler traffic jumped from 5% to 30% in just one year. For organizations using AmICited to monitor AI visibility, understanding that AI crawlers have separate crawl budgets from traditional search engines is critical. A page might be well-indexed by Google but rarely crawled by AI systems if it lacks sufficient authority or topical relevance. Mobile-first indexing means Google primarily crawls and indexes mobile versions of pages, so crawl budget optimization must account for mobile site performance. If you have separate mobile and desktop sites, they share a crawl budget on the same host, so mobile site speed directly impacts desktop indexing. JavaScript-heavy sites require additional crawl resources because search engines must render JavaScript to understand page content, consuming more crawl budget per page. Sites using dynamic rendering or server-side rendering can reduce crawl budget consumption by making content immediately available without requiring rendering. International sites with hreflang tags and multiple language versions consume more crawl budget as search engines must crawl variants for each language and region. Properly implementing hreflang helps search engines understand which version to crawl and index for each market, improving crawl efficiency.
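Because each crawler has its own budget, a useful first step is to count requests per crawler from the User-Agent header in your logs. The sketch below assumes the user-agent strings have already been extracted; the `classify` and `crawler_hits` helpers and the sample strings are hypothetical and simplified. User-agent strings can be spoofed, so treat these counts as an estimate rather than verified crawler traffic.

```python
from collections import Counter

# User-agent tokens used to recognize each crawler (matched case-insensitively).
CRAWLER_TOKENS = ("Googlebot", "bingbot", "GPTBot", "ClaudeBot", "PerplexityBot")


def classify(user_agent):
    """Return the matching crawler token, or None for other traffic."""
    lowered = user_agent.lower()
    for token in CRAWLER_TOKENS:
        if token.lower() in lowered:
            return token
    return None


def crawler_hits(user_agents):
    """Count requests per recognized crawler, ignoring everything else."""
    return Counter(
        token for token in map(classify, user_agents) if token is not None
    )


# Simplified, illustrative user-agent strings.
agents = [
    "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
    "Mozilla/5.0 (compatible; GPTBot/1.1; +https://openai.com/gptbot)",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/120.0",
    "Mozilla/5.0 (compatible; GPTBot/1.1)",
]
print(crawler_hits(agents))
```

Comparing these counts week over week shows whether AI crawlers are visiting more or less often than traditional search crawlers on the same host.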

The future of crawl budget is being reshaped by the rapid growth of AI search and generative search engines. As AI crawler traffic surged 96% between May 2024 and May 2025, with GPTBot’s share jumping from 5% to 30%, websites now face competition for crawl resources from multiple systems simultaneously. Traditional search engines, AI crawlers, and emerging generative engine optimization (GEO) platforms all compete for server bandwidth and crawl capacity. This trend suggests that crawl budget optimization will become more important, not less. Organizations will need to monitor not just Google’s crawl patterns but also crawl patterns from OpenAI’s GPTBot, Anthropic’s ClaudeBot, Perplexity’s crawler, and other AI systems. Platforms like AmICited that track brand mentions across AI platforms will become essential tools for understanding whether your content is being discovered and cited by AI systems. The definition of crawl budget may evolve to encompass not just traditional search engine crawling but also crawling by AI systems and LLM training systems. Some experts predict that websites will need to implement separate optimization strategies for traditional search versus AI search, potentially allocating different content and resources to each system. The rise of robots.txt extensions and llms.txt files (a proposed convention for pointing AI systems to the content a site wants them to use) suggests that crawl budget management will become more granular and intentional. As search engines continue to prioritize E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness) signals, crawl budget allocation will increasingly favor high-authority, high-quality content, potentially widening the gap between well-optimized sites and poorly optimized competitors. The integration of crawl budget concepts into GEO strategies means that forward-thinking organizations will optimize not just for traditional indexing but for visibility across the entire spectrum of search and AI platforms that their audiences use.

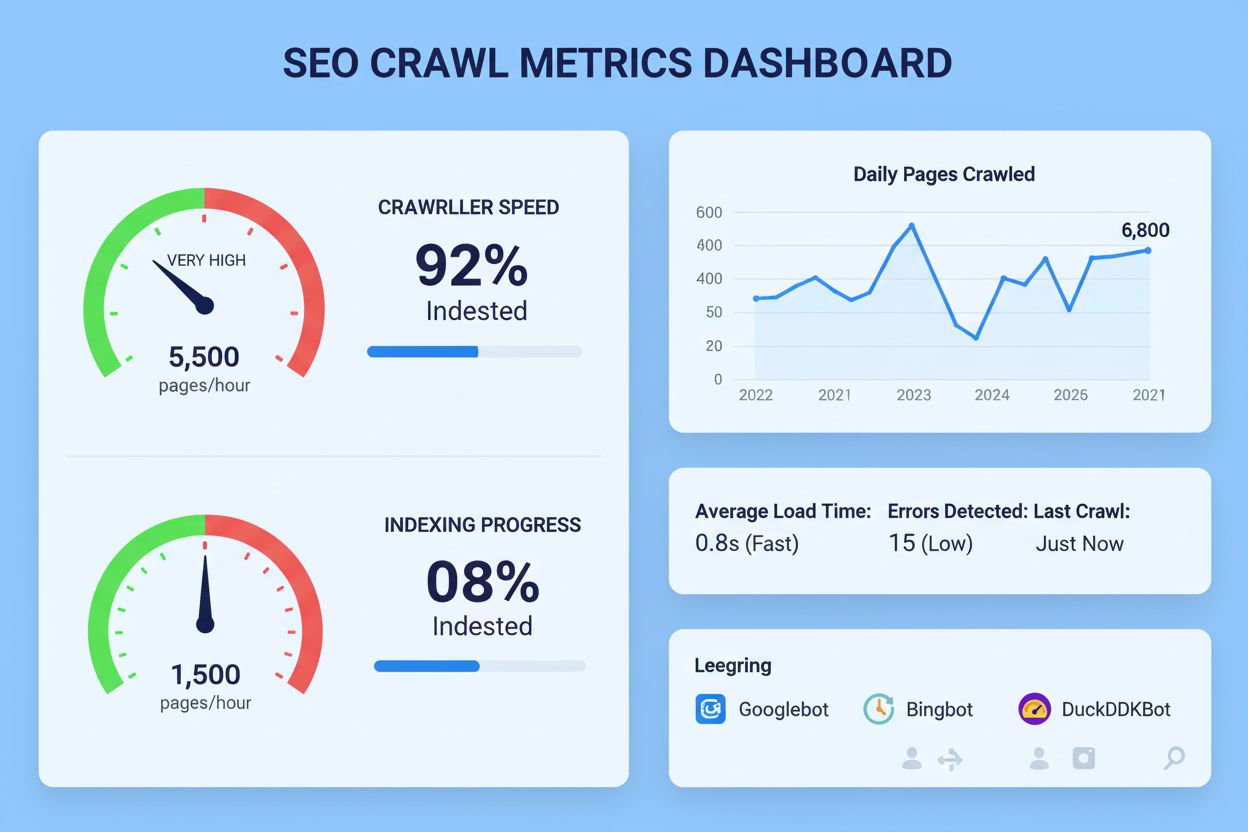

Crawl rate refers to the number of pages a search engine crawls per day, while crawl budget is the total number of pages a search engine will crawl within a specific timeframe. Crawl rate is a measurement metric, whereas crawl budget is the allocation of resources. For example, if Google crawls 100 pages per day on your site, that's the crawl rate, but your monthly crawl budget might be 3,000 pages. Understanding both metrics helps you monitor whether search engines are efficiently using their allocated resources on your site.
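The arithmetic behind that example can be written out as a short sketch. The function names are hypothetical, and the calculation assumes a constant daily crawl rate with each URL fetched only once, which real crawlers do not guarantee because they also recrawl known pages.

```python
import math


def monthly_budget(crawl_rate_per_day, days=30):
    """Pages crawled over a period at a constant daily crawl rate."""
    return crawl_rate_per_day * days


def days_to_crawl(total_urls, crawl_rate_per_day):
    """Days needed to fetch every URL once at a constant daily crawl rate."""
    if crawl_rate_per_day <= 0:
        raise ValueError("crawl_rate_per_day must be positive")
    return math.ceil(total_urls / crawl_rate_per_day)


print(monthly_budget(100))         # 100 pages/day over 30 days
print(days_to_crawl(50_000, 100))  # a 50,000-URL site at the same rate
```

At 100 pages per day, a hypothetical 50,000-URL site would take well over a year to be crawled once, which is why large sites feel crawl budget limits long before small ones do.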

As AI crawler traffic surged 96% between May 2024 and May 2025, with GPTBot's share jumping from 5% to 30%, crawl budget has become increasingly critical for AI search visibility. Platforms like AmICited monitor how often your domain appears in AI-generated responses, which depends partly on how frequently AI crawlers can access and index your content. A well-optimized crawl budget ensures search engines and AI systems can discover your content quickly, improving your chances of being cited in AI responses and maintaining visibility across both traditional and generative search platforms.

You cannot directly increase crawl budget through a setting or request to Google. However, you can indirectly increase it by improving your site's authority through earning backlinks, increasing page speed, and reducing server errors. Google's former head of webspam, Matt Cutts, confirmed that crawl budget is roughly proportional to your site's PageRank (authority). Additionally, optimizing your site structure, fixing duplicate content, and removing crawl inefficiencies signals to search engines that your site deserves more crawling resources.

Large websites with 10,000+ pages, ecommerce sites with hundreds of thousands of product pages, news publishers adding dozens of articles daily, and rapidly growing sites should prioritize crawl budget optimization. Small websites under 10,000 pages typically don't need to worry about crawl budget constraints. However, if you notice important pages taking weeks to get indexed or see low index coverage relative to total pages, crawl budget optimization becomes critical regardless of site size.

Crawl budget is determined by the intersection of crawl capacity limit (how much crawling your server can handle) and crawl demand (how often search engines want to crawl your content). If your server responds quickly and has no errors, the capacity limit increases, allowing more simultaneous connections. Crawl demand increases for popular pages with many backlinks and frequently updated content. Search engines balance these two factors to determine your effective crawl budget, ensuring they don't overload your servers while still discovering important content.

Page speed is one of the most impactful factors in crawl budget optimization. Faster-loading pages allow Googlebot to visit and process more URLs within the same timeframe. Research shows that when sites improve page load speed by 50%, crawl rates can increase dramatically—some sites have seen crawl volume increase from 150,000 to 600,000 URLs per day after speed optimization. Slow pages consume more of your crawl budget, leaving less time for search engines to discover other important content on your site.

Duplicate content forces search engines to process multiple versions of the same information without adding value to their index. This wastes crawl budget that could be spent on unique, valuable pages. Common sources of duplicate content include internal search result pages, image attachment pages, multiple domain variants (HTTP/HTTPS, www/non-www), and faceted navigation pages. By consolidating duplicate content through redirects, canonical tags, and robots.txt rules, you free up crawl budget for search engines to discover and index more unique, high-quality pages on your site.
