What is Crawl Budget for AI? Understanding AI Bot Resource Allocation

Learn what crawl budget for AI means, how it differs from traditional search crawl budgets, and why it matters for your brand's visibility in AI-generated answe...

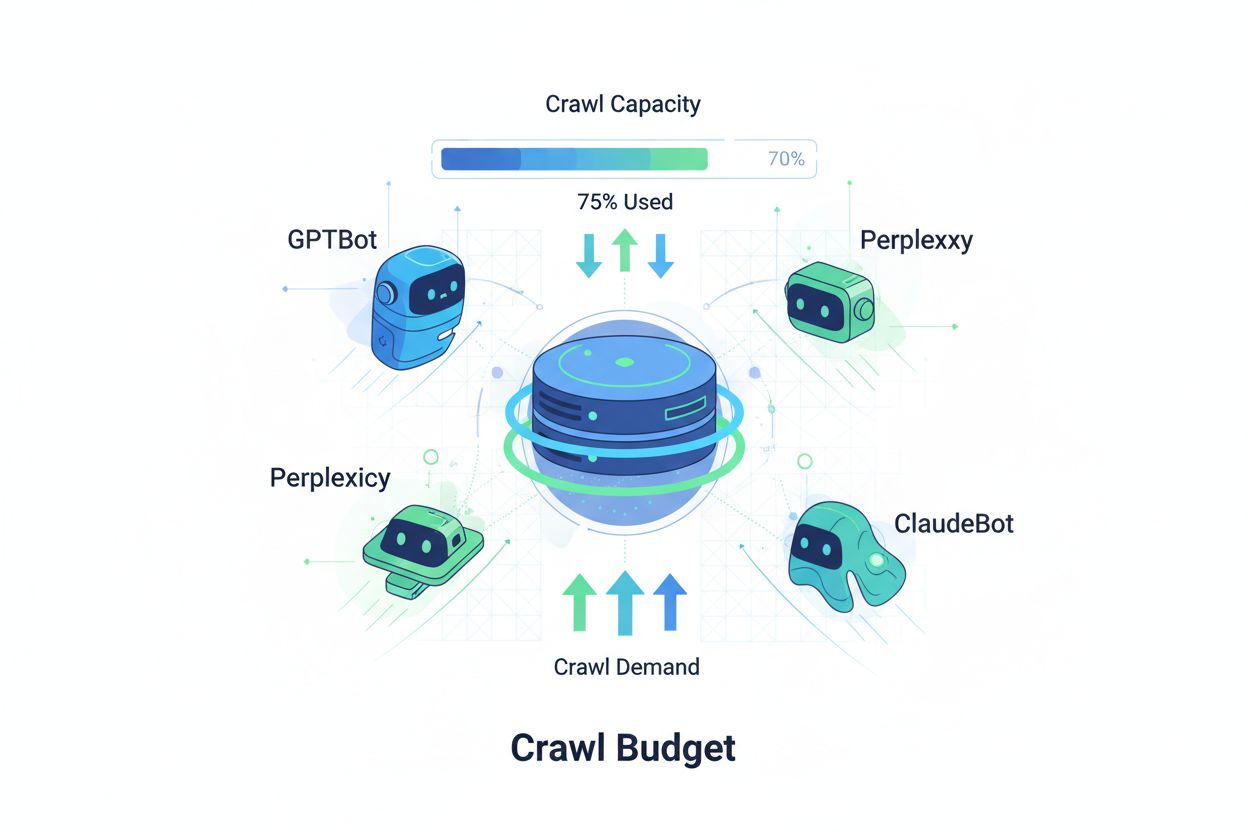

Techniques to ensure AI crawlers efficiently access and index the most important content on a website within their crawl limits. Crawl budget optimization manages the balance between crawl capacity (server resources) and crawl demand (bot requests) to maximize visibility in AI-generated answers while controlling operational costs and server load.

Techniques to ensure AI crawlers efficiently access and index the most important content on a website within their crawl limits. Crawl budget optimization manages the balance between crawl capacity (server resources) and crawl demand (bot requests) to maximize visibility in AI-generated answers while controlling operational costs and server load.

Crawl budget refers to the amount of resources—measured in requests and bandwidth—that search engines and AI bots allocate to crawling your website. Traditionally, this concept applied primarily to Google’s crawling behavior, but the emergence of AI-powered bots has fundamentally transformed how organizations must think about crawl budget management. The crawl budget equation consists of two critical variables: crawl capacity (the maximum number of pages a bot can crawl) and crawl demand (the actual number of pages the bot wants to crawl). In the AI era, this dynamic has become exponentially more complex, as bots like GPTBot (OpenAI), Perplexity Bot, and ClaudeBot (Anthropic) now compete for server resources alongside traditional search engine crawlers. These AI bots operate with different priorities and patterns than Googlebot, often consuming significantly more bandwidth while pursuing different indexing goals, making crawl budget optimization no longer optional but essential for maintaining site performance and controlling operational costs.

AI crawlers fundamentally differ from traditional search engine bots in their crawling patterns, frequency, and resource consumption. While Googlebot respects crawl budget limits and implements sophisticated throttling mechanisms, AI bots often exhibit more aggressive crawling behaviors, sometimes requesting the same content multiple times and showing less deference to server load signals. Research indicates that OpenAI’s GPTBot can consume 12-15 times more bandwidth than Google’s crawler on certain websites, particularly those with large content libraries or frequently updated pages. This aggressive approach stems from AI training requirements—these bots need to continuously ingest fresh content to improve model performance, creating a fundamentally different crawling philosophy than search engines focused on indexing for retrieval. The server impact is substantial: organizations report significant increases in bandwidth costs, CPU utilization, and server load directly attributable to AI bot traffic. Additionally, the cumulative effect of multiple AI bots crawling simultaneously can degrade user experience, slow page load times, and increase hosting expenses, making the distinction between traditional and AI crawlers a critical business consideration rather than a technical curiosity.

| Characteristic | Traditional Crawlers (Googlebot) | AI Crawlers (GPTBot, ClaudeBot) |

|---|---|---|

| Crawl Frequency | Adaptive, respects crawl budget | Aggressive, continuous |

| Bandwidth Consumption | Moderate, optimized | High, resource-intensive |

| Respect for Robots.txt | Strict compliance | Variable compliance |

| Caching Behavior | Sophisticated caching | Frequent re-requests |

| User-Agent Identification | Clear, consistent | Sometimes obfuscated |

| Business Goal | Search indexing | Model training/data acquisition |

| Cost Impact | Minimal | Significant (12-15x higher) |

Understanding crawl budget requires mastering its two foundational components: crawl capacity and crawl demand. Crawl capacity represents the maximum number of URLs your server can handle being crawled within a given timeframe, determined by several interconnected factors. This capacity is influenced by:

Crawl demand, conversely, represents how many pages bots actually want to crawl, driven by content characteristics and bot priorities. Factors affecting crawl demand include:

The optimization challenge emerges when crawl demand exceeds crawl capacity—bots must choose which pages to crawl, potentially missing important content updates. Conversely, when crawl capacity far exceeds demand, you’re wasting server resources. The goal is achieving crawl efficiency: maximizing the crawl of important pages while minimizing wasted crawls on low-value content. This balance becomes increasingly complex in the AI era, where multiple bot types with different priorities compete for the same server resources, requiring sophisticated strategies to allocate crawl budget effectively across all stakeholders.

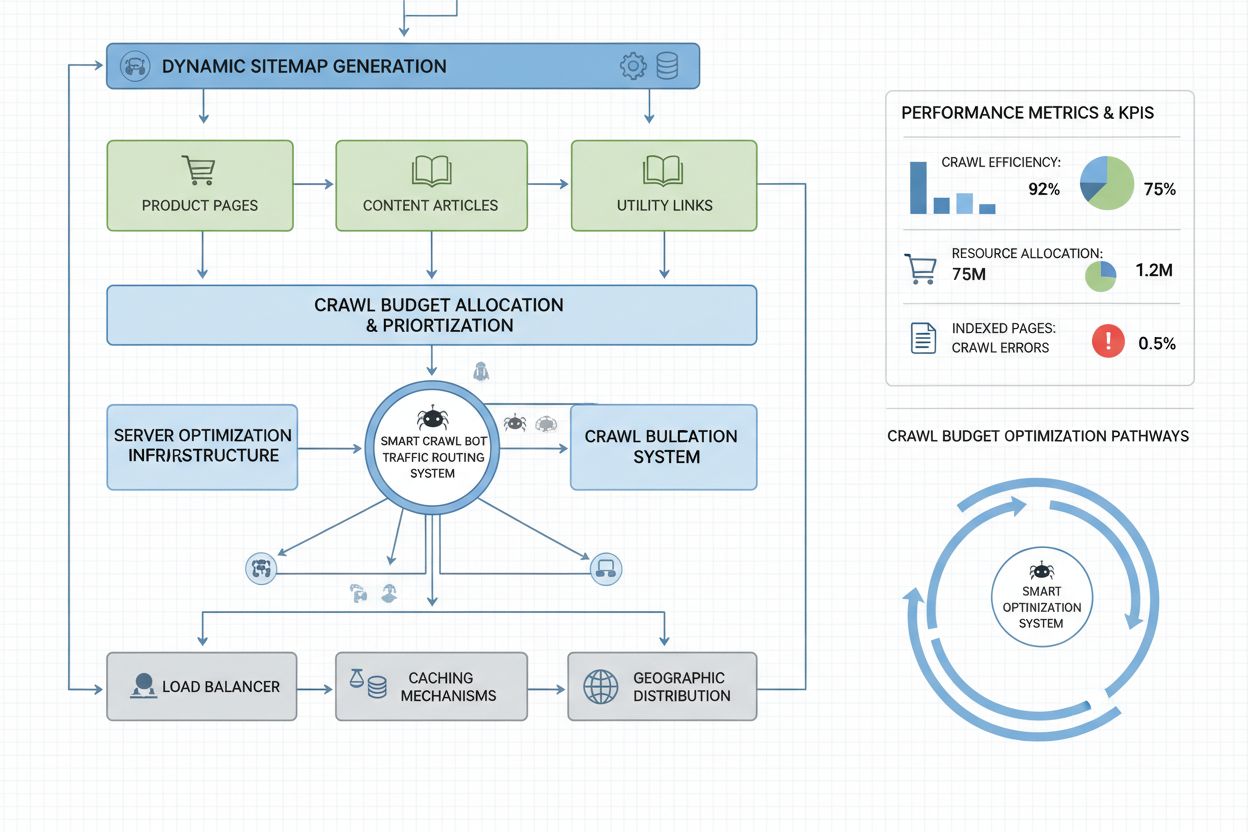

Measuring crawl budget performance begins with Google Search Console, which provides crawl statistics under the “Settings” section, displaying daily crawl requests, bytes downloaded, and response times. To calculate your crawl efficiency ratio, divide the number of successful crawls (HTTP 200 responses) by total crawl requests, with healthy sites typically achieving 85-95% efficiency. A formula for basic crawl efficiency is: (Successful Crawls ÷ Total Crawl Requests) × 100 = Crawl Efficiency %. Beyond Google’s data, practical monitoring requires:

For AI crawler-specific monitoring, tools like AmICited.com provide specialized tracking of GPTBot, ClaudeBot, and Perplexity Bot activity, offering insights into which pages these bots prioritize and how frequently they return. Additionally, implementing custom alerts for unusual crawl spikes—particularly from AI bots—allows rapid response to unexpected resource consumption. The key metric to track is crawl cost per page: dividing total server resources consumed by crawls divided by the number of unique pages crawled reveals whether you’re efficiently using your crawl budget or wasting resources on low-value pages.

Optimizing crawl budget for AI bots requires a multi-layered approach combining technical implementation with strategic decision-making. The primary optimization tactics include:

The strategic decision of which tactic to employ depends on your business model and content strategy. E-commerce sites might block AI crawlers from product pages to prevent training data acquisition by competitors, while content publishers might allow crawling to gain visibility in AI-generated answers. For sites experiencing genuine server strain from AI bot traffic, implementing user-agent specific blocking in robots.txt is the most direct solution: User-agent: GPTBot followed by Disallow: / prevents OpenAI’s crawler from accessing your site entirely. However, this approach sacrifices potential visibility in ChatGPT’s responses and other AI applications. A more nuanced strategy involves selective blocking: allowing AI crawlers access to public-facing content while blocking them from sensitive areas, archives, or duplicate content that wastes crawl budget without providing value to either the bot or your users.

Enterprise-scale websites managing millions of pages require sophisticated crawl budget optimization strategies beyond basic robots.txt configuration. Dynamic sitemaps represent a critical advancement, where sitemaps are generated in real-time based on content freshness, importance scores, and crawl history. Rather than static XML sitemaps listing all pages, dynamic sitemaps prioritize recently updated pages, high-traffic pages, and pages with conversion potential, ensuring bots focus crawl budget on content that matters most. URL segmentation divides your site into logical crawl zones, each with tailored optimization strategies—news sections might use aggressive sitemap updates to ensure daily content is crawled immediately, while evergreen content uses less frequent updates.

Server-side optimization approaches include implementing crawl-aware caching strategies that serve cached responses to bots while delivering fresh content to users, reducing server load from repeated bot requests. Content delivery networks (CDNs) with bot-specific routing can isolate bot traffic from user traffic, preventing crawlers from consuming bandwidth needed for actual visitors. Rate limiting by user-agent allows servers to throttle AI bot requests while maintaining normal speeds for Googlebot and user traffic. For truly large-scale operations, distributed crawl budget management across multiple server regions ensures no single point of failure and allows geographic load balancing of bot traffic. Machine learning-based crawl prediction analyzes historical crawl patterns to forecast which pages bots will request next, allowing proactive optimization of those pages’ performance and caching. These enterprise-level strategies transform crawl budget from a constraint into a managed resource, enabling large organizations to serve billions of pages while maintaining optimal performance for both bots and human users.

The decision to block or allow AI crawlers represents a fundamental business strategy choice with significant implications for visibility, competitive positioning, and operational costs. Allowing AI crawlers provides substantial benefits: your content becomes eligible for inclusion in AI-generated answers, potentially driving traffic from ChatGPT, Claude, Perplexity, and other AI applications; your brand gains visibility in a new distribution channel; and you benefit from the SEO signals that come from being cited by AI systems. However, these benefits come with costs: increased server load and bandwidth consumption, potential training of competitor AI models on your proprietary content, and loss of control over how your information is presented and attributed in AI responses.

Blocking AI crawlers eliminates these costs but sacrifices the visibility benefits and potentially cedes market share to competitors who allow crawling. The optimal strategy depends on your business model: content publishers and news organizations often benefit from allowing crawling to gain distribution through AI summaries; SaaS companies and e-commerce sites might block crawlers to prevent competitors from training models on their product information; educational institutions and research organizations typically allow crawling to maximize knowledge dissemination. A hybrid approach offers middle ground: allow crawling of public-facing content while blocking access to sensitive areas, user-generated content, or proprietary information. This strategy maximizes visibility benefits while protecting valuable assets. Additionally, monitoring AmICited.com and similar tools reveals whether your content is actually being cited by AI systems—if your site isn’t appearing in AI responses despite allowing crawling, blocking becomes a more attractive option since you’re bearing the crawl cost without receiving visibility benefits.

Effective crawl budget management requires specialized tools that provide visibility into bot behavior and enable data-driven optimization decisions. Conductor and Sitebulb offer enterprise-grade crawl analysis, simulating how search engines crawl your site and identifying crawl inefficiencies, wasted crawls on error pages, and opportunities to improve crawl budget allocation. Cloudflare provides bot management at the network level, allowing granular control over which bots can access your site and implementing rate limiting specific to AI crawlers. For AI crawler-specific monitoring, AmICited.com stands out as the most comprehensive solution, tracking GPTBot, ClaudeBot, Perplexity Bot, and other AI crawlers with detailed analytics showing which pages these bots access, how frequently they return, and whether your content appears in AI-generated responses.

Server log analysis remains fundamental to crawl budget optimization—tools like Splunk, Datadog, or open-source ELK Stack allow you to parse raw access logs and segment traffic by user-agent, identifying which bots consume the most resources and which pages attract the most crawl attention. Custom dashboards tracking crawl trends over time reveal whether optimization efforts are working and whether new bot types are emerging. Google Search Console continues providing essential data on Google’s crawl behavior, while Bing Webmaster Tools offers similar insights for Microsoft’s crawler. The most sophisticated organizations implement multi-tool monitoring strategies combining Google Search Console for traditional search crawl data, AmICited.com for AI crawler tracking, server log analysis for comprehensive bot visibility, and specialized tools like Conductor for crawl simulation and efficiency analysis. This layered approach provides complete visibility into how all bot types interact with your site, enabling optimization decisions based on comprehensive data rather than guesswork. Regular monitoring—ideally weekly reviews of crawl metrics—allows rapid identification of problems like unexpected crawl spikes, increased error rates, or new aggressive bots, enabling quick response before crawl budget issues impact site performance or operational costs.

AI bots like GPTBot and ClaudeBot operate with different priorities than Googlebot. While Googlebot respects crawl budget limits and implements sophisticated throttling, AI bots often exhibit more aggressive crawling patterns, consuming 12-15 times more bandwidth. AI bots prioritize continuous content ingestion for model training rather than search indexing, making their crawl behavior fundamentally different and requiring distinct optimization strategies.

Research indicates that OpenAI's GPTBot can consume 12-15 times more bandwidth than Google's crawler on certain websites, particularly those with large content libraries. The exact consumption depends on your site size, content update frequency, and how many AI bots are crawling simultaneously. Multiple AI bots crawling at once can significantly increase server load and hosting costs.

Yes, you can block specific AI crawlers using robots.txt without impacting traditional SEO. However, blocking AI crawlers means sacrificing visibility in AI-generated answers from ChatGPT, Claude, Perplexity, and other AI applications. The decision depends on your business model—content publishers typically benefit from allowing crawling, while e-commerce sites might block to prevent competitor training.

Poor crawl budget management can result in important pages not being crawled or indexed, slower indexing of new content, increased server load and bandwidth costs, degraded user experience from bot traffic consuming resources, and missed visibility opportunities in both traditional search and AI-generated answers. Large sites with millions of pages are most vulnerable to these impacts.

For optimal results, monitor crawl budget metrics weekly, with daily checks during major content launches or when experiencing unexpected traffic spikes. Use Google Search Console for traditional crawl data, AmICited.com for AI crawler tracking, and server logs for comprehensive bot visibility. Regular monitoring allows rapid identification of problems before they impact site performance.

Robots.txt has variable effectiveness with AI bots. While Googlebot strictly respects robots.txt directives, AI bots show inconsistent compliance—some respect the rules while others ignore them. For more reliable control, implement user-agent specific blocking, rate limiting at the server level, or use CDN-based bot management tools like Cloudflare for more granular control.

Crawl budget directly impacts AI visibility because AI bots cannot cite or reference content they haven't crawled. If your important pages aren't being crawled due to budget constraints, they won't appear in AI-generated answers. Optimizing crawl budget ensures your best content gets discovered by AI bots, increasing chances of being cited in ChatGPT, Claude, and Perplexity responses.

Prioritize pages using dynamic sitemaps that highlight recently updated content, high-traffic pages, and pages with conversion potential. Use robots.txt to block low-value pages like archives and duplicates. Implement clean URL structures and strategic internal linking to guide bots toward important content. Monitor which pages AI bots actually crawl using tools like AmICited.com to refine your strategy.

Track how AI bots crawl your site and optimize your visibility in AI-generated answers with AmICited.com's comprehensive AI crawler monitoring platform.

Learn what crawl budget for AI means, how it differs from traditional search crawl budgets, and why it matters for your brand's visibility in AI-generated answe...

Community discussion on AI crawl budget management. How to handle GPTBot, ClaudeBot, and PerplexityBot without sacrificing visibility.

Crawl budget is the number of pages search engines crawl on your website within a timeframe. Learn how to optimize crawl budget for better indexing and SEO perf...

Cookie Consent

We use cookies to enhance your browsing experience and analyze our traffic. See our privacy policy.