Crawl Rate

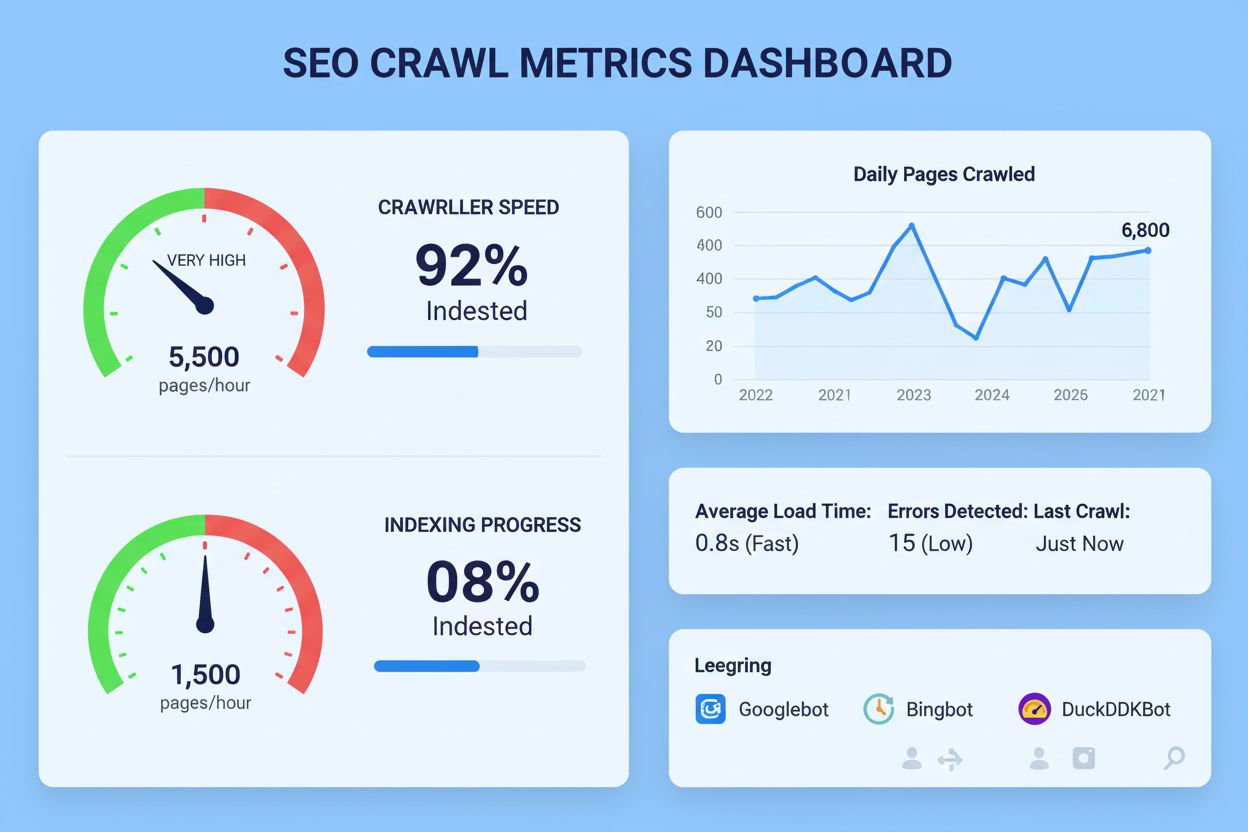

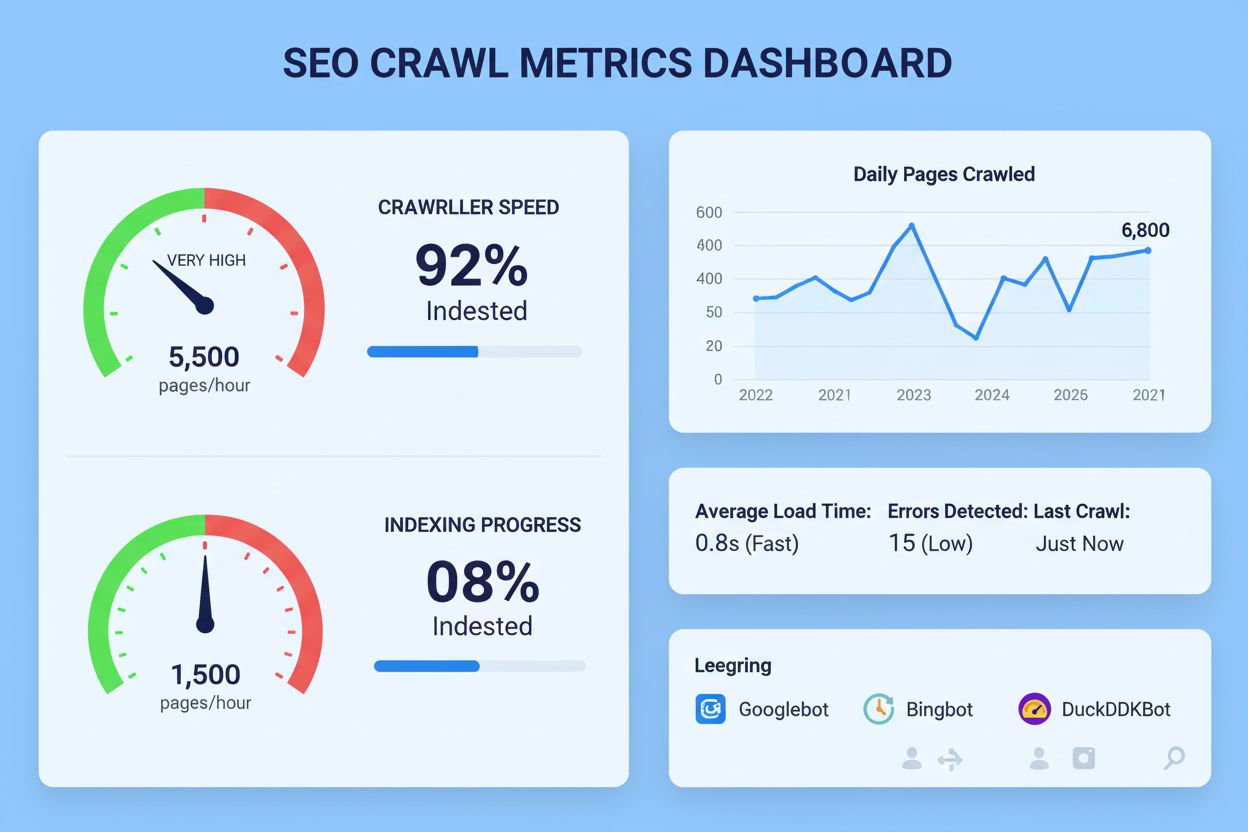

Crawl rate is the speed at which search engines crawl your website. Learn how it affects indexing, SEO performance, and how to optimize it for better search vis...

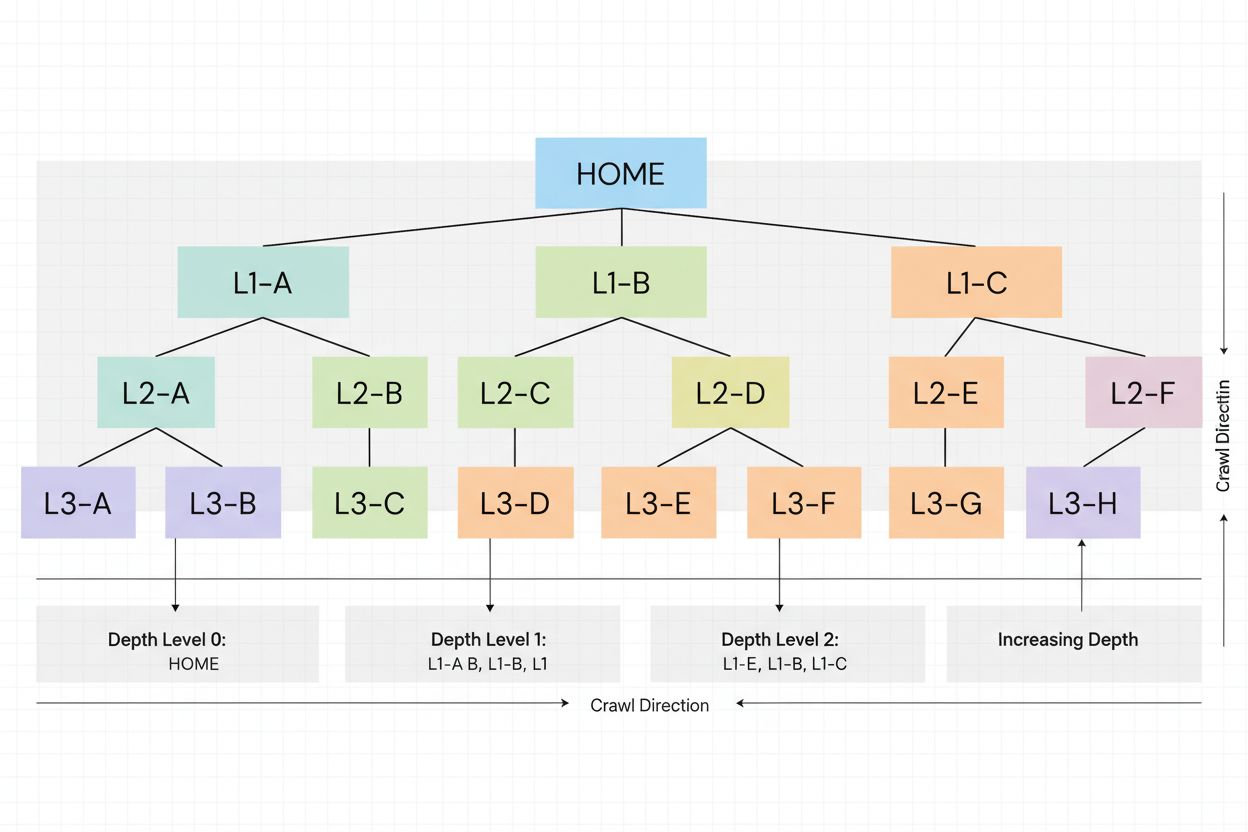

Crawl depth refers to how far down a website’s hierarchical structure search engine crawlers can reach during a single crawl session. It measures the number of clicks or steps required from the homepage to reach a particular page, directly impacting which pages get indexed and how frequently they are crawled within a site’s allocated crawl budget.

Crawl depth is a fundamental technical SEO concept that refers to how far down a website’s hierarchical structure search engine crawlers can navigate during a single crawl session. More specifically, it measures the number of clicks or steps required from the homepage to reach a particular page through your site’s internal linking structure. When important pages sit only a few clicks from the homepage, search engine bots can reach and index most of the site; when pages are buried many levels deep, crawlers may exhaust their allocated resources before reaching them. This concept is critical because it directly determines which pages get indexed, how frequently they are crawled, and ultimately, their visibility in search engine results pages (SERPs).

The importance of crawl depth has grown in recent years along with the volume of web content. With Google’s index containing over 400 billion documents and the amount of AI-generated content rising, search engines must ration their crawl resources more carefully than before. As a result, websites with poorly optimized crawl depth may find important pages left unindexed or crawled infrequently, which can significantly reduce their organic search visibility. Understanding and optimizing crawl depth is therefore essential for any website seeking to maximize its search engine presence.

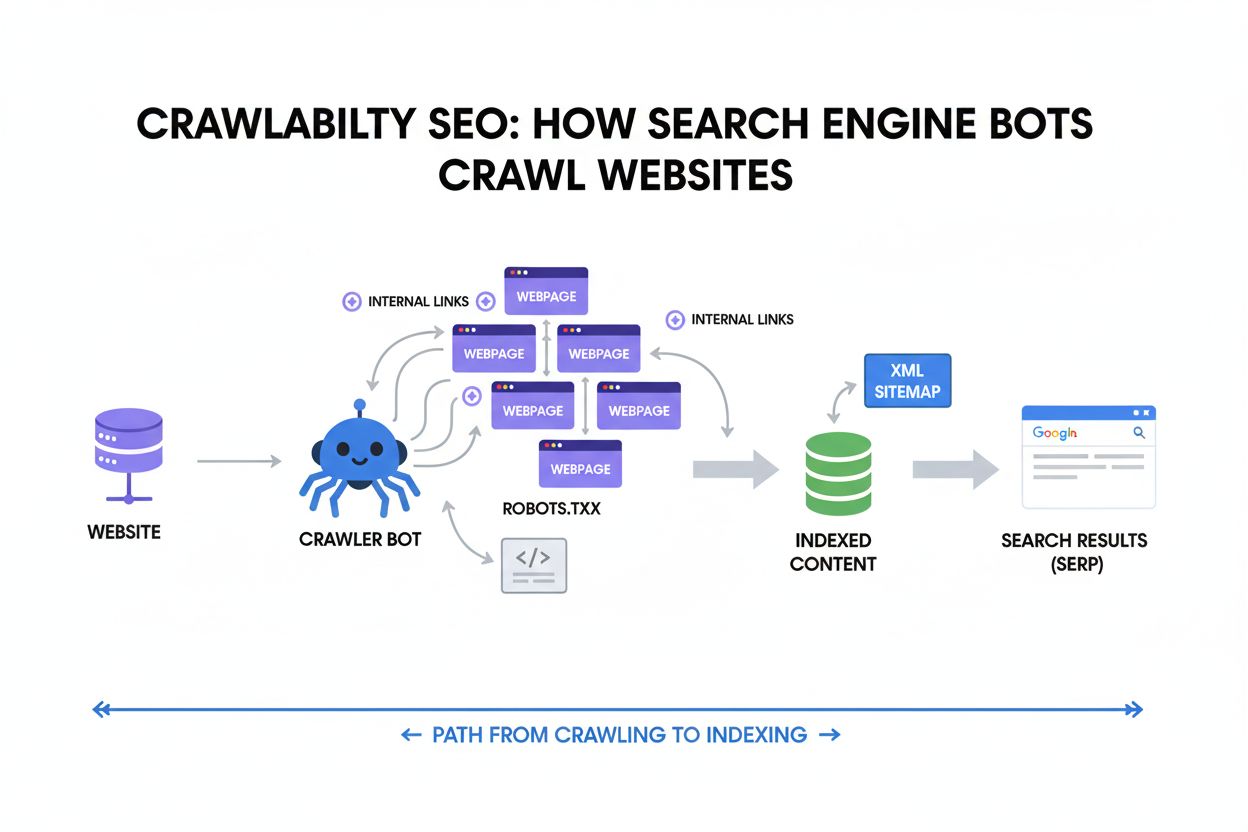

The concept of crawl depth emerged from how search engine crawlers (also called web spiders or bots) operate. When Google’s Googlebot or other search engine bots visit a website, they follow a systematic process: they start at the homepage and follow internal links to discover additional pages. The crawler allocates a finite amount of time and resources to each website, known as the crawl budget. This budget is determined by two factors: crawl capacity limit (how much the crawler can handle without overwhelming the server) and crawl demand (how important and frequently updated the site is). The deeper pages are buried within your site’s structure, the less likely crawlers are to reach them before the crawl budget is exhausted.

Historically, website structures were relatively simple, with most important content within 2-3 clicks of the homepage. However, as e-commerce sites, news portals, and content-heavy websites grew rapidly, many organizations created deeply nested structures with pages 5, 6, or even 10+ levels deep. Research from seoClarity and other SEO platforms has shown that pages at depth 3 or deeper generally perform worse in organic search results than pages closer to the homepage. This performance gap exists because crawlers prioritize pages closer to the root, and these pages also accumulate more link equity (ranking power) through internal linking. The relationship between crawl depth and indexation rates is particularly pronounced on large websites with thousands or millions of pages, where crawl budget becomes a critical limiting factor.

The rise of AI search engines like Perplexity, ChatGPT, and Google AI Overviews has added another dimension to crawl depth optimization. These AI systems use their own specialized crawlers (such as PerplexityBot and GPTBot) that may have different crawl patterns and priorities than traditional search engines. However, the fundamental principle remains the same: pages that are easily accessible and well-integrated into a site’s structure are more likely to be discovered, crawled, and cited as sources in AI-generated responses. This makes crawl depth optimization relevant not just for traditional SEO, but for AI search visibility and generative engine optimization (GEO) as well.

| Concept | Definition | Perspective | Measurement | Impact on SEO |
|---|---|---|---|---|
| Crawl Depth | How far down a site’s hierarchy crawlers navigate based on internal links and URL structure | Search engine crawler’s view | Number of clicks/steps from homepage | Affects indexation frequency and coverage |
| Click Depth | Number of user clicks needed to reach a page from homepage via shortest path | User’s perspective | Literal clicks required | Affects user experience and navigation |
| Page Depth | Position of a page within the site’s hierarchical structure | Structural view | URL nesting level | Influences link equity distribution |
| Crawl Budget | Total resources (time/bandwidth) allocated to crawl a website | Resource allocation | Pages crawled per day | Determines how many pages get indexed |
| Crawl Efficiency | How effectively crawlers navigate and index a site’s content | Optimization view | Pages indexed vs. crawl budget used | Maximizes indexation within budget limits |

Understanding how crawl depth functions requires examining the mechanics of how search engine crawlers navigate websites. When Googlebot or another crawler visits your site, it begins at the homepage (depth 0) and follows internal links to discover additional pages. Each page linked from the homepage is at depth 1, pages linked from those pages are at depth 2, and so on. The crawler doesn’t necessarily follow a linear path; instead, it discovers multiple pages at each level before moving deeper. However, the crawler’s journey is constrained by the crawl budget, which limits how many pages it can visit in a given timeframe.
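The level-by-level discovery described above corresponds to a breadth-first search over the internal link graph. The Python sketch below computes each page's depth that way. The link graph is a hypothetical, hand-written stand-in for what a site crawler would collect, and real search engine crawlers do not traverse sites in strict breadth-first order.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str = "/") -> dict[str, int]:
    """Return the shortest link distance from `home` to every reachable page.

    `links` maps each URL to the URLs it links to. Breadth-first search
    visits pages level by level, so the first time a page is seen is
    via its shortest path from the homepage.
    """
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal link graph for a small shop.
site = {
    "/": ["/shoes/", "/about/"],
    "/shoes/": ["/shoes/running/"],
    "/shoes/running/": ["/shoes/running/model-x/"],
    "/about/": [],
    "/orphan-page/": [],  # nothing links here, so it is never reached
}

for page, depth in click_depths(site).items():
    print(depth, page)
```

Note that `/orphan-page/` gets no depth at all: a page with no internal links pointing to it cannot be reached by following links, which is one reason XML sitemaps matter for discovery.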

The technical relationship between crawl depth and indexation is governed by several factors. First, crawl prioritization plays a crucial role: search engines don’t crawl all pages equally. They prioritize pages based on perceived importance, freshness, and relevance, so pages with more internal links, higher authority, and recent updates are crawled more frequently. Second, the URL structure itself influences crawl depth. A page at /category/subcategory/product/ sits deeper in the site hierarchy than a page at /product/, even if both are linked from the homepage. Third, redirect chains and broken links act as obstacles that waste crawl budget. A redirect chain forces the crawler to follow multiple redirects before reaching the final page, and each extra hop is a request that could have been spent crawling other content.
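To see what a redirect chain costs, the sketch below follows a hypothetical map of old-to-new URLs and counts the hops; each hop is one extra request a crawler makes before reaching real content. The `max_hops` cap of 10 is an arbitrary safety limit for this example, not a documented crawler setting. Flattening the map so that every old URL points straight at its final destination removes the wasted requests.

```python
def resolve_redirects(url: str, redirects: dict[str, str], max_hops: int = 10) -> tuple[str, int]:
    """Follow `redirects` from `url` to its final target.

    Returns (final_url, hops). Raises ValueError on a redirect loop or a
    chain longer than `max_hops`.
    """
    seen = {url}
    hops = 0
    while url in redirects:
        url = redirects[url]
        hops += 1
        if url in seen:
            raise ValueError(f"redirect loop at {url}")
        if hops > max_hops:
            raise ValueError(f"chain longer than {max_hops} hops")
        seen.add(url)
    return url, hops


# Hypothetical redirect map left behind by two site migrations.
redirects = {
    "/old-shoes/": "/shoes-2023/",
    "/shoes-2023/": "/shoes/",
}

print(resolve_redirects("/old-shoes/", redirects))  # ('/shoes/', 2)

# Flattened map: every old URL now redirects in a single hop.
flattened = {src: resolve_redirects(src, redirects)[0] for src in redirects}
print(flattened)
```

Updating internal links to point at the final URL directly is better still, since the crawler then needs no redirect at all.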

The technical implementation of crawl depth optimization involves several key strategies. Internal linking architecture is paramount: by linking important pages from the homepage and other high-authority pages, you reduce their effective crawl depth and increase the likelihood that they’ll be crawled frequently. XML sitemaps give crawlers a direct list of your site’s URLs, allowing them to discover pages without relying solely on following links. Site speed is another critical factor; when pages respond quickly, crawlers can fetch more of them within their allocated budget. Finally, robots.txt rules let you keep crawlers away from low-value URLs such as admin pages, so that budget goes to the pages that matter. Noindex tags keep pages such as duplicate content out of the index, but crawlers must still fetch a page to see the tag, so noindex on its own does not save crawl budget.
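A minimal sketch of generating an XML sitemap with Python's standard library, assuming you already have a list of canonical URLs and their last-modified dates (the example.com URLs are placeholders). It uses the `urlset`, `url`, `loc`, and `lastmod` elements of the sitemaps.org protocol; ElementTree escapes characters such as `&` in URLs automatically.

```python
import xml.etree.ElementTree as ET
from typing import Optional

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries: list[tuple[str, Optional[str]]]) -> str:
    """Return sitemap XML for (absolute_url, lastmod) pairs; lastmod may be None."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:  # W3C date format, e.g. 2024-05-01
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


sitemap_xml = build_sitemap([
    ("https://www.example.com/", "2024-05-01"),
    ("https://www.example.com/shoes/running/model-x/", None),
])
print(sitemap_xml)
```

Listing a deep product page in the sitemap does not change its click depth, but it gives crawlers a way to discover the URL without following every link down to it.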

The practical implications of crawl depth extend far beyond technical SEO metrics—they directly impact business outcomes. For e-commerce websites, poor crawl depth optimization means that product pages buried deep in category hierarchies may not be indexed or may be indexed infrequently. This results in reduced organic visibility, fewer product impressions in search results, and ultimately, lost sales. A study by seoClarity found that pages with higher crawl depth had significantly lower indexation rates, with pages at depth 4+ being crawled up to 50% less frequently than pages at depth 1-2. For large retailers with thousands of SKUs, this can translate to millions of dollars in lost organic revenue.

For content-heavy websites like news sites, blogs, and knowledge bases, crawl depth optimization directly affects content discoverability. Articles published deep within category structures may never reach Google’s index, meaning they generate zero organic traffic regardless of their quality or relevance. This is particularly problematic for news sites where freshness is critical—if new articles aren’t crawled and indexed quickly, they miss the window of opportunity to rank for trending topics. Publishers who optimize crawl depth by flattening their structure and improving internal linking see dramatic increases in indexed pages and organic traffic.

The relationship between crawl depth and link equity distribution has significant business implications. Link equity (also called PageRank or ranking power) flows through internal links from the homepage outward. Pages closer to the homepage accumulate more link equity, making them more likely to rank for competitive keywords. By optimizing crawl depth and ensuring important pages are within 2-3 clicks of the homepage, businesses can concentrate link equity on their most valuable pages—typically product pages, service pages, or cornerstone content. This strategic distribution of link equity can dramatically improve rankings for high-value keywords.
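The idea of link equity flowing outward from the homepage can be illustrated with the classic PageRank power iteration run over internal links only. This is a simplified textbook model, not how Google computes rankings today. The link graph is hypothetical (every page links back to the homepage, as a navigation bar would), and the damping factor of 0.85 is the conventional value from the original PageRank formulation.

```python
def internal_pagerank(links: dict[str, list[str]], damping: float = 0.85, iterations: int = 50) -> dict[str, float]:
    """Classic PageRank by power iteration over a site's internal link graph."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        # Every page keeps a small baseline share; the rest flows along links.
        new_rank = {page: (1 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its rank evenly.
                for target in pages:
                    new_rank[target] += damping * rank[page] / n
        rank = new_rank
    return rank


site = {
    "/": ["/shoes/", "/about/"],
    "/shoes/": ["/", "/shoes/running/"],
    "/shoes/running/": ["/", "/shoes/running/model-x/"],
    "/shoes/running/model-x/": ["/"],
    "/about/": ["/"],
}

for page, score in sorted(internal_pagerank(site).items(), key=lambda kv: -kv[1]):
    print(f"{score:.3f}  {page}")
```

In this toy graph the score drops with each click away from the homepage: about 0.40 for the homepage, 0.20 for each depth-1 page, 0.12 at depth 2, and 0.08 at depth 3.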

Additionally, crawl depth optimization impacts crawl budget efficiency, which becomes increasingly important as websites grow. Large websites with millions of pages face severe crawl budget constraints. By optimizing crawl depth, removing duplicate content, fixing broken links, and eliminating redirect chains, websites can ensure that crawlers spend their budget on valuable, unique content rather than wasting resources on low-value pages. This is particularly critical for enterprise websites and large e-commerce platforms where crawl budget management can mean the difference between having 80% of pages indexed versus 40%.

The emergence of AI search engines and generative AI systems has introduced new dimensions to crawl depth optimization. OpenAI, the company behind ChatGPT, uses the GPTBot crawler to discover and collect web content. Perplexity, a leading AI search engine, employs PerplexityBot to crawl the web for sources. Google AI Overviews (formerly SGE) uses Google’s own crawlers to gather information for AI-generated summaries. Anthropic, the maker of the Claude AI assistant, also crawls web content for training and retrieval. Each of these systems has different crawl patterns, priorities, and resource constraints compared to traditional search engines.

The key insight is that crawl depth principles apply to AI search engines as well. Pages that are easily accessible, well-linked, and structurally prominent are more likely to be discovered by AI crawlers and cited as sources in AI-generated responses. Research from AmICited and other AI monitoring platforms shows that websites with optimized crawl depth see higher citation rates in AI search results. This is because AI systems prioritize sources that are authoritative, accessible, and frequently updated—all characteristics that correlate with shallow crawl depth and good internal linking structure.

However, there are some differences in how AI crawlers behave compared to Googlebot. AI crawlers may be more aggressive in their crawling patterns, potentially consuming more bandwidth. They may also have different preferences regarding content types and freshness. Some AI systems prioritize recently updated content more heavily than traditional search engines, making crawl depth optimization even more critical for staying visible in AI search results. Additionally, AI crawlers may not respect certain directives like robots.txt or noindex tags in the same way traditional search engines do, though this is evolving as AI companies work to align with SEO best practices.
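Whether a given crawler is permitted to fetch a URL under your robots.txt can be checked with Python's built-in `urllib.robotparser`. The rules below are a hypothetical example that blocks GPTBot entirely and keeps every other bot out of admin and internal-search URLs. This only tells you what the file declares, not whether a particular crawler honors it, and `urllib.robotparser` implements the basic robots.txt rules, so its results may differ from a crawler's handling of extensions such as path wildcards.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /admin/
Disallow: /search
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for agent in ("Googlebot", "GPTBot", "PerplexityBot"):
    for path in ("/shoes/running/model-x/", "/admin/login"):
        allowed = parser.can_fetch(agent, "https://www.example.com" + path)
        print(f"{agent:<14} {path:<26} {'allowed' if allowed else 'blocked'}")
```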

For businesses focused on AI search visibility and generative engine optimization (GEO), optimizing crawl depth serves a dual purpose: it improves traditional SEO while simultaneously increasing the likelihood that AI systems will discover, crawl, and cite your content. This makes crawl depth optimization a foundational strategy for any organization seeking visibility across both traditional and AI-powered search platforms.

Optimizing crawl depth requires a systematic approach that addresses both the structural and the technical aspects of your website.

For large enterprise websites with thousands or millions of pages, crawl depth optimization becomes increasingly complex and critical. Enterprise sites often face severe crawl budget constraints, making it essential to implement advanced strategies. One approach is crawl budget allocation, where you strategically decide which pages deserve crawl resources based on business value. High-value pages (product pages, service pages, cornerstone content) should be kept at shallow depths and linked frequently, while low-value pages (archive content, duplicate pages, thin content) should be noindexed or deprioritized.

Another advanced strategy is dynamic internal linking, where you use data-driven insights to identify which pages need additional internal links to improve their crawl depth. Tools like seoClarity’s Internal Link Analysis can identify pages at excessive depths with few internal links, revealing opportunities to improve crawl efficiency. Additionally, log file analysis allows you to see exactly how crawlers are navigating your site, revealing bottlenecks and inefficiencies in your crawl depth structure. By analyzing crawler behavior, you can identify pages that are being crawled inefficiently and optimize their accessibility.
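A minimal log-analysis sketch, assuming access logs in the common Apache/Nginx "combined" format. It counts requests per URL from a few crawler user-agent tokens and lists known pages that Googlebot never requested. The sample log lines and page list are hypothetical. User-agent strings can be spoofed, so Google recommends verifying Googlebot traffic (for example with a reverse DNS lookup) before trusting counts like these.

```python
import re
from collections import Counter

# Request path and user agent from the "combined" log format.
LOG_LINE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)
BOT_TOKENS = ("Googlebot", "bingbot", "GPTBot", "PerplexityBot")


def crawler_hits(lines):
    """Count (bot, path) pairs for requests whose user agent contains a known bot token."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if not match:
            continue
        agent = match.group("agent")
        for token in BOT_TOKENS:
            if token in agent:
                hits[(token, match.group("path"))] += 1
                break
    return hits


def never_crawled(pages, hits, bot="Googlebot"):
    """Known pages that `bot` did not request in the analysed logs."""
    crawled = {path for (token, path) in hits if token == bot}
    return sorted(set(pages) - crawled)


# Hypothetical log excerpt (documentation IP ranges, shortened user agents).
sample = [
    '192.0.2.10 - - [01/May/2024:10:00:00 +0000] "GET / HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '192.0.2.10 - - [01/May/2024:10:00:05 +0000] "GET /shoes/ HTTP/1.1" 200 4096 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '198.51.100.7 - - [01/May/2024:10:01:00 +0000] "GET /shoes/ HTTP/1.1" 200 4096 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '203.0.113.9 - - [01/May/2024:10:02:00 +0000] "GET /shoes/ HTTP/1.1" 200 4096 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]

hits = crawler_hits(sample)
print(hits)
print(never_crawled(["/", "/shoes/", "/shoes/running/model-x/"], hits))
```

Joining the "never crawled" list with click depths from a site crawl shows whether the pages crawlers skip are the deep ones.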

For multi-language websites and international sites, crawl depth optimization becomes even more important. Hreflang tags and proper URL structure for different language versions can impact crawl efficiency. By ensuring that each language version has an optimized crawl depth structure, you maximize indexation across all markets. Similarly, mobile-first indexing means that crawl depth optimization must consider both desktop and mobile versions of your site, ensuring that important content is accessible on both platforms.
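Hreflang annotations are only honored when they are reciprocal: each language version must list itself and all of its alternates. A small Python sketch that generates the full set of `<link rel="alternate">` tags from one mapping keeps every version consistent. The URLs and the choice of English as the `x-default` fallback are hypothetical.

```python
from html import escape


def hreflang_tags(versions: dict[str, str], default_lang: str) -> list[str]:
    """Build the alternate-link tags that every version in `versions` should carry.

    `versions` maps a language (or language-region) code to that version's URL.
    """
    tags = [
        f'<link rel="alternate" hreflang="{lang}" href="{escape(url)}" />'
        for lang, url in sorted(versions.items())
    ]
    # x-default names the fallback page for users matching no listed language.
    tags.append(
        f'<link rel="alternate" hreflang="x-default" href="{escape(versions[default_lang])}" />'
    )
    return tags


versions = {
    "en": "https://www.example.com/en/shoes/",
    "de": "https://www.example.com/de/schuhe/",
    "fr": "https://www.example.com/fr/chaussures/",
}
for tag in hreflang_tags(versions, default_lang="en"):
    print(tag)
```

The same list of tags goes into the `<head>` of all three pages.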

The importance of crawl depth is evolving as search technology advances. With the rise of AI search engines and generative AI systems, crawl depth optimization is becoming relevant to a broader audience beyond traditional SEO professionals. As AI systems become more sophisticated, they may develop different crawl patterns and priorities, potentially making crawl depth optimization even more critical. Additionally, the increasing volume of AI-generated content is putting pressure on Google’s index, making crawl budget management more important than ever.

Looking forward, we can expect several trends to shape crawl depth optimization. First, AI-driven crawl optimization tools will become more sophisticated, using machine learning to identify optimal crawl depth structures for different types of websites. Second, real-time crawl monitoring will become standard, allowing website owners to see exactly how crawlers are navigating their sites and make immediate adjustments. Third, crawl depth metrics will become more integrated into SEO platforms and analytics tools, making it easier for non-technical marketers to understand and optimize this critical factor.

The relationship between crawl depth and AI search visibility will likely become a major focus area for SEO professionals. As more users rely on AI search engines for information, businesses will need to optimize not just for traditional search, but for AI discoverability as well. This means that crawl depth optimization will become part of a broader generative engine optimization (GEO) strategy that encompasses both traditional SEO and AI search visibility. Organizations that master crawl depth optimization early will have a competitive advantage in the AI-powered search landscape.

Finally, the concept of crawl depth may evolve as search technology becomes more sophisticated. Future search engines may use different methods to discover and index content, potentially reducing the importance of traditional crawl depth. However, the underlying principle—that easily accessible, well-structured content is more likely to be discovered and ranked—will likely remain relevant regardless of how search technology evolves. Therefore, investing in crawl depth optimization today is a sound long-term strategy for maintaining search visibility across current and future search platforms.

Crawl depth measures how far search engine bots navigate through your site's hierarchy based on internal links and URL structure, while click depth measures how many user clicks are needed to reach a page from the homepage. A page might have a click depth of 1 (linked in footer) but a crawl depth of 3 (nested in URL structure). Crawl depth is from the search engine's perspective, while click depth is from the user's perspective.
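The footer example can be made concrete by comparing a URL's nesting level with its link distance. The sketch assumes URL depth is simply the number of non-empty path segments; the click-depth values are hypothetical inputs that would normally come from a crawl of your internal links.

```python
from urllib.parse import urlsplit


def url_depth(url: str) -> int:
    """Number of non-empty path segments: '/' -> 0, '/a/b/c/' -> 3."""
    return len([segment for segment in urlsplit(url).path.split("/") if segment])


# Hypothetical click depths measured from the homepage.
click_depth = {
    "https://www.example.com/shoes/running/model-x/": 1,  # linked in the footer
    "https://www.example.com/sale/": 4,  # only reachable through pagination
}

for url, clicks in click_depth.items():
    print(f"{url}: click depth {clicks}, URL depth {url_depth(url)}")
```

The two measures can disagree in either direction, which is why audits usually report both.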

Crawl depth doesn't directly impact rankings, but it significantly influences whether pages get indexed at all. Pages buried deep in your site structure are less likely to be crawled within the allocated crawl budget, meaning they may not be indexed or updated frequently. This reduced indexation and freshness can indirectly harm rankings. Pages closer to the homepage typically receive more crawl attention and link equity, giving them better ranking potential.

Most SEO experts recommend keeping important pages within 3 clicks of the homepage. This ensures they are easily discoverable by both search engines and users. For larger websites with thousands of pages, some depth is necessary, but the goal should be to keep critical pages as shallow as possible. Pages at depth 3 and beyond generally perform worse in search results due to reduced crawl frequency and link equity distribution.
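The three-click guideline translates directly into an audit. A minimal sketch, assuming you already have click depths from a crawl (the values below are hypothetical) and, optionally, a set of pages you consider important:

```python
from typing import Optional


def too_deep(
    depths: dict[str, int],
    max_depth: int = 3,
    important: Optional[set[str]] = None,
) -> list[tuple[str, int]]:
    """Pages deeper than `max_depth` clicks, deepest first.

    If `important` is given, only those pages are reported. Pages missing
    from `depths` (for example orphans) cannot be flagged this way.
    """
    flagged = [
        (page, depth)
        for page, depth in depths.items()
        if depth > max_depth and (important is None or page in important)
    ]
    return sorted(flagged, key=lambda item: (-item[1], item[0]))


depths = {
    "/": 0,
    "/shoes/": 1,
    "/shoes/running/": 2,
    "/shoes/running/model-x/": 3,
    "/shoes/running/model-x/reviews/": 4,
    "/blog/2019/03/old-post/": 5,
}
print(too_deep(depths))
print(too_deep(depths, important={"/shoes/running/model-x/reviews/"}))
```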

Crawl depth directly impacts how effectively you use your crawl budget. Google allocates a specific crawl budget to each website based on crawl capacity limit and crawl demand. If your site has excessive crawl depth with many pages buried deep, crawlers may exhaust their budget before reaching all important pages. By optimizing crawl depth and reducing unnecessary page layers, you ensure that your most valuable content gets crawled and indexed within the allocated budget.
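A toy simulation makes the budget effect visible. It assumes, purely for illustration, that a crawler fetches pages breadth-first from the homepage and stops after a fixed number of requests; real crawlers schedule URLs by priority and demand, so treat this as a model rather than a description of Googlebot. Adding direct links to the product pages changes which pages fit inside the same budget.

```python
from collections import deque


def simulate_crawl(links: dict[str, list[str]], budget: int, home: str = "/") -> list[str]:
    """Fetch pages breadth-first from `home` until `budget` requests are used.

    Returns the pages fetched, in order; anything else was never reached.
    """
    fetched = []
    seen = {home}
    queue = deque([home])
    while queue and len(fetched) < budget:
        page = queue.popleft()
        fetched.append(page)
        for target in links.get(page, []):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return fetched


# Hypothetical deep structure: home -> category -> subcategory -> product.
deep = {
    "/": ["/cat-a/", "/cat-b/"],
    "/cat-a/": ["/cat-a/sub/"],
    "/cat-b/": ["/cat-b/sub/"],
    "/cat-a/sub/": ["/cat-a/sub/product/"],
    "/cat-b/sub/": ["/cat-b/sub/product/"],
}

# Same site, but the homepage also links straight to both products.
flat = dict(deep)
flat["/"] = deep["/"] + ["/cat-a/sub/product/", "/cat-b/sub/product/"]

print(simulate_crawl(deep, budget=5))  # products are never fetched
print(simulate_crawl(flat, budget=5))  # products now fit in the budget
```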

Yes, you can improve crawl efficiency without restructuring your entire site. Strategic internal linking is the most effective approach: link important deep pages from your homepage, category pages, or high-authority content. Updating your XML sitemap regularly, fixing broken links, and shortening redirect chains also help crawlers reach pages more efficiently. Together, these tactics reduce the effective crawl depth of important pages without requiring architectural changes.

AI search engines like Perplexity and ChatGPT use their own specialized crawlers (PerplexityBot, GPTBot, etc.), while Google AI Overviews relies on Google’s existing crawlers; these AI crawlers may have different crawl patterns than Googlebot. The same crawl depth principles still apply to them: pages that are easily accessible and well-linked are more likely to be discovered and used as sources. Optimizing crawl depth therefore benefits both traditional search engines and AI systems, improving your visibility on both kinds of search platforms.

Tools like Google Search Console, Screaming Frog SEO Spider, seoClarity, and Hike SEO provide crawl depth analysis and visualization. Google Search Console shows crawl stats and frequency, while specialized SEO crawlers visualize your site's hierarchical structure and identify pages with excessive depth. These tools help you identify optimization opportunities and track improvements in crawl efficiency over time.
