Robots.txt for AI: How to Control Which Bots Access Your Content

Learn how to use robots.txt to control which AI bots access your content. Complete guide to blocking GPTBot, ClaudeBot, and other AI crawlers with practical exa...

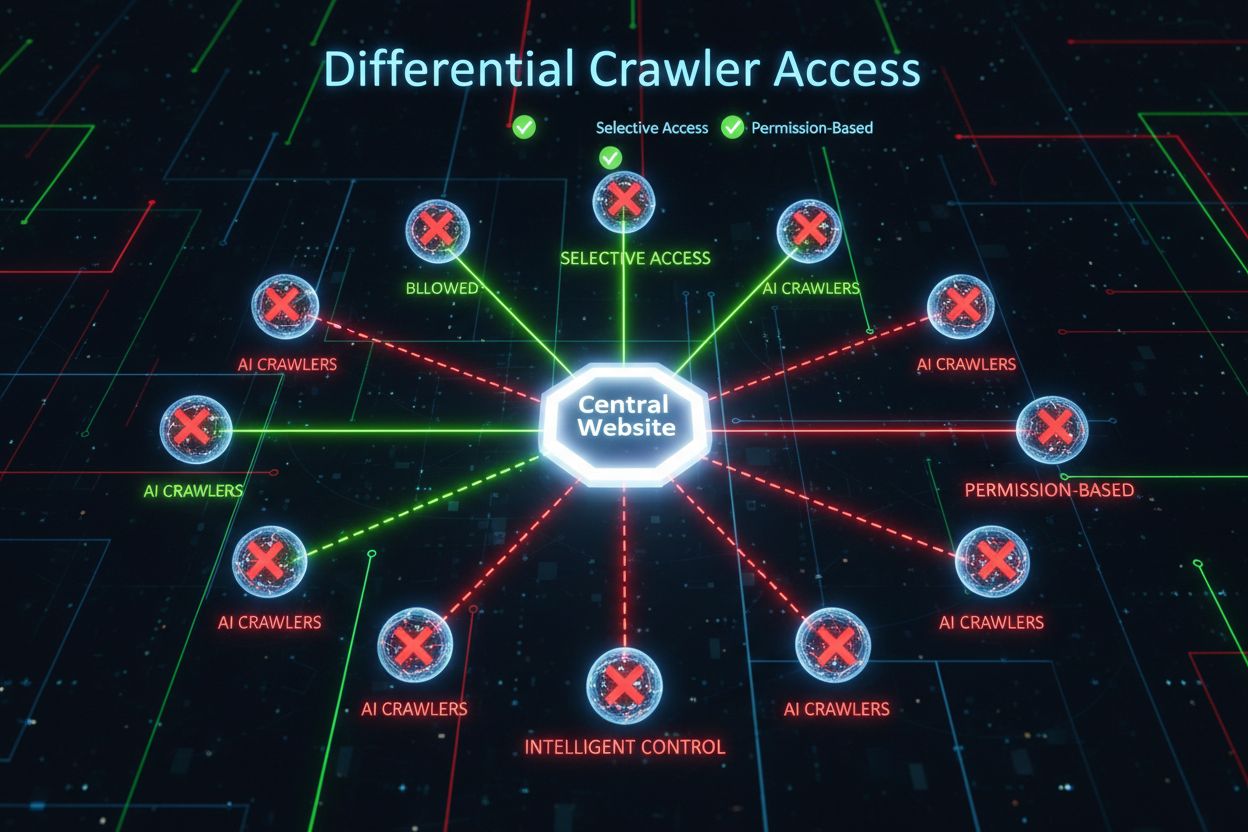

Differential crawler access is a strategic approach that lets website owners selectively permit certain AI crawlers while blocking others based on business objectives, content licensing agreements, and value assessment. Rather than implementing blanket policies, it evaluates each crawler individually to determine whether it drives traffic, respects licensing terms, or aligns with monetization goals. Publishers use tools like robots.txt, HTTP headers, and platform-specific controls to implement granular access policies. This method balances innovation opportunities with content protection and fair compensation.


The explosion of AI crawlers has fundamentally disrupted the decades-old relationship between website owners and bots. For years, the internet operated on a simple exchange: search engines like Google indexed content and directed traffic back to original sources, creating a symbiotic relationship that rewarded quality content creation. Today, a new generation of AI crawlers—including GPTBot, ClaudeBot, PerplexityBot, and dozens of others—operates under different rules. These bots scrape content not to index it for discovery, but to feed it directly into AI models that generate answers without sending users back to the original source. The impact is stark: according to Cloudflare data, OpenAI’s GPTBot maintains a crawl-to-referral ratio of approximately 1,700:1, while Anthropic’s ClaudeBot reaches 73,000:1, meaning for every visitor sent back to a publisher’s site, thousands of pages are crawled for training data. This broken exchange has forced publishers to reconsider their crawler access policies, moving away from the binary choice of “allow all” or “block all” toward a more nuanced strategy: differential crawler access. Rather than implementing blanket policies, savvy publishers now evaluate each crawler individually, asking critical questions about value, licensing, and alignment with business objectives.

Understanding the different types of AI crawlers is essential for implementing an effective differential access strategy, as each serves distinct purposes with varying impacts on your business. AI crawlers fall into three primary categories: training crawlers (GPTBot, ClaudeBot, anthropic-ai, CCBot, Bytespider) that collect content for model training; search crawlers (OAI-SearchBot, PerplexityBot, Google-Extended) that index content for AI-powered search results; and user-triggered agents (ChatGPT-User, Claude-Web, Perplexity-User) that fetch content only when users explicitly request it. The value proposition differs dramatically across these categories. Training crawlers typically generate minimal traffic back to your site—they’re extracting value without reciprocal benefit—making them prime candidates for blocking. Search crawlers, conversely, can drive meaningful referral traffic and subscriber conversions, similar to traditional search engines. User-triggered agents occupy a middle ground, activating only when users actively engage with AI systems. The Atlantic, one of the largest digital publishers, implemented a sophisticated scorecard approach to evaluate crawlers, tracking both traffic volume and subscriber conversions for each bot. Their analysis revealed that while some crawlers drive meaningful value, others generate essentially zero traffic while consuming significant bandwidth. This data-driven approach enables publishers to make informed decisions rather than relying on assumptions.

| Crawler Type | Examples | Primary Purpose | Typical Traffic Value | Recommended Access |
|---|---|---|---|---|
| Training | GPTBot, ClaudeBot, anthropic-ai, CCBot, Bytespider | Model training datasets | Very low (1,700:1 to 73,000:1 ratio) | Often blocked |
| Search | OAI-SearchBot, PerplexityBot, Google-Extended | AI search indexing | Medium to high | Often allowed |
| User-Triggered | ChatGPT-User, Claude-Web, Perplexity-User | Direct user requests | Variable | Case-by-case |

Implementing differential crawler access requires a combination of technical tools and strategic decision-making, with multiple methods available depending on your technical capabilities and business requirements. The most fundamental tool is robots.txt, a plain text file in your website’s root directory that communicates crawler access preferences using User-agent directives. Compliance with robots.txt is voluntary, and only 40-60% of AI bots respect it, but it remains the first line of defense and costs nothing to implement. For publishers who want to avoid manual upkeep, Cloudflare’s managed robots.txt automatically creates and updates crawler directives and prepends them to your existing file. Beyond robots.txt, additional enforcement mechanisms provide further control.

The most effective approach combines multiple layers: robots.txt for compliant crawlers, WAF rules for enforcement, and monitoring tools to track effectiveness and identify new threats.
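As a minimal sketch of the robots.txt layer, the Python below renders a differential policy into robots.txt text and checks it with the standard library's `urllib.robotparser`. The `POLICY` mapping, the helper names `build_robots_txt` and `is_allowed`, and the allow/block choices are illustrative assumptions rather than a recommended configuration. Note that `robotparser` only models how a compliant crawler would read the file; it says nothing about bots that ignore it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical policy: crawler token -> True (allow) or False (block).
# The split mirrors the training/search categories discussed above.
POLICY = {
    "GPTBot": False,         # training crawler
    "ClaudeBot": False,      # training crawler
    "CCBot": False,          # training crawler
    "OAI-SearchBot": True,   # search crawler
    "PerplexityBot": True,   # search crawler
}


def build_robots_txt(policy: dict[str, bool]) -> str:
    """Render one User-agent group per crawler, plus a permissive default group."""
    groups = []
    for agent, allowed in policy.items():
        rule = "Allow: /" if allowed else "Disallow: /"
        groups.append(f"User-agent: {agent}\n{rule}\n")
    # Crawlers not named above (for example Googlebot) fall through to this group.
    groups.append("User-agent: *\nAllow: /\n")
    return "\n".join(groups)


def is_allowed(robots_txt: str, agent: str, url: str = "/") -> bool:
    """Ask the standard-library parser whether `agent` may fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


robots_txt = build_robots_txt(POLICY)
print(robots_txt)
for agent in ("GPTBot", "OAI-SearchBot", "Googlebot"):
    print(agent, "allowed" if is_allowed(robots_txt, agent) else "blocked")
```

Keeping the policy in a single mapping makes it easy to regenerate the file whenever a crawler is re-evaluated, and the parser check gives a quick sanity test before deploying.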

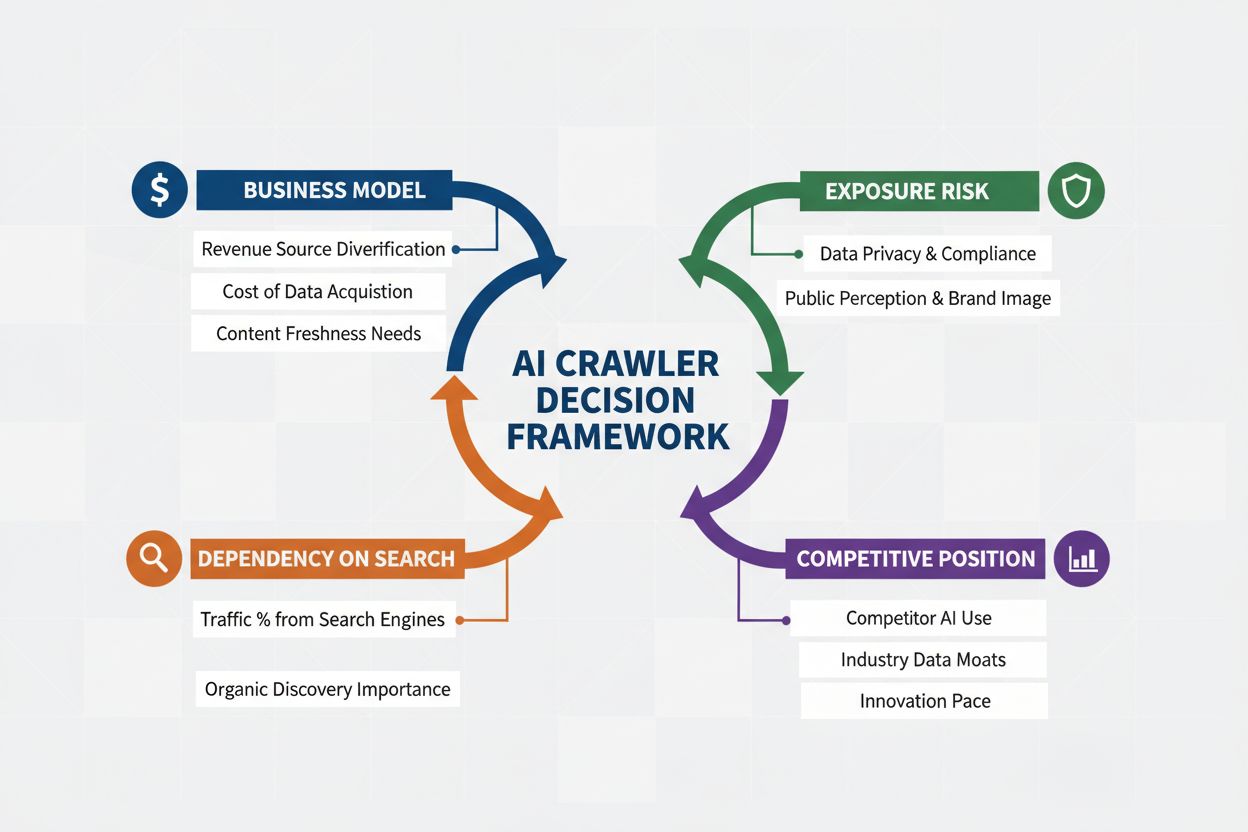

Implementing differential crawler access requires moving beyond technical implementation to develop a coherent business strategy aligned with your revenue model and competitive positioning. The Atlantic’s approach provides a practical framework: they evaluate each crawler on two primary metrics, traffic volume and subscriber conversions, and ask whether the crawler generates sufficient value to justify content access. For a publisher with $80 annual subscriber value, a crawler that drives 1,000 subscribers represents $80,000 in annual revenue, fundamentally changing the access decision. However, traffic and subscriber metrics represent only part of the equation.

The most strategic publishers implement tiered access policies: allowing search crawlers that drive traffic, blocking training crawlers that don’t, and negotiating licensing agreements with high-value AI companies. This approach maximizes both visibility and revenue while protecting intellectual property.
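The scorecard arithmetic above can be sketched in a few lines of Python. This is an illustration only: the `CrawlerStats` fields, the function names, and the sample numbers are assumptions chosen to reproduce the $80 × 1,000 example, and they do not describe The Atlantic's actual scorecard.

```python
from dataclasses import dataclass


@dataclass
class CrawlerStats:
    name: str
    pages_crawled: int   # requests made by the crawler in the period
    referrals: int       # visits referred back by the associated AI product
    subscribers: int     # conversions attributed to those referrals


def crawl_to_referral_ratio(stats: CrawlerStats) -> float:
    """Pages crawled per referred visit; infinite when nothing is referred."""
    if stats.referrals == 0:
        return float("inf")
    return stats.pages_crawled / stats.referrals


def annual_subscriber_revenue(stats: CrawlerStats, value_per_subscriber: float) -> float:
    """Revenue attributable to the crawler at a given annual subscriber value."""
    return stats.subscribers * value_per_subscriber


# Hypothetical numbers chosen to match the worked example in the text.
example = CrawlerStats("example-search-bot", pages_crawled=500_000,
                       referrals=25_000, subscribers=1_000)
print(annual_subscriber_revenue(example, 80.0))  # 80000.0
print(crawl_to_referral_ratio(example))          # 20.0
```

Tracking both figures per crawler keeps the access decision tied to measured value rather than to the crawler's category alone.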

While differential crawler access offers significant advantages, the reality is more complex than the theory, with several fundamental challenges limiting effectiveness and requiring ongoing management. The most critical limitation is that robots.txt is voluntary—crawlers that respect it do so by choice, not obligation. Research indicates that robots.txt stops only 40-60% of AI bots, with another 30-40% caught by user-agent blocking, leaving 10-30% of crawlers operating without restriction. Some AI companies and malicious actors deliberately ignore robots.txt directives, viewing content access as more valuable than compliance. Additionally, crawler evasion techniques continue to evolve: sophisticated bots spoof user agents to appear as legitimate browsers, use distributed IP addresses to avoid detection, and employ headless browsers that mimic human behavior. The Google-Extended dilemma exemplifies the complexity: blocking Google-Extended prevents your content from training Gemini AI, but Google AI Overviews (which appear in search results) use standard Googlebot rules, meaning you cannot opt out of AI Overviews without sacrificing search visibility. Monitoring and enforcement also require significant resources—tracking new crawlers, updating policies, and validating effectiveness demands ongoing attention. Finally, the legal landscape remains uncertain: while copyright law theoretically protects content, enforcement against AI companies is expensive and outcomes unpredictable, leaving publishers in a position of technical control without legal certainty.

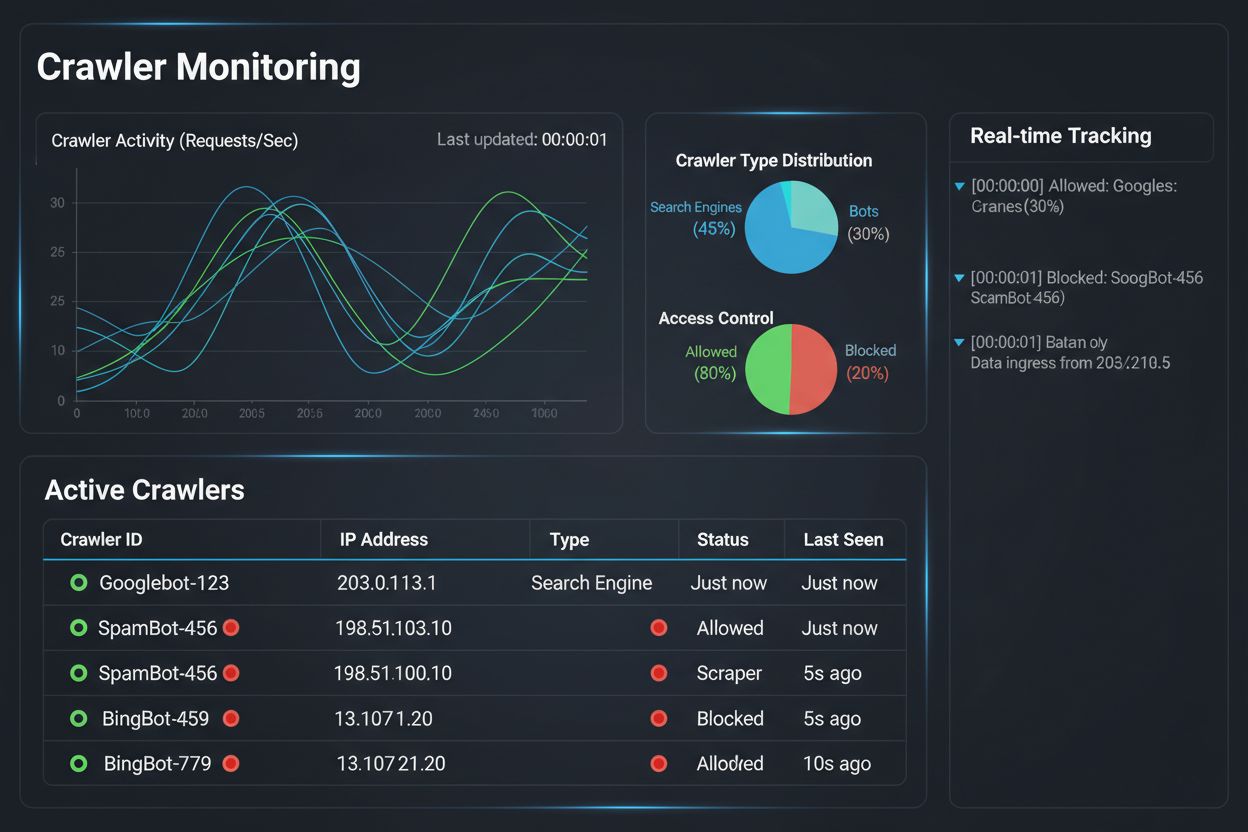

Implementing a differential crawler access strategy is only half the battle; the other half is understanding the actual impact of your policies through comprehensive monitoring and measurement. This is where AmICited.com becomes essential to your crawler management strategy. AmICited specializes in monitoring how AI systems reference and cite your brand across GPTs, Perplexity, Google AI Overviews, and other AI platforms—providing visibility into which crawlers are actually using your content and how it appears in AI-generated responses. Rather than relying on server logs and guesswork, AmICited’s monitoring dashboard shows you exactly which AI systems have accessed your content, how frequently, and most importantly, whether your content is being cited or simply absorbed into training data without attribution. This intelligence directly informs your differential access decisions: if a crawler is accessing your content but never citing it in AI responses, blocking becomes a clear business decision. AmICited also enables competitive benchmarking, showing how your content visibility in AI systems compares to competitors, helping you understand whether your access policies are too restrictive or too permissive. The platform’s real-time alerts notify you when new AI systems begin referencing your content, allowing rapid policy adjustments. By combining AmICited’s monitoring capabilities with Cloudflare’s enforcement tools, publishers gain complete visibility and control: they can see which crawlers access their content, measure the business impact, and adjust policies accordingly. This data-driven approach transforms crawler management from a technical checkbox into a strategic business function.

The landscape of differential crawler access is rapidly evolving, with emerging standards and business models reshaping how publishers and AI companies interact around content. The IETF AI preferences proposal represents a significant development, establishing standardized ways for websites to communicate their preferences regarding AI training, inference, and search use. Rather than relying on robots.txt—a 30-year-old standard designed for search engines—this new framework provides explicit, granular control over how AI systems can use content. Simultaneously, permission-based business models are gaining traction, with Cloudflare’s Pay Per Crawl initiative pioneering a framework where AI companies pay publishers for content access, transforming crawlers from threats into revenue sources. This shift from blocking to licensing represents a fundamental change in internet economics: instead of fighting over access, publishers and AI companies negotiate fair compensation. Crawler authentication and verification standards are also advancing, with cryptographic verification methods allowing publishers to confirm crawler identity and prevent spoofed requests. Looking forward, we can expect increased regulatory frameworks addressing AI training data, potentially mandating explicit consent and compensation for content use. The convergence of these trends—technical standards, licensing models, authentication mechanisms, and regulatory pressure—suggests that differential crawler access will evolve from a defensive strategy into a sophisticated business function where publishers actively manage, monitor, and monetize AI crawler access. Publishers who implement comprehensive monitoring and strategic policies today will be best positioned to capitalize on these emerging opportunities.

Blocking all crawlers removes your content from AI systems entirely, eliminating both risks and opportunities. Differential access allows you to evaluate each crawler individually, blocking those that don't provide value while allowing those that drive traffic or represent licensing opportunities. This nuanced approach maximizes both visibility and revenue while protecting intellectual property.

You can monitor crawler activity through server logs, Cloudflare's analytics dashboard, or specialized monitoring tools like AmICited.com. AmICited specifically tracks which AI systems are accessing your content and how your brand appears in AI-generated responses, providing business-level insights beyond technical logs.
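For the server-log route, a minimal Python sketch might count requests per AI crawler token. The token list comes from the crawlers named in this article; the function name `count_ai_requests` and the sample log lines are made up for illustration, and real user-agent strings are longer. Because the match is a plain substring test on the whole line, it will miss bots that spoof their user agent.

```python
from collections import Counter

# Tokens named in this article; extend the list as new crawlers appear.
AI_TOKENS = ["GPTBot", "ClaudeBot", "CCBot", "Bytespider",
             "OAI-SearchBot", "PerplexityBot", "ChatGPT-User", "Perplexity-User"]


def count_ai_requests(log_lines):
    """Count log lines per AI crawler token (case-insensitive substring match)."""
    counts = Counter()
    for line in log_lines:
        lowered = line.lower()
        for token in AI_TOKENS:
            if token.lower() in lowered:
                counts[token] += 1
                break  # attribute each line to at most one token
    return counts


# Made-up lines in a combined-log-like shape, using documentation IP ranges.
SAMPLE_LOG = [
    '203.0.113.5 - - [01/Jan/2025:00:00:01 +0000] "GET /a HTTP/1.1" 200 512 "-" "GPTBot/1.0"',
    '203.0.113.5 - - [01/Jan/2025:00:00:02 +0000] "GET /b HTTP/1.1" 200 734 "-" "GPTBot/1.0"',
    '198.51.100.7 - - [01/Jan/2025:00:00:03 +0000] "GET /a HTTP/1.1" 200 512 "-" "PerplexityBot/1.0"',
    '192.0.2.9 - - [01/Jan/2025:00:00:04 +0000] "GET /a HTTP/1.1" 200 512 "-" "Mozilla/5.0"',
]

for token, hits in count_ai_requests(SAMPLE_LOG).most_common():
    print(token, hits)
```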

Blocking AI training crawlers like GPTBot, ClaudeBot, and CCBot does not affect your Google or Bing search rankings. Traditional search engines use different crawlers (Googlebot, Bingbot) that operate independently. Block those only if you want to disappear from search results entirely.

For many publishers, the most strategic approach is to allow search-focused crawlers like OAI-SearchBot and PerplexityBot (which drive traffic) while blocking training crawlers like GPTBot and ClaudeBot (which typically don't). This maintains visibility in AI search results while protecting content from being absorbed into training datasets.

While major crawlers from OpenAI, Anthropic, and Google respect robots.txt, some bots ignore it deliberately. If a crawler doesn't respect your robots.txt, you'll need additional enforcement methods like WAF rules, IP blocking, or Cloudflare's bot management features. This is why monitoring tools like AmICited are essential—they show you which crawlers are actually respecting your policies.

Review your policies quarterly at minimum, as AI companies regularly introduce new crawlers. For example, when Anthropic merged their 'anthropic-ai' and 'Claude-Web' bots into 'ClaudeBot,' the new bot temporarily had unrestricted access to sites that hadn't updated their rules. Regular monitoring with tools like AmICited helps you stay ahead of changes.
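One way to support such a review is a small audit that lists which crawler tokens on a watchlist have no explicit group in your robots.txt. The Python below is a sketch under stated assumptions: the helper names, the watchlist, and the sample file are hypothetical, and the parsing only looks at `User-agent` lines rather than implementing the full robots.txt grammar.

```python
def agents_with_rules(robots_txt: str) -> set[str]:
    """Return the lowercased User-agent tokens named in a robots.txt body."""
    agents = set()
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()      # drop trailing comments
        key, sep, value = line.partition(":")
        if sep and key.strip().lower() == "user-agent":
            agents.add(value.strip().lower())
    return agents


def missing_rules(robots_txt: str, watchlist: list[str]) -> list[str]:
    """Watchlist tokens that have no explicit User-agent group in the file."""
    named = agents_with_rules(robots_txt)
    return [token for token in watchlist if token.lower() not in named]


# Hypothetical file that still targets only the older Anthropic tokens.
STALE_ROBOTS = """\
User-agent: anthropic-ai
Disallow: /

User-agent: Claude-Web
Disallow: /
"""

print(missing_rules(STALE_ROBOTS, ["anthropic-ai", "Claude-Web", "ClaudeBot"]))
# ['ClaudeBot']
```

A token reported here is not necessarily unrestricted, since a `User-agent: *` group may still apply to it, but the list shows where a renamed or newly introduced crawler has slipped past rules written for older names.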

Googlebot is Google's search crawler that indexes content for search results. Google-Extended is a control token that specifically governs whether your content gets used for Gemini AI training. You can block Google-Extended without affecting search rankings, but note that Google AI Overviews (which appear in search results) use standard Googlebot rules, so you cannot opt out of AI Overviews without sacrificing search visibility.

Emerging licensing models like Cloudflare's Pay Per Crawl enable publishers to charge AI companies for content access. This transforms crawlers from threats into revenue sources. However, this requires negotiation with AI companies and may involve legal agreements. AmICited's monitoring helps you identify which crawlers represent the most valuable licensing opportunities.

