How Dynamic Rendering Affects AI: Impact on Crawlability and Visibility

Learn how dynamic rendering impacts AI crawlers, ChatGPT, Perplexity, and Claude visibility. Discover why AI systems can't render JavaScript and how to optimize...

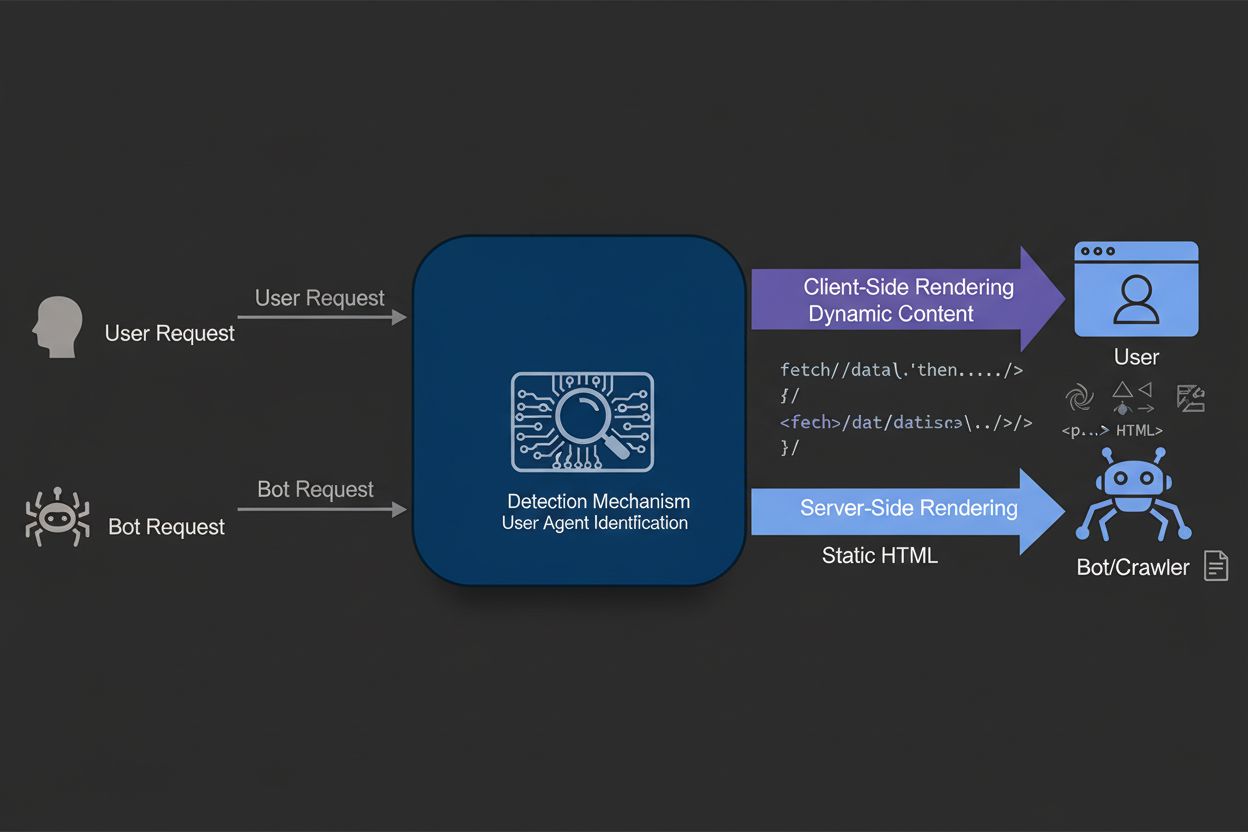

Dynamic rendering is a server-side technique that detects whether a request comes from a user or a search engine bot, then serves different versions of the same content accordingly: client-side rendered JavaScript for users and fully server-side rendered static HTML for bots. This approach improves crawlability and indexation while preserving the full user experience.

Dynamic rendering is a server-side content delivery technique that detects the type of request being made to a website—whether from a human user or a search engine bot—and serves optimized versions of content accordingly. When a user visits a page, they receive the full client-side rendered version with all JavaScript, interactive elements, and dynamic features intact. Conversely, when a search engine bot or AI crawler requests the same page, the server detects this through user-agent identification and routes the request to a rendering engine that converts the JavaScript-heavy content into static, fully-rendered HTML. This static version is then served to the bot, eliminating the need for the bot to execute JavaScript code. The technique emerged as a practical solution to the challenge that search engines face when processing JavaScript at scale, and it has become increasingly important as AI-powered search platforms like ChatGPT, Perplexity, Claude, and Google AI Overviews expand their crawling activities across the web.
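The user-agent check described above can be sketched in a few lines of Python. This is a minimal illustration, not a production detector: the token list is an assumption based on publicly documented crawler names (Googlebot, Bingbot, OpenAI's GPTBot, OAI-SearchBot and ChatGPT-User, PerplexityBot, Anthropic's ClaudeBot) and should be checked against each vendor's current documentation. User-agent strings are also easy to spoof, so production setups often add verification such as reverse DNS lookups.

```python
from typing import Optional

# Hypothetical, non-exhaustive list of crawler tokens; verify against vendor docs.
BOT_TOKENS = (
    "googlebot",
    "bingbot",
    "gptbot",
    "oai-searchbot",
    "chatgpt-user",
    "perplexitybot",
    "claudebot",
)


def is_bot(user_agent: Optional[str]) -> bool:
    """Return True if the User-Agent header contains a known crawler token."""
    if not user_agent:
        # Missing or empty header: treat as a regular visitor in this sketch.
        return False
    ua = user_agent.lower()
    # Substring match, because crawler tokens sit inside longer Mozilla-style strings.
    return any(token in ua for token in BOT_TOKENS)
```

Matching case-insensitively on substrings keeps the check tolerant of version numbers and surrounding browser tokens in real user-agent headers.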

Dynamic rendering was formally introduced to the SEO community by Google at its 2018 I/O conference, when John Mueller presented it as a workaround for JavaScript-related indexation challenges. At that time, Google acknowledged that while Googlebot could technically render JavaScript, doing so at web scale consumed significant computational resources and created delays in content discovery and indexation. Bing followed suit in June 2018, updating its Webmaster Guidelines to recommend dynamic rendering specifically for large websites struggling with JavaScript processing limitations. The technique gained traction among enterprise websites and JavaScript-heavy applications as a pragmatic middle ground between maintaining rich user experiences and ensuring search engine accessibility. However, Google’s stance evolved significantly by 2022, when the company updated its official documentation to explicitly state that dynamic rendering is a workaround and not a long-term solution. This shift reflected Google’s preference for more sustainable rendering approaches such as server-side rendering (SSR), static rendering, and hydration. Despite this reclassification, dynamic rendering remains widely implemented across the web, particularly among large e-commerce platforms, single-page applications, and content-heavy websites that cannot immediately migrate to alternative rendering architectures.

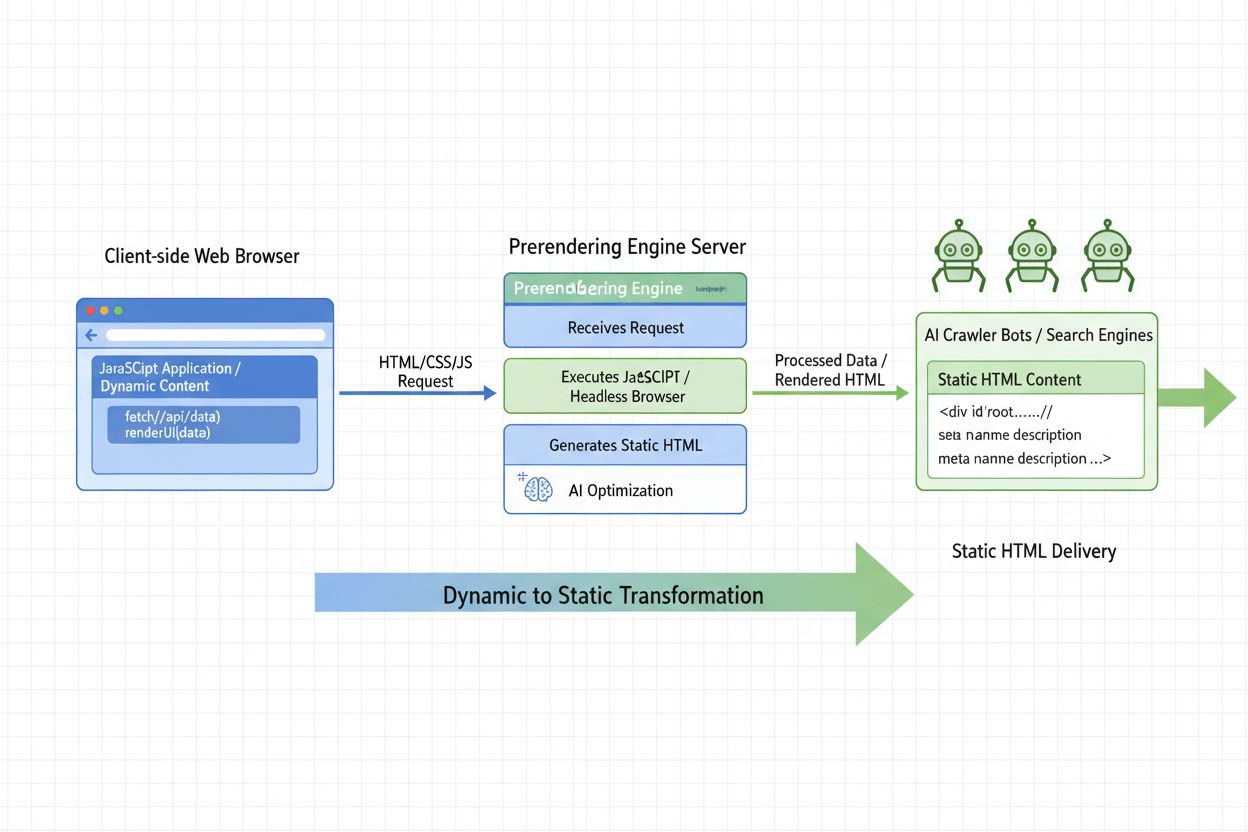

The mechanics of dynamic rendering involve three core components working in concert: user-agent detection, content routing, and rendering and caching. When a request arrives at a web server, the first step is identifying whether it originates from a human user or an automated bot. This identification usually relies on the user-agent string in the HTTP request header, which describes the client making the request. Search engine bots like Googlebot and Bingbot, and AI crawlers such as PerplexityBot and Anthropic’s ClaudeBot, identify themselves through specific user-agent strings. Once a bot is detected, the server routes the request to a dynamic rendering service or middleware, which typically drives a headless browser (such as headless Chromium, often controlled through Puppeteer) to execute the page’s JavaScript and convert the result into static HTML. This rendering process runs all JavaScript code, loads dynamic content, and generates the final DOM (Document Object Model) that would normally be built in a user’s browser. The resulting static HTML is then cached to avoid repeated rendering overhead and served directly to the bot. For human users, the request bypasses this rendering pipeline entirely and receives the original client-side rendered version, with all animations, real-time updates, and dynamic features intact.
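The render-and-cache step can be sketched as a small time-to-live (TTL) cache wrapped around a rendering function. In this sketch the renderer is a hypothetical callable standing in for a headless browser; the class name, the one-hour default TTL, and the injectable clock are illustrative assumptions rather than any specific tool's behavior.

```python
import time
from typing import Callable, Dict, Tuple


class PrerenderCache:
    """Cache rendered HTML per URL so each page is rendered at most once per TTL."""

    def __init__(
        self,
        render: Callable[[str], str],
        ttl_seconds: float = 3600.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._render = render  # hypothetical headless-browser renderer: url -> HTML
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested deterministically
        self._entries: Dict[str, Tuple[float, str]] = {}  # url -> (rendered_at, html)

    def get(self, url: str) -> str:
        """Return cached HTML while it is fresh; otherwise render and cache it."""
        now = self._clock()
        entry = self._entries.get(url)
        if entry is not None and now - entry[0] < self._ttl:
            return entry[1]  # cache hit: no rendering cost for this bot visit
        html = self._render(url)  # cache miss or expired entry: render once
        self._entries[url] = (now, html)
        return html
```

A TTL bounds how stale a cached render can get; shorter values track content changes more closely at the cost of more rendering work.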

| Aspect | Dynamic Rendering | Server-Side Rendering (SSR) | Static Rendering | Client-Side Rendering (CSR) |
|---|---|---|---|---|
| Content Delivery to Users | Client-side rendered (JavaScript) | Server-side rendered (HTML) | Pre-built static HTML | Client-side rendered (JavaScript) |
| Content Delivery to Bots | Server-side rendered (HTML) | Server-side rendered (HTML) | Pre-built static HTML | Client-side rendered (JavaScript) |
| Implementation Complexity | Moderate | High | Low | Low |
| Resource Requirements | Medium (rendering for bots only) | High (rendering for all requests) | Low (no rendering needed) | Low (client-side only) |
| Performance for Users | Depends on JavaScript | Excellent | Excellent | Variable |
| Performance for Bots | Excellent | Excellent | Excellent | Poor |
| Crawl Budget Impact | Positive (faster bot processing) | Positive (faster bot processing) | Positive (fastest) | Negative (slow rendering) |
| SEO Recommendation | Temporary workaround | Long-term preferred | Long-term preferred | Not recommended for SEO |
| Best Use Cases | Large JS-heavy sites with budget constraints | Modern web applications | Blogs, documentation, static content | User-focused apps without SEO needs |
| Maintenance Burden | Low-moderate | High | Low | Low |

Dynamic rendering exists because of a critical challenge in modern web development: JavaScript rendering at scale. While JavaScript enables rich, interactive user experiences with real-time updates, animations, and complex functionality, it creates significant obstacles for search engine crawlers. When a search engine bot encounters a page built with JavaScript frameworks like React, Vue, or Angular, the bot must execute the JavaScript code to see the final rendered content. This execution is computationally expensive and time-consuming. Google has publicly acknowledged the challenge; Martin Splitt, a Google Search Advocate, explained: “Even though Googlebot can execute JavaScript, we don’t want to rely on that.” The reason is that Google operates with a finite crawl budget, a limited amount of time and computational resources allocated to crawling each website. According to research by Botify analyzing 6.2 billion Googlebot requests across 413 million web pages, approximately 51% of pages on large enterprise websites go uncrawled due to crawl budget constraints. When JavaScript slows down crawling, fewer pages get discovered and indexed. In addition, JavaScript is rendered in a separate step with its own resource limits (often called the render budget), so even after a page is crawled, Google may defer rendering its JavaScript until resources become available, potentially delaying indexation by hours or days. This delay is particularly problematic for e-commerce sites with rapidly changing inventory or news sites publishing hundreds of articles daily, where timely indexation directly affects visibility and traffic.

Crawl budget is one of the most critical yet often misunderstood concepts in SEO. Google defines a site’s crawl budget as the set of URLs that Googlebot can and wants to crawl, determined by two factors: crawl capacity and crawl demand. Crawl capacity depends on how quickly the server responds and how often it returns errors, while crawl demand depends on page popularity and freshness signals. When a website implements dynamic rendering, it directly improves crawl capacity by reducing the time bots spend processing each page. Research indicates that pages with rendering times under 500ms receive approximately 45% more frequent re-crawling than pages with 500-1,000ms load times, and approximately 130% more than pages exceeding 1,000ms. By serving pre-rendered static HTML to bots instead of JavaScript-heavy content, dynamic rendering can substantially reduce processing times for crawlers, allowing them to handle more pages within their allocated budget. This efficiency gain typically translates into improved indexation rates; for large websites with thousands or millions of pages, it can mean the difference between having 50% of pages indexed and 80% or more. Furthermore, dynamic rendering helps ensure that JavaScript-loaded content is immediately visible to bots rather than being deferred to a secondary rendering queue. This matters most for content that changes frequently, provided the prerender cache is refreshed when content changes so that bots see the current version rather than an outdated rendering.
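The link between per-page processing time and crawl capacity can be illustrated with simple arithmetic. The model below is a deliberate simplification, assuming a fixed time budget and a constant processing time per page; the one-hour budget and the 2 s and 0.4 s timings are hypothetical numbers, not measurements, and Google does not publish crawl budget in these terms.

```python
def pages_within_budget(budget_ms: int, ms_per_page: int) -> int:
    """Pages a crawler can process in a fixed time budget (simplified model)."""
    if ms_per_page <= 0:
        raise ValueError("ms_per_page must be positive")
    # Integer division: only fully processed pages count.
    return budget_ms // ms_per_page


# Hypothetical figures: a one-hour budget, 2 s per page when the crawler must
# execute JavaScript vs 0.4 s per page when it receives prerendered HTML.
BUDGET_MS = 60 * 60 * 1000
js_pages = pages_within_budget(BUDGET_MS, 2_000)  # → 1800
prerendered_pages = pages_within_budget(BUDGET_MS, 400)  # → 9000
```

In practice crawl demand also caps how many URLs a crawler wants to fetch, so faster processing raises the ceiling rather than guaranteeing more crawling.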

The emergence of AI-powered search platforms like ChatGPT, Perplexity, Claude, and Google AI Overviews has created a new dimension to the dynamic rendering discussion. These platforms operate their own crawlers that process web content to generate AI-powered responses and summaries. Unlike traditional search engines that primarily index pages for ranking purposes, AI crawlers need to access and understand content deeply to generate accurate, contextual responses. Dynamic rendering becomes particularly important in this context because many AI crawlers are reported to read only the initial HTML response without executing JavaScript. When AmICited monitors your brand’s appearance in AI-generated responses across these platforms, a key factor in whether your content gets cited is whether the AI crawler could successfully access and understand your website’s content. If your website relies heavily on client-side JavaScript and lacks dynamic rendering, AI crawlers may see little of your content, reducing the likelihood of your brand appearing in AI responses. Conversely, websites with properly implemented dynamic rendering help ensure that AI crawlers receive fully rendered, accessible content, increasing the probability of citation and visibility, provided the AI crawlers’ user agents are included in the bot-detection rules. This makes dynamic rendering not just an SEO concern but a critical component of Generative Engine Optimization (GEO) strategy. Organizations using AmICited to track their AI search visibility should consider dynamic rendering as a foundational technical implementation to maximize their appearance across all AI platforms.
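Whether a crawler can access your content comes down to what is present in the HTML it receives. One way to approximate what a crawler that does not execute JavaScript sees is to extract the text in the initial HTML response, ignoring script and style contents, and check that key content is there. The sketch below uses Python's standard-library `html.parser`; the function names and sample markup are illustrative, and in practice you would fetch the page yourself (for example, with a crawler user agent) and pass the response body in.

```python
from html.parser import HTMLParser
from typing import List, Optional, Tuple


class _InitialTextExtractor(HTMLParser):
    """Collect text nodes outside <script> and <style>, i.e. text present without JS."""

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0  # > 0 while inside <script> or <style>
        self.chunks: List[str] = []

    def handle_starttag(self, tag: str, attrs: List[Tuple[str, Optional[str]]]) -> None:
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag: str) -> None:
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data: str) -> None:
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def initial_html_text(html: str) -> str:
    """Return the visible text contained in raw HTML, before any JavaScript runs."""
    parser = _InitialTextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


def content_visible_without_js(html: str, phrase: str) -> bool:
    """Case-insensitive check that a key phrase exists in the initial HTML."""
    return phrase.lower() in initial_html_text(html).lower()
```

Run against a typical client-side rendered shell, the check fails because the text only exists inside a script; run against prerendered or server-rendered HTML, it passes.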

Implementing dynamic rendering requires careful planning and technical execution. The first step involves identifying which pages require dynamic rendering, typically high-priority pages like homepages, product pages, and content that generates significant traffic or changes frequently. Not every page needs dynamic rendering; static pages with minimal JavaScript can often be crawled effectively without it. The next step is selecting a rendering solution. Popular options include Prerender.io (a paid service that handles rendering and caching), Rendertron (Google’s open-source rendering solution based on headless Chromium, now deprecated), Puppeteer (a Node.js library for controlling headless Chrome), and specialized platforms like Nostra AI’s Crawler Optimization. Each solution has different trade-offs regarding cost, complexity, and maintenance requirements. After selecting a rendering tool, developers must configure user-agent detection middleware on their server to identify bot requests and route them appropriately. This typically involves checking the user-agent string against a list of known bot identifiers and proxying bot requests to the rendering service. Caching is critical: pre-rendered content should be cached aggressively to avoid rendering the same page repeatedly, which would defeat the purpose of the optimization. Finally, the implementation should be verified with Google Search Console’s URL Inspection tool and the Rich Results Test, both of which show the rendered HTML Googlebot receives, to confirm that bots are getting the rendered content correctly.
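The user-agent detection middleware step can be sketched as WSGI middleware in Python. This is an illustration under stated assumptions: `is_bot` and `get_prerendered_html` are hypothetical callables you would supply (for example, a user-agent check and a cached call to your rendering service), only GET requests are rerouted, and bot responses always return `200 OK` for simplicity, whereas a real setup should pass through the rendered page's status code. The `Vary: User-Agent` header is added to both response paths so shared caches keep the two versions apart; the sketch assumes the wrapped app does not set `Vary` itself.

```python
from typing import Any, Callable, Dict, Iterable, List, Optional, Tuple

StartResponse = Callable[..., Any]


class DynamicRenderingMiddleware:
    """Route bot GET requests to prerendered HTML; pass everything else to the app."""

    def __init__(
        self,
        app: Callable[[Dict[str, Any], StartResponse], Iterable[bytes]],
        is_bot: Callable[[str], bool],
        get_prerendered_html: Callable[[str], str],
    ) -> None:
        self._app = app  # the normal client-side rendered application
        self._is_bot = is_bot  # hypothetical user-agent predicate
        self._get_html = get_prerendered_html  # hypothetical (cached) renderer

    def __call__(self, environ: Dict[str, Any], start_response: StartResponse) -> Iterable[bytes]:
        user_agent = environ.get("HTTP_USER_AGENT", "")
        if environ.get("REQUEST_METHOD", "GET") == "GET" and self._is_bot(user_agent):
            url = environ.get("PATH_INFO", "/")
            query = environ.get("QUERY_STRING", "")
            if query:
                url = f"{url}?{query}"
            body = self._get_html(url).encode("utf-8")
            # Simplification: always 200; a real renderer should propagate 404s etc.
            start_response("200 OK", [
                ("Content-Type", "text/html; charset=utf-8"),
                ("Content-Length", str(len(body))),
                ("Vary", "User-Agent"),
            ])
            return [body]

        def start_with_vary(
            status: str, headers: List[Tuple[str, str]], exc_info: Optional[Any] = None
        ) -> Any:
            # Human visitors get the original app response, marked as UA-dependent.
            return start_response(status, list(headers) + [("Vary", "User-Agent")], exc_info)

        return self._app(environ, start_with_vary)
```

Injecting the predicate and renderer keeps the routing logic independent of any particular bot list or rendering service.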

The primary benefits of dynamic rendering are substantial. Improved crawlability is the most immediate advantage: by eliminating JavaScript processing overhead, bots can crawl more pages faster. Better indexation rates follow naturally, as more pages get discovered and indexed within the crawl budget. Server load from rendering also stays manageable, because each page is rendered once and cached rather than re-rendered on every bot visit. Maintained user experience is a critical advantage that distinguishes dynamic rendering from other approaches: users continue to receive the full, interactive version of your site with no degradation. Lower implementation cost compared to server-side rendering makes it accessible to organizations with limited development resources.

However, dynamic rendering has notable limitations. Complexity and maintenance burden can be significant, particularly for large websites with thousands of pages or complex content structures. Caching challenges arise when content changes frequently, because the cache must be invalidated and regenerated appropriately. Inconsistencies between the user-facing and bot-facing versions can creep in if not carefully managed, potentially leading to indexation issues. Rendering and caching infrastructure also adds operational costs. Most importantly, Google’s official stance is that dynamic rendering is a temporary workaround, not a long-term solution, so organizations should treat it as a bridge strategy while planning migration to more sustainable rendering approaches.
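The cache-invalidation challenge can be handled by fingerprinting each page's source content and evicting the cached render whenever the fingerprint changes, so the next bot request triggers a fresh render. The sketch below is one possible approach, assuming your CMS or product database can supply the current source content per URL; the function and parameter names are hypothetical, and plain dictionaries stand in for real storage.

```python
import hashlib
from typing import Dict, List


def invalidate_changed_pages(
    source_content: Dict[str, str],
    fingerprints: Dict[str, str],
    prerender_cache: Dict[str, str],
) -> List[str]:
    """Evict cached HTML for every URL whose source content changed.

    source_content:  url -> current source (e.g. CMS body or product data)
    fingerprints:    url -> SHA-256 of the source at last check (updated in place)
    prerender_cache: url -> cached rendered HTML (changed entries evicted in place)
    """
    changed: List[str] = []
    for url, content in source_content.items():
        digest = hashlib.sha256(content.encode("utf-8")).hexdigest()
        if fingerprints.get(url) != digest:
            fingerprints[url] = digest
            prerender_cache.pop(url, None)  # next bot request triggers a fresh render
            changed.append(url)
    return changed
```

Calling this from a publish hook or a scheduled job keeps bot-facing HTML aligned with fast-changing content, such as inventory, without re-rendering unchanged pages.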

The future of dynamic rendering is intrinsically tied to broader trends in web development and search engine evolution. As JavaScript frameworks continue to dominate modern web development, solutions that bridge the gap between rich user experiences and bot accessibility remain relevant. However, the industry is gradually shifting toward more sustainable approaches. Server-side rendering is becoming more practical as frameworks like Next.js, Nuxt, and Remix make SSR implementation more accessible to developers. Static rendering and incremental static regeneration offer excellent performance for content that doesn’t change constantly. Hydration, where a page is initially rendered on the server and then enhanced with interactivity on the client, provides a middle ground that’s gaining adoption. Google’s updated guidance explicitly recommends these alternatives over dynamic rendering, signaling that the search giant views dynamic rendering as a transitional solution rather than a permanent architectural pattern.

The emergence of AI-powered search platforms adds another dimension to this evolution. As these platforms become more sophisticated in their crawling and content understanding capabilities, the importance of accessible, well-structured content increases. Dynamic rendering will likely remain relevant for organizations with legacy systems or specific constraints, but new projects should prioritize more sustainable rendering strategies from the outset. For organizations currently using AmICited to monitor AI search visibility, the strategic implication is clear: while dynamic rendering can improve your immediate visibility in AI responses, planning a migration to more sustainable rendering approaches should be part of your long-term Generative Engine Optimization strategy. The convergence of traditional SEO, technical performance optimization, and AI search visibility means that rendering strategy is no longer a purely technical concern but a core business decision affecting discoverability across all search platforms.

Google explicitly states that dynamic rendering is not cloaking as long as the content served to bots and users is substantially similar. Cloaking involves intentionally serving different content to deceive search engines, whereas dynamic rendering serves the same content in different formats. However, serving entirely different pages (like cats to users and dogs to bots) would be considered cloaking and violate Google's policies.
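A rough parity check can help catch bot/user drift before it turns into the "cats to users, dogs to bots" problem: compare the text of the HTML served to bots with the text users end up seeing. The sketch below uses `difflib.SequenceMatcher` from Python's standard library on word sequences; the 0.9 threshold is an arbitrary assumption, not a Google-defined limit, and obtaining the user-side text (for example, from a headless browser's rendered DOM) is left out.

```python
from difflib import SequenceMatcher


def text_similarity(bot_text: str, user_text: str) -> float:
    """Similarity ratio (0.0 to 1.0) between the word sequences of two page versions."""
    return SequenceMatcher(None, bot_text.split(), user_text.split()).ratio()


def versions_match(bot_text: str, user_text: str, threshold: float = 0.9) -> bool:
    """Return False for pages whose bot-facing text drifts from the user-facing text.

    The default threshold is an illustrative assumption, not a Google rule.
    """
    return text_similarity(bot_text, user_text) >= threshold
```

Comparing words rather than characters keeps the score less sensitive to whitespace and markup differences between the two rendering paths.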

Dynamic rendering reduces the computational resources search engine bots need to process JavaScript, allowing them to crawl more pages within their allocated crawl budget. By serving pre-rendered static HTML instead of JavaScript-heavy content, it lets bots access and index pages faster. Research indicates that pages rendering in under 500ms receive approximately 45% more frequent re-crawling than pages in the 500-1,000ms range, which can improve indexation rates.

Server-side rendering (SSR) pre-renders content on the server for both users and bots, improving performance for everyone but requiring significant development resources. Dynamic rendering only pre-renders for bots while users receive the normal client-side rendered version, making it less resource-intensive to implement. However, Google now recommends SSR, static rendering, or hydration as long-term solutions over dynamic rendering, which is considered a temporary workaround.

Dynamic rendering is ideal for large JavaScript-heavy websites with rapidly changing content, such as e-commerce platforms with constantly updated inventory, single-page applications, and sites with complex interactive features. Websites struggling with crawl budget issues, where Google fails to crawl significant portions of their content, are prime candidates. According to Botify's research, Google misses approximately 51% of pages on large enterprise websites due to crawl budget constraints.

Like traditional search engine bots, the AI crawlers used by platforms like ChatGPT, Perplexity, and Claude need content to be available in accessible HTML, and many of them are reported not to execute JavaScript at all. Dynamic rendering helps these AI systems access and understand JavaScript-generated content, improving the likelihood that your website appears in AI-generated responses and summaries. This is particularly relevant for AmICited monitoring, because content that AI crawlers cannot read is unlikely to be cited in AI search results.

Popular dynamic rendering solutions include Prerender.io (paid service), Rendertron (open-source), Puppeteer, and specialized platforms like Nostra AI's Crawler Optimization. These tools detect bot user agents, generate static HTML versions of pages, and cache them for faster delivery. Implementation typically involves installing a renderer on your server, configuring user-agent detection middleware, and verifying the setup through Google Search Console's URL Inspection tool.

Dynamic rendering should not affect user experience, because visitors continue to receive the full, client-side rendered version of your website with all interactive elements, animations, and dynamic features intact. Users never see the static HTML version served to bots. The technique is designed specifically to improve bot crawlability without compromising the rich, interactive experience that human visitors expect.

Google recommended dynamic rendering in 2018 as a practical solution to JavaScript rendering limitations at scale. However, as of 2022, Google updated its guidance to clarify that dynamic rendering is a temporary workaround, not a long-term solution. The recommendation shift reflects Google's preference for more sustainable approaches like server-side rendering, static rendering, or hydration. Dynamic rendering remains valid for specific use cases but should be part of a broader performance optimization strategy rather than a standalone solution.
