Understanding Vector Embeddings: How AI Matches Content to Queries

Learn how vector embeddings enable AI systems to understand semantic meaning and match content to queries. Explore the technology behind semantic search and AI ...

An embedding is a numerical vector representation of text, images, or other data that captures semantic meaning and relationships in a multidimensional space. Embeddings convert complex, unstructured data into dense arrays of floating-point numbers that machine learning models can process, enabling AI systems to understand context, similarity, and meaning rather than relying on keyword matching alone.

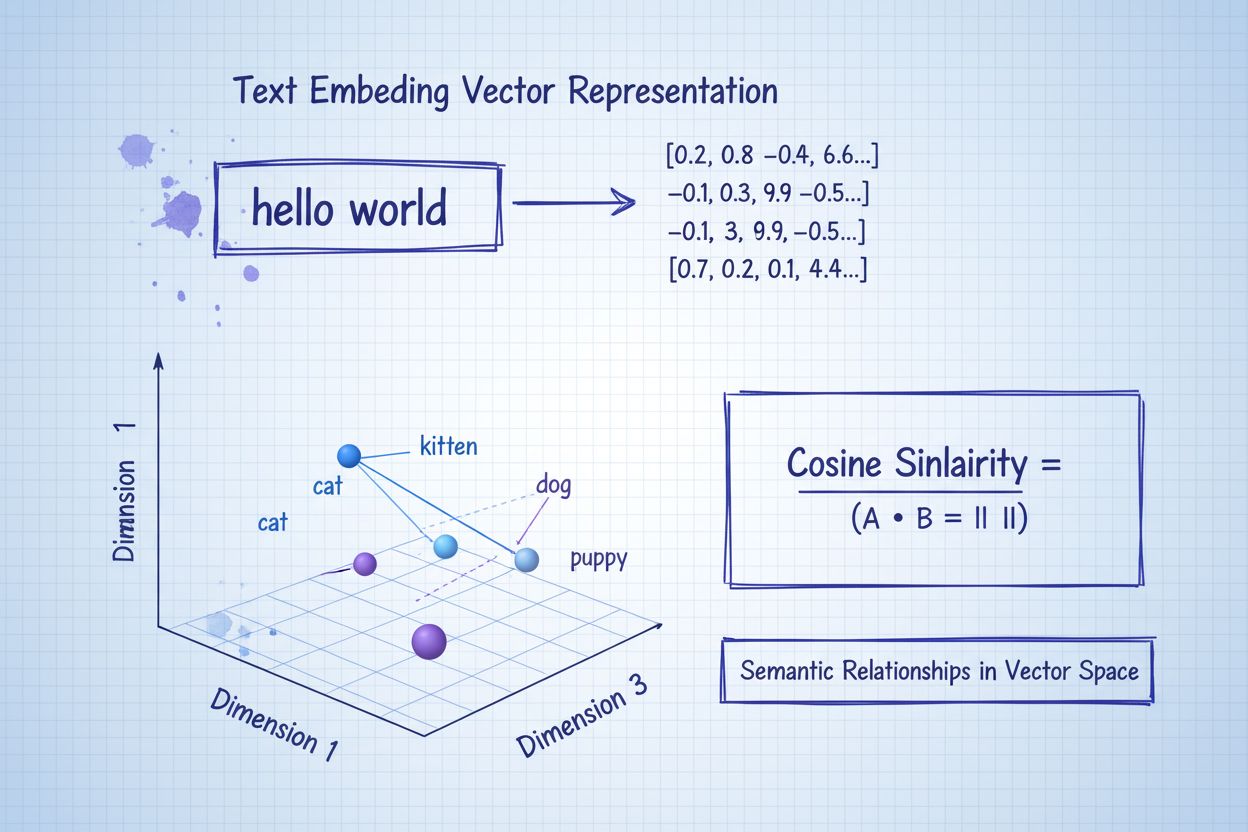

An embedding is a numerical vector representation of text, images, or other data that captures semantic meaning and relationships in a multidimensional space. Rather than treating text as discrete words to be matched, embeddings convert complex, unstructured information into dense arrays of floating-point numbers that machine learning models can process and compare. Each embedding is typically represented as a sequence of numbers such as [0.2, 0.8, -0.4, 0.6, …], where each number corresponds to a specific dimension or feature learned by the embedding model. The fundamental principle underlying embeddings is that semantically similar content produces mathematically similar vectors, enabling AI systems to understand context, measure similarity, and identify relationships without relying on exact keyword matching. This transformation from human-readable text to machine-interpretable numerical representations forms the foundation of modern AI applications, from semantic search engines to large language models and AI monitoring platforms that track brand citations across generative AI systems.

The concept of embeddings emerged from decades of research in natural language processing and machine learning, but gained widespread prominence with the introduction of Word2Vec in 2013, developed by researchers at Google. Word2Vec demonstrated that neural networks could learn meaningful word representations by predicting context words from a target word (Skip-gram) or a target word from its context (Continuous Bag of Words). This breakthrough showed that embeddings could capture semantic relationships: for example, the vector for “king” minus “man” plus “woman” lands close to the vector for “queen,” revealing that embeddings encode analogical relationships. Following Word2Vec’s success, researchers developed increasingly sophisticated embedding techniques, including GloVe (Global Vectors for Word Representation) in 2014, which leveraged global word co-occurrence statistics, and FastText from Facebook in 2016, which handled out-of-vocabulary words through character n-grams. The landscape changed again with the introduction of BERT (Bidirectional Encoder Representations from Transformers) in 2018, which produced contextualized embeddings that reflect how the same word carries different meanings in different contexts. Today, embeddings are ubiquitous in AI systems, and widely used transformer-based models commonly produce vectors of 384 to 1536 dimensions, depending on the model architecture and application requirements.
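The “king” minus “man” plus “woman” arithmetic can be sketched with a few hand-written vectors. The three-dimensional values below are hypothetical and chosen only for illustration; a trained model would learn its own values across hundreds of dimensions, and the `analogy` helper is an assumption of this sketch rather than part of any library.

```python
import math

# Hypothetical toy vectors, invented for this example only.
vectors = {
    "king":   [0.9, 0.8, 0.1],
    "queen":  [0.9, 0.1, 0.8],
    "man":    [0.1, 0.9, 0.1],
    "woman":  [0.1, 0.1, 0.9],
    "prince": [0.8, 0.9, 0.0],
    "apple":  [0.0, 0.3, 0.3],
}

def cosine(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def analogy(a, b, c, table):
    """Return the word closest to table[a] - table[b] + table[c].

    The three input words are skipped, which is the usual convention
    when evaluating word analogies.
    """
    target = [x - y + z for x, y, z in zip(table[a], table[b], table[c])]
    candidates = [word for word in table if word not in (a, b, c)]
    return max(candidates, key=lambda word: cosine(target, table[word]))

result = analogy("king", "man", "woman", vectors)
print(result)
```

With these toy values the nearest remaining word is "queen"; with real embeddings the result is only approximate and depends on the model and vocabulary.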

Embeddings are created through a machine learning process where neural networks learn to convert raw data into meaningful numerical representations. The process begins with preprocessing, where text is cleaned, tokenized, and prepared for the embedding model. The model then processes this input through multiple neural network layers, learning patterns and relationships within the data through training on large corpora. During training, the model adjusts its internal parameters to minimize a loss function, so that semantically similar items are mapped closer together in the vector space while dissimilar items are pushed apart. The resulting embeddings capture intricate details about the input, including semantic meaning, syntactic relationships, and contextual information. For text embeddings specifically, the model learns associations between words that frequently appear together, understanding that “neural” and “network” are closely related concepts, while “neural” and “pizza” are semantically distant. The individual numbers within an embedding vector are not meaningful in isolation; it is the relative values and relationships between them that encode semantic information. Modern embedding models like OpenAI’s text-embedding-ada-002 produce 1536-dimensional vectors, while BERT-base produces 768-dimensional embeddings, and sentence-transformers models like all-MiniLM-L6-v2 produce 384-dimensional vectors. The choice of dimensionality represents a tradeoff: higher dimensions can capture more nuanced semantic information but require more computational resources and storage space, while lower dimensions are more efficient but may lose subtle distinctions.
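The idea of a loss that pulls similar items together and pushes dissimilar items apart can be illustrated with a triplet margin loss. This is one common choice, not necessarily the loss used by any particular model named here; the two-dimensional vectors and the `margin` value are hypothetical.

```python
import math

def euclidean(a, b):
    """Straight-line distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def triplet_loss(anchor, positive, negative, margin=0.5):
    """Zero once the positive is closer to the anchor than the negative
    by at least `margin`; positive otherwise, which is the signal a
    training step would use to adjust the model."""
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)

# Hypothetical toy embeddings for the words used in the text.
neural = [1.0, 0.0]
network = [0.9, 0.1]
pizza = [0.0, 1.0]

# Related word near the anchor, unrelated word far away: nothing to fix.
well_placed = triplet_loss(neural, network, pizza)

# Roles swapped: the loss is large, so training would move the vectors.
badly_placed = triplet_loss(neural, pizza, network)

print(well_placed, badly_placed)
```

A real training loop would compute this loss over many triplets and update the network weights by gradient descent; the sketch only shows what the loss rewards.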

| Embedding Technique | Dimensionality | Training Approach | Strengths | Limitations |
|---|---|---|---|---|
| Word2Vec (Skip-gram) | 100-300 | Context prediction from target word | Fast training, captures semantic relationships, produces meaningful analogies | Static embeddings, doesn’t handle context variations, struggles with rare words |
| GloVe | 50-300 | Global co-occurrence matrix factorization | Combines local and global context, efficient training, good for general tasks | Requires pre-computed co-occurrence matrix, less contextual awareness than transformers |
| FastText | 100-300 | Character n-gram based word embeddings | Handles out-of-vocabulary words, captures morphological information, good for multiple languages | Larger model size, slower inference than Word2Vec |
| BERT | 768 | Bidirectional transformer with masked language modeling | Contextual embeddings, understands word sense disambiguation, state-of-the-art performance | Computationally expensive, requires fine-tuning for specific tasks, slower inference |
| Sentence-BERT | 384-768 | Siamese network with triplet loss | Optimized for sentence-level similarity, fast inference, excellent for semantic search | Requires specific training data, less flexible than BERT for custom tasks |
| OpenAI text-embedding-ada-002 | 1536 | Proprietary transformer-based model | Production-grade quality, handles long documents, optimized for retrieval tasks | Requires API access, commercial pricing, less transparency about training data |

The semantic space is a multidimensional mathematical landscape where embeddings are positioned based on their meaning and relationships. Imagine a vast coordinate system with hundreds or thousands of axes (dimensions), where each axis represents some aspect of semantic meaning learned by the embedding model. In this space, words and documents with similar meanings cluster together, while dissimilar concepts are positioned far apart. For example, the words “cat,” “kitten,” “feline,” and “pet” would be positioned close to each other because they share semantic properties related to domesticated animals. Conversely, “cat” and “car” would be positioned far apart because they have minimal semantic overlap. This spatial organization is not random: it emerges from the embedding model’s training process, where the model learns to position similar concepts near each other to minimize prediction errors. Semantic space captures not just direct similarities but also analogical relationships. The vector difference between “king” and “queen” is similar to the vector difference between “prince” and “princess,” revealing that the embedding model has learned abstract relationships about gender and royalty. When AI systems need to find similar documents, they measure closeness in this semantic space using metrics like cosine similarity, which is the cosine of the angle between two vectors. A cosine similarity of 1.0 indicates identical direction (maximal similarity), 0.0 indicates perpendicular vectors (no measurable relationship), and -1.0 indicates opposite directions, which is sometimes read as semantic opposition, although opposite direction does not guarantee opposite meaning.
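Cosine similarity is the dot product of two vectors divided by the product of their lengths. A minimal sketch in plain Python, using short hand-picked vectors to show the three reference cases from the paragraph above:

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors of equal length."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)

# Same direction, different length: magnitude does not affect the score.
same = cosine_similarity([1.0, 0.0], [2.0, 0.0])

# Perpendicular vectors.
perpendicular = cosine_similarity([1.0, 0.0], [0.0, 3.0])

# Opposite directions.
opposite = cosine_similarity([1.0, 0.0], [-1.0, 0.0])

print(same, perpendicular, opposite)
```

Because the score depends only on direction, many systems normalize embeddings to unit length once and then use a plain dot product at query time.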

Embeddings form the semantic backbone of large language models and modern AI systems, serving as the gateway where raw text transforms into machine-understandable numerical representations. When you interact with ChatGPT, Claude, or Perplexity, embeddings are working behind the scenes at multiple levels. First, when these models process your input text, they convert it into embeddings that capture the semantic meaning of your query. The model then uses these embeddings to understand context, retrieve relevant information, and generate appropriate responses. In Retrieval-Augmented Generation (RAG) systems, embeddings play a critical role in the retrieval phase. When a user asks a question, the system embeds the query and searches a vector database for documents with similar embeddings. These semantically relevant documents are then passed to the language model, which generates an answer grounded in the retrieved content. This approach significantly improves accuracy and reduces hallucinations because the model references authoritative external knowledge rather than relying solely on its training data. For AI monitoring and brand tracking platforms like AmICited, embeddings enable detection of brand mentions even when exact keywords aren’t used. By embedding both your brand content and AI-generated responses, these platforms can identify semantic matches and track how your brand appears across different AI systems. If an AI model discusses your company’s technology using different terminology, embeddings can still recognize the semantic similarity and flag it as a citation. This capability is increasingly important as AI systems become more sophisticated in paraphrasing and rephrasing information.
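The retrieval step of a RAG pipeline can be sketched as a nearest-neighbor lookup followed by prompt assembly. Everything concrete below is an assumption made for illustration: the three-dimensional vectors are hand-written stand-ins for the output of a real embedding model, the in-memory list stands in for a vector database, and the prompt wording is hypothetical.

```python
import math

def cosine(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def retrieve(query_vec, index, k=2):
    """Return the k (text, score) pairs most similar to the query vector."""
    scored = [(text, cosine(query_vec, vec)) for text, vec in index]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]

# Hypothetical pre-computed document embeddings (toy values).
index = [
    ("Tighten the packing nut to stop a dripping tap.", [0.9, 0.1, 0.0]),
    ("Replace the washer inside a leaking faucet.", [0.8, 0.2, 0.1]),
    ("Proof bread dough for two hours before baking.", [0.0, 0.1, 0.9]),
]

query = "how do I fix a leaky faucet"
query_vec = [0.85, 0.15, 0.05]  # stand-in for embedding the query text

top = retrieve(query_vec, index, k=2)

# The retrieved passages become context for the language model.
context = "\n".join(text for text, _ in top)
prompt = f"Answer using only this context:\n{context}\n\nQuestion: {query}"
print(prompt)
```

In a production system the linear scan would typically be replaced by an approximate nearest-neighbor index, and the prompt would be sent to a language model rather than printed.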

Embeddings power numerous practical applications across industries and use cases. Semantic search engines use embeddings to understand user intent rather than matching keywords, enabling searches like “how do I fix a leaky faucet” to return results about plumbing repairs even if those exact words don’t appear in the documents. Recommendation systems at Netflix, Amazon, and Spotify use embeddings to represent user preferences and item characteristics, enabling personalized suggestions by finding items with similar embeddings to those the user has previously enjoyed. Anomaly detection systems in cybersecurity and fraud prevention use embeddings to identify unusual patterns by comparing current behavior embeddings to normal behavior embeddings, flagging deviations that might indicate security threats or fraudulent activity. Machine translation systems use multilingual embeddings to map words and phrases from one language to another by positioning them in a shared semantic space, enabling translation without explicit language-to-language rules. Image recognition and computer vision applications use image embeddings generated by convolutional neural networks to classify images, detect objects, and enable reverse image search. Question-answering systems use embeddings to match user questions with relevant documents or pre-trained answers, enabling chatbots to provide accurate responses by finding semantically similar training examples. Content moderation systems use embeddings to identify toxic, harmful, or policy-violating content by comparing user-generated content embeddings to embeddings of known problematic content. The versatility of embeddings across these diverse applications demonstrates their fundamental importance to modern AI systems.
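As one concrete instance of the applications above, anomaly detection can be sketched as measuring how far a new embedding lies from the center of known-normal embeddings. The vectors and the `threshold` value are hypothetical; real systems would tune the threshold on held-out data and often use more robust statistics than a single centroid.

```python
import math

def centroid(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]

def is_anomalous(vec, center, threshold):
    """Flag a vector whose Euclidean distance from the center exceeds the threshold."""
    return math.dist(vec, center) > threshold

# Hypothetical embeddings of normal behavior (toy values).
normal_behavior = [[1.0, 0.0], [0.9, 0.1], [1.0, 0.1]]
center = centroid(normal_behavior)

typical_event = is_anomalous([0.95, 0.05], center, threshold=0.5)
unusual_event = is_anomalous([0.0, 1.0], center, threshold=0.5)

print(typical_event, unusual_event)
```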

Despite their power, embeddings face significant challenges in production environments. Scalability issues arise when managing billions of high-dimensional embeddings, as the “curse of dimensionality” causes search efficiency to degrade as dimensions increase. Traditional indexing methods struggle with high-dimensional data, though advanced techniques like Hierarchical Navigable Small-World (HNSW) graphs help mitigate this problem. Semantic drift occurs when embeddings become outdated as language evolves, user behavior changes, or domain-specific terminology shifts. For example, the word “virus” carries different semantic weight during a pandemic than during normal times, potentially affecting search results and recommendations. Addressing semantic drift requires regularly retraining embedding models, which demands significant computational resources and expertise. Computational costs for generating and processing embeddings remain substantial, particularly for training large models like BERT or CLIP, which requires high-performance GPUs and large datasets and can cost thousands of dollars. Even after training, real-time querying can strain infrastructure, especially in applications like autonomous driving where embeddings must be processed within milliseconds. Bias and fairness concerns arise because embeddings learn from training data that may contain societal biases, potentially perpetuating or amplifying discrimination in downstream applications. Interpretability challenges make it difficult to understand what specific dimensions in an embedding represent or why the model made particular similarity judgments. Storage requirements can also be substantial: storing embeddings for millions of documents requires significant database infrastructure. Organizations address these challenges through techniques like quantization (reducing precision from 32-bit to 8-bit), dimension truncation (keeping only the most important dimensions), and cloud-based infrastructure that scales on demand.
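The storage saving from 8-bit quantization follows from simple arithmetic, and the quantization itself can be sketched in a few lines. The scheme below is symmetric per-vector scalar quantization, which is one simple variant assumed for illustration; production systems may use other schemes. The function names are hypothetical, and dimension truncation is not covered here.

```python
def quantize_int8(vec):
    """Map floats to integer codes in [-127, 127] using one scale per vector."""
    max_abs = max(abs(x) for x in vec)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [round(x / scale) for x in vec]
    return codes, scale

def dequantize(codes, scale):
    """Approximate reconstruction of the original floats."""
    return [c * scale for c in codes]

def storage_bytes(n_vectors, dims, bytes_per_value):
    """Raw size of the vector values, ignoring index and metadata overhead."""
    return n_vectors * dims * bytes_per_value

vec = [0.2, 0.8, -0.4, 0.6]
codes, scale = quantize_int8(vec)
restored = dequantize(codes, scale)
max_error = max(abs(a - b) for a, b in zip(vec, restored))

# One million 1536-dimensional vectors at 32-bit vs 8-bit precision.
float32_size = storage_bytes(1_000_000, 1536, 4)
int8_size = storage_bytes(1_000_000, 1536, 1)
print(float32_size, int8_size, max_error)
```

The raw values shrink by a factor of four (6,144,000,000 bytes down to 1,536,000,000 bytes in this example), at the cost of a rounding error of at most about half the scale per component.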

The field of embeddings continues to evolve rapidly, with several emerging trends shaping the future of AI systems. Multimodal embeddings are becoming increasingly sophisticated, enabling seamless integration of text, images, audio, and video in shared vector spaces. Models like CLIP demonstrate the power of multimodal embeddings for tasks like image search from text descriptions or vice versa. Instruction-tuned embeddings are being developed to better understand specific types of queries and instructions, with specialized models outperforming general-purpose embeddings for domain-specific tasks like legal document search or medical literature retrieval. Efficient embeddings through quantization and pruning techniques are making embeddings more practical for edge devices and real-time applications, enabling embedding generation on smartphones and IoT devices. Adaptive embeddings that adjust their representations based on context or user preferences are emerging, potentially enabling more personalized and contextually relevant search and recommendation systems. Hybrid search approaches combining semantic similarity with traditional keyword matching are becoming standard practice, as research shows that combining both methods often outperforms either approach alone. Temporal embeddings that capture how meaning changes over time are being developed for applications requiring historical context awareness. Explainable embeddings research aims to make embedding models more interpretable, helping users understand why specific documents are considered similar. For AI monitoring and brand tracking, embeddings will likely become more sophisticated at detecting paraphrased citations, understanding context-specific brand mentions, and tracking how AI systems evolve their understanding of brands over time. As embeddings become more central to AI infrastructure, research into their efficiency, interpretability, and fairness will continue to accelerate.
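Hybrid search can be sketched as a weighted blend of a keyword score and a semantic score. The weighting `alpha`, the term-overlap keyword score, and the semantic score of 0.9 are all assumptions made for this example; real systems often use BM25 for the keyword side and may fuse rankings rather than raw scores.

```python
def keyword_score(query, document):
    """Fraction of distinct query terms that appear in the document."""
    query_terms = set(query.lower().split())
    doc_terms = set(document.lower().split())
    if not query_terms:
        return 0.0
    return len(query_terms & doc_terms) / len(query_terms)

def hybrid_score(semantic, keyword, alpha=0.7):
    """Weighted blend; alpha=1.0 is purely semantic, alpha=0.0 purely keyword.

    Assumes both inputs are already scaled to the range 0..1.
    """
    return alpha * semantic + (1 - alpha) * keyword

query = "data cleaning"
document = "handling missing values in tabular data"

kw = keyword_score(query, document)  # only "data" matches
semantic = 0.9                       # hypothetical cosine similarity from an embedding model
combined = hybrid_score(semantic, kw, alpha=0.7)
print(kw, combined)
```

Here the keyword score alone would rank the document poorly, while the blended score still reflects its semantic relevance.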

Understanding embeddings is particularly relevant for organizations using AI monitoring platforms like AmICited to track brand visibility across generative AI systems. Traditional monitoring approaches that rely on exact keyword matching miss many important citations because AI models often paraphrase or use different terminology when referencing brands and companies. Embeddings solve this problem by enabling semantic matching—when AmICited embeds both your brand content and AI-generated responses, it can identify when an AI system discusses your company or products even if the exact keywords don’t appear. This capability is crucial for comprehensive brand monitoring because it captures citations that keyword-based systems would miss. For example, if your company specializes in “machine learning infrastructure,” an AI system might describe your offering as “AI model deployment platforms” or “neural network optimization tools.” Without embeddings, these paraphrased references would go undetected. With embeddings, the semantic similarity between your brand description and the AI’s paraphrased version is recognized, ensuring you maintain visibility of how AI systems cite and reference your brand. As AI systems like ChatGPT, Perplexity, Google AI Overviews, and Claude become increasingly important sources of information, the ability to track brand mentions through semantic understanding rather than keyword matching becomes essential for maintaining brand visibility and ensuring citation accuracy in the age of generative AI.

Traditional keyword search matches exact words or phrases, missing semantically similar content that uses different terminology. Embeddings understand meaning by converting text into numerical vectors where similar concepts produce similar vectors. This enables semantic search to find relevant results even when exact keywords don't match, such as finding 'handling missing values' when searching for 'data cleaning.' According to research, 25% of adults in the US report that AI-powered search engines using embeddings deliver more precise results than traditional keyword search.

The semantic space is a multidimensional mathematical space where embeddings are positioned based on their meaning. Similar concepts cluster together in this space, while dissimilar concepts are positioned far apart. For example, words like 'cat' and 'kitten' would be positioned close together because they share semantic properties, while 'cat' and 'car' would be distant. This spatial organization enables algorithms to measure similarity using distance metrics like cosine similarity, allowing AI systems to find related content efficiently.

Popular embedding models include Word2Vec (which learns word relationships from context), BERT (which understands contextual meaning by considering surrounding words), GloVe (which uses global word co-occurrence statistics), and FastText (which handles out-of-vocabulary words through character n-grams). Modern systems also use OpenAI's text-embedding-ada-002 (1536 dimensions) and Sentence-BERT for sentence-level embeddings. Output dimensionality varies by model: BERT-base uses 768 dimensions, while other models produce 384- or 1024-dimensional vectors depending on their architecture.

RAG systems use embeddings to retrieve relevant documents before generating responses. When a user asks a question, the system embeds the query and searches a vector database for documents with similar embeddings. These retrieved documents are then passed to a language model, which generates an informed answer grounded in the retrieved content. This approach significantly improves accuracy and reduces hallucinations in AI responses by ensuring the model references authoritative external knowledge rather than relying solely on training data.

Cosine similarity measures the angle between two embedding vectors, ranging from -1 to 1, where 1 indicates identical direction (perfect similarity) and -1 indicates opposite direction. It's the standard metric for comparing embeddings because it focuses on semantic meaning and direction rather than magnitude. Cosine similarity is computationally efficient and works well in high-dimensional spaces, making it ideal for finding similar documents, recommendations, and semantic relationships in AI systems.

Embeddings power AI monitoring platforms by converting brand mentions, URLs, and content into numerical vectors that can be compared semantically. This allows systems to detect when AI models cite or reference your brand even when exact keywords aren't used. By embedding both your brand content and AI-generated responses, monitoring platforms can identify semantic matches, track how your brand appears across ChatGPT, Perplexity, Google AI Overviews, and Claude, and measure citation accuracy and context.

Key challenges include scalability issues with billions of high-dimensional embeddings, semantic drift where embeddings become outdated as language evolves, and significant computational costs for training and inference. The 'curse of dimensionality' makes search less efficient as dimensions increase, and maintaining embedding quality requires regular model retraining. Solutions include using advanced indexing techniques like HNSW graphs, quantization to reduce storage, and cloud-based GPU infrastructure for cost-effective scaling.

Dimensionality reduction techniques like Principal Component Analysis (PCA) compress high-dimensional embeddings into lower dimensions (typically 2D or 3D) for visualization and analysis. While embeddings typically have hundreds or thousands of dimensions, humans can't visualize beyond 3D. Dimensionality reduction preserves the most important information while making patterns visible. For example, reducing 384-dimensional embeddings to 2D can retain 41% of variance while clearly showing how documents cluster by topic, helping data scientists understand what the embedding model has learned.
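A minimal PCA sketch using NumPy's singular value decomposition shows how the 2D projection and the retained variance are computed. The input here is random data standing in for real embeddings, so the retained variance it reports is not comparable to figures for real document embeddings; `pca_project` is a hypothetical helper name.

```python
import numpy as np

def pca_project(X, n_components=2):
    """Project rows of X onto the top principal components.

    Returns the projected points and the fraction of total variance
    explained by each kept component.
    """
    X = np.asarray(X, dtype=float)
    centered = X - X.mean(axis=0)
    # Rows of Vt are the principal directions, ordered by singular value.
    _, singular_values, Vt = np.linalg.svd(centered, full_matrices=False)
    variance = singular_values ** 2
    explained_ratio = variance / variance.sum()
    projected = centered @ Vt[:n_components].T
    return projected, explained_ratio[:n_components]

# Random stand-in for 200 embeddings of 384 dimensions each.
rng = np.random.default_rng(0)
embeddings = rng.normal(size=(200, 384))

points_2d, ratio = pca_project(embeddings, n_components=2)
print(points_2d.shape, float(ratio.sum()))
```

The resulting `points_2d` array can be passed to any plotting library; the sum of `ratio` is the share of variance that survives the reduction.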
