Enterprise AI Visibility Solutions: Choosing the Right Platform

Complete guide to enterprise AI visibility solutions. Compare top platforms like Conductor, Profound, and Athena. Learn evaluation criteria and selection strate...

Enterprise AI Visibility Strategy refers to the comprehensive approach organizations implement to monitor, track, and understand all artificial intelligence systems, models, and applications operating within their infrastructure. This strategy encompasses the ability to see which AI systems are in use, how they perform, who uses them, and what risks they present across the entire organization. For large enterprises managing hundreds or thousands of AI implementations, visibility is critical because shadow AI (unauthorized or undocumented AI tools) can proliferate rapidly without proper oversight. The stakes are high: 85% of enterprises now use AI in some capacity, yet only 11% report clear business value, a significant gap between deployment and effective management. Without comprehensive visibility, organizations cannot ensure compliance, manage risks, optimize performance, or derive maximum value from their AI investments.

Enterprise AI visibility operates across three interconnected dimensions that together provide complete organizational awareness of AI systems and their impact. The first dimension, usage monitoring, tracks which AI systems are deployed, who accesses them, how frequently they’re used, and for what business purposes. The second dimension, quality monitoring, ensures that AI models perform as intended, maintain accuracy standards, and don’t degrade over time due to data drift or model decay. The third dimension, security monitoring, protects against unauthorized access, data breaches, and prompt injection attacks, and ensures compliance with regulatory requirements. These three dimensions must work in concert, supported by centralized logging, real-time dashboards, and automated alerting. Organizations that implement visibility across all three dimensions report significantly better governance outcomes and faster incident response times.

| Dimension | Purpose | Key Metrics |
|---|---|---|
| Usage Monitoring | Track AI system deployment and utilization patterns | Active users, API calls, model versions, business unit adoption |
| Quality Monitoring | Ensure model performance and reliability | Accuracy, precision, recall, prediction drift, latency |
| Security Monitoring | Protect against threats and ensure compliance | Access logs, anomalies detected, policy violations, audit trails |
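The Quality Monitoring row lists prediction drift as a key metric. One widely used way to quantify drift is the Population Stability Index (PSI), which compares a baseline score distribution with a recent one. The sketch below is a minimal, illustrative version under stated assumptions: it uses equal-width bins over the combined range (many implementations bin on baseline quantiles instead), the function names are hypothetical, and the 0.25 alert threshold is a common rule of thumb rather than a standard.

```python
import math
from typing import Sequence


def population_stability_index(
    expected: Sequence[float],
    actual: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """PSI between a baseline (expected) and a recent (actual) distribution.

    Uses equal-width bins over the combined range; larger values mean more drift.
    """
    lo = min(min(expected), min(actual))
    hi = max(max(expected), max(actual))
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if every value is identical

    def proportions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = min(int((v - lo) / width), bins - 1)  # keep the maximum in the last bin
            counts[idx] += 1
        # Floor empty bins at eps so the log term below is always defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drift_alert(psi: float, threshold: float = 0.25) -> bool:
    """Hypothetical alert rule: flag when PSI exceeds a rule-of-thumb threshold."""
    return psi > threshold


baseline = [i / 100 for i in range(100)]      # scores at validation time
recent = [0.5 + i / 200 for i in range(100)]  # scores shifted toward the upper range
psi = population_stability_index(baseline, recent)
print(f"PSI={psi:.2f}, alert={drift_alert(psi)}")
```

In practice the baseline would be the model's validation-time scores and `recent` a rolling window from production logs, with the boolean alert feeding whatever automated alerting system the organization already runs.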

Organizations face substantial obstacles when attempting to implement comprehensive AI visibility across large, complex environments. Key visibility challenges include:

- Shadow AI: perhaps the most significant challenge. Employees and departments deploy AI tools without IT knowledge or approval, creating blind spots that prevent centralized monitoring and governance.
- Data silos: information fragmented across departments makes it impossible to correlate AI usage patterns or to identify duplicate efforts and wasted resources.
- Integration complexity: visibility tools must connect with legacy systems, cloud platforms, and diverse AI frameworks that weren’t designed with monitoring in mind.
- Regulatory fragmentation: different jurisdictions require different visibility standards, creating compliance complexity that demands flexible, adaptable monitoring infrastructure.
- Governance and data quality gaps: 84% of IT leaders report lacking a formal governance process, and 72% of organizations report data quality issues that undermine the reliability of visibility metrics themselves.

Effective enterprise AI visibility requires alignment with established governance frameworks and standards that provide structure and credibility to monitoring efforts. The NIST AI Risk Management Framework (RMF) offers a comprehensive approach to identifying, measuring, and managing AI risks, providing a foundation for visibility requirements across all organizational functions. ISO/IEC 42001 is the international standard for AI management systems, with requirements for monitoring, documentation, and continuous improvement that align with visibility objectives. The EU AI Act imposes strict transparency and documentation requirements for high-risk AI systems, mandating detailed records of AI system behavior and decision-making processes. Industry-specific frameworks add further requirements: financial services organizations must comply with banking regulators’ AI governance expectations, healthcare organizations must meet FDA requirements for clinical AI systems, and government agencies must adhere to federal AI governance directives. Organizations should select frameworks appropriate to their industry, geography, and risk profile, then build visibility infrastructure that demonstrates compliance with the chosen standards.

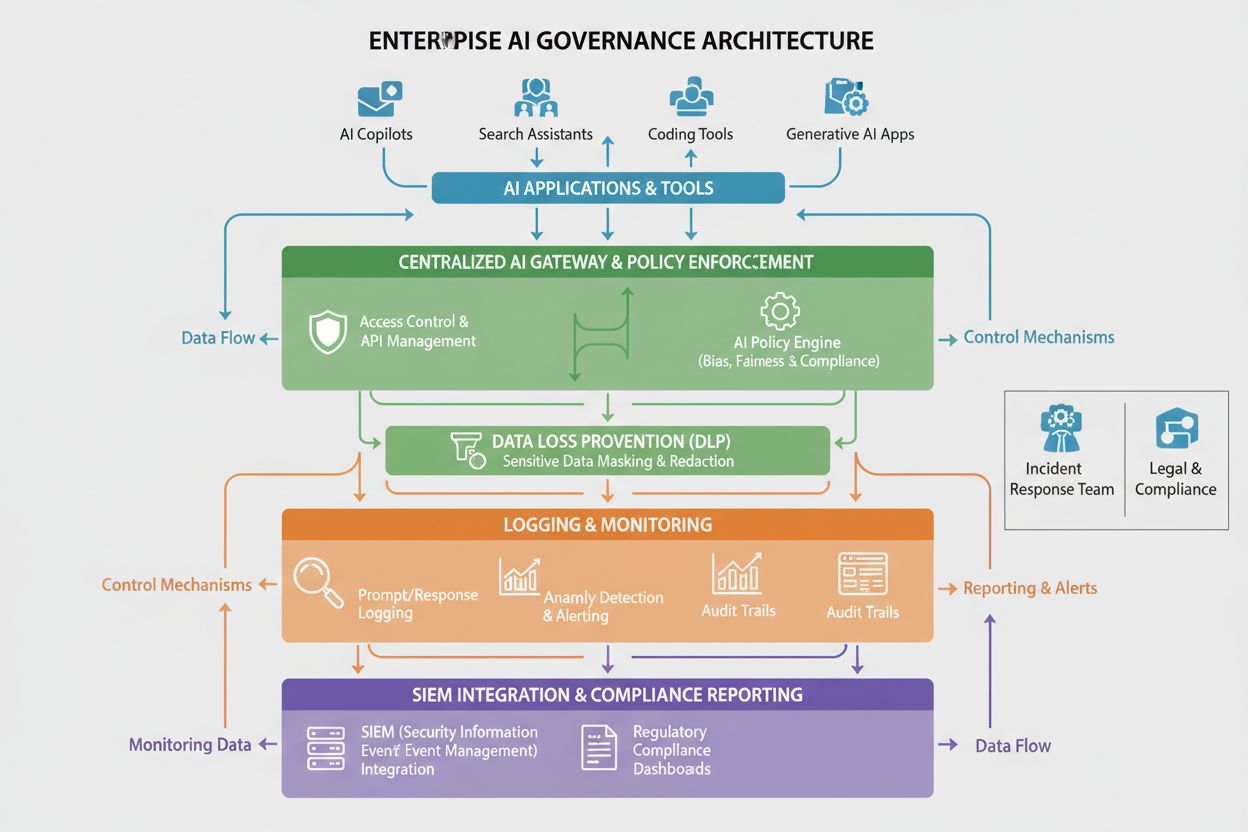

Implementing enterprise AI visibility requires a robust technical foundation that captures, processes, and presents data about AI system behavior and performance. Centralized AI platforms serve as the backbone of visibility infrastructure, providing a single pane of glass where organizations can monitor all AI systems regardless of where they’re deployed. AI gateways act as intermediaries between applications and AI services, capturing metadata about every request and response and enabling detailed usage tracking and security monitoring. Comprehensive logging systems record AI system activities, model predictions, user interactions, and performance metrics in centralized repositories that support audit trails and forensic analysis. Data Loss Prevention (DLP) tools monitor AI systems for attempts to exfiltrate sensitive data, preventing confidential information from being used in training or returned in responses. Security information and event management (SIEM) integration feeds AI visibility data into the organization’s broader security monitoring, enabling correlation of AI-related security events with other organizational threats. Organizations implementing these technical components report a 30% reduction in incident response time when AI-related security issues occur. Platforms like Liminal, Ardoq, and Knostic provide governance-focused visibility solutions, while AmICited.com specializes in monitoring AI answer quality across GPTs, Perplexity, and Google AI Overviews.
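To make the gateway and DLP ideas concrete, here is a minimal sketch of a gateway wrapper that redacts sensitive patterns before a prompt reaches a model, redacts the response on the way back, and writes a metadata-only record to a log. Everything here is hypothetical: `model_call` stands in for any AI service client, the in-memory list stands in for a centralized log store or SIEM feed, and the two regex patterns are illustrative; commercial DLP tools use much richer detection.

```python
import hashlib
import re
import time
from dataclasses import dataclass, field
from typing import Callable

# Illustrative DLP patterns only; real DLP tools use much richer detection.
DLP_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str) -> tuple[str, list[str]]:
    """Replace sensitive matches with placeholders and report which rules fired."""
    findings = []
    for name, pattern in DLP_PATTERNS.items():
        if pattern.search(text):
            findings.append(name)
            text = pattern.sub(f"[REDACTED:{name}]", text)
    return text, findings


@dataclass
class AIGateway:
    model_call: Callable[[str], str]  # hypothetical stand-in for any AI service client
    log: list[dict] = field(default_factory=list)  # stand-in for a central log store

    def complete(self, user: str, business_unit: str, model: str, prompt: str) -> str:
        safe_prompt, prompt_hits = redact(prompt)  # DLP on the way in
        start = time.perf_counter()
        response = self.model_call(safe_prompt)
        latency_ms = (time.perf_counter() - start) * 1000
        safe_response, response_hits = redact(response)  # DLP on the way out
        # Record metadata rather than raw content; the hash lets auditors
        # match a record to a stored (redacted) prompt later.
        self.log.append({
            "ts": time.time(),
            "user": user,
            "business_unit": business_unit,
            "model": model,
            "prompt_sha256": hashlib.sha256(safe_prompt.encode()).hexdigest(),
            "latency_ms": round(latency_ms, 2),
            "dlp_findings": prompt_hits + response_hits,
        })
        return safe_response


gateway = AIGateway(model_call=lambda p: "Echo: " + p)  # fake model for the demo
reply = gateway.complete("alice", "finance", "demo-model", "My SSN is 123-45-6789")
print(reply)
print(gateway.log[0]["dlp_findings"])
```

Logging metadata (user, business unit, model, latency, DLP findings) rather than raw prompts keeps the usage and security records useful without turning the log itself into a store of sensitive data.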

Successful enterprise AI visibility requires clear organizational structures and defined roles that distribute responsibility for monitoring and governance across the organization. An AI Governance Committee typically serves as the executive body overseeing AI visibility strategy, setting policies, and ensuring alignment with business objectives and regulatory requirements. Model Owners take responsibility for specific AI systems, ensuring they’re properly documented, monitored, and maintained according to organizational standards. AI Champions embedded within business units serve as liaisons between IT governance teams and end users, promoting visibility practices and identifying shadow AI before it becomes unmanageable. Data stewards manage the quality and accessibility of data used to train and monitor AI systems, ensuring that visibility metrics themselves are reliable and trustworthy. Security and compliance teams establish monitoring requirements, conduct audits, and ensure that visibility infrastructure meets regulatory obligations. Clear accountability structures ensure that visibility isn’t treated as an IT-only responsibility but rather as a shared organizational commitment requiring participation from business, technical, and governance functions.

Organizations must establish clear key performance indicators (KPIs) and measurement frameworks to assess whether their AI visibility strategy is delivering value and supporting organizational objectives. Visibility coverage measures the percentage of AI systems that are documented and monitored, with mature organizations targeting 95%+ coverage of all AI deployments. Governance maturity tracks progress through defined stages—from ad-hoc monitoring to standardized processes to optimized, automated governance—using frameworks like the CMMI model adapted for AI governance. Incident detection and response metrics measure how quickly organizations identify and respond to AI-related issues, with improvements in detection speed and response time indicating more effective visibility. Compliance adherence tracks the percentage of AI systems meeting regulatory requirements and internal standards, with audit findings and remediation timelines serving as key metrics. Business value realization measures whether visibility investments translate to tangible benefits such as reduced risk, improved model performance, faster time-to-market for AI initiatives, or better resource allocation. Organizations should implement real-time dashboards that display these metrics to stakeholders, enabling continuous monitoring and rapid course correction when visibility gaps emerge.
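Several of these KPIs reduce to simple arithmetic over an AI registry and an incident log. The sketch below assumes hypothetical `AISystem` and `Incident` records and computes visibility coverage, compliance adherence, and mean time to detect and respond (MTTD/MTTR); the 95% comparison mirrors the coverage target mentioned above.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import mean


@dataclass
class AISystem:
    name: str
    documented: bool
    monitored: bool
    compliant: bool  # meets regulatory and internal standards


@dataclass
class Incident:
    occurred: datetime
    detected: datetime
    resolved: datetime


def visibility_coverage(systems: list[AISystem]) -> float:
    """Percentage of known AI systems that are both documented and monitored."""
    covered = sum(1 for s in systems if s.documented and s.monitored)
    return 100.0 * covered / len(systems)


def compliance_adherence(systems: list[AISystem]) -> float:
    """Percentage of known AI systems meeting requirements."""
    return 100.0 * sum(1 for s in systems if s.compliant) / len(systems)


def mttd_hours(incidents: list[Incident]) -> float:
    """Mean time to detect: occurrence to detection, in hours."""
    return mean((i.detected - i.occurred).total_seconds() / 3600 for i in incidents)


def mttr_hours(incidents: list[Incident]) -> float:
    """Mean time to respond: detection to resolution, in hours."""
    return mean((i.resolved - i.detected).total_seconds() / 3600 for i in incidents)


systems = [
    AISystem("support-chatbot", True, True, True),
    AISystem("credit-scoring", True, True, False),
    AISystem("doc-summarizer", True, False, False),
    AISystem("sales-forecast", False, False, False),
]
incidents = [
    Incident(datetime(2024, 1, 1, 0), datetime(2024, 1, 1, 2), datetime(2024, 1, 1, 6)),
    Incident(datetime(2024, 1, 2, 0), datetime(2024, 1, 2, 4), datetime(2024, 1, 2, 12)),
]
coverage = visibility_coverage(systems)
print(f"coverage={coverage:.0f}% (target met: {coverage >= 95.0})")
print(f"compliance={compliance_adherence(systems):.0f}%")
print(f"MTTD={mttd_hours(incidents):.1f}h, MTTR={mttr_hours(incidents):.1f}h")
```

One caveat on the design: coverage can only be measured against systems the organization already knows about, so undiscovered shadow AI inflates the number. That is one reason inventory and discovery come before metrics.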

Different industries face unique AI visibility requirements driven by regulatory environments, risk profiles, and business models that demand tailored monitoring approaches. Financial services organizations must comply with banking regulators’ expectations for AI governance, including detailed monitoring of AI systems used in lending decisions, fraud detection, and trading algorithms, with particular emphasis on bias detection and fairness metrics. Healthcare organizations must meet FDA requirements for clinical AI systems, including validation of model performance, monitoring for safety issues, and documentation of how AI systems influence clinical decisions. Legal organizations using AI for contract analysis, legal research, and due diligence must ensure visibility into model training data to prevent confidentiality breaches and maintain attorney-client privilege. Government agencies must comply with federal AI governance directives, including transparency requirements, bias auditing, and documentation of AI system decision-making for public accountability. Retail and e-commerce organizations must monitor AI systems used in recommendation engines and personalization for compliance with consumer protection regulations and fair competition laws. Manufacturing organizations must track AI systems used in quality control and predictive maintenance to ensure safety and reliability. Industry-specific visibility requirements should be incorporated into governance frameworks rather than treated as separate compliance exercises.
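For the bias detection and fairness metrics mentioned for financial services, one simple and common check is the disparate impact ratio: the favorable-outcome rate for one group divided by the rate for a reference group. The sketch below is illustrative only. The group labels and decisions are hypothetical, and the 0.8 cutoff borrows the "four-fifths rule" from US employment-selection guidelines as an example threshold, not as a lending-specific regulatory standard.

```python
from collections import defaultdict


def approval_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Favorable-outcome rate per group from (group, approved) pairs."""
    totals: dict[str, int] = defaultdict(int)
    approved: dict[str, int] = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact_ratio(decisions, protected: str, reference: str) -> float:
    """Rate for the protected group divided by the rate for the reference group."""
    rates = approval_rates(decisions)
    return rates[protected] / rates[reference]


# Hypothetical lending decisions: group A approved 8/10, group B approved 5/10.
decisions = [("A", i < 8) for i in range(10)] + [("B", i < 5) for i in range(10)]
ratio = disparate_impact_ratio(decisions, protected="B", reference="A")
needs_review = ratio < 0.8  # illustrative four-fifths cutoff
print(f"ratio={ratio:.3f}, needs_review={needs_review}")
```

A single ratio is a screening signal, not a verdict; a flagged model would normally go to the Model Owner and compliance team for deeper analysis.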

Organizations implementing enterprise AI visibility should adopt a phased approach that delivers quick wins while building toward comprehensive, mature governance capabilities. Start with inventory and documentation—conduct an audit to identify all AI systems currently in use, including shadow AI, and create a centralized registry documenting each system’s purpose, owner, data sources, and business criticality. Identify quick wins by focusing initial monitoring efforts on high-risk systems such as customer-facing AI, systems processing sensitive data, or models making consequential decisions about individuals. Implement centralized logging as a foundational capability that captures metadata about all AI system activities, enabling both real-time monitoring and historical analysis. Establish governance policies that define standards for AI system documentation, monitoring, and compliance, then communicate these policies clearly to all stakeholders. Build cross-functional teams that include IT, security, business, and compliance representatives, ensuring that visibility initiatives address concerns from all organizational perspectives. Measure and communicate progress by tracking visibility metrics and sharing results with leadership, demonstrating the value of governance investments and building organizational support for continued investment in AI visibility infrastructure.
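A centralized registry can start as a simple typed record per system. The sketch below assumes hypothetical field names that mirror the ones listed above (purpose, owner, data sources, business criticality) plus three risk flags for the high-risk categories, and ranks entries with an illustrative weighted score to pick quick-win candidates. The weights are arbitrary placeholders that each organization would calibrate for itself.

```python
from dataclasses import dataclass, field
from enum import IntEnum
from typing import Optional


class Criticality(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class RegistryEntry:
    name: str
    purpose: str
    owner: Optional[str]  # None = no accountable owner yet (a shadow AI warning sign)
    data_sources: list[str] = field(default_factory=list)
    criticality: Criticality = Criticality.LOW
    customer_facing: bool = False
    sensitive_data: bool = False
    consequential_decisions: bool = False


def risk_score(e: RegistryEntry) -> int:
    """Illustrative weighting; each organization would calibrate its own."""
    return (
        int(e.criticality)
        + 2 * int(e.customer_facing)
        + 2 * int(e.sensitive_data)
        + 3 * int(e.consequential_decisions)
    )


def quick_wins(registry: list[RegistryEntry], top_n: int = 3) -> list[RegistryEntry]:
    """Highest-risk systems first: candidates for initial monitoring."""
    return sorted(registry, key=risk_score, reverse=True)[:top_n]


def unowned(registry: list[RegistryEntry]) -> list[RegistryEntry]:
    """Entries with no accountable owner."""
    return [e for e in registry if e.owner is None]


registry = [
    RegistryEntry("support-chatbot", "customer support", "cx-team", ["tickets"],
                  Criticality.HIGH, customer_facing=True, sensitive_data=True),
    RegistryEntry("credit-scoring", "loan decisions", "risk-team", ["bureau-data"],
                  Criticality.HIGH, sensitive_data=True, consequential_decisions=True),
    RegistryEntry("doc-summarizer", "internal notes", None, ["wiki"]),
    RegistryEntry("marketing-copy", "ad drafts", "marketing", [],
                  Criticality.MEDIUM, customer_facing=True),
]
print([e.name for e in quick_wins(registry, top_n=2)])
print([e.name for e in unowned(registry)])
```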

AI visibility is the ability to see and monitor what AI systems are doing, while AI governance is the broader framework of policies, processes, and controls that manage how AI systems are developed, deployed, and used. Visibility is a foundational component of governance—you cannot govern what you cannot see. Effective AI governance requires comprehensive visibility across all three dimensions: usage, quality, and security monitoring.

Large organizations face unique challenges managing hundreds or thousands of AI implementations across multiple departments, cloud providers, and business units. Without comprehensive visibility, shadow AI proliferates, compliance risks increase, and organizations cannot optimize AI investments or ensure responsible AI use. Visibility enables organizations to identify risks, enforce policies, and derive maximum value from AI initiatives.

Poor AI visibility creates multiple risks: shadow AI systems operate without oversight, sensitive data may be exposed through unmonitored AI systems, compliance violations go undetected, model performance degradation isn't identified, security threats aren't detected, and organizations cannot demonstrate governance to regulators. These risks can result in data breaches, regulatory fines, reputational damage, and loss of customer trust.

Shadow AI—unauthorized AI tools deployed without IT knowledge—creates blind spots that prevent centralized monitoring and governance. Employees may use public AI services like ChatGPT without organizational oversight, potentially exposing sensitive data or violating compliance requirements. Shadow AI also leads to duplicate efforts, wasted resources, and inability to enforce organizational AI policies and standards.
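One common way to surface shadow AI is to compare outbound web traffic against a list of known AI service hostnames. The sketch below assumes proxy logs are available as `(user, department, url)` tuples; the watchlist and the approved list are illustrative assumptions, and matching is on exact hostnames only, so a real deployment would need a maintained list and subdomain handling.

```python
from collections import Counter
from urllib.parse import urlparse

# Illustrative watchlist of public AI service hostnames (an assumption, not exhaustive).
KNOWN_AI_HOSTS = {
    "chatgpt.com",
    "api.openai.com",
    "api.anthropic.com",
    "gemini.google.com",
    "www.perplexity.ai",
}
# Hypothetical: hosts covered by an approved enterprise agreement.
APPROVED_AI_HOSTS = {"api.openai.com"}


def find_shadow_ai(proxy_log):
    """Count unapproved AI-service requests per (department, host).

    proxy_log: iterable of (user, department, url) tuples.
    """
    hits = Counter()
    for _user, department, url in proxy_log:
        host = urlparse(url).hostname or ""  # exact hostname match only
        if host in KNOWN_AI_HOSTS and host not in APPROVED_AI_HOSTS:
            hits[(department, host)] += 1
    return hits


proxy_log = [
    ("alice", "finance", "https://chatgpt.com/c/123"),
    ("bob", "finance", "https://chatgpt.com/"),
    ("carol", "legal", "https://www.perplexity.ai/search?q=contract"),
    ("dave", "engineering", "https://api.openai.com/v1/chat/completions"),
    ("erin", "engineering", "https://example.com/"),
]
for (department, host), count in find_shadow_ai(proxy_log).most_common():
    print(department, host, count)
```

Grouping hits by department rather than by individual user keeps the output oriented toward governance conversations (which teams need an approved alternative) rather than individual enforcement.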

Purpose-built AI governance platforms like Liminal, Ardoq, and Knostic provide centralized monitoring, policy enforcement, and compliance reporting. These platforms integrate with AI services, capture detailed logs, detect anomalies, and provide dashboards for governance teams. Additionally, AmICited specializes in monitoring how AI systems reference your brand across GPTs, Perplexity, and Google AI Overviews, providing visibility into AI answer quality.

Regulatory frameworks such as the EU AI Act, GDPR, and CCPA, along with industry-specific rules from regulators such as the OCC (banking) and the FDA (healthcare), mandate specific visibility and documentation requirements. Organizations must implement monitoring that demonstrates compliance with these regulations, including audit trails, bias testing, performance monitoring, and documentation of AI system decision-making. Visibility infrastructure must be designed to meet these regulatory obligations.
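Audit trails are more credible to regulators when tampering is detectable. A minimal sketch of one common technique, hash chaining, follows: each entry stores the SHA-256 hash of its content plus the previous entry's hash, so altering an earlier record breaks verification. The `AuditTrail` class and the event fields are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(event: dict, prev_hash: str) -> str:
    # sort_keys gives a canonical serialization so the hash is reproducible.
    body = json.dumps({"event": event, "prev_hash": prev_hash}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


@dataclass
class AuditTrail:
    entries: list[dict] = field(default_factory=list)

    def append(self, event: dict) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        entry = {"event": dict(event), "prev_hash": prev_hash,
                 "hash": _digest(event, prev_hash)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; edits, reordering, or deletions before the tail fail."""
        prev_hash = GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash:
                return False
            if entry["hash"] != _digest(entry["event"], prev_hash):
                return False
            prev_hash = entry["hash"]
        return True


trail = AuditTrail()
trail.append({"system": "credit-scoring", "model_version": "1.4", "decision": "deny"})
trail.append({"system": "credit-scoring", "model_version": "1.4", "decision": "approve"})
print(trail.verify())
trail.entries[0]["event"]["decision"] = "approve"  # simulate tampering
print(trail.verify())
```

Hash chaining makes edits detectable, not impossible: someone with write access could rebuild the whole chain or silently drop the newest entries, so production systems typically also anchor the latest hash in write-once storage or an external timestamping service.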

Key metrics include: visibility coverage (percentage of AI systems documented and monitored), governance maturity (progress through defined governance stages), incident detection and response time, compliance adherence (percentage of systems meeting regulatory requirements), and business value realization (tangible benefits from visibility investments). Organizations should also track usage metrics (active users, API calls), quality metrics (accuracy, drift), and security metrics (anomalies detected, policy violations).

Implementation timelines vary based on organizational size and complexity. Initial visibility infrastructure (inventory, basic logging, dashboards) can be established in 3-6 months. Achieving comprehensive visibility across all AI systems typically requires 6-12 months. Reaching mature, optimized governance capabilities usually takes 12-24 months. Organizations should adopt a phased approach, starting with high-risk systems and quick wins, then expanding to comprehensive coverage.
