How AI Understands Entities: Technical Deep Dive

Explore how AI systems recognize and process entities in text. Learn about NER models, transformer architectures, and real-world applications of entity understa...

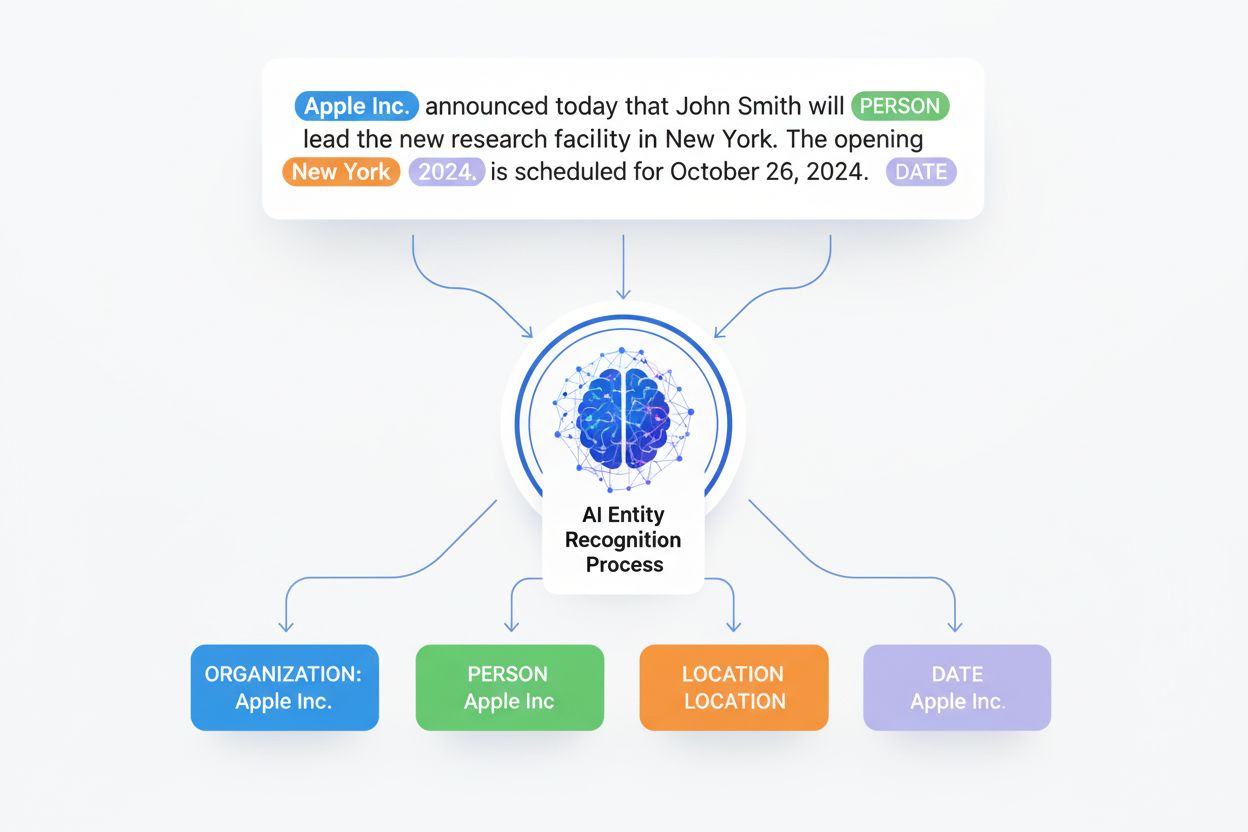

Entity Recognition is an AI capability that identifies and categorizes named entities (such as people, organizations, locations, and dates) within unstructured text. This fundamental Natural Language Processing task converts raw text into structured data by automatically detecting meaningful information and assigning it to predefined categories, enabling AI systems to understand and extract critical information from documents.

Entity Recognition is a fundamental capability within Artificial Intelligence and Natural Language Processing (NLP) that automatically identifies and categorizes named entities within unstructured text. Named entities are specific, meaningful pieces of information such as person names, organizational titles, geographic locations, dates, monetary values, and other predefined categories. The primary purpose of Entity Recognition is to convert raw, unstructured textual data into structured, machine-readable information that AI systems can process, analyze, and leverage for downstream applications. This capability has become increasingly critical as organizations seek to extract actionable intelligence from vast amounts of textual content, particularly in the context of AI monitoring and brand visibility tracking across multiple AI platforms.

The significance of Entity Recognition extends beyond simple text parsing. It serves as a foundational layer for numerous advanced NLP tasks, including sentiment analysis, information extraction, knowledge graph construction, and semantic search. By accurately identifying entities and their relationships within text, Entity Recognition enables AI systems to understand context, disambiguate meaning, and provide more intelligent responses. For platforms like AmICited, which monitor brand and domain appearances in AI-generated responses, Entity Recognition is essential for tracking how entities are mentioned, cited, and contextualized across different AI systems including ChatGPT, Perplexity, Google AI Overviews, and Claude.

Entity Recognition emerged as a distinct research area in the 1990s within the Information Extraction community, initially driven by the need to automatically populate databases from unstructured news articles and documents. Early systems relied heavily on rule-based approaches, utilizing hand-crafted linguistic patterns and domain-specific dictionaries to identify entities. These pioneering systems, while effective for well-defined domains, suffered from limited scalability and struggled with ambiguous or novel entity types. The field experienced significant advancement with the introduction of machine learning-based methods in the early 2000s, which enabled systems to learn entity patterns from annotated training data rather than relying on manually crafted rules.

The landscape of Entity Recognition transformed dramatically with the emergence of deep learning technologies in the 2010s. Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) networks demonstrated superior performance by capturing sequential dependencies in text, while Conditional Random Fields (CRF), often used as the output layer on top of these networks, provided a probabilistic framework for sequence labeling. The introduction of the Transformer architecture in 2017 revolutionized the field, enabling models like BERT, RoBERTa, and GPT to achieve unprecedented accuracy levels. BERT-LSTM hybrid models have been reported to achieve F1-scores of 0.91 across diverse entity types, a substantial improvement over earlier approaches. Today, the global NLP market, which relies heavily on Entity Recognition capabilities, is projected to grow from $18.9 billion in 2023 to $68.1 billion by 2030, reflecting the increasing importance of these technologies across industries.
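
For readers unfamiliar with the F1-score quoted above, the following is a minimal sketch of how an entity-level F1 is commonly computed. It assumes entities are represented as `(start, end, label)` tuples and that only exact matches count; the function name `entity_f1` and the sample spans are made up for illustration and do not come from any cited study.

```python
def entity_f1(gold, predicted):
    """Return (precision, recall, f1) over exact-match (start, end, label) spans."""
    gold_set, pred_set = set(gold), set(predicted)
    true_positives = len(gold_set & pred_set)
    precision = true_positives / len(pred_set) if pred_set else 0.0
    recall = true_positives / len(gold_set) if gold_set else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Illustrative spans: the second prediction has the right boundaries
# but the wrong label, so it counts as an error.
gold = [(0, 2, "PER"), (5, 6, "ORG"), (9, 10, "LOC")]
predicted = [(0, 2, "PER"), (5, 6, "LOC"), (9, 10, "LOC")]
precision, recall, f1 = entity_f1(gold, predicted)
```

Exact matching is a strict choice: a prediction with correct boundaries but the wrong category earns no credit. Some evaluations relax this, so reported scores are only comparable when the matching rule is the same.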

Entity Recognition operates through a systematic two-stage process: entity detection and entity classification. During the entity detection phase, the system scans text to identify spans of words that potentially represent meaningful entities. This process begins with tokenization, where text is broken into individual words or subword units that can be processed by machine learning models. The system then extracts relevant features from each token, including morphological characteristics (word form, prefixes, suffixes), syntactic information (part-of-speech tags), semantic properties (word meaning and context), and contextual clues from surrounding words.
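
The detection steps described above can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: the regex tokenizer, the feature names, and the sample sentence are hypothetical, and part-of-speech tags are left out because they would require an external tagger.

```python
import re


def tokenize(text):
    """Split text into word tokens and single punctuation tokens."""
    return re.findall(r"\w+|[^\w\s]", text)


def token_features(tokens, i):
    """Build a feature dictionary for the token at position i."""
    token = tokens[i]
    return {
        "lower": token.lower(),                  # normalized word form
        "is_capitalized": token[:1].isupper(),   # common cue for names
        "is_digit": token.isdigit(),             # cue for dates and quantities
        "prefix2": token[:2],                    # morphological prefix
        "suffix3": token[-3:],                   # morphological suffix
        "prev": tokens[i - 1].lower() if i > 0 else "<START>",
        "next": tokens[i + 1].lower() if i < len(tokens) - 1 else "<END>",
    }


tokens = tokenize("Michael Jordan visited Amman in 2019.")
features = [token_features(tokens, i) for i in range(len(tokens))]
```

Feature dictionaries of this kind are what classical sequence labelers such as CRFs consume. Neural models replace them with learned embeddings, but the tokenization step remains.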

The entity classification phase assigns detected entities to predefined categories based on their semantic significance and contextual relationships. This stage requires sophisticated understanding of context, as the same word can represent different entity types depending on surrounding information. For example, the word “Jordan” could refer to a person (Michael Jordan), a country (Jordan), a river (Jordan River), or a brand, depending on context. Modern Entity Recognition systems leverage word embeddings and contextual representations to capture these nuances. Transformer-based models excel at this task by using attention mechanisms that allow the model to simultaneously consider all words in a sentence, understanding how each word relates to others and determining the most appropriate entity classification.
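
To make the attention idea concrete, here is a toy sketch of scaled dot-product attention weights for a single query token. The three-dimensional vectors are invented for illustration, and the same vector is used as both query and key; a real Transformer learns separate projections and works in hundreds of dimensions.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]  # subtract max for stability
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query, keys):
    """Scaled dot-product attention weights of one query over all keys."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    return softmax(scores)


# Hypothetical embeddings, chosen by hand for this example only.
tokens = ["Jordan", "scored", "points"]
vectors = {
    "Jordan": [1.0, 0.2, 0.0],
    "scored": [0.9, 0.1, 0.3],
    "points": [0.8, 0.0, 0.4],
}
weights = attention_weights(vectors["Jordan"], [vectors[t] for t in tokens])
```

Each weight says how strongly "Jordan" draws on the corresponding word when its contextual representation is built. Because every token attends to every other token in one step, cues such as "scored" can shift the classification toward a person reading.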

| Approach | Method | Accuracy | Scalability | Flexibility | Computational Cost |
|---|---|---|---|---|---|
| Rule-Based | Hand-crafted patterns, dictionaries, regex | High (domain-specific) | Low | Low | Very Low |
| Machine Learning | SVM, Random Forest, CRF with feature engineering | Medium-High | Medium | Medium | Low-Medium |
| Deep Learning (LSTM/RNN) | Neural networks with sequential processing | High | High | High | Medium-High |
| Transformer-Based | BERT, RoBERTa, attention mechanisms | Very High (F1: 0.91) | Very High | Very High | High |
| Large Language Models | GPT-4, Claude, generative models | Very High | Very High | Very High | Very High |

Entity Recognition has become increasingly sophisticated with the adoption of Transformer-based architectures and Large Language Models. These advanced systems can identify not only traditional entity types (person, organization, location, date) but also domain-specific entities such as medical conditions, legal concepts, financial instruments, and product names. The ability to recognize entities with high precision is particularly important for AI monitoring platforms like AmICited, which must accurately track brand mentions across multiple AI systems. When a user queries ChatGPT about a specific brand, Entity Recognition ensures that the system correctly identifies the brand name, distinguishes it from similar entities, and tracks its appearance in the generated response.

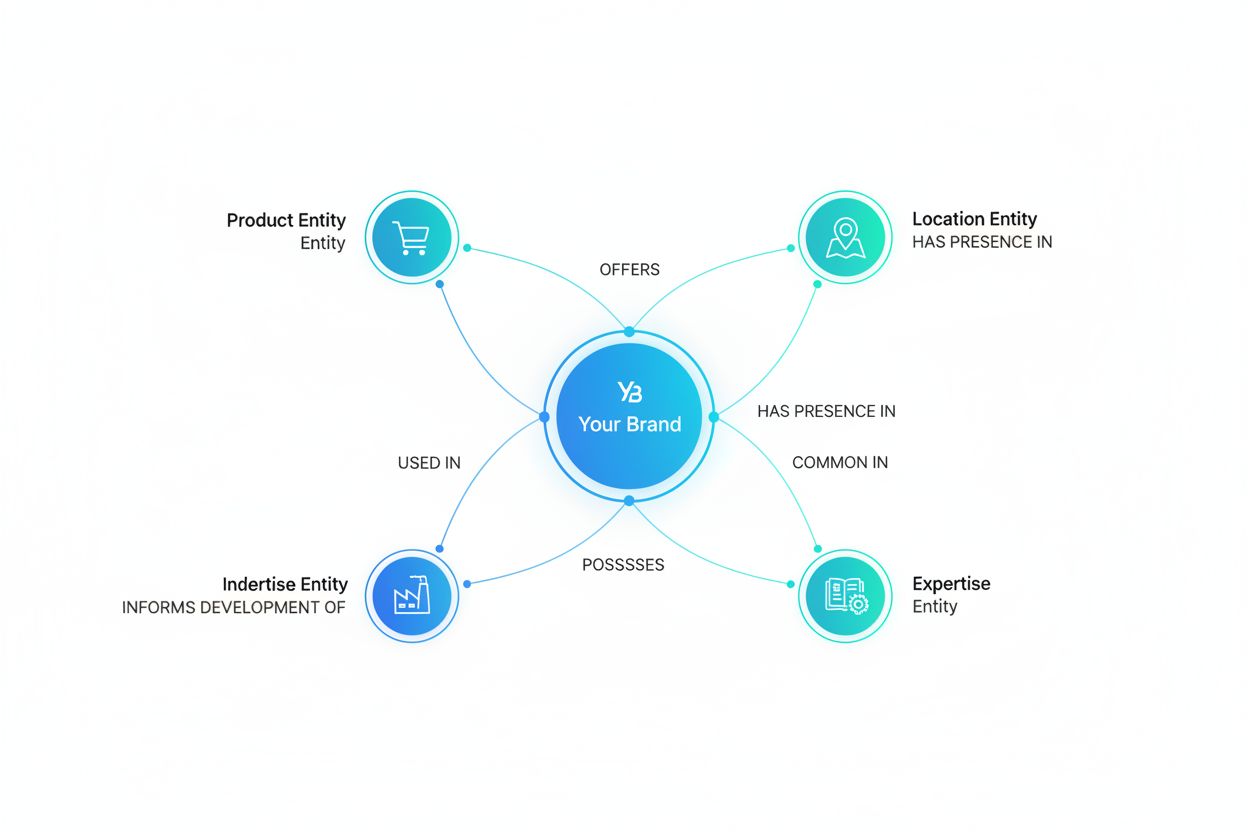

The integration of Entity Recognition with knowledge graphs represents a significant advancement in the field. Knowledge graphs provide rich semantic information about entities, including their attributes, types, and relationships with other entities. By combining Entity Recognition with knowledge graph integration, systems can not only identify entities but also understand their semantic roles and relationships. This synergy is particularly valuable for brand monitoring applications, where understanding the context and relationships surrounding entity mentions provides deeper insights into brand visibility and positioning. For instance, AmICited can track not just that a brand is mentioned, but how it is contextualized relative to competitors, products, and industry concepts.

Rule-based Entity Recognition represents the foundational approach, utilizing predefined patterns, dictionary lookups, and linguistic rules to identify entities. While these methods offer high accuracy for well-defined domains and require minimal computational resources, they lack scalability and struggle with novel or ambiguous entities. Machine learning-based approaches introduced greater flexibility by training models on annotated datasets, enabling systems to learn entity patterns automatically. These methods typically employ algorithms like Support Vector Machines (SVM), Conditional Random Fields (CRF), and Random Forests, combined with carefully engineered features such as word capitalization, surrounding context, and morphological properties.
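
A rule-based recognizer of the kind described above might look like the following sketch. The gazetteer entry, the regular expressions, and the sample sentence are all hypothetical; a production system would carry far larger dictionaries and many more patterns.

```python
import re

# Hypothetical dictionary (gazetteer) of known organization names.
ORGANIZATIONS = {"Example Corp"}

# Hand-written patterns for two entity types.
DATE_PATTERN = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")
MONEY_PATTERN = re.compile(r"\$\d+(?:\.\d+)?(?: (?:million|billion))?")


def rule_based_ner(text):
    """Return sorted (start, end, label, surface_text) tuples found by the rules."""
    entities = []
    # Dictionary lookup: exact string match against the gazetteer.
    for name in ORGANIZATIONS:
        for match in re.finditer(re.escape(name), text):
            entities.append((match.start(), match.end(), "ORG", match.group()))
    # Pattern matching: one regular expression per entity type.
    for pattern, label in ((DATE_PATTERN, "DATE"), (MONEY_PATTERN, "MONEY")):
        for match in pattern.finditer(text):
            entities.append((match.start(), match.end(), label, match.group()))
    return sorted(entities)


entities = rule_based_ner("Example Corp raised $2.5 million on 2024-01-15.")
```

The sketch also shows the scalability limit: an organization missing from `ORGANIZATIONS`, or a date written as "January 15", is simply not found until someone adds a rule for it.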

Deep learning-based Entity Recognition leverages neural network architectures to automatically learn relevant features from raw text without manual feature engineering. LSTM networks and Bidirectional RNNs capture sequential dependencies, making them particularly effective for sequence labeling tasks. Transformer-based models like BERT and RoBERTa represent the current state-of-the-art, utilizing attention mechanisms to understand relationships between all words in a sentence simultaneously. These models can be fine-tuned on specific Entity Recognition tasks, achieving exceptional performance across diverse domains. Large Language Models like GPT-4 and Claude offer additional capabilities, including the ability to understand complex contextual relationships and handle zero-shot entity recognition tasks without task-specific training.
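
Sequence labeling models emit one tag per token, and a small post-processing step turns those tags into entity spans. The sketch below assumes the widely used BIO scheme (B- begins an entity, I- continues it, O is outside); the article does not name a tagging scheme, so treat this as one common convention. The tags are written by hand here rather than produced by a model.

```python
def bio_to_spans(tokens, tags):
    """Group BIO-tagged tokens into (entity_text, label) pairs."""
    spans = []
    current_tokens, current_label = [], None
    for token, tag in zip(tokens, tags):
        if tag.startswith("B-"):
            # A new entity starts; close any entity that was open.
            if current_tokens:
                spans.append((" ".join(current_tokens), current_label))
            current_tokens, current_label = [token], tag[2:]
        elif tag.startswith("I-") and current_label == tag[2:]:
            # Continuation of the currently open entity.
            current_tokens.append(token)
        else:
            # "O" tag, or an I- tag that does not continue the open entity:
            # close what is open and treat this token as outside.
            if current_tokens:
                spans.append((" ".join(current_tokens), current_label))
            current_tokens, current_label = [], None
    if current_tokens:
        spans.append((" ".join(current_tokens), current_label))
    return spans


tokens = ["Michael", "Jordan", "joined", "the", "Chicago", "Bulls", "."]
tags = ["B-PER", "I-PER", "O", "O", "B-ORG", "I-ORG", "O"]
spans = bio_to_spans(tokens, tags)
```

Whether the tags come from an LSTM, a CRF layer, or a fine-tuned BERT model, this decoding step is the same, which is why the architectures can be swapped without changing downstream code.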

Modern Entity Recognition systems identify a diverse range of entity types, each with distinct characteristics and recognition patterns. Person entities include individual names, titles, and references to specific individuals. Organization entities encompass company names, government agencies, institutions, and other formal organizations. Location entities include countries, cities, regions, and geographic features. Date and Time entities capture temporal expressions, including specific dates, time ranges, and relative temporal references. Quantity entities include numerical values, percentages, measurements, and monetary amounts. Beyond these standard categories, domain-specific Entity Recognition systems can identify specialized entities such as medical conditions, drug names, legal concepts, financial instruments, and product names.

The recognition of these entity types relies on both syntactic patterns (such as capitalization and word order) and semantic understanding (such as contextual meaning and relationships). For example, recognizing a Person entity might involve identifying capitalized words that follow known person name patterns, but distinguishing between a person’s first name and last name requires understanding syntactic structure. Similarly, recognizing an Organization entity might involve identifying capitalized multi-word phrases, but distinguishing between a company name and a location name requires semantic understanding of context. Advanced Entity Recognition systems combine these approaches, using neural networks to learn complex patterns that capture both syntactic and semantic information.

Entity Recognition plays a critical role in AI monitoring platforms that track brand visibility across multiple AI systems. When ChatGPT, Perplexity, Google AI Overviews, or Claude generate responses, they mention various entities including brand names, product names, competitor names, and industry concepts. AmICited uses advanced Entity Recognition to identify these mentions, track their frequency, and analyze their context. This capability enables organizations to understand how their brands are being recognized and cited in AI-generated content, providing insights into brand visibility, competitive positioning, and content attribution.

The challenge of Entity Recognition in AI monitoring is particularly complex because AI-generated responses often contain nuanced references to entities. A brand might be mentioned directly by name, referenced through a product name, or discussed in relation to competitors. Entity Recognition systems must handle these variations, including acronyms, abbreviations, alternative names, and contextual references. For example, recognizing that “AAPL” refers to “Apple Inc.” requires knowing both the entity itself and its stock ticker symbol. Similarly, recognizing that “the Cupertino tech giant” refers to Apple requires semantic understanding of descriptive references. Advanced Entity Recognition systems, particularly those based on Transformer models and Large Language Models, excel at handling these complex variations.
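
One simple way to fold such variations into a single brand count is an alias table, sketched below. This is an assumption-laden illustration, not a description of how AmICited or any other platform works: the `ALIASES` table and the sample text are made up, and matching is plain case-sensitive string matching with no understanding of context.

```python
import re
from collections import Counter

# Hypothetical alias table mapping a canonical entity to its surface forms.
ALIASES = {
    "Apple Inc.": ["Apple Inc.", "Apple", "AAPL", "the Cupertino tech giant"],
}


def count_mentions(text, aliases=ALIASES):
    """Count mentions per canonical entity, folding all aliases together."""
    counts = Counter()
    for canonical, surface_forms in aliases.items():
        # Try longer aliases first so "Apple Inc." is not also counted as "Apple".
        ordered = sorted(surface_forms, key=len, reverse=True)
        alternation = "|".join(re.escape(form) for form in ordered)
        # Lookarounds keep matches from starting or ending inside a longer word.
        pattern = re.compile(r"(?<!\w)(?:" + alternation + r")(?!\w)")
        counts[canonical] += len(pattern.findall(text))
    return counts


text = (
    "AAPL is the ticker for Apple Inc. Many answers call it "
    "the Cupertino tech giant, while others just say Apple."
)
counts = count_mentions(text)
```

A lookup like this cannot tell Apple the company from apple the fruit at the start of a sentence, and it misses descriptive phrases nobody listed in advance. Those gaps are where the contextual models discussed above earn their cost.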

The future of Entity Recognition is being shaped by several emerging trends and technological developments. Few-shot and zero-shot learning capabilities are enabling Entity Recognition systems to identify new entity types with minimal training data, dramatically reducing the annotation burden. Multimodal Entity Recognition, which combines text with images, audio, and other data modalities, is expanding the scope of entity identification beyond text-only approaches. Cross-lingual Entity Recognition is improving, enabling systems to identify entities across multiple languages and scripts, supporting global applications.

The integration of Entity Recognition with Large Language Models and Generative AI is creating new possibilities for entity understanding and reasoning. Rather than simply identifying entities, future systems will be able to reason about entity properties, relationships, and implications. Knowledge graph integration will become increasingly sophisticated, with Entity Recognition systems automatically updating and enriching knowledge graphs based on newly identified entities and relationships. For AI monitoring platforms like AmICited, these advances mean increasingly accurate tracking of brand mentions across AI systems, more sophisticated understanding of entity context and relationships, and better insights into how brands are being recognized and positioned in AI-generated content.

The growing importance of Entity Recognition in AI search optimization and Generative Engine Optimization (GEO) reflects the critical role of entity understanding in modern AI systems. As organizations seek to improve their visibility in AI-generated responses, understanding how Entity Recognition works and how to optimize for entity identification becomes increasingly important. The convergence of Entity Recognition, knowledge graphs, and Large Language Models is creating a new paradigm for information understanding and extraction, with profound implications for how organizations monitor their brand presence, track competitive positioning, and leverage AI-generated content for business intelligence.

Named Entity Recognition (NER) identifies and categorizes named entities in text, such as detecting 'Apple' as an organization. Entity Linking goes further by connecting that identified entity to a specific real-world object in a knowledge base, determining whether 'Apple' refers to the technology company, the fruit, or another entity. While NER focuses on detection and classification, entity linking adds disambiguation and knowledge base integration to provide semantic meaning and context.
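
The linking step can be illustrated with a toy example. The knowledge base, the entity identifiers, and the context-word sets below are hypothetical, and scoring by word overlap is a deliberately crude stand-in for the embedding-based rankers real systems use.

```python
import re

# Hypothetical toy knowledge base with hand-picked context words.
KNOWLEDGE_BASE = {
    "apple_company": {
        "name": "Apple",
        "context": {"iphone", "company", "technology", "stock"},
    },
    "apple_fruit": {
        "name": "Apple",
        "context": {"fruit", "tree", "pie", "juice"},
    },
}


def link_entity(mention, sentence, kb=KNOWLEDGE_BASE):
    """Return the knowledge-base id whose context best overlaps the sentence."""
    words = set(re.findall(r"\w+", sentence.lower()))
    candidates = [
        (len(entry["context"] & words), entity_id)
        for entity_id, entry in kb.items()
        if entry["name"].lower() == mention.lower()
    ]
    if not candidates:
        return None  # the mention is not in the knowledge base at all
    best_score, best_id = max(candidates)
    # With no supporting context words, decline to link rather than guess.
    return best_id if best_score > 0 else None


company = link_entity("Apple", "Apple released a new iPhone and the stock rose")
fruit = link_entity("Apple", "She baked an apple pie with fruit from the tree")
unknown = link_entity("Apple", "Apple")
```

NER alone would stop at "this span is an entity named Apple". The linker's job is to pick which Apple, or to admit that the sentence does not say.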

Entity Recognition enables AI systems like ChatGPT, Perplexity, and Google AI Overviews to accurately identify brand mentions, product names, and organizational references within generated responses. For brand monitoring platforms like AmICited, entity recognition helps track how brands appear across different AI systems by precisely detecting entity mentions and categorizing them. This capability is essential for understanding brand visibility in AI-generated content and monitoring competitive positioning across multiple AI platforms.

Entity Recognition can be implemented through four primary approaches: rule-based methods using predefined patterns and dictionaries; machine learning-based methods using algorithms like Support Vector Machines and Conditional Random Fields; deep learning approaches using neural networks like LSTMs and Transformers (for example, BERT); and large language models like GPT-4 and Claude. Deep learning methods, particularly Transformer-based architectures, currently achieve the highest accuracy rates, with BERT-LSTM models reported to reach F1-scores of 0.91 across entity types.

Entity Recognition is fundamental for AI monitoring platforms because it enables precise tracking of how entities (brands, people, organizations, products) appear in AI-generated responses. Without accurate entity recognition, monitoring systems cannot distinguish between different entities with similar names, cannot track brand mentions across different AI platforms, and cannot provide accurate visibility metrics. This capability directly impacts the quality and reliability of brand monitoring and competitive intelligence in the AI search landscape.

Transformer-based models and Large Language Models improve Entity Recognition by capturing deep contextual relationships within text through attention mechanisms. Unlike traditional machine learning approaches that require manual feature engineering, Transformers automatically learn relevant features from data. Models like RoBERTa and BERT can be fine-tuned for specific entity recognition tasks, achieving state-of-the-art performance. These models excel at handling ambiguous entities by understanding surrounding context, making them particularly effective for complex, domain-specific entity recognition tasks.

Modern Entity Recognition systems can identify numerous entity types including: Person (names of individuals), Organization (companies, institutions, agencies), Location (cities, countries, regions), Date/Time (specific dates, time expressions), Quantity (numbers, percentages, measurements), Product (brand names, product titles), Event (named events, conferences), and domain-specific entities like medical terms, legal concepts, or financial instruments. The specific entity types depend on the training data and the particular NER model's configuration.

Entity Recognition enables accurate identification of entities mentioned in AI-generated content, which is essential for proper citation and attribution. By recognizing brand names, author names, organization references, and other key entities, AI monitoring systems can track which entities are cited, how frequently they appear, and in what context. This capability is crucial for AmICited's mission to monitor brand and domain appearances in AI responses, ensuring accurate tracking of entity mentions across ChatGPT, Perplexity, Google AI Overviews, and Claude.
