AI Model Fine-Tuning


Fine-tuning is the process of adapting a pre-trained AI model to perform specific tasks by training it on a smaller, domain-specific dataset. This technique adjusts the model’s parameters to excel at specialized applications while leveraging the broad knowledge already learned during initial pre-training, making it more efficient and cost-effective than training from scratch.


Rather than building an AI model from scratch, which requires massive computational resources and enormous amounts of labeled data, fine-tuning takes the broad knowledge a model acquired during initial pre-training and refines it for specialized applications using a smaller, domain-specific dataset. This technique has become fundamental to modern deep learning and generative AI, enabling organizations to customize powerful models like large language models (LLMs) for their unique business needs. Fine-tuning is a practical implementation of transfer learning, where knowledge gained from one task improves performance on a related task. The intuition is straightforward: it’s far easier and cheaper to hone the capabilities of a model that already understands general patterns than to train a new model from scratch for a specific purpose.

Fine-tuning emerged as a critical technique as deep learning models grew exponentially in size and complexity. In the early 2010s, researchers discovered that pre-training models on massive datasets and then adapting them to specific tasks dramatically improved performance while reducing training time. This approach gained prominence with the rise of transformer models and BERT (Bidirectional Encoder Representations from Transformers), which demonstrated that pre-trained models could be effectively fine-tuned for numerous downstream tasks. The explosion of generative AI and large language models like GPT-3, GPT-4, and Claude has made fine-tuning even more relevant, as organizations worldwide seek to customize these powerful models for domain-specific applications. According to recent enterprise adoption data, 51% of organizations using generative AI employ retrieval-augmented generation (RAG), while fine-tuning remains a critical complementary approach for specialized use cases. The evolution of parameter-efficient fine-tuning (PEFT) methods like LoRA (Low-Rank Adaptation) has democratized access to fine-tuning by cutting the number of trainable parameters by orders of magnitude and substantially reducing GPU memory requirements, making the technique accessible to organizations without massive GPU infrastructure.

Fine-tuning operates through a well-defined mathematical and computational process that adjusts a model’s parameters (weights and biases) to optimize performance on new tasks. During pre-training, a model learns general patterns from massive datasets through gradient descent and backpropagation, establishing a broad foundation of knowledge. Fine-tuning begins with these pre-trained weights as a starting point and continues the training process on a smaller, task-specific dataset. The key difference lies in using a significantly smaller learning rate—the magnitude of weight updates during each training iteration—to avoid catastrophic forgetting, where the model loses important general knowledge. The fine-tuning process involves forward passes where the model makes predictions on training examples, loss calculation measuring prediction errors, and backward passes where gradients are computed and weights are adjusted. This iterative process continues for multiple epochs (complete passes through the training data) until the model achieves satisfactory performance on validation data. The mathematical elegance of fine-tuning lies in its efficiency: by starting with pre-trained weights that already capture useful patterns, the model converges to good solutions much faster than training from scratch, often requiring 10-100 times less data and compute resources.
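To make the forward pass, loss calculation, backward pass, and weight update concrete, here is a minimal sketch in plain Python. It assumes a toy one-feature linear model with mean-squared-error loss in place of a neural network; the names `mse_and_grads` and `train`, the datasets, and the learning rates are all illustrative. The same loop first "pre-trains" from zero weights, then fine-tunes from the pre-trained weights with a learning rate ten times smaller.

```python
def mse_and_grads(w, b, data):
    """Forward pass, mean-squared-error loss, and its gradients for y_hat = w * x + b."""
    n = len(data)
    loss = grad_w = grad_b = 0.0
    for x, y in data:
        err = (w * x + b) - y        # forward pass and prediction error
        loss += err * err / n
        grad_w += 2 * err * x / n    # backward pass: dLoss/dw
        grad_b += 2 * err / n        # backward pass: dLoss/db
    return loss, grad_w, grad_b


def train(w, b, data, lr, epochs):
    """Full-batch gradient descent: one weight update per epoch."""
    for _ in range(epochs):
        _, grad_w, grad_b = mse_and_grads(w, b, data)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# "Pre-training": learn y = 2x + 1 from 41 points, starting from zero weights.
pretrain_data = [(k / 10, 2 * (k / 10) + 1) for k in range(-20, 21)]
w0, b0 = train(0.0, 0.0, pretrain_data, lr=0.1, epochs=500)

# "Fine-tuning": a related task, y = 2x + 1.5, with only three examples.
# Training starts from the pre-trained weights and uses a 10x smaller learning rate.
finetune_data = [(0.0, 1.5), (0.5, 2.5), (1.0, 3.5)]
w1, b1 = train(w0, b0, finetune_data, lr=0.01, epochs=3000)

print(f"pre-trained: w={w0:.3f}, b={b0:.3f}")  # pre-trained: w=2.000, b=1.000
print(f"fine-tuned:  w={w1:.3f}, b={b1:.3f}")  # fine-tuned:  w=2.000, b=1.500
```

Because fine-tuning starts from `(w0, b0)`, the weights begin close to the new solution and only the intercept has to move, from about 1.0 to 1.5. In this convex toy problem the smaller learning rate is illustrative rather than necessary; in real networks its job is to avoid overwriting useful pre-trained knowledge.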

| Aspect | Fine-Tuning | Retrieval-Augmented Generation (RAG) | Prompt Engineering | Full Model Training |
|---|---|---|---|---|
| Knowledge Source | Embedded in model parameters | External database/knowledge base | User-provided context in prompt | Learned from scratch from data |
| Data Freshness | Static until retraining | Real-time/dynamic | Current only in prompt | Frozen at training time |
| Computational Cost | High upfront (training), low inference | Low upfront, moderate inference | Minimal | Extremely high |
| Implementation Complexity | Moderate-High (requires ML expertise) | Moderate (requires infrastructure) | Low (no training needed) | Very High |
| Customization Depth | Deep (model behavior changes) | Shallow (retrieval only) | Superficial (prompt-level) | Complete (from ground up) |
| Update Frequency | Weeks/months (requires retraining) | Real-time (update database) | Per-query (manual) | Impractical for frequent updates |
| Output Consistency | High (learned patterns) | Variable (depends on retrieval) | Moderate (prompt-dependent) | Depends on training data |
| Source Attribution | None (implicit in weights) | Full (documents cited) | Partial (prompt visible) | None |
| Scalability | Multiple models needed per domain | Single model, multiple data sources | Single model, multiple prompts | Impractical at scale |
| Best For | Specialized tasks, consistent formatting | Current information, transparency | Quick iterations, simple tasks | Novel domains, unique requirements |

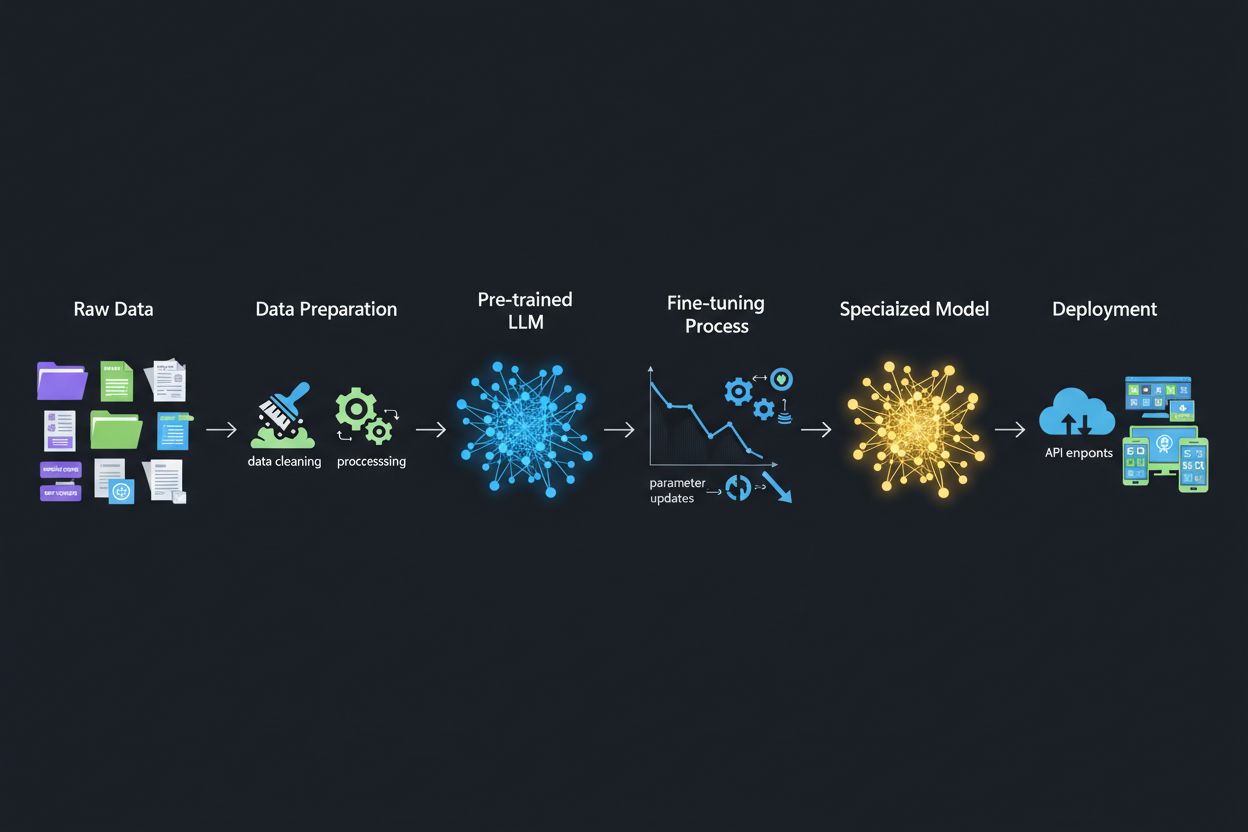

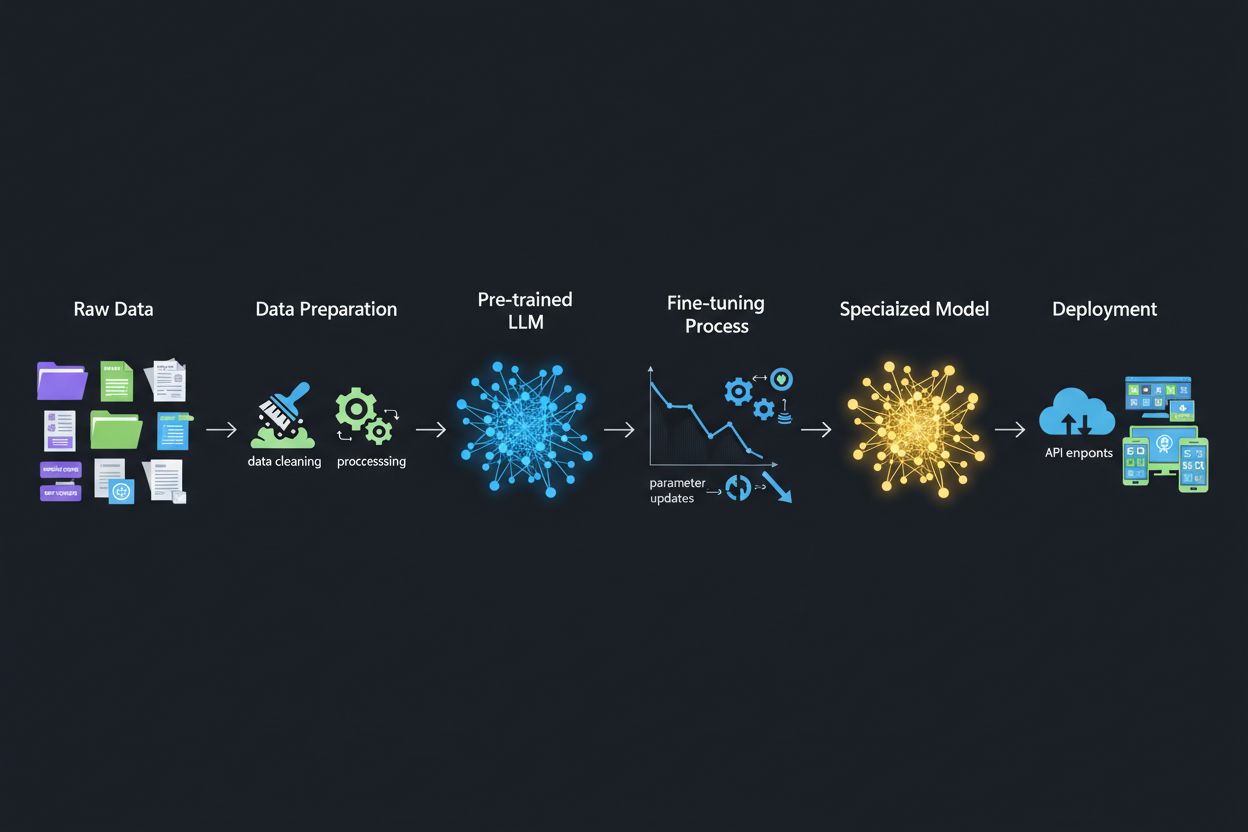

Fine-tuning follows a structured pipeline that transforms a general-purpose model into a specialized expert. The process begins with data preparation, where organizations collect and curate examples relevant to their specific task. For a legal AI assistant, this might involve thousands of legal documents paired with relevant questions and answers. For a medical diagnostic tool, it could be clinical cases with diagnoses. The quality of this dataset is paramount—research consistently shows that a smaller set of high-quality, well-labeled examples produces better results than a larger set of noisy or inconsistent data. Once data is prepared, it’s split into training, validation, and test sets to ensure the model generalizes well to unseen examples.
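As a minimal sketch of the splitting step, assuming the curated examples already sit in a Python list and that an 80/10/10 split is acceptable (a common default, not a rule), the hypothetical helper `split_dataset` below shuffles once with a fixed seed so the split is reproducible:

```python
import random


def split_dataset(examples, train_frac=0.8, val_frac=0.1, seed=42):
    """Shuffle a copy with a fixed seed, then cut it into train/validation/test lists.

    Whatever remains after the train and validation slices becomes the test set.
    """
    if train_frac <= 0 or val_frac < 0 or train_frac + val_frac >= 1:
        raise ValueError("need train_frac > 0, val_frac >= 0, train_frac + val_frac < 1")
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)  # reproducible; the input list is untouched
    n_train = int(len(shuffled) * train_frac)
    n_val = int(len(shuffled) * val_frac)
    train_set = shuffled[:n_train]
    val_set = shuffled[n_train:n_train + n_val]
    test_set = shuffled[n_train + n_val:]
    return train_set, val_set, test_set


# Hypothetical (question, answer) pairs standing in for a curated legal dataset.
pairs = [(f"question {i}", f"answer {i}") for i in range(1000)]
train_set, val_set, test_set = split_dataset(pairs)
print(len(train_set), len(val_set), len(test_set))  # 800 100 100
```

Shuffling before splitting keeps any ordering in the source files (for example, documents grouped by client or date) from ending up in only one of the three sets. If near-duplicate examples exist, deduplicating first keeps them from straddling the training and test sets.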

The actual fine-tuning process begins by loading the pre-trained model and its weights into memory. The model architecture remains unchanged; only the weights are adjusted. During each training iteration, the model processes a batch of training examples, makes predictions, and compares them to the correct answers using a loss function that quantifies prediction errors. Backpropagation then computes gradients—mathematical measures of how each weight should change to reduce loss. An optimization algorithm like Adam or SGD (Stochastic Gradient Descent) uses these gradients to update weights, typically with a learning rate 10-100 times smaller than used in pre-training to preserve general knowledge. This process repeats across multiple epochs, with the model gradually specializing on the task-specific data. Throughout training, the model is evaluated on the validation set to monitor performance and detect overfitting—when the model memorizes training examples rather than learning generalizable patterns. Once validation performance plateaus or begins degrading, training stops to prevent overfitting.
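The stopping rule at the end of the paragraph above is often implemented as "early stopping" with a patience window. Here is a minimal sketch, assuming per-epoch validation losses have already been computed; `early_stopping_epoch`, `patience`, and `min_delta` are illustrative names, and the loss values are made up for the example:

```python
def early_stopping_epoch(val_losses, patience=2, min_delta=0.0):
    """Return (stop_epoch, best_epoch) for a list of per-epoch validation losses.

    Training stops once the validation loss has failed to beat the best value seen
    so far by more than min_delta for `patience` consecutive epochs. The checkpoint
    saved at best_epoch is the one to keep.
    """
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch
    # Ran out of epochs before the patience window was exhausted.
    return len(val_losses) - 1, best_epoch


# Hypothetical validation losses: improving, then rising as the model starts to overfit.
losses = [0.90, 0.62, 0.48, 0.45, 0.47, 0.51, 0.58]
print(early_stopping_epoch(losses))  # (5, 3)
```

Keeping the checkpoint from the best epoch rather than the last one means the epochs spent waiting out the patience window do not carry overfitting into the saved model.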

Full fine-tuning updates all model parameters, which can be computationally expensive for large models. During backpropagation, training must hold not only the weights but also a gradient for every parameter and, with optimizers like Adam, additional per-parameter optimizer state, consuming enormous GPU memory. For a 7-billion-parameter model, full fine-tuning might require 100+ GB of GPU memory, far more than a single consumer GPU provides, which puts it out of reach for many organizations. However, full fine-tuning often produces the best performance since all model weights can adapt to the new task.
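The 100+ GB figure follows from simple arithmetic. A minimal sketch, assuming mixed-precision training with the Adam optimizer and a widely used rule of thumb of 16 bytes per parameter (16-bit weights and gradients plus 32-bit master weights and two Adam moment estimates). Activations and framework overhead are ignored, so real usage is higher; the function names are illustrative.

```python
# Bytes per parameter under mixed-precision Adam (rule of thumb, activations excluded).
BYTES_PER_PARAM = {
    "weights_fp16": 2,
    "gradients_fp16": 2,
    "master_weights_fp32": 4,
    "adam_first_moment_fp32": 4,
    "adam_second_moment_fp32": 4,
}


def full_finetune_memory_gb(n_params):
    """Estimated memory in GB (1e9 bytes) for weights, gradients, and optimizer state."""
    return n_params * sum(BYTES_PER_PARAM.values()) / 1e9


def inference_weights_gb(n_params, bytes_per_param=2):
    """Memory just to hold the weights for inference, 16-bit by default."""
    return n_params * bytes_per_param / 1e9


print(full_finetune_memory_gb(7e9))  # 112.0
print(inference_weights_gb(7e9))     # 14.0
```

Under these assumptions, training needs roughly eight times the memory of simply serving the same model, and that gap is what parameter-efficient methods target.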

Parameter-efficient fine-tuning (PEFT) methods address this limitation by updating only a small subset of parameters. LoRA (Low-Rank Adaptation), one of the most popular PEFT techniques, adds small trainable low-rank matrices to specific layers while keeping the original weights frozen. These matrices capture task-specific adaptations without modifying the base model. Research demonstrates that LoRA can match full fine-tuning performance on many tasks while training far fewer parameters; for GPT-3 175B, the original LoRA paper reports a 10,000-fold reduction in trainable parameters and a threefold reduction in GPU memory. QLoRA extends this further by quantizing the frozen base model to 4-bit precision, which cuts the memory needed to store its weights by roughly 75% compared with 16-bit precision. Other PEFT approaches include adapters (small task-specific layers inserted into the model), prompt tuning (learning soft prompts rather than model weights), and BitFit (updating only bias terms). These methods have democratized fine-tuning, enabling organizations without massive GPU clusters to customize state-of-the-art models.
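To show why LoRA trains so few parameters, here is a minimal pure-Python sketch of a LoRA-style linear layer. It assumes the formulation from the original LoRA paper: the output is W0 x + (alpha / r) B(A x), with W0 frozen, A initialized randomly, and B initialized to zero so the layer starts out identical to the base layer. `LoRALinear`, `param_counts`, and the 4096 x 4096 layer size are illustrative, and no training loop is shown.

```python
import random


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


class LoRALinear:
    """Frozen weight W0 (d_out x d_in) plus a trainable low-rank update B @ A."""

    def __init__(self, w0, r, alpha, seed=0):
        d_out, d_in = len(w0), len(w0[0])
        rng = random.Random(seed)
        self.w0 = w0                                                   # frozen
        self.a = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(r)]  # r x d_in
        self.b = [[0.0] * r for _ in range(d_out)]                     # d_out x r, zero init
        self.scale = alpha / r

    def forward(self, x):
        base = matvec(self.w0, x)
        # B(Ax) never materializes the full d_out x d_in update matrix.
        update = matvec(self.b, matvec(self.a, x))
        return [h + self.scale * u for h, u in zip(base, update)]

    def trainable_params(self):
        """Only A and B would receive gradient updates during fine-tuning."""
        return len(self.a) * len(self.a[0]) + len(self.b) * len(self.b[0])


def param_counts(d_out, d_in, r):
    """Parameters in the full weight matrix versus a rank-r LoRA adapter."""
    return d_out * d_in, r * (d_in + d_out)


full, lora = param_counts(4096, 4096, 8)
print(full, lora, f"{lora / full:.2%}")  # 16777216 65536 0.39%
```

For a 4096 x 4096 projection with rank 8, the adapter holds about 0.39% as many parameters as the frozen matrix. After training, the scaled product of B and A can be added into W0, so the merged layer runs at the original inference cost.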

Fine-tuning LLMs involves unique considerations distinct from fine-tuning computer vision or traditional NLP models. Pre-trained LLMs like GPT-3 or Llama are trained through self-supervised learning on massive text corpora, learning to predict the next word in sequences. While this pre-training produces powerful text generation capabilities, it doesn’t inherently teach the model to follow user instructions or understand intent. A pre-trained LLM asked “teach me how to write a résumé” might simply complete the sentence with “using Microsoft Word” rather than providing actual résumé-writing guidance.

Instruction tuning addresses this limitation by fine-tuning on datasets of (instruction, response) pairs covering diverse tasks. These datasets teach the model to recognize different instruction types and respond appropriately. An instruction-tuned model learns that prompts beginning with “teach me how to” should receive step-by-step guidance, not sentence completions. This specialized fine-tuning approach has proven essential for creating practical AI assistants.
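Concretely, each (instruction, response) pair is rendered into a single training sequence. A minimal sketch, assuming a simple Alpaca-style prompt template (one of many conventions; each model family defines its own) and using a whitespace split as a stand-in for a real tokenizer. `build_example` and `PROMPT_TEMPLATE` are hypothetical names, and masking the loss on prompt tokens is a common but not universal choice:

```python
PROMPT_TEMPLATE = "### Instruction:\n{instruction}\n\n### Response:\n"


def build_example(instruction, response):
    """Turn one (instruction, response) pair into tokens plus a loss mask.

    Tokens come from a naive whitespace split (a stand-in for a real tokenizer).
    The mask is True only for response tokens, so training rewards producing the
    answer rather than re-predicting the prompt.
    """
    prompt_tokens = PROMPT_TEMPLATE.format(instruction=instruction).split()
    response_tokens = response.split()
    tokens = prompt_tokens + response_tokens
    loss_mask = [False] * len(prompt_tokens) + [True] * len(response_tokens)
    return tokens, loss_mask


tokens, mask = build_example(
    "Teach me how to write a résumé.",
    "Start with a short summary, then list relevant experience.",
)
print([t for t, m in zip(tokens, mask) if m][:3])  # ['Start', 'with', 'a']
```

Training on many such pairs across different task types is what teaches the model that "teach me how to" calls for guidance rather than a sentence completion.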

Reinforcement Learning from Human Feedback (RLHF) represents an advanced fine-tuning technique that supplements instruction tuning. Rather than relying solely on labeled examples, RLHF incorporates human preferences to optimize for qualities difficult to specify through discrete examples—like helpfulness, factual accuracy, humor, or empathy. The process involves generating multiple model outputs for prompts, having humans rate their quality, training a reward model to predict which outputs humans prefer, then using reinforcement learning to optimize the LLM according to this reward signal. RLHF has been instrumental in aligning models like ChatGPT with human values and preferences.
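The reward-model step described above is usually trained on pairs of outputs where a human preferred one over the other. A minimal sketch of a commonly used pairwise (Bradley-Terry style) loss, assuming the reward model has already produced a scalar score for each output. `pairwise_reward_loss` is an illustrative name, and the reinforcement learning stage that follows (often PPO) is not shown:

```python
import math


def pairwise_reward_loss(r_chosen, r_rejected):
    """-log(sigmoid(r_chosen - r_rejected)) for one human preference pair.

    The loss is small when the reward model scores the human-preferred output
    higher, and grows roughly linearly when it prefers the rejected one.
    """
    margin = r_chosen - r_rejected
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    # Equivalent form for negative margins, so math.exp never overflows.
    return -margin + math.log1p(math.exp(margin))


print(round(pairwise_reward_loss(2.0, 0.0), 3))  # 0.127
print(round(pairwise_reward_loss(0.0, 2.0), 3))  # 2.127
```

Minimizing this loss over many rated pairs yields a reward model whose scores can then serve as the reward signal for optimizing the LLM.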

Fine-tuning has become central to enterprise AI strategies, enabling organizations to deploy customized models that reflect their unique requirements and brand voice. According to Databricks’ 2024 State of AI report analyzing data from over 10,000 organizations, enterprises are becoming dramatically more efficient at deploying AI models, with the ratio of experimental-to-production models improving from 16:1 to 5:1—a 3x efficiency gain. While RAG adoption has grown to 51% among generative AI users, fine-tuning remains critical for specialized applications where consistent output formatting, domain expertise, or offline deployment is essential.

Financial Services leads AI adoption with the highest GPU utilization and 88% growth in GPU usage over six months, supporting applications such as fraud detection, risk assessment, and algorithmic trading. Healthcare & Life Sciences has emerged as a surprise early adopter, with 69% of Python library usage devoted to natural language processing, reflecting applications in drug discovery, clinical research analysis, and medical documentation. Manufacturing & Automotive recorded 148% year-over-year NLP growth, with use cases including quality control, supply chain optimization, and customer feedback analysis. These adoption patterns suggest that AI, including fine-tuned models, has moved from experimental projects to production systems delivering measurable business value.

Fine-tuning delivers several compelling advantages that explain its continued prominence despite the rise of alternative approaches. Domain-specific accuracy represents perhaps the most significant benefit—a model fine-tuned on thousands of legal documents doesn’t just know legal terminology; it understands legal reasoning, appropriate clause structures, and relevant precedents. This deep specialization produces outputs that match expert standards in ways generic models cannot achieve. Efficiency gains through fine-tuning can be dramatic; research from Snorkel AI demonstrated that a fine-tuned small model achieved GPT-3 quality performance while being 1,400 times smaller, requiring less than 1% of the training labels, and costing 0.1% as much to run in production. This efficiency transforms the economics of AI deployment, making sophisticated AI accessible to organizations with limited budgets.

Customized tone and style control enables organizations to maintain brand consistency and communication standards. A company-specific chatbot can be fine-tuned to follow organizational voice guidelines, whether formal and professional for legal applications or warm and conversational for retail. Offline deployment capability represents another critical advantage: once fine-tuned, models contain all necessary knowledge in their parameters and don’t require external data access, making them suitable for mobile applications, embedded systems, and secure environments without internet connectivity. Fine-tuning can also reduce hallucinations in specialized domains, because the model has learned accurate, domain-specific patterns during training and is less prone to generating plausible-sounding but incorrect information.

Despite its advantages, fine-tuning presents significant challenges that organizations must carefully consider. Data requirements represent a substantial barrier—fine-tuning requires hundreds to thousands of high-quality, labeled examples, and preparing such datasets involves extensive collection, cleaning, and annotation work that can take weeks or months. The computational costs remain high; full fine-tuning of large models demands powerful GPUs or TPUs, with training runs potentially costing tens of thousands of dollars. Even parameter-efficient methods require specialized hardware and expertise that many organizations lack.

Catastrophic forgetting poses a persistent risk where fine-tuning causes models to lose general knowledge learned during pre-training. A model fine-tuned extensively on legal documents might excel at contract analysis but struggle with basic tasks it previously handled well. This narrowing effect often necessitates maintaining multiple specialized models rather than relying on one versatile assistant. Maintenance burden grows as domain knowledge evolves—when new regulations emerge, research advances, or product features change, the model must be retrained on updated data, a process that can take weeks and cost thousands of dollars. This retraining cycle can leave models dangerously outdated in rapidly changing fields.

Lack of source attribution creates transparency and trust issues in high-stakes applications. Fine-tuned models generate answers from internal parameters rather than explicit retrieved documents, making it nearly impossible to verify where specific information originated. In healthcare, doctors cannot verify which studies informed a recommendation. In legal applications, lawyers cannot check which cases shaped advice. This opacity makes fine-tuned models unsuitable for applications requiring audit trails or regulatory compliance. Overfitting risk remains significant, particularly with smaller datasets, where models memorize specific examples rather than learning generalizable patterns, leading to poor performance on cases differing from training examples.

The fine-tuning landscape continues evolving rapidly, with several important trends shaping its future. Continued advancement of parameter-efficient methods promises to make fine-tuning increasingly accessible, with new techniques emerging that reduce computational requirements even further while maintaining or improving performance. Research into few-shot fine-tuning aims to achieve effective specialization with minimal labeled data, potentially reducing the data collection burden that currently limits fine-tuning adoption.

Hybrid approaches combining fine-tuning with RAG are gaining traction as organizations recognize that these techniques complement rather than compete with each other. A model fine-tuned for domain expertise can be augmented with RAG to access current information, combining the strengths of both approaches. This hybrid strategy is becoming increasingly common in production systems, particularly in regulated industries where both specialization and information currency matter.

Federated fine-tuning represents an emerging frontier where models are fine-tuned on distributed data without centralizing sensitive information, addressing privacy concerns in healthcare, finance, and other regulated sectors. Continual learning approaches that enable models to adapt to new information without catastrophic forgetting could transform how organizations maintain fine-tuned models as domains evolve. Multimodal fine-tuning extending beyond text to images, audio, and video will enable organizations to customize models for increasingly diverse applications.
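In one common federated scheme, Federated Averaging (FedAvg), each participant fine-tunes a copy of the model on its own data and sends back only the updated weights, which a coordinator averages in proportion to each participant's data size. A minimal sketch of that aggregation step, with weights flattened into plain lists; `federated_average` and the two-hospital setup are hypothetical:

```python
def federated_average(client_weights, client_sizes):
    """FedAvg-style aggregation: average client weight vectors, weighted by data size.

    Only weight vectors reach the coordinator; the raw training examples stay local.
    """
    total = sum(client_sizes)
    n = len(client_weights[0])
    return [
        sum(w[i] * size for w, size in zip(client_weights, client_sizes)) / total
        for i in range(n)
    ]


# Hypothetical: two hospitals fine-tune locally on 100 and 300 records,
# then send back their updated weights for aggregation.
global_weights = federated_average([[1.0, 2.0], [3.0, 4.0]], [100, 300])
print(global_weights)  # [2.5, 3.5]
```

Shared weights can still leak some information about local data, so production systems often add secure aggregation or differential privacy on top of this averaging step.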

The integration of fine-tuning with AI monitoring platforms like AmICited represents another important trend. As organizations deploy fine-tuned models, and as AI platforms such as ChatGPT, Claude, Perplexity, and Google AI Overviews increasingly shape what users see, tracking how brands appear in AI-generated responses becomes critical for visibility and attribution. This convergence of fine-tuning technology with AI monitoring infrastructure reflects the maturation of generative AI from experimental projects to production systems requiring comprehensive oversight and measurement.


Fine-tuning is a specific subset of transfer learning. While transfer learning broadly refers to using knowledge from one task to improve performance on another, fine-tuning specifically involves taking a pre-trained model and retraining it on a new, task-specific dataset. Transfer learning is the umbrella concept, and fine-tuning is one implementation method. Fine-tuning adjusts the model's weights, typically through supervised learning on labeled examples, whereas transfer learning can also use other techniques, such as feature extraction, which keeps the pre-trained weights frozen and trains only a new task-specific layer on top of their outputs.

The amount of data required depends on the model size and task complexity, but generally ranges from hundreds to thousands of labeled examples. Quality matters more than volume: a smaller set of high-quality, consistently labeled examples often outperforms a larger set with noisy or inconsistent labels. Parameter-efficient fine-tuning methods like LoRA may need even less data than full fine-tuning approaches.

Catastrophic forgetting occurs when fine-tuning causes a model to lose or destabilize the general knowledge it learned during pre-training. It is more likely when the learning rate is too high or the fine-tuning dataset differs sharply from the original training data, because large weight updates overwrite important learned patterns. To prevent this, practitioners use smaller learning rates during fine-tuning and employ techniques like regularization to preserve the model's core capabilities while adapting to new tasks.
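One regularizer that fits this description adds a penalty on the distance between the current weights and the pre-trained ones (sometimes called L2-SP). A minimal sketch, assuming a toy quadratic task loss whose gradient is simply `w - target`; `l2sp_penalty`, `l2sp_step`, and `finetune_with_l2sp` are illustrative names:

```python
def l2sp_penalty(weights, pretrained, lam):
    """(lam / 2) * squared Euclidean distance from the pre-trained weights."""
    return 0.5 * lam * sum((w - p) ** 2 for w, p in zip(weights, pretrained))


def l2sp_step(weights, pretrained, task_grads, lr, lam):
    """One gradient step on task_loss + l2sp_penalty.

    The penalty contributes lam * (w - p) to each gradient, pulling every weight
    back toward its pre-trained value p.
    """
    return [
        w - lr * (g + lam * (w - p))
        for w, p, g in zip(weights, pretrained, task_grads)
    ]


def finetune_with_l2sp(pretrained, targets, lr=0.1, lam=1.0, steps=200):
    """Toy fine-tuning where task_loss = 0.5 * sum((w - t)^2), so its gradient is w - t."""
    weights = list(pretrained)
    for _ in range(steps):
        task_grads = [w - t for w, t in zip(weights, targets)]
        weights = l2sp_step(weights, pretrained, task_grads, lr, lam)
    return weights


print(finetune_with_l2sp([1.0], [3.0], lam=0.0))  # approximately [3.0]: the task alone wins
print(finetune_with_l2sp([1.0], [3.0], lam=1.0))  # approximately [2.0]: a compromise
```

With `lam=1.0` the weight settles halfway between its pre-trained value and the task optimum; larger values keep it closer to the pre-trained model. Elastic weight consolidation (EWC) refines the same idea by weighting each parameter's penalty by an estimate of its importance to earlier tasks.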

Parameter-efficient fine-tuning (PEFT) methods like Low-Rank Adaptation (LoRA) reduce computational requirements by updating only a small subset of model parameters instead of all weights. LoRA adds small trainable matrices to specific layers while keeping the original weights frozen, often matching full fine-tuning performance while needing far less GPU memory and compute. Other PEFT methods include adapters, prompt tuning, and quantization-based approaches such as QLoRA, making fine-tuning accessible to organizations without massive GPU resources.
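The quantization side can be illustrated with simple arithmetic plus a toy quantizer. A minimal sketch, assuming symmetric "absmax" rounding of one block of weights to 4-bit integer codes with a single scale factor. This is a simplified stand-in: QLoRA itself uses a 4-bit NormalFloat (NF4) data type with per-block scales, and `quantize_4bit`, `dequantize`, and `weight_storage_gb` are hypothetical names.

```python
def quantize_4bit(weights):
    """Map a block of floats to integer codes in [-7, 7] plus one float scale.

    Each code fits in 4 bits. This symmetric scheme leaves the code -8 unused so
    positive and negative values share the same range.
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 7 if max_abs > 0 else 1.0  # all-zero block: any scale works
    codes = [round(w / scale) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Approximate reconstruction used whenever the frozen weights are needed."""
    return [c * scale for c in codes]


def weight_storage_gb(n_params, bits):
    """Storage for the weights alone, ignoring the small per-block scale overhead."""
    return n_params * bits / 8 / 1e9


codes, scale = quantize_4bit([0.7, -0.3, 0.26, 0.0])
print(codes)                                                  # [7, -3, 3, 0]
print(weight_storage_gb(7e9, 16), weight_storage_gb(7e9, 4))  # 14.0 3.5
```

Here 0.26 is stored as 0.3, an error of at most half the scale. Going from 16-bit to 4-bit storage cuts the frozen weights of a 7-billion-parameter model from 14 GB to 3.5 GB, in line with the roughly 75% reduction attributed to QLoRA earlier; the LoRA adapters trained on top are kept in higher precision.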

Fine-tuning embeds knowledge directly into model parameters through training, while Retrieval-Augmented Generation (RAG) retrieves information from external databases at query time. Fine-tuning excels at specialized tasks and consistent output formatting but requires significant compute resources and becomes outdated as information changes. RAG provides real-time information access and easier updates but may produce less specialized outputs. Many organizations use both approaches together for optimal results.
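The difference shows up in where knowledge lives at inference time. A minimal sketch of the retrieval side, assuming a toy word-overlap score in place of the embedding search a real RAG system would use; `retrieve`, `build_rag_prompt`, and the documents are hypothetical. The model's weights are untouched; only the prompt changes:

```python
import re


def words(text):
    """Lowercased word set; a crude stand-in for embeddings."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query, documents, k=1):
    """Return the k documents sharing the most words with the query."""
    q = words(query)
    ranked = sorted(documents, key=lambda d: len(q & words(d)), reverse=True)
    return ranked[:k]


def build_rag_prompt(query, documents, k=1):
    """Assemble retrieved passages and the question into one prompt at query time."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


docs = [
    "Refunds are processed within 14 days of the return.",
    "Our office is closed on public holidays.",
]
print(build_rag_prompt("How long do refunds take?", docs))
```

Updating what this system knows means editing `docs`, whereas a fine-tuned model would need another training run. In the hybrid approach described earlier, a prompt like this one is sent to a fine-tuned model.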

Instruction tuning is a specialized form of fine-tuning that trains models to better follow user instructions and respond to diverse tasks. It uses datasets of (instruction, response) pairs covering various use cases like question-answering, summarization, and translation. Standard fine-tuning typically optimizes for a single task, while instruction tuning teaches the model to handle multiple instruction types and follow directions more effectively, making it particularly valuable for creating general-purpose assistants.

Yes, fine-tuned models can be deployed on edge devices and offline environments, which is one of their key advantages over RAG-based approaches. Once fine-tuning is complete, the model contains all necessary knowledge in its parameters and doesn't require external data access. This makes fine-tuned models ideal for mobile applications, embedded systems, IoT devices, and secure environments without internet connectivity, though model size and computational requirements must be considered for resource-constrained devices.
