AI-Generated Image

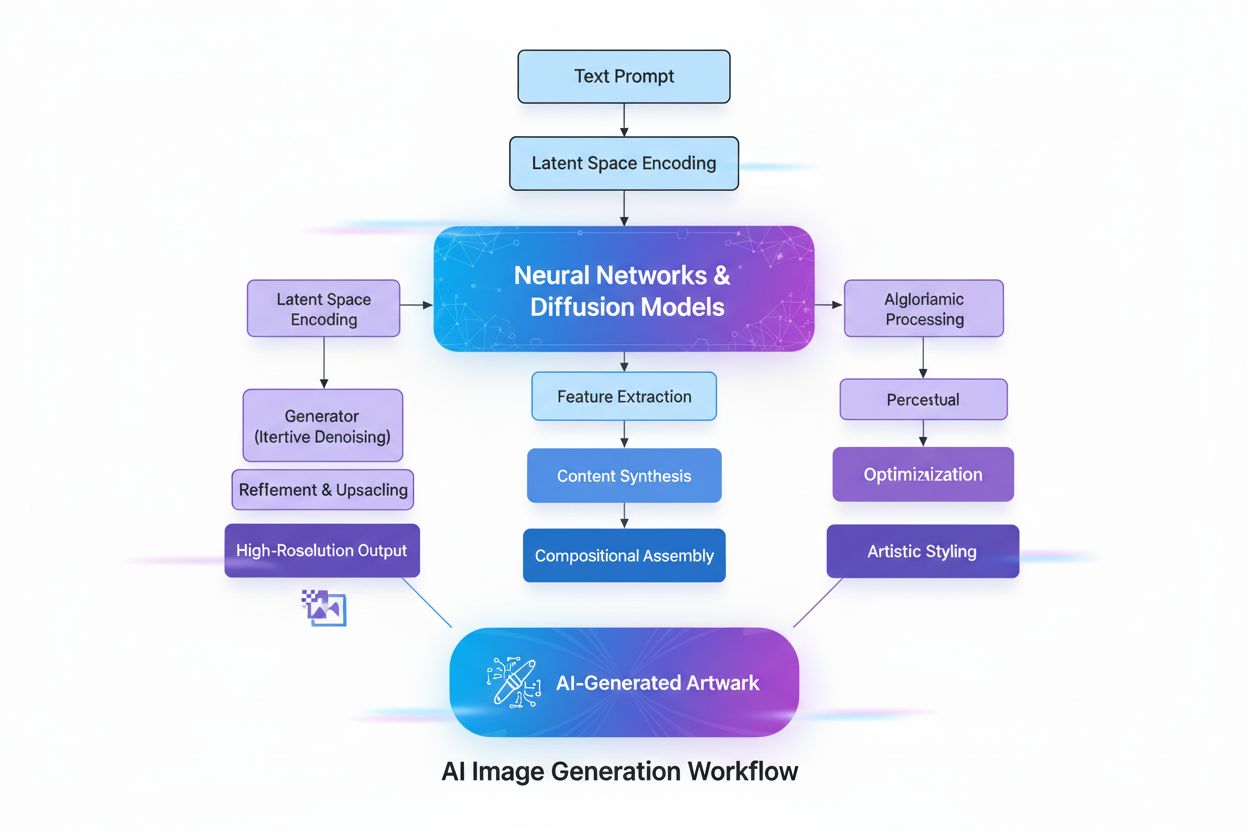

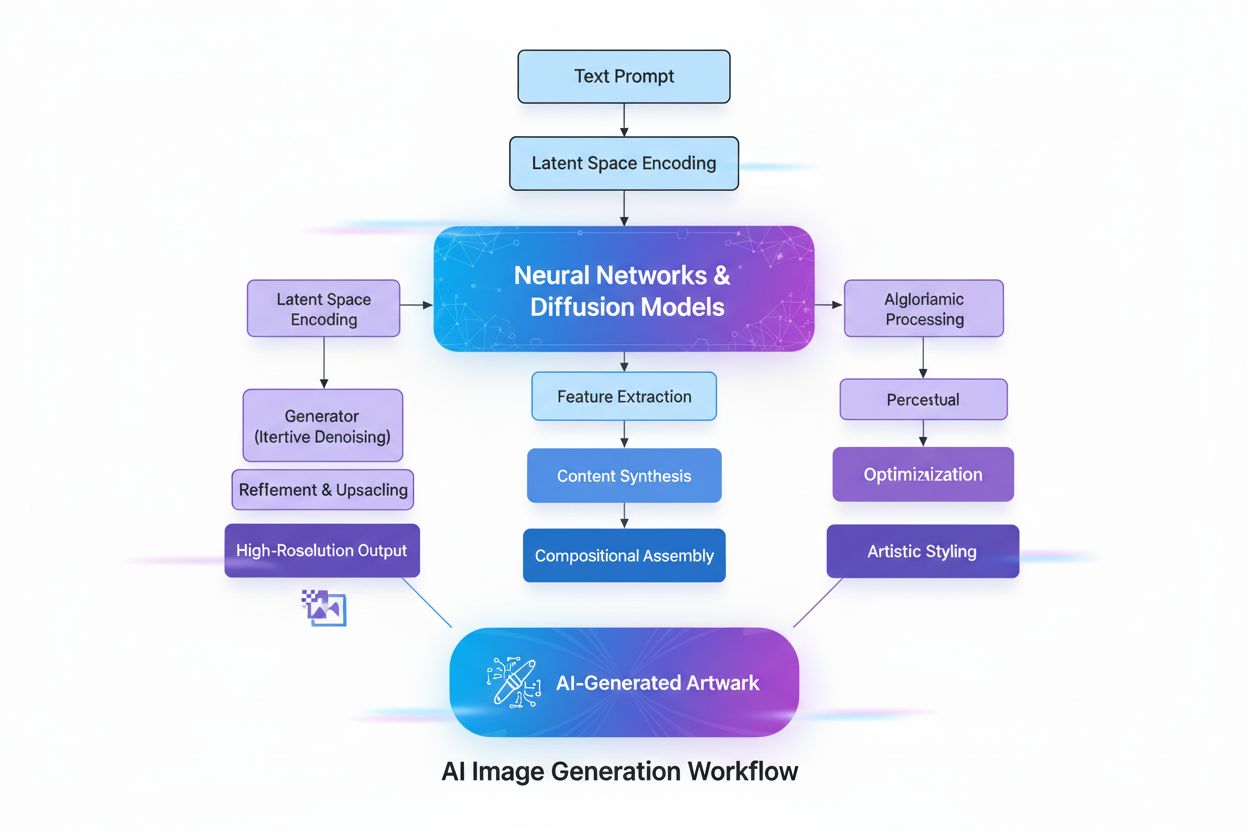

Learn what AI-generated images are, how they're created using diffusion models and neural networks, their applications in marketing and design, and the ethical ...

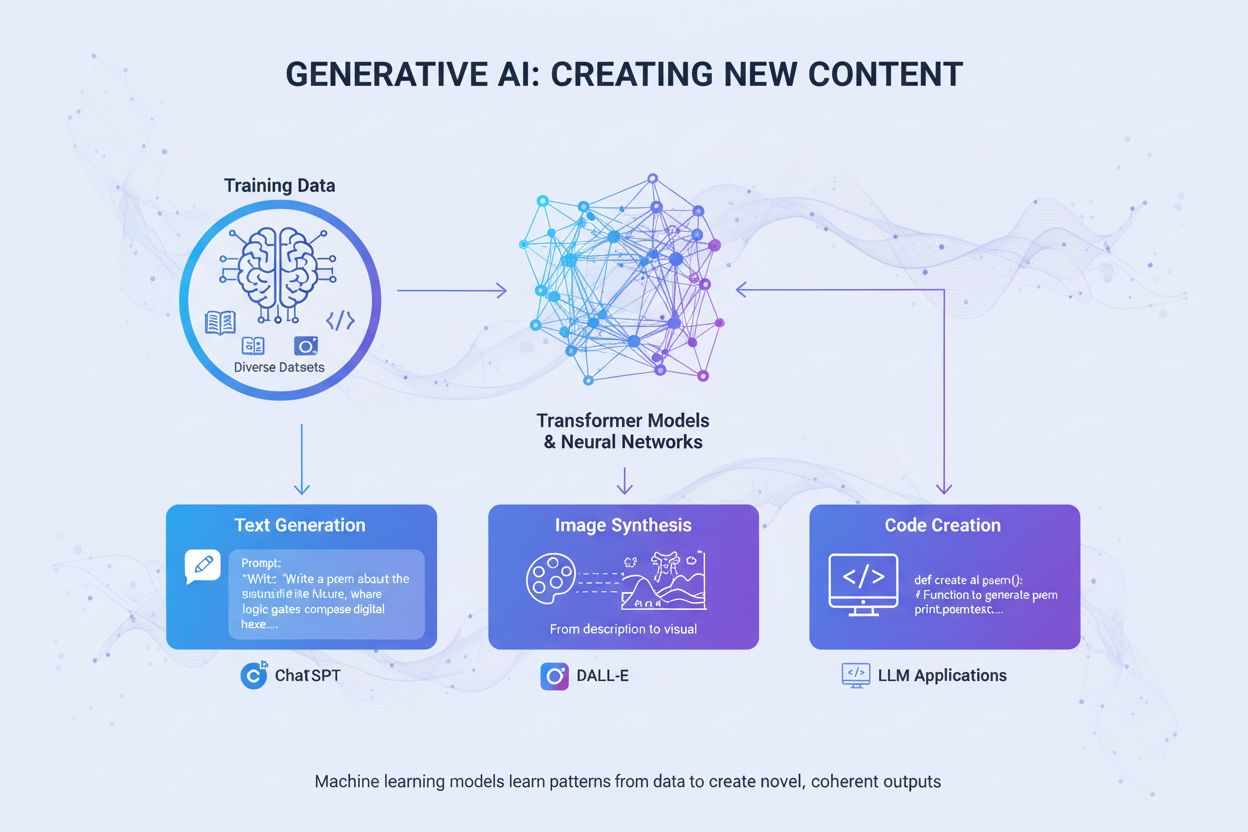

Generative AI is artificial intelligence that creates new, original content such as text, images, videos, code, and audio based on patterns learned from training data. It uses deep learning models like transformers and diffusion models to generate diverse outputs in response to user prompts or requests.

Generative AI is a category of artificial intelligence that creates new, original content based on patterns learned from training data. Unlike traditional AI systems that classify or predict information, generative AI models autonomously produce novel outputs such as text, images, videos, audio, code, and other data types in response to user prompts or requests. These systems leverage sophisticated deep learning models and neural networks to identify complex patterns and relationships within massive datasets, then use that learned knowledge to generate content that resembles but is distinct from the training data. The term “generative” emphasizes the model’s capacity to generate—to create something new rather than simply analyze or categorize existing information. Since the public release of ChatGPT in November 2022, generative AI has become one of the most transformative technologies in computing, fundamentally changing how organizations approach content creation, problem-solving, and decision-making across virtually every industry.

The foundations of generative AI extend back decades, though the technology has evolved dramatically in recent years. Early statistical models in the 20th century laid groundwork for understanding data distributions, but true generative AI emerged with advances in deep learning and neural networks in the 2010s. The introduction of Variational Autoencoders (VAEs) in 2013 marked a significant breakthrough, enabling models to generate realistic variations of data like images and speech. Generative Adversarial Networks (GANs) followed in 2014 and the first diffusion models in 2015, further improving the quality and realism of generated content. The pivotal moment came in 2017 when researchers published “Attention Is All You Need,” introducing the transformer architecture, a breakthrough that fundamentally transformed how generative AI models process and generate sequential data. This innovation enabled the development of Large Language Models (LLMs) like OpenAI’s GPT series, which demonstrated unprecedented capabilities in understanding and generating human language. According to McKinsey research, one-third of organizations were already using generative AI regularly in at least one business function by 2023, with Gartner projecting that more than 80% of enterprises will have deployed generative AI applications or used generative AI APIs by 2026. The rapid acceleration from research curiosity to enterprise necessity represents one of the fastest technology adoption cycles in history.

Generative AI operates through a multi-phase process: training on massive datasets, tuning for specific applications, and then repeated cycles of generation, evaluation, and retuning. During the training phase, practitioners feed deep learning algorithms terabytes of raw, unstructured data (such as internet text, images, or code repositories), and the algorithm performs millions of “fill in the blank” exercises, predicting the next element in a sequence and adjusting itself to minimize prediction errors. This process produces a neural network whose parameters encode the patterns, entities, and relationships discovered in the training data. The result is a foundation model: a large, pre-trained model capable of performing multiple tasks across different domains. Foundation models like GPT-3, GPT-4, and Stable Diffusion serve as the basis for numerous specialized applications. The tuning phase involves fine-tuning the foundation model with labeled data specific to a particular task, or using Reinforcement Learning from Human Feedback (RLHF), where human evaluators score different outputs to guide the model toward greater accuracy and relevance. Developers and users continuously assess outputs and further tune models, sometimes weekly, to improve performance. Another optimization technique is Retrieval Augmented Generation (RAG), which extends the foundation model with access to relevant external sources, so the model can draw on current information and be transparent about where that information came from.
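The “fill in the blank” objective described above can be illustrated with a toy model far simpler than a neural network. The following is a minimal sketch, assuming a bigram count model over a tiny made-up corpus; the names `train_bigram`, `next_token_probs`, and `average_loss` are illustrative and do not come from any real system. A foundation model pursues the same kind of goal (assign high probability to the token that actually comes next) but does so by adjusting billions of parameters with gradient descent rather than by counting.

```python
import math
from collections import Counter, defaultdict

def train_bigram(tokens):
    """Count how often each token follows each other token."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def next_token_probs(counts, prev):
    """Turn the follow-up counts for `prev` into a probability distribution."""
    followers = counts.get(prev, {})
    total = sum(followers.values())
    if total == 0:
        return {}  # `prev` was never seen with a successor
    return {tok: c / total for tok, c in followers.items()}

def average_loss(counts, tokens):
    """Mean negative log-likelihood of each observed next token (cross-entropy)."""
    losses = []
    for prev, nxt in zip(tokens, tokens[1:]):
        p = next_token_probs(counts, prev).get(nxt, 0.0)
        losses.append(-math.log(p) if p > 0 else float("inf"))
    return sum(losses) / len(losses)

# Hypothetical training text, split into word-level tokens.
corpus = "the cat sat on the mat and the cat slept".split()
model = train_bigram(corpus)
probs = next_token_probs(model, "the")   # distribution over what follows "the"
loss = average_loss(model, corpus)       # lower means better predictions
```

In this corpus "the" is followed by "cat" twice and "mat" once, so the model assigns "cat" the higher probability. The loss value is the quantity a real training run would try to push down.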

| Model Type | Training Approach | Generation Speed | Output Quality | Diversity | Best Use Cases |
|---|---|---|---|---|---|
| Diffusion Models | Iterative noise removal from random data | Slow (multiple iterations) | Very High (photorealistic) | High | Image generation, high-fidelity synthesis |
| Generative Adversarial Networks (GANs) | Generator vs. Discriminator competition | Fast | High | Lower | Domain-specific generation, style transfer |
| Variational Autoencoders (VAEs) | Encoder-decoder with latent space | Moderate | Moderate | Moderate | Data compression, anomaly detection |
| Transformer Models | Self-attention on sequential data | Moderate to Fast | Very High (text/code) | Very High | Language generation, code synthesis, LLMs |
| Hybrid Approaches | Combining multiple architectures | Variable | Very High | Very High | Multimodal generation, complex tasks |

The transformer architecture stands as the most influential technology enabling modern generative AI. Transformers use self-attention mechanisms to determine which parts of input data are most important when processing each element, allowing the model to capture long-range dependencies and context. Positional encoding represents the order of input elements, enabling transformers to understand sequence structure without sequential processing. This parallel processing capability dramatically accelerates training compared to earlier recurrent neural networks (RNNs). The transformer’s encoder-decoder structure, combined with multiple layers of attention heads, enables the model to simultaneously consider various aspects of data and refine contextual embeddings at each layer. These embeddings capture everything from grammar and syntax to complex semantic meanings. Large Language Models (LLMs) like ChatGPT, Claude, and Gemini are built on transformer architectures and contain billions of parameters—encoded representations of learned patterns. The scale of these models, combined with training on internet-scale data, enables them to perform diverse tasks from translation and summarization to creative writing and code generation. Diffusion models, another critical architecture, work by first adding noise to training data until it becomes random, then training the algorithm to iteratively remove that noise to reveal desired outputs. While diffusion models require more training time than VAEs or GANs, they offer superior control over output quality, particularly for high-fidelity image generation tools like DALL-E and Stable Diffusion.
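The self-attention mechanism described above can be sketched in a few lines. This is a minimal illustration of scaled dot-product attention in plain Python, assuming the queries, keys, and values are the raw token embeddings themselves; a real transformer first passes the embeddings through learned projection matrices and runs many attention heads in parallel on accelerator hardware. The function names and the toy embeddings are assumptions made for this example.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention: each position gets a weighted mix of all values."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        mixed = [sum(w * v[j] for w, v in zip(weights, values))
                 for j in range(len(values[0]))]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy token embeddings of dimension 2 (hypothetical values).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(x, x, x)
```

Each row of `weights` sums to 1 and says how much one token attends to every token in the sequence, including itself. Every row is computed independently of the others, which is what allows the whole sequence to be processed in parallel.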

The business case for generative AI has proven compelling, with enterprises experiencing measurable productivity gains and cost reductions. According to OpenAI’s 2025 enterprise AI report, users report saving 40–60 minutes per day through generative AI applications, translating to significant productivity improvements across organizations. The generative AI market was valued at USD 16.87 billion in 2024 and is projected to reach USD 109.37 billion by 2030, growing at a CAGR of 37.6%—one of the fastest growth rates in enterprise software history. Enterprise spending on generative AI reached $37 billion in 2025, up from $11.5 billion in 2024, representing a 3.2x year-over-year increase. This acceleration reflects growing confidence in ROI, with AI buyers converting at 47% compared to SaaS’s traditional 25% conversion rate, indicating that generative AI delivers sufficient immediate value to justify rapid adoption. Organizations are deploying generative AI across multiple functions: customer service teams use AI chatbots for personalized responses and first-contact resolution; marketing departments leverage content generation for blogs, emails, and social media; software development teams employ code generation tools to accelerate development cycles; and research teams use generative models to analyze complex datasets and propose novel solutions. Financial services firms use generative AI for fraud detection and personalized financial advice, while healthcare organizations apply it to drug discovery and medical imaging analysis. The technology’s versatility across industries demonstrates its transformative potential for business operations.

Generative AI’s applications span virtually every sector and function. In text generation, models produce coherent, contextually relevant content including documentation, marketing copy, blog articles, research papers, and creative writing. They excel at automating tedious writing tasks like document summarization and metadata generation, freeing human writers for higher-value creative work. Image generation tools like DALL-E, Midjourney, and Stable Diffusion create photorealistic images, original artwork, and perform style transfer and image editing tasks. Video generation capabilities enable animation creation from text prompts and special effects application more quickly than traditional methods. Audio and music generation synthesizes natural-sounding speech for chatbots and digital assistants, creates audiobook narration, and generates original music mimicking professional compositions. Code generation enables developers to write original code, autocomplete snippets, translate between programming languages, and debug applications. In healthcare, generative AI accelerates drug discovery by generating novel protein sequences and molecular structures with desired properties. Synthetic data generation creates labeled training data for machine learning models, particularly valuable when real data is restricted, unavailable, or insufficient for edge cases. In automotive, generative AI creates 3D simulations for vehicle development and generates synthetic data for autonomous vehicle training. Media and entertainment companies use generative AI to create animations, scripts, game environments, and personalized content recommendations. Energy companies apply generative models to grid management, operational safety optimization, and energy production forecasting. The breadth of applications demonstrates generative AI’s role as a foundational technology reshaping how organizations create, analyze, and innovate.

Despite remarkable capabilities, generative AI presents significant challenges that organizations must address. AI hallucinations—plausible-sounding but factually incorrect outputs—occur because generative models predict the next element based on patterns rather than verifying factual accuracy. A lawyer famously used ChatGPT for legal research and received entirely fictional case citations, complete with quotes and attributions. Bias and fairness issues arise when training data contains societal biases, leading models to generate biased, unfair, or offensive content. Inconsistent outputs result from the probabilistic nature of generative models, where identical inputs may produce different outputs—problematic for applications requiring consistency like customer service chatbots. Lack of explainability makes it difficult to understand how models arrive at specific outputs; even engineers struggle to explain the decision-making processes of these “black box” models. Security and privacy threats emerge when proprietary data is used for model training or when models generate content exposing intellectual property or violating others’ IP protections. Deepfakes—AI-generated or manipulated images, video, or audio designed to deceive—represent one of the most concerning applications, with cybercriminals deploying deepfakes in voice phishing scams and financial fraud. Computational costs remain substantial, with training large foundation models requiring thousands of GPUs and weeks of processing costing millions of dollars. Organizations mitigate these risks through guardrails restricting models to trusted data sources, continuous evaluation and tuning to reduce hallucinations, diverse training data to minimize bias, prompt engineering to achieve consistent outputs, and security protocols protecting proprietary information. Transparency about AI use and human oversight of critical decisions remain essential best practices.
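The inconsistency described above comes from sampling: the model produces a probability distribution over next tokens and then draws from it. The sketch below is a toy illustration, assuming a hypothetical three-token vocabulary with made-up scores; `sample_token` is not part of any real library. It shows that greedy decoding (temperature 0) or a fixed random seed makes this toy sampler repeatable. Hosted models may not expose a seed or guarantee full determinism, so treat this as an explanation of the mechanism rather than a recipe.

```python
import math
import random

def sample_token(logits, temperature, rng):
    """Pick a token from `logits` (token -> score); temperature 0 means greedy."""
    if temperature == 0:
        return max(logits, key=logits.get)  # always the highest-scoring token
    # Dividing by temperature flattens (T > 1) or sharpens (T < 1) the distribution.
    scaled = {tok: score / temperature for tok, score in logits.items()}
    m = max(scaled.values())
    weights = [math.exp(s - m) for s in scaled.values()]
    return rng.choices(list(scaled.keys()), weights=weights, k=1)[0]

# Hypothetical scores for the next word of a customer-service reply.
logits = {"refund": 2.0, "replacement": 1.5, "apology": 0.5}

# Greedy decoding: identical input always yields the identical output.
greedy = [sample_token(logits, 0, random.Random()) for _ in range(5)]

# Sampling with a fixed seed: two runs draw the same sequence.
rng_a, rng_b = random.Random(42), random.Random(42)
seeded_a = [sample_token(logits, 1.0, rng_a) for _ in range(5)]
seeded_b = [sample_token(logits, 1.0, rng_b) for _ in range(5)]
```

Without a fixed seed, the temperature 1.0 runs would generally differ from call to call, which is the behavior that makes unconstrained sampling awkward for applications that need the same answer every time.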

As generative AI systems become primary information sources for millions of users, organizations must understand how their brands, products, and content appear in AI-generated responses. AI visibility monitoring involves systematically tracking how major generative AI platforms—including ChatGPT, Perplexity, Google AI Overviews, and Claude—describe brands, products, and competitors. This monitoring is critical because AI systems often cite sources and reference information without traditional search engine visibility metrics. Brands that don’t appear in AI responses miss opportunities for visibility and influence in the AI-driven search landscape. Tools like AmICited enable organizations to track brand mentions, monitor citation accuracy, identify which domains and URLs are referenced in AI responses, and understand how AI systems represent their competitive positioning. This data helps organizations optimize their content for AI citation, identify misinformation or inaccurate representations, and maintain competitive visibility as AI becomes the primary interface between users and information. The practice of GEO (Generative Engine Optimization) focuses on optimizing content specifically for AI citation and visibility, complementing traditional SEO strategies. Organizations that proactively monitor and optimize their AI visibility gain competitive advantages in the emerging AI-driven information ecosystem.
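The kind of tracking described above can be pictured with a small sketch. It assumes the AI responses have already been collected as plain text by some other means, and it is not the API of AmICited or any other product; the function name `summarize_responses`, the brand "Acme Widgets", and the sample responses are all hypothetical.

```python
import re
from collections import Counter
from urllib.parse import urlparse

# Rough URL matcher for plain-text responses (an assumption, not a full URL grammar).
URL_PATTERN = re.compile(r"https?://[^\s)\]>]+")

def summarize_responses(responses, brand):
    """Count responses that mention `brand` and tally the domains they cite."""
    brand_pattern = re.compile(rf"\b{re.escape(brand)}\b", flags=re.IGNORECASE)
    mentions = 0
    domains = Counter()
    for text in responses:
        if brand_pattern.search(text):
            mentions += 1
        for url in URL_PATTERN.findall(text):
            # Strip sentence punctuation that may trail the URL.
            domains[urlparse(url.rstrip(".,;")).netloc.lower()] += 1
    return mentions, domains

# Hypothetical responses gathered from different AI platforms.
responses = [
    "Acme Widgets is often recommended (source: https://example.com/reviews).",
    "Popular options include Globex; see https://example.org/compare.",
    "According to https://example.com/guide, acme widgets leads on price.",
]
mentions, domains = summarize_responses(responses, "Acme Widgets")
```

A production system would also need to check whether each mention is accurate and how the brand is described relative to competitors, which simple string matching cannot do.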

The generative AI landscape continues evolving rapidly, with several key trends shaping the future. Multimodal AI systems that seamlessly integrate text, images, video, and audio are becoming increasingly sophisticated, enabling more complex and nuanced content generation. Agentic AI—autonomous AI systems that can perform tasks and accomplish goals without human intervention—represents the next evolution beyond generative AI, with AI agents using generated content to interact with tools and make decisions. Smaller, more efficient models are emerging as alternatives to massive foundation models, enabling organizations to deploy generative AI with lower computational costs and faster inference speeds. Retrieval Augmented Generation (RAG) continues advancing, allowing models to access current information and external knowledge sources, addressing hallucination and accuracy concerns. Regulatory frameworks are developing globally, with governments establishing guidelines for responsible AI development and deployment. Enterprise customization through fine-tuning and domain-specific models is accelerating, as organizations seek to adapt generative AI to their unique business contexts. Ethical AI practices are becoming competitive differentiators, with organizations prioritizing transparency, fairness, and responsible deployment. The convergence of these trends suggests that generative AI will become increasingly integrated into business operations, more efficient and accessible to organizations of all sizes, and subject to stronger governance and ethical standards. Organizations that invest in understanding generative AI, monitoring their AI visibility, and implementing responsible practices will be best positioned to capture value from this transformative technology while managing associated risks.

Generative AI creates new content by learning the distribution of data and generating novel outputs, while discriminative AI focuses on classification and prediction tasks by learning decision boundaries between categories. Generative AI models like GPT-3 and DALL-E produce creative content, whereas discriminative models are better suited for tasks like image recognition or spam detection. Both approaches have distinct applications depending on whether the goal is content creation or data classification.

Transformer models use self-attention mechanisms and positional encoding to process sequential data like text without requiring sequential processing. This architecture allows transformers to capture long-range dependencies between words and understand context more effectively than previous models. The transformer's ability to process entire sequences simultaneously and learn complex relationships has made it the foundation for most modern generative AI systems, including ChatGPT and GPT-4.
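Positional encoding can be made concrete with the sinusoidal scheme from the original transformer paper, where even dimensions use a sine and odd dimensions a cosine of the position at geometrically spaced frequencies. The sketch below is one way to compute it in plain Python; the function name and the sizes chosen are assumptions for illustration, and many current models use learned or rotary position embeddings instead.

```python
import math

def positional_encoding(seq_len, d_model):
    """Return a seq_len x d_model table of sinusoidal position encodings."""
    table = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            # Dimensions 2k and 2k+1 share one frequency: 1 / 10000^(2k / d_model).
            angle = pos / (10000 ** ((2 * (i // 2)) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table

# Encodings for a 4-token sequence with 6-dimensional embeddings (toy sizes).
pe = positional_encoding(seq_len=4, d_model=6)
```

Each row is added to the embedding of the token at that position, so two occurrences of the same word at different positions reach the attention layers as different vectors. That is how order information survives even though all positions are processed at once.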

Foundation models are large-scale deep learning models pre-trained on vast amounts of unlabeled data that can perform multiple tasks across different domains. Examples include GPT-3, GPT-4, and Stable Diffusion. These models serve as the base for various generative AI applications and can be fine-tuned for specific use cases, making them highly versatile and cost-effective compared to training models from scratch.

As generative AI systems like ChatGPT, Perplexity, and Google AI Overviews become primary information sources, brands need to track how they appear in AI-generated responses. Monitoring AI visibility helps organizations understand brand perception, ensure accurate information representation, and maintain competitive positioning in the AI-driven search landscape. Tools like AmICited enable brands to track mentions and citations across multiple AI platforms.

Generative AI systems can produce 'hallucinations'—plausible-sounding but factually incorrect outputs—due to their pattern-based learning approach. These models may also reflect biases present in training data, generate inconsistent outputs for identical inputs, and lack transparency in their decision-making processes. Addressing these challenges requires diverse training data, continuous evaluation, and implementation of guardrails to restrict models to trusted data sources.

Diffusion models generate content by iteratively removing noise from random data, offering high-quality outputs but slower generation speeds. GANs use two competing neural networks (a generator and a discriminator) to produce realistic content quickly but with lower diversity. Diffusion models are currently preferred for high-fidelity image generation, while GANs remain effective for domain-specific applications that need a balance of speed and quality.
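The noise-adding half of a diffusion model is simple enough to sketch directly. The example below assumes the common formulation in which a noisy sample is `sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * noise`, with a linear noise schedule over 1000 steps; the schedule values, function names, and the four-number "image" are assumptions for illustration. The learned part, a network trained to predict and remove the noise step by step, is not shown.

```python
import math
import random

def alpha_bar_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative product of (1 - beta_t) for a linear beta schedule (num_steps >= 2)."""
    alpha_bars, running = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(x0, alpha_bar, rng):
    """Sample x_t = sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * noise."""
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * n
          for x, n in zip(x0, noise)]
    return xt, noise

rng = random.Random(0)
alpha_bars = alpha_bar_schedule(num_steps=1000)
x0 = [1.0, -1.0, 0.5, 0.0]                       # stand-in for pixel values
x_early, _ = add_noise(x0, alpha_bars[0], rng)   # almost identical to x0
x_late, _ = add_noise(x0, alpha_bars[-1], rng)   # almost pure noise
```

Generation runs this process in reverse: starting from pure noise, the trained network removes a little noise at each of many steps, which is why diffusion sampling is slower than a single forward pass through a GAN generator.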

The generative AI market was valued at USD 16.87 billion in 2024 and is projected to reach USD 109.37 billion by 2030, growing at a compound annual growth rate (CAGR) of 37.6% from 2025 to 2030. Enterprise spending on generative AI reached $37 billion in 2025, representing a 3.2x year-over-year increase from $11.5 billion in 2024, demonstrating rapid adoption across industries.
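The growth figures above can be cross-checked with a few lines of arithmetic. This sketch uses only the numbers quoted in the text; the helper `cagr` and the variable names are illustrative. Note that the quoted 37.6% rate covers 2025 to 2030, so the 2025 starting value computed here is implied by the arithmetic and is not a figure stated in the source.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1

market_2024 = 16.87    # USD billions, from the text
market_2030 = 109.37   # USD billions, from the text
stated_cagr = 0.376    # quoted for 2025-2030

# Growth rate if measured across the full six years from 2024 to 2030.
six_year_cagr = cagr(market_2024, market_2030, years=6)

# 2025 market size that would be consistent with 37.6% over five years.
implied_2025_base = market_2030 / (1 + stated_cagr) ** 5

# Enterprise spending multiple, 2025 versus 2024 (USD billions).
spend_multiple = 37 / 11.5
```

Measured from 2024 the annual rate comes out slightly below the quoted 37.6%, which is consistent with the stated rate being calculated from a 2025 base. The spending multiple rounds to the 3.2x given in the text.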

Responsible generative AI implementation requires starting with internal applications to test outcomes in controlled environments, ensuring transparency by clearly communicating when AI is being used, implementing security guardrails to prevent unauthorized data access, and conducting extensive testing across diverse scenarios. Organizations should also establish clear governance frameworks, monitor outputs for bias and accuracy, and maintain human oversight of critical decisions.
